Weixuan Xiong

MGADN: A Multi-task Graph Anomaly Detection Network for Multivariate Time Series

Nov 27, 2022

Abstract: Anomaly detection in time series, especially multivariate time series (time series with multiple sensors), has been studied for several years. Although existing methods have made great progress, several challenging problems remain. First, existing methods, including neural networks, concentrate only on relationships along the time dimension; that is, they model only how past data influences future data. However, one sensor can affect another: for example, wind speed may cause a decrease in temperature. Second, there are two categories of models for time series anomaly detection: prediction models and reconstruction models. Prediction models are adept at learning temporal representations but fall short when faced with sparse anomalies; reconstruction models have the opposite strengths and weaknesses. Therefore, our main topic is how to efficiently capture relationships across both timestamps and sensors. Our approach uses GAT, which originates from graph neural networks, to capture connections between sensors, and LSTM to capture temporal relationships. It is also designed with two heads, computing both a prediction loss and a reconstruction loss via a VAE (Variational Auto-Encoder). To take advantage of both kinds of model, a multi-task optimization algorithm is used.

Tackling the Imbalance for GNNs

Oct 17, 2021

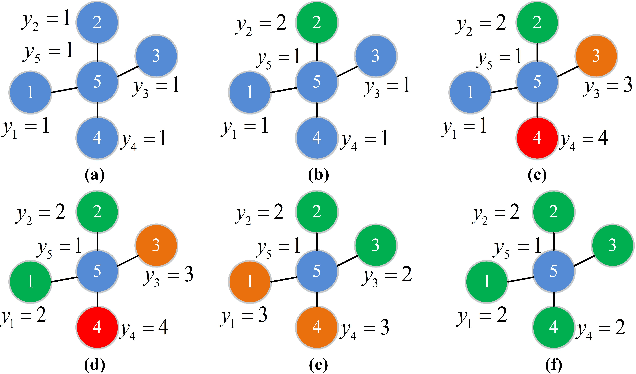

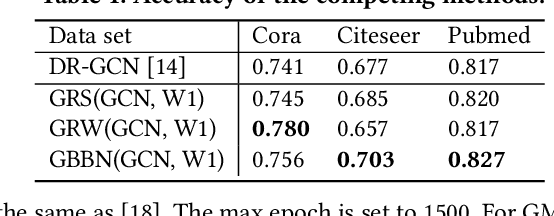

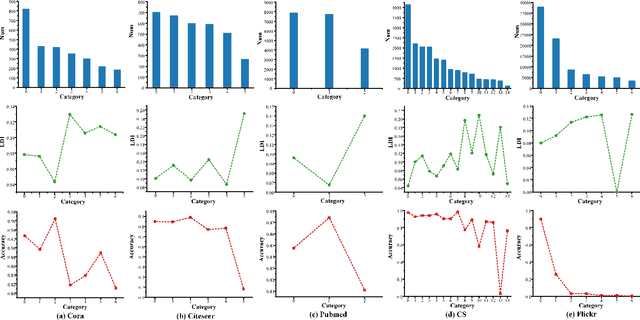

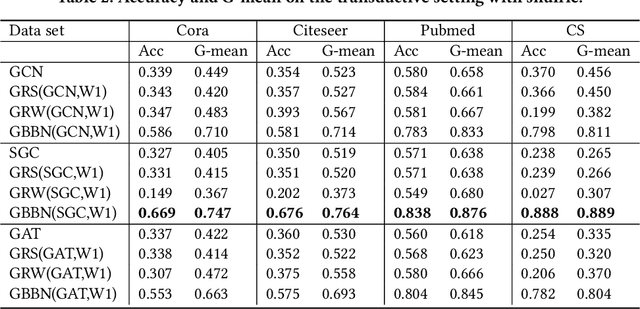

Abstract: Unlike deep neural networks for non-graph data classification, graph neural networks (GNNs) leverage the information exchange between nodes (or samples) when representing nodes. The category distribution is imbalanced, or even highly skewed, on nearly all existing benchmark GNN data sets. An imbalanced distribution causes misclassification of nodes in the minority classes and can even reduce classification performance on the entire data set. This study explores the effects of the imbalance problem on the performance of GNNs and proposes new methodologies to solve it. First, a node-level index, the label difference index ($LDI$), is defined to quantitatively analyze the relationship between imbalance and misclassification. The fewer samples a class has, the higher its average $LDI$; the higher the $LDI$ of a sample, the more likely the sample is to be misclassified. We define a new loss and propose four new methods based on $LDI$. Experimental results indicate that three of our four proposed methods achieve better classification accuracy in both transductive and inductive settings. The $LDI$ can be applied to other GNNs.
