Washington L. S. Ramos

Leveraging Synthetic Data to Learn Video Stabilization Under Adverse Conditions

Aug 26, 2022

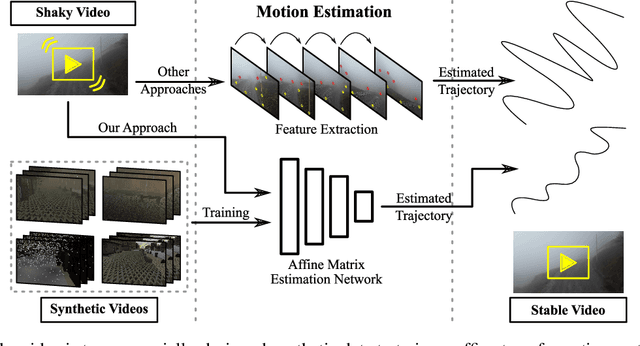

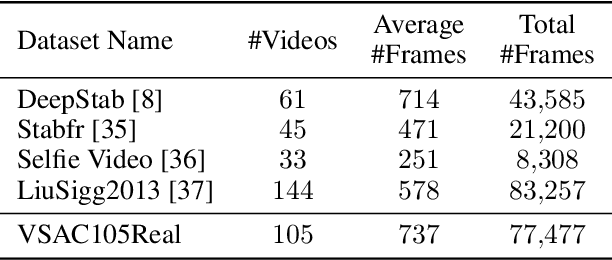

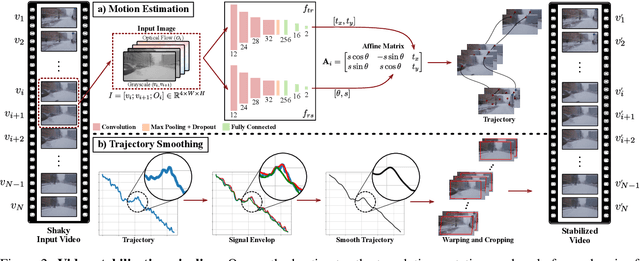

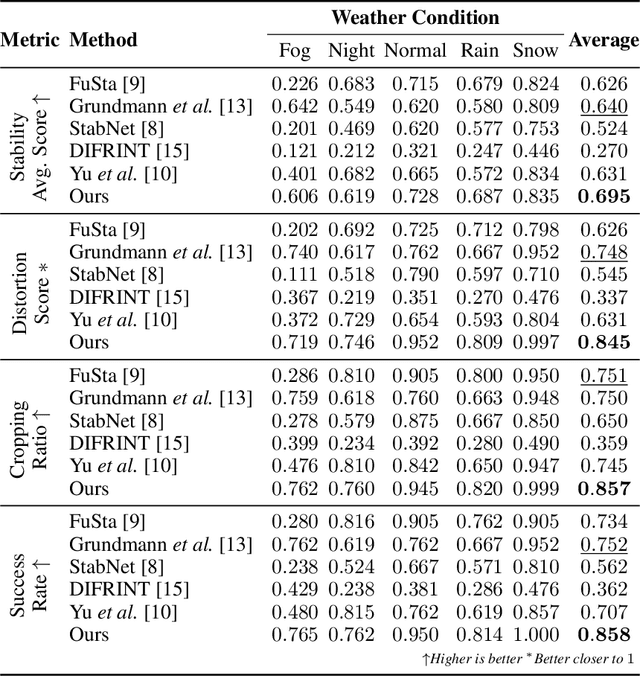

Abstract:Video stabilization plays a central role to improve videos quality. However, despite the substantial progress made by these methods, they were, mainly, tested under standard weather and lighting conditions, and may perform poorly under adverse conditions. In this paper, we propose a synthetic-aware adverse weather robust algorithm for video stabilization that does not require real data and can be trained only on synthetic data. We also present Silver, a novel rendering engine to generate the required training data with an automatic ground-truth extraction procedure. Our approach uses our specially generated synthetic data for training an affine transformation matrix estimator avoiding the feature extraction issues faced by current methods. Additionally, since no video stabilization datasets under adverse conditions are available, we propose the novel VSAC105Real dataset for evaluation. We compare our method to five state-of-the-art video stabilization algorithms using two benchmarks. Our results show that current approaches perform poorly in at least one weather condition, and that, even training in a small dataset with synthetic data only, we achieve the best performance in terms of stability average score, distortion score, success rate, and average cropping ratio when considering all weather conditions. Hence, our video stabilization model generalizes well on real-world videos and does not require large-scale synthetic training data to converge.

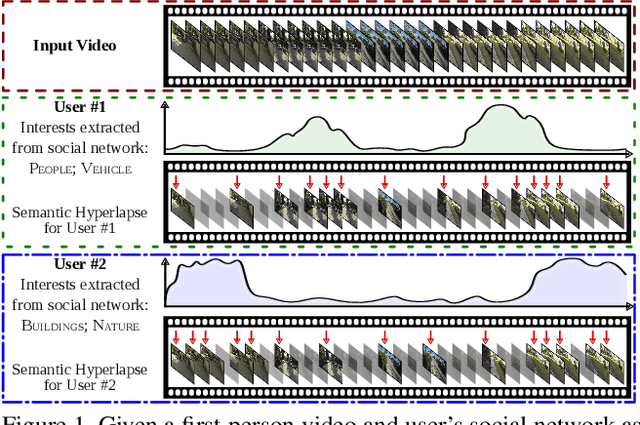

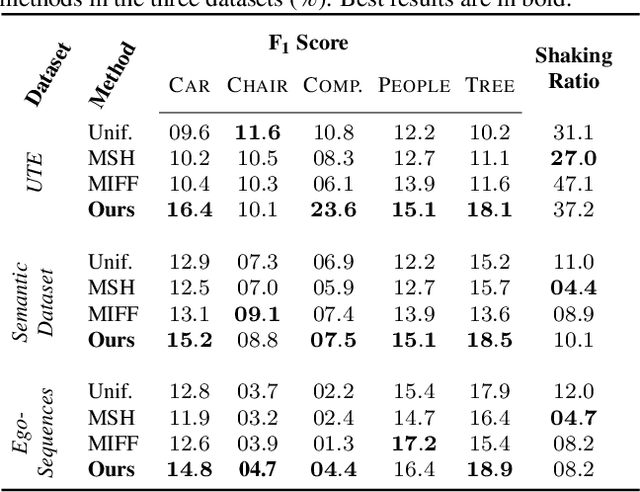

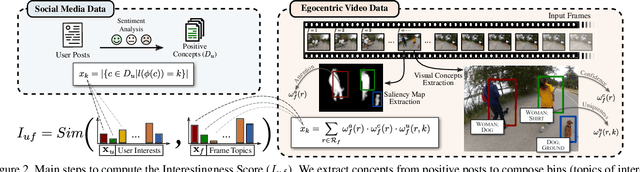

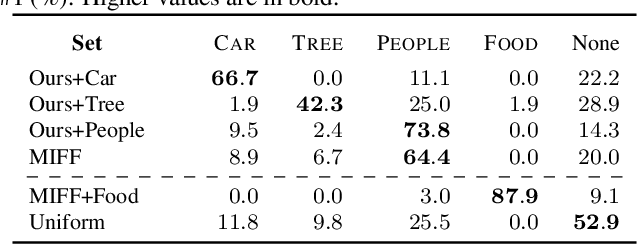

Personalizing Fast-Forward Videos Based on Visual and Textual Features from Social Network

Dec 29, 2019

Abstract:The growth of Social Networks has fueled the habit of people logging their day-to-day activities, and long First-Person Videos (FPVs) are one of the main tools in this new habit. Semantic-aware fast-forward methods are able to decrease the watch time and select meaningful moments, which is key to increase the chances of these videos being watched. However, these methods can not handle semantics in terms of personalization. In this work, we present a new approach to automatically creating personalized fast-forward videos for FPVs. Our approach explores the availability of text-centric data from the user's social networks such as status updates to infer her/his topics of interest and assigns scores to the input frames according to her/his preferences. Extensive experiments are conducted on three different datasets with simulated and real-world users as input, achieving an average F1 score of up to 12.8 percentage points higher than the best competitors. We also present a user study to demonstrate the effectiveness of our method.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge