Walid Maalej

How Do Programming Students Use Generative AI?

Jan 17, 2025Abstract:Programming students have a widespread access to powerful Generative AI tools like ChatGPT. While this can help understand the learning material and assist with exercises, educators are voicing more and more concerns about an over-reliance on generated outputs and lack of critical thinking skills. It is thus important to understand how students actually use generative AI and what impact this could have on their learning behavior. To this end, we conducted a study including an exploratory experiment with 37 programming students, giving them monitored access to ChatGPT while solving a code understanding and improving exercise. While only 23 of the students actually opted to use the chatbot, the majority of those eventually prompted it to simply generate a full solution. We observed two prevalent usage strategies: to seek knowledge about general concepts and to directly generate solutions. Instead of using the bot to comprehend the code and their own mistakes, students often got trapped in a vicious cycle of submitting wrong generated code and then asking the bot for a fix. Those who self-reported using generative AI regularly were more likely to prompt the bot to generate a solution. Our findings indicate that concerns about potential decrease in programmers' agency and productivity with Generative AI are justified. We discuss how researchers and educators can respond to the potential risk of students uncritically over-relying on generative AI. We also discuss potential modifications to our study design for large-scale replications.

Getting Inspiration for Feature Elicitation: App Store- vs. LLM-based Approach

Aug 30, 2024Abstract:Over the past decade, app store (AppStore)-inspired requirements elicitation has proven to be highly beneficial. Developers often explore competitors' apps to gather inspiration for new features. With the advance of Generative AI, recent studies have demonstrated the potential of large language model (LLM)-inspired requirements elicitation. LLMs can assist in this process by providing inspiration for new feature ideas. While both approaches are gaining popularity in practice, there is a lack of insight into their differences. We report on a comparative study between AppStore- and LLM-based approaches for refining features into sub-features. By manually analyzing 1,200 sub-features recommended from both approaches, we identified their benefits, challenges, and key differences. While both approaches recommend highly relevant sub-features with clear descriptions, LLMs seem more powerful particularly concerning novel unseen app scopes. Moreover, some recommended features are imaginary with unclear feasibility, which suggests the importance of a human-analyst in the elicitation loop.

Can Developers Prompt? A Controlled Experiment for Code Documentation Generation

Aug 01, 2024Abstract:Large language models (LLMs) bear great potential for automating tedious development tasks such as creating and maintaining code documentation. However, it is unclear to what extent developers can effectively prompt LLMs to create concise and useful documentation. We report on a controlled experiment with 20 professionals and 30 computer science students tasked with code documentation generation for two Python functions. The experimental group freely entered ad-hoc prompts in a ChatGPT-like extension of Visual Studio Code, while the control group executed a predefined few-shot prompt. Our results reveal that professionals and students were unaware of or unable to apply prompt engineering techniques. Especially students perceived the documentation produced from ad-hoc prompts as significantly less readable, less concise, and less helpful than documentation from prepared prompts. Some professionals produced higher quality documentation by just including the keyword Docstring in their ad-hoc prompts. While students desired more support in formulating prompts, professionals appreciated the flexibility of ad-hoc prompting. Participants in both groups rarely assessed the output as perfect. Instead, they understood the tools as support to iteratively refine the documentation. Further research is needed to understand which prompting skills and preferences developers have and which support they need for certain tasks.

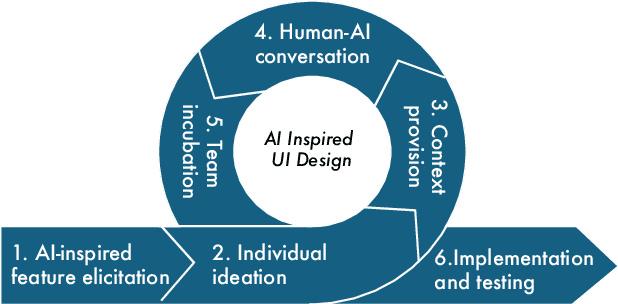

On AI-Inspired UI-Design

Jun 19, 2024

Abstract:Graphical User Interface (or simply UI) is a primary mean of interaction between users and their device. In this paper, we discuss three major complementary approaches on how to use Artificial Intelligence (AI) to support app designers create better, more diverse, and creative UI of mobile apps. First, designers can prompt a Large Language Model (LLM) like GPT to directly generate and adjust one or multiple UIs. Second, a Vision-Language Model (VLM) enables designers to effectively search a large screenshot dataset, e.g. from apps published in app stores. The third approach is to train a Diffusion Model (DM) specifically designed to generate app UIs as inspirational images. We discuss how AI should be used, in general, to inspire and assist creative app design rather than automating it.

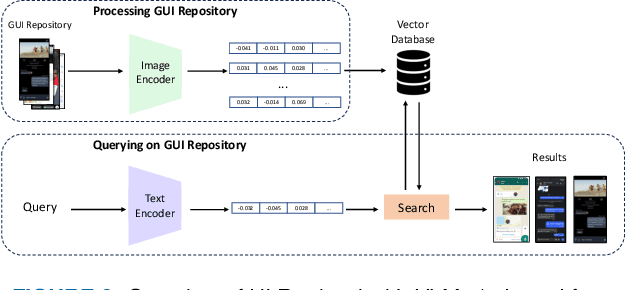

GUing: A Mobile GUI Search Engine using a Vision-Language Model

Apr 30, 2024

Abstract:App developers use the Graphical User Interface (GUI) of other apps as an important source of inspiration to design and improve their own apps. In recent years, research suggested various approaches to retrieve GUI designs that fit a certain text query from screenshot datasets acquired through automated GUI exploration. However, such text-to-GUI retrieval approaches only leverage the textual information of the GUI elements in the screenshots, neglecting visual information such as icons or background images. In addition, the retrieved screenshots are not steered by app developers and often lack important app features, e.g. whose UI pages require user authentication. To overcome these limitations, this paper proposes GUing, a GUI search engine based on a vision-language model called UIClip, which we trained specifically for the app GUI domain. For this, we first collected app introduction images from Google Play, which usually display the most representative screenshots selected and often captioned (i.e. labeled) by app vendors. Then, we developed an automated pipeline to classify, crop, and extract the captions from these images. This finally results in a large dataset which we share with this paper: including 303k app screenshots, out of which 135k have captions. We used this dataset to train a novel vision-language model, which is, to the best of our knowledge, the first of its kind in GUI retrieval. We evaluated our approach on various datasets from related work and in manual experiment. The results demonstrate that our model outperforms previous approaches in text-to-GUI retrieval achieving a Recall@10 of up to 0.69 and a HIT@10 of 0.91. We also explored the performance of UIClip for other GUI tasks including GUI classification and Sketch-to-GUI retrieval with encouraging results.

Tailoring Requirements Engineering for Responsible AI

Feb 21, 2023Abstract:Requirements Engineering (RE) is the discipline for identifying, analyzing, as well as ensuring the implementation and delivery of user, technical, and societal requirements. Recently reported issues concerning the acceptance of Artificial Intelligence (AI) solutions after deployment, e.g. in the medical, automotive, or scientific domains, stress the importance of RE for designing and delivering Responsible AI systems. In this paper, we argue that RE should not only be carefully conducted but also tailored for Responsible AI. We outline related challenges for research and practice.

Beyond Duplicates: Towards Understanding and Predicting Link Types in Issue Tracking Systems

Apr 27, 2022

Abstract:Software projects use Issue Tracking Systems (ITS) like JIRA to track issues and organize the workflows around them. Issues are often inter-connected via different links such as the default JIRA link types Duplicate, Relate, Block, or Subtask. While previous research has mostly focused on analyzing and predicting duplication links, this work aims at understanding the various other link types, their prevalence, and characteristics towards a more reliable link type prediction. For this, we studied 607,208 links connecting 698,790 issues in 15 public JIRA repositories. Besides the default types, the custom types Depend, Incorporate, Split, and Cause were also common. We manually grouped all 75 link types used in the repositories into five general categories: General Relation, Duplication, Composition, Temporal / Causal, and Workflow. Comparing the structures of the corresponding graphs, we observed several trends. For instance, Duplication links tend to represent simpler issue graphs often with two components and Composition links present the highest amount of hierarchical tree structures (97.7%). Surprisingly, General Relation links have a significantly higher transitivity score than Duplication and Temporal / Causal links. Motivated by the differences between the link types and by their popularity, we evaluated the robustness of two state-of-the-art duplicate detection approaches from the literature on the JIRA dataset. We found that current deep-learning approaches confuse between Duplication and other links in almost all repositories. On average, the classification accuracy dropped by 6% for one approach and 12% for the other. Extending the training sets with other link types seems to partly solve this issue. We discuss our findings and their implications for research and practice.

Efficient, Uncertainty-based Moderation of Neural Networks Text Classifiers

Apr 04, 2022

Abstract:To maximize the accuracy and increase the overall acceptance of text classifiers, we propose a framework for the efficient, in-operation moderation of classifiers' output. Our framework focuses on use cases in which F1-scores of modern Neural Networks classifiers (ca.~90%) are still inapplicable in practice. We suggest a semi-automated approach that uses prediction uncertainties to pass unconfident, probably incorrect classifications to human moderators. To minimize the workload, we limit the human moderated data to the point where the accuracy gains saturate and further human effort does not lead to substantial improvements. A series of benchmarking experiments based on three different datasets and three state-of-the-art classifiers show that our framework can improve the classification F1-scores by 5.1 to 11.2% (up to approx.~98 to 99%), while reducing the moderation load up to 73.3% compared to a random moderation.

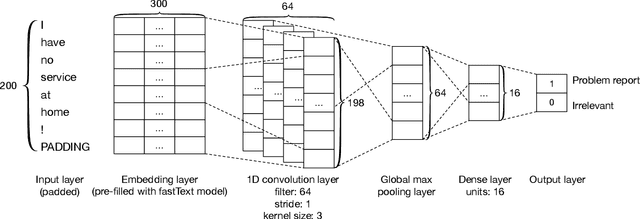

Automatically Matching Bug Reports With Related App Reviews

Feb 14, 2021

Abstract:App stores allow users to give valuable feedback on apps, and developers to find this feedback and use it for the software evolution. However, finding user feedback that matches existing bug reports in issue trackers is challenging as users and developers often use a different language. In this work, we introduce DeepMatcher, an automatic approach using state-of-the-art deep learning methods to match problem reports in app reviews to bug reports in issue trackers. We evaluated DeepMatcher with four open-source apps quantitatively and qualitatively. On average, DeepMatcher achieved a hit ratio of 0.71 and a Mean Average Precision of 0.55. For 91 problem reports, DeepMatcher did not find any matching bug report. When manually analyzing these 91 problem reports and the issue trackers of the studied apps, we found that in 47 cases, users actually described a problem before developers discovered and documented it in the issue tracker. We discuss our findings and different use cases for DeepMatcher.

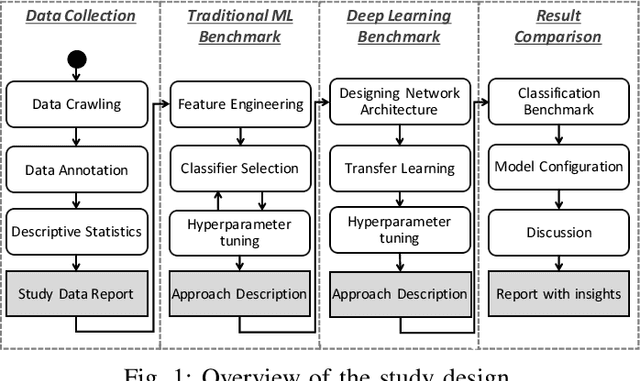

Classifying Multilingual User Feedback using Traditional Machine Learning and Deep Learning

Sep 12, 2019

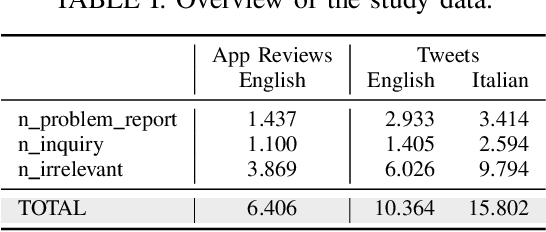

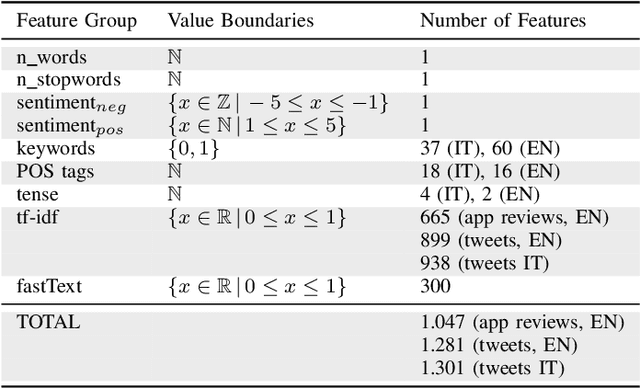

Abstract:With the rise of social media like Twitter and of software distribution platforms like app stores, users got various ways to express their opinion about software products. Popular software vendors get user feedback thousandfold per day. Research has shown that such feedback contains valuable information for software development teams such as problem reports or feature and support inquires. Since the manual analysis of user feedback is cumbersome and hard to manage many researchers and tool vendors suggested to use automated analyses based on traditional supervised machine learning approaches. In this work, we compare the results of traditional machine learning and deep learning in classifying user feedback in English and Italian into problem reports, inquiries, and irrelevant. Our results show that using traditional machine learning, we can still achieve comparable results to deep learning, although we collected thousands of labels.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge