W. Clem Karl

Data and Image Prior Integration for Image Reconstruction Using Consensus Equilibrium

Aug 31, 2020

Abstract: Image domain prior models have been shown to improve the quality of reconstructed images, especially when data are limited. Pre-processing of raw data, through the implicit or explicit inclusion of data domain priors, has separately also shown utility in improving reconstructions. In this work, a principled approach is presented that allows the unified integration of both data and image domain priors for improved image reconstruction. The consensus equilibrium framework is extended to integrate physical sensor models, data models, and image models. To achieve this integration, the conventional image variables used in consensus equilibrium are augmented with variables representing data domain quantities. The overall result produces combined estimates of both the data and the reconstructed image that are consistent with the physical models and prior models being utilized. The prior models used in both domains in this work are created using deep neural networks. The superior quality allowed by incorporating both data and image domain prior models is demonstrated for two applications: limited-angle CT and accelerated MRI. The prior data model in both of these applications is focused on recovering missing data. Experimental results are presented for a 90 degree limited-angle tomography problem from a real checked-baggage CT dataset and a 4x accelerated MRI problem on a simulated dataset. The new framework is very flexible and can be easily applied to other computational imaging problems with imperfect data.
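To make the consensus equilibrium idea concrete, the following is a minimal toy sketch, not the paper's algorithm. It assumes two hypothetical "agents" that are proximal maps of simple quadratic costs (standing in for the sensor, data, and image models) and finds their equilibrium with a Mann iteration on the reflected agent and averaging operators. The names `make_quadratic_agent` and `consensus_equilibrium`, the equal agent weighting, and the parameters `rho` and `n_iter` are illustrative assumptions.

```python
import numpy as np

def make_quadratic_agent(target, weight):
    """Proximal map (unit step) of f(x) = 0.5 * weight * ||x - target||^2.

    Hypothetical stand-in for a sensor, data, or image model agent.
    """
    def agent(v):
        return (v + weight * target) / (1.0 + weight)
    return agent

def consensus_equilibrium(agents, x0, rho=0.5, n_iter=200):
    """Mann iteration on (2G - I)(2F - I) with equally weighted agents."""
    # One copy of the state per agent, stacked along axis 0.
    w = np.stack([x0.copy() for _ in agents])
    for _ in range(n_iter):
        # Reflected agent step: (2F - I) w
        v = 2.0 * np.stack([F(wi) for F, wi in zip(agents, w)]) - w
        # Reflected averaging step: (2G - I) v
        t = 2.0 * v.mean(axis=0, keepdims=True) - v
        # Relaxed (Mann) update toward the fixed point
        w = (1.0 - rho) * w + rho * t
    # At the fixed point every agent output agrees; return their average.
    return np.stack([F(wi) for F, wi in zip(agents, w)]).mean(axis=0)

a = np.array([0.0, 2.0])
b = np.array([4.0, 6.0])
agents = [make_quadratic_agent(a, 1.0), make_quadratic_agent(b, 3.0)]
x_star = consensus_equilibrium(agents, x0=np.zeros(2))

# For proximal agents with equal weighting, the equilibrium coincides with
# the minimizer of the summed costs, here (1*a + 3*b) / 4.
closed_form = (1.0 * a + 3.0 * b) / 4.0
print(x_star, closed_form)
```

In this toy setting the equilibrium has a closed form, which makes the iteration easy to check. With learned agents such as deep networks, no such closed form is generally available and the equilibrium is defined only through the fixed-point conditions.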

Integrating Data and Image Domain Deep Learning for Limited Angle Tomography using Consensus Equilibrium

Aug 31, 2019

Abstract: Computed Tomography (CT) is a non-invasive imaging modality with applications ranging from healthcare to security. It reconstructs cross-sectional images of an object using a collection of projection data acquired at different angles. Conventional methods, such as FBP, require that the projection data be uniformly acquired over the complete angular range. In some applications, it is not possible to acquire such data. Security is one such domain, where non-rotational scanning configurations that violate the complete data assumption are being developed. Conventional methods produce images from such data that are filled with artifacts. The recent success of deep learning (DL) methods has inspired researchers to post-process these artifact-laden images using deep neural networks (DNNs). This approach has seen limited success on real CT problems. Another approach has been to pre-process the incomplete data using DNNs, aiming to avoid the creation of artifacts altogether. Due to imperfections in the learning process, this approach can still leave perceptible residual artifacts. In this work, we aim to combine the power of deep learning in both the data and image domains through a two-step process based on the consensus equilibrium (CE) framework. Specifically, we use conditional generative adversarial networks (cGANs) in both the data and the image domain for enhanced performance and efficient computation, and combine them through a consensus process. We demonstrate the effectiveness of our approach on a real security CT dataset for a challenging 90 degree limited-angle problem. The same framework can be applied to other limited data problems arising in applications such as electron microscopy, non-destructive evaluation, and medical imaging.

Fast Accurate CT Metal Artifact Reduction using Data Domain Deep Learning

Apr 10, 2019

Abstract: Filtered back projection (FBP) is the most widely used method for image reconstruction in X-ray computed tomography (CT) scanners. The presence of hyper-dense materials in a scene, such as metals, can strongly attenuate X-rays, producing severe streaking artifacts in the reconstruction. These metal artifacts can greatly limit subsequent object delineation and information extraction from the images, restricting their diagnostic value. This problem is particularly acute in the security domain, where there is great heterogeneity in the objects that can appear in a scene and highly accurate decisions must be made quickly. The standard practical approaches to reducing metal artifacts in CT imagery are either simplistic non-adaptive interpolation-based projection data completion methods or direct image post-processing methods. These standard approaches have had limited success. Motivated primarily by security applications, we present a new deep-learning-based metal artifact reduction (MAR) approach that tackles the problem in the projection data domain. We treat the projection data corresponding to metal objects as missing data and train an adversarial deep network to complete the missing data in the projection domain. The resulting complete projection data is then used with FBP to reconstruct an image intended to be free of artifacts. This new approach results in an end-to-end MAR algorithm that is computationally efficient, and therefore practical, and that fits well into existing CT workflows, allowing easy adoption in existing scanners. Training deep networks can be challenging, and another contribution of our work is to demonstrate that training data generated using an accurate X-ray simulation can be used to successfully train the deep network when combined with transfer learning using limited real data sets. We demonstrate the effectiveness and potential of our algorithm on simulated and real examples.
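For context, the "non-adaptive interpolation-based projection data completion" baseline that the abstract contrasts against can be sketched as follows. This is not the paper's adversarial network; it is a minimal illustration that treats the metal trace as missing data and fills it by linear interpolation along the detector axis in each view. The function name `interpolate_metal_trace`, the `(views, detectors)` array layout, and the boolean `metal_mask` are assumptions made for the example.

```python
import numpy as np

def interpolate_metal_trace(sinogram, metal_mask):
    """Fill masked sinogram samples by 1-D linear interpolation per view.

    sinogram:   array of shape (n_views, n_detectors)  (assumed layout)
    metal_mask: boolean array, True where a ray passes through metal
    """
    filled = sinogram.astype(float)  # astype returns a new array
    detectors = np.arange(sinogram.shape[1])
    for view in range(sinogram.shape[0]):
        missing = metal_mask[view]
        # Skip views with nothing to fill or nothing to interpolate from.
        if missing.any() and not missing.all():
            filled[view, missing] = np.interp(
                detectors[missing],        # positions to fill
                detectors[~missing],       # positions with trusted data
                sinogram[view, ~missing],  # trusted values
            )
    return filled

# Synthetic check: a linear ramp is recovered exactly by linear interpolation.
clean = np.tile(np.arange(8.0), (3, 1))
mask = np.zeros(clean.shape, dtype=bool)
mask[1, 3:5] = True
corrupted = clean.copy()
corrupted[mask] = 0.0
completed = interpolate_metal_trace(corrupted, mask)
```

The completed sinogram would then be passed to FBP, as in the workflow the abstract describes. A learned completion replaces only the interpolation step, which is why such methods slot into existing pipelines.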

Sensing-Aware Kernel SVM

Mar 13, 2014

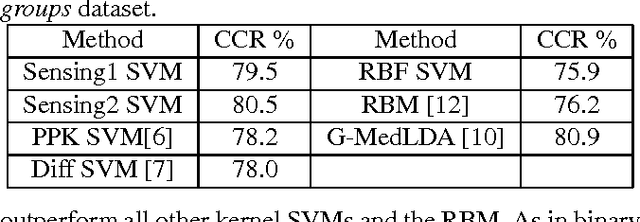

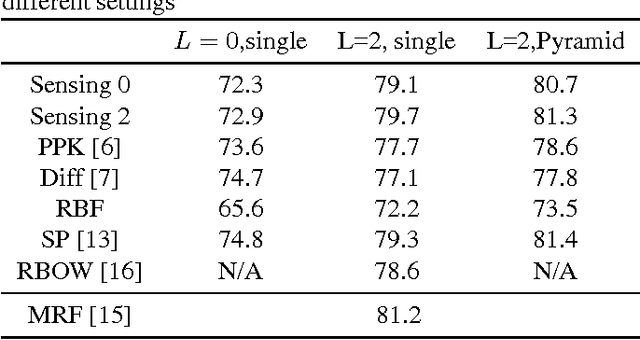

Abstract: We propose a novel approach for designing kernels for support vector machines (SVMs) when the class label is linked to the observation through a latent state and the likelihood function of the observation given the state (the sensing model) is available. We show that the Bayes-optimum decision boundary is a hyperplane under a mapping defined by the likelihood function. Combining this with the maximum margin principle yields kernels for SVMs that leverage knowledge of the sensing model in an optimal way. We derive the optimum kernel for the bag-of-words (BoW) sensing model and demonstrate its superior performance over other kernels in document and image classification tasks. These results indicate that such optimum sensing-aware kernel SVMs can match the performance of rather sophisticated state-of-the-art approaches.
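The hyperplane claim can be made plausible with a short derivation. The sketch below is an illustration under stated assumptions, not the paper's own proof: it assumes binary labels $y \in \{+1, -1\}$, a latent state $s$, and that the label and the observation $x$ are conditionally independent given $s$. The symbols $\phi_x$ and $w$ are notation introduced here.

```latex
% Posterior of the label given the observation, using y -- s -- x:
P(y \mid x) = \frac{1}{p(x)} \int p(x \mid s)\, p(s, y)\, ds

% The Bayes rule decides y = +1 when P(+1 | x) > P(-1 | x), i.e. when
\int p(x \mid s)\, \bigl[\, p(s, +1) - p(s, -1) \,\bigr]\, ds \;>\; 0

% Writing \phi_x(s) = p(x \mid s) and w(s) = p(s, +1) - p(s, -1):
\langle \phi_x, w \rangle \;>\; 0
```

The decision function is therefore linear in the likelihood function $\phi_x(\cdot) = p(x \mid \cdot)$, so the boundary is a hyperplane in the space of likelihood functions, which is the mapping the abstract refers to.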
