Vo Thanh Khang Nguyen

Efficient and Concise Explanations for Object Detection with Gaussian-Class Activation Mapping Explainer

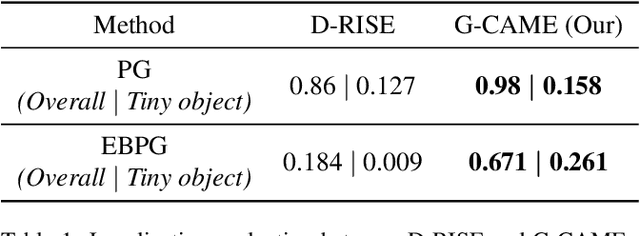

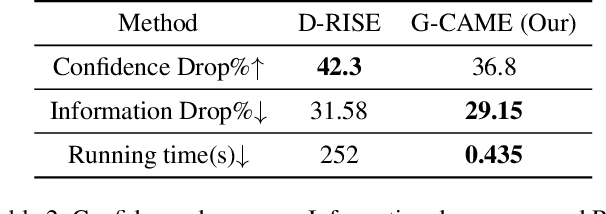

Apr 20, 2024

Abstract: To address the challenges of providing quick and plausible explanations in Explainable AI (XAI) for object detection models, we introduce the Gaussian Class Activation Mapping Explainer (G-CAME). Our method efficiently generates concise saliency maps by utilizing activation maps from selected layers and applying a Gaussian kernel to emphasize critical image regions for the predicted object. Compared with other region-based approaches, G-CAME significantly reduces explanation time to 0.5 seconds without compromising quality. Our evaluation of G-CAME, using Faster-RCNN and YOLOX on the MS-COCO 2017 dataset, demonstrates its ability to offer highly plausible and faithful explanations, especially in reducing the bias in tiny-object detection.

LangXAI: Integrating Large Vision Models for Generating Textual Explanations to Enhance Explainability in Visual Perception Tasks

Feb 19, 2024

Abstract: LangXAI is a framework that integrates Explainable Artificial Intelligence (XAI) with advanced vision models to generate textual explanations for visual recognition tasks. Despite XAI advancements, an understanding gap persists for end-users with limited domain knowledge in artificial intelligence and computer vision. LangXAI addresses this by furnishing text-based explanations for classification, object detection, and semantic segmentation model outputs to end-users. Preliminary results demonstrate LangXAI's enhanced plausibility, with high BERTScore across tasks, fostering a more transparent and reliable AI framework on vision tasks for end-users.

Examining Monitoring System: Detecting Abnormal Behavior In Online Examinations

Feb 19, 2024

Abstract: Cheating in online exams has become a prevalent issue over the past decade, especially during the COVID-19 pandemic. To address this issue of academic dishonesty, our "Exam Monitoring System: Detecting Abnormal Behavior in Online Examinations" is designed to assist proctors in identifying unusual student behavior. Our system demonstrates high accuracy and speed in detecting cheating in real-time scenarios, providing valuable information, and aiding proctors in decision-making. This article outlines our methodology and the effectiveness of our system in mitigating the widespread problem of cheating in online exams.

Enhancing the Fairness and Performance of Edge Cameras with Explainable AI

Jan 18, 2024

Abstract: The rising use of Artificial Intelligence (AI) in human detection on Edge camera systems has led to accurate but complex models that are challenging to interpret and debug. Our research presents a diagnostic method using Explainable AI (XAI) for model debugging, with expert-driven problem identification and solution creation. Validating the method on the Bytetrack model in a real-world office Edge network, we identified the training dataset as the main source of bias and suggested model augmentation as a solution. Our approach helps identify model biases, which is essential for achieving fair and trustworthy models.

A Novel Explainable Artificial Intelligence Model in Image Classification problem

Jul 09, 2023

Abstract: In recent years, artificial intelligence has been applied increasingly widely in many different fields and has had a profound and direct impact on human life. With this comes the need to understand the principles by which a model makes its predictions. Since most current high-precision models are black boxes, neither the AI scientist nor the end-user deeply understands what is going on inside these models. Therefore, many algorithms have been studied for the purpose of explaining AI models, especially for image classification in computer vision, such as LIME, CAM, and GradCAM. However, these algorithms still have limitations, such as LIME's long execution time and the lack of concreteness and clarity in CAM's interpretations. Therefore, in this paper, we propose a new method called Segmentation - Class Activation Mapping (SeCAM) that combines the advantages of the algorithms above while overcoming their disadvantages. We tested this algorithm with various models, including ResNet50, Inception-v3, and VGG16, on the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) dataset. The results are outstanding: the algorithm meets all the requirements for a specific explanation in a remarkably short time.

Towards Better Explanations for Object Detection

Jun 06, 2023

Abstract: Recent advances in Artificial Intelligence (AI) technology have promoted its use in almost every field. The growing complexity of deep neural networks (DNNs) makes it increasingly difficult and important to explain the inner workings and decisions of the network. However, most current techniques for explaining DNNs focus mainly on interpreting classification tasks. This paper proposes a method called D-CLOSE to explain the decisions of any object detection model. To closely track the model's behavior, we use multiple levels of segmentation on the image and a process to combine them. We performed tests on the MS-COCO dataset with the YOLOX model, which show that our method outperforms D-RISE and gives higher-quality, less noisy explanations.

G-CAME: Gaussian-Class Activation Mapping Explainer for Object Detectors

Jun 06, 2023

Abstract: Nowadays, deep neural networks for object detection in images are very prevalent. However, due to the complexity of these networks, users find it hard to understand why these objects are detected by models. We propose the Gaussian Class Activation Mapping Explainer (G-CAME), which generates a saliency map as the explanation for object detection models. G-CAME can be considered a CAM-based method that uses the activation maps of selected layers combined with a Gaussian kernel to highlight the important regions in the image for the predicted box. Compared with other region-based methods, G-CAME overcomes time constraints, as it takes very little time to explain an object. We also evaluated our method qualitatively and quantitatively with YOLOX on the MS-COCO 2017 dataset and provide guidance on applying G-CAME to the two-stage Faster-RCNN model.
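The idea of combining an activation map with a Gaussian kernel can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the choice of the predicted box center as the kernel center, the `sigma` value, and the toy 5x5 map are all assumptions made for illustration. The sketch simply multiplies an activation map elementwise by an unnormalized 2D Gaussian so that values far from the chosen center are suppressed.

```python
import math

def gaussian_kernel(height, width, center, sigma):
    """Return a height x width grid of unnormalized 2D Gaussian weights.

    `center` is a (row, col) position; it is assumed here to be the
    center of the predicted box projected onto the activation map.
    """
    cy, cx = center
    return [
        [math.exp(-((y - cy) ** 2 + (x - cx) ** 2) / (2.0 * sigma ** 2))
         for x in range(width)]
        for y in range(height)
    ]

def gaussian_weighted_map(activation_map, center, sigma):
    """Multiply an activation map elementwise by a Gaussian kernel."""
    height = len(activation_map)
    width = len(activation_map[0])
    kernel = gaussian_kernel(height, width, center, sigma)
    return [
        [activation_map[y][x] * kernel[y][x] for x in range(width)]
        for y in range(height)
    ]

# Toy example (hypothetical data): a uniform 5x5 activation map.
activation = [[1.0] * 5 for _ in range(5)]
saliency = gaussian_weighted_map(activation, center=(2, 2), sigma=1.0)
```

In this toy case the value at the center is left unchanged, while values farther from the center are scaled down, which is the localizing effect the abstract attributes to the Gaussian kernel. A larger `sigma` would keep a wider region around the center.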

Towards Trust of Explainable AI in Thyroid Nodule Diagnosis

Mar 08, 2023

Abstract: The ability to explain the prediction of deep learning models to end-users is an important feature to leverage the power of artificial intelligence (AI) for the medical decision-making process, which is usually considered non-transparent and challenging to comprehend. In this paper, we apply state-of-the-art eXplainable artificial intelligence (XAI) methods to explain the prediction of the black-box AI models in the thyroid nodule diagnosis application. We propose new statistic-based XAI methods, namely Kernel Density Estimation and Density map, to explain the case of no nodule detected. The performance of the XAI methods is compared qualitatively and quantitatively, and the comparison serves as feedback to improve the data quality and the model performance. Finally, we conduct a survey to assess doctors' and patients' trust in XAI explanations of the model's decisions on thyroid nodule images.
