Vladislav Skripniuk

Responsible Disclosure of Generative Models Using Scalable Fingerprinting

Dec 16, 2020

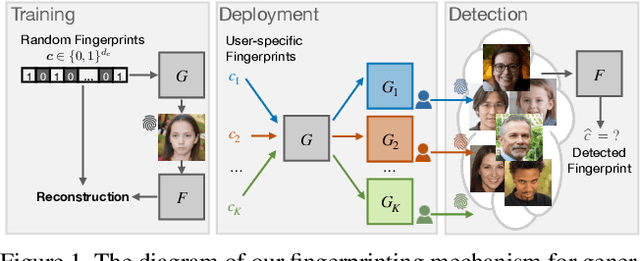

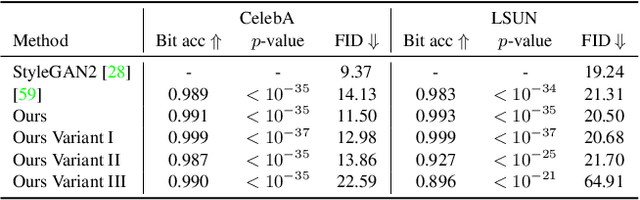

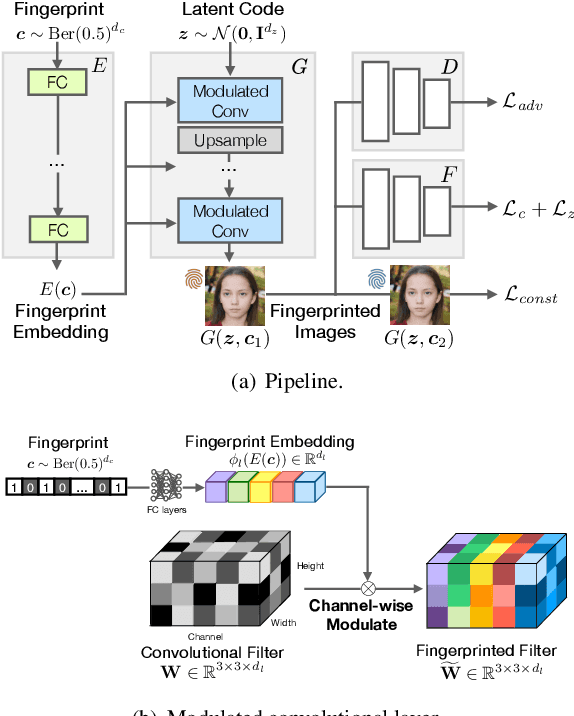

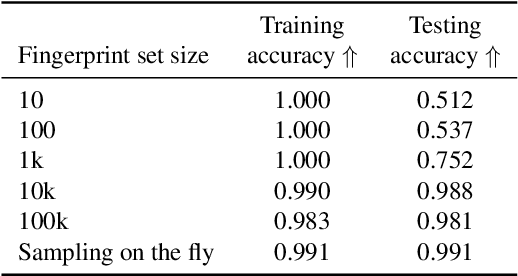

Abstract: Over the past five years, deep generative models have achieved a qualitatively new level of performance. Generated data has become difficult, if not impossible, to distinguish from real data. While there are plenty of use cases that benefit from this technology, there are also strong concerns about how it can be misused to spoof sensors, generate deep fakes, and enable misinformation at scale. Unfortunately, current deep fake detection methods are not sustainable, as the gap between real and fake continues to close. In contrast, our work enables responsible disclosure of such state-of-the-art generative models by allowing researchers and companies to fingerprint their models, so that generated samples containing a fingerprint can be accurately detected and attributed to a source. Our technique achieves this through efficient and scalable ad-hoc generation of a large population of models with distinct fingerprints. Our recommended operating point uses a 128-bit fingerprint, which in principle results in more than $10^{36}$ identifiable models. Experimental results show that our method fulfills key properties of a fingerprinting mechanism and achieves effectiveness in deep fake detection and attribution.
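The capacity claim in the abstract can be checked with simple arithmetic: a 128-bit fingerprint has $2^{128}$ possible values, which exceeds $10^{36}$. The sketch below verifies that bound and illustrates one plausible way to attribute a decoded fingerprint to a registered model by nearest Hamming distance. The `attribute` function, the registry layout, and the `max_mismatches` threshold are hypothetical names chosen for illustration; the abstract does not describe the paper's actual attribution procedure.

```python
from typing import Dict, Optional

FINGERPRINT_BITS = 128  # fingerprint length stated in the abstract

# Number of distinct fingerprints a 128-bit code can represent.
capacity = 2 ** FINGERPRINT_BITS


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions in which two fingerprints differ."""
    return bin(a ^ b).count("1")


def attribute(
    decoded: int,
    registry: Dict[str, int],
    max_mismatches: int = 16,  # assumed tolerance, not taken from the paper
) -> Optional[str]:
    """Return the registered model closest to `decoded`, or None if no
    registered fingerprint is within `max_mismatches` bits."""
    best_name, best_dist = None, FINGERPRINT_BITS + 1
    for name, fingerprint in registry.items():
        dist = hamming_distance(decoded, fingerprint)
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name if best_dist <= max_mismatches else None


if __name__ == "__main__":
    print(capacity > 10 ** 36)  # checks the bound claimed in the abstract
    registry = {"model_a": 0, "model_b": (1 << FINGERPRINT_BITS) - 1}
    noisy = registry["model_a"] ^ 0b1011  # three flipped bits
    print(attribute(noisy, registry))
```

Tolerating a small number of mismatched bits is a design choice that trades robustness to image perturbations against the risk of false attribution; with 128 bits, two randomly chosen fingerprints differ in about 64 positions on average, so a threshold well below that leaves a wide margin.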

Black-Box Watermarking for Generative Adversarial Networks

Aug 03, 2020

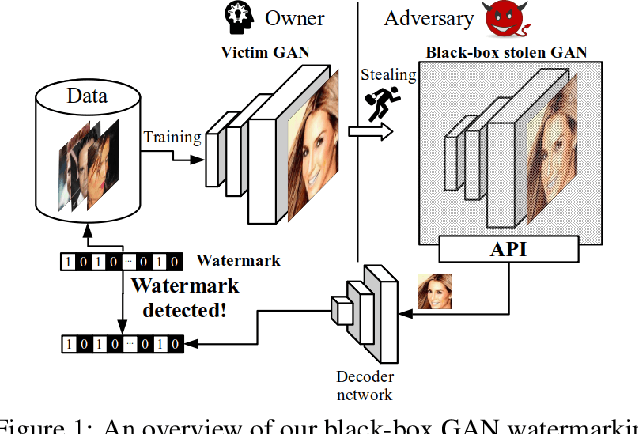

Abstract: As companies start using deep learning to provide value to their customers, the demand for solutions to protect the ownership of trained models becomes evident. Several watermarking approaches have been proposed for protecting discriminative models. However, rapid progress in the task of photorealistic image synthesis, boosted by Generative Adversarial Networks (GANs), raises an urgent need to extend protection to generative models. We propose the first watermarking solution for GAN models. We leverage steganography techniques to watermark the GAN training dataset, transfer the watermark from the dataset to GAN models, and then verify the watermark from generated images. In the experiments, we show that the hidden encoding characteristic of steganography preserves generation quality and supports watermark secrecy against steganalysis attacks. We validate that our watermark verification is robust over wide ranges of several image and model perturbation attacks. Critically, our solution treats GAN models as an independent component: watermark embedding is agnostic to GAN details, and watermark verification relies only on accessing the APIs of black-box GANs. We further extend our watermarking applications to generated image detection and attribution, which offers practical potential to facilitate forensics against deep fakes and responsibility tracking of GAN misuse.
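To make the verification step concrete, here is a minimal sketch of how a watermark decoded from generated images might be checked against the owner's expected bit string. It assumes a hypothetical decoder has already produced a list of bits from images returned by the black-box GAN API; the function names and the significance level `alpha` are illustrative and are not taken from the paper. The check asks how likely the observed number of matching bits would be if every bit agreed only by chance.

```python
from math import comb
from typing import Sequence


def chance_match_probability(n_bits: int, n_matching: int) -> float:
    """Probability that at least `n_matching` of `n_bits` agree when each
    bit matches independently with probability 1/2 (binomial upper tail)."""
    tail = sum(comb(n_bits, k) for k in range(n_matching, n_bits + 1))
    return tail / 2 ** n_bits


def verify_watermark(
    decoded: Sequence[int],
    expected: Sequence[int],
    alpha: float = 1e-6,  # assumed significance level, for illustration only
) -> bool:
    """Accept the watermark if the observed agreement is very unlikely
    to have occurred by chance."""
    if len(decoded) != len(expected):
        raise ValueError("decoded and expected must have the same length")
    matches = sum(d == e for d, e in zip(decoded, expected))
    return chance_match_probability(len(expected), matches) < alpha


if __name__ == "__main__":
    expected = [1, 0] * 32        # hypothetical 64-bit watermark
    decoded = list(expected)
    for i in (3, 17, 40):         # simulate three bit errors
        decoded[i] ^= 1
    print(verify_watermark(decoded, expected))
    print(verify_watermark([0] * 64, expected))
```

A statistical threshold of this kind lets verification tolerate a few bit errors introduced by image or model perturbations while keeping the chance of accepting an unwatermarked model small.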
