Viviane Clay

The Thousand Brains Project: A New Paradigm for Sensorimotor Intelligence

Dec 24, 2024

Abstract: Artificial intelligence has advanced rapidly in the last decade, driven primarily by progress in the scale of deep-learning systems. Despite these advances, the creation of intelligent systems that can operate effectively in diverse, real-world environments remains a significant challenge. In this white paper, we outline the Thousand Brains Project, an ongoing research effort to develop an alternative, complementary form of AI, derived from the operating principles of the neocortex. We present an early version of a thousand-brains system, a sensorimotor agent that is uniquely suited to quickly learn a wide range of tasks and eventually implement any capabilities the human neocortex has. Core to its design is the use of a repeating computational unit, the learning module, modeled on the cortical columns found in mammalian brains. Each learning module operates as a semi-independent unit that can model entire objects, represents information through spatially structured reference frames, and both estimates and is able to effect movement in the world. Learning is a quick, associative process, similar to Hebbian learning in the brain, and leverages inductive biases around the spatial structure of the world to enable rapid and continual learning. Multiple learning modules can interact with one another both hierarchically and non-hierarchically via a "cortical messaging protocol" (CMP), creating more abstract representations and supporting multimodal integration. We outline the key principles motivating the design of thousand-brains systems and provide details about the implementation of Monty, our first instantiation of such a system. Code can be found at https://github.com/thousandbrainsproject/tbp.monty, along with more detailed documentation at https://thousandbrainsproject.readme.io/.

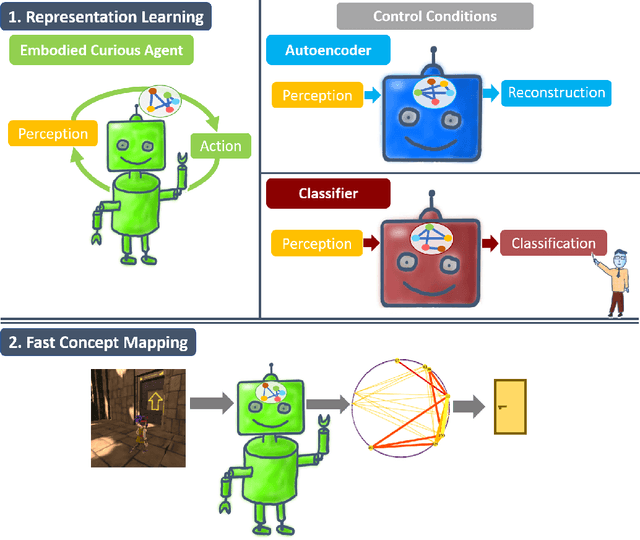

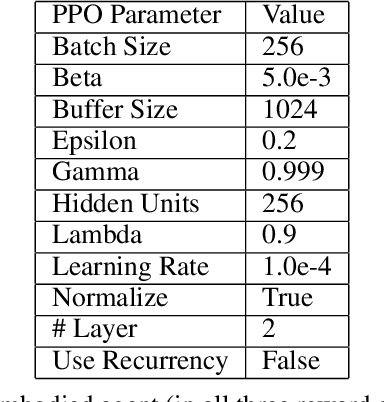

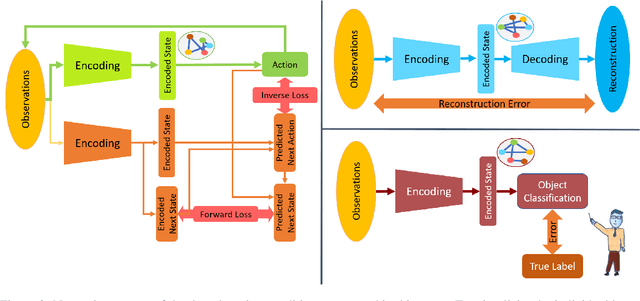

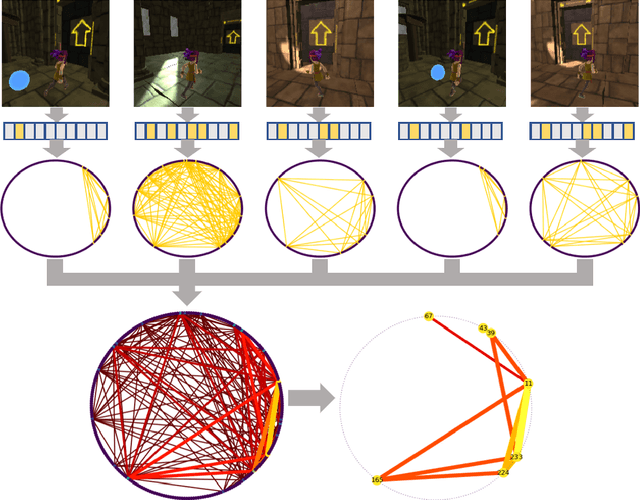

Fast Concept Mapping: The Emergence of Human Abilities in Artificial Neural Networks when Learning Embodied and Self-Supervised

Feb 03, 2021

Abstract: Most artificial neural networks used for object detection and recognition are trained in a fully supervised setup. This is not only very resource-intensive, as it requires large data sets of labeled examples, but also very different from how humans learn. We introduce a setup in which an artificial agent first learns in a simulated world through self-supervised exploration. Following this, the representations learned through interaction with the world can be associated with semantic concepts such as different types of doors. To do this, we use a method we call fast concept mapping, which uses correlated firing patterns of neurons to define and detect semantic concepts. This association works instantaneously with very few labeled examples, similar to what we observe in humans in a phenomenon called fast mapping. Strikingly, this method already identifies objects with as few as one labeled example, which highlights the quality of the encoding learned in a self-supervised way through embodiment using curiosity-driven exploration. It therefore presents a feasible strategy for learning concepts without much supervision and shows that meaningful representations of an environment can be learned through pure interaction with the world.
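
The idea of defining a concept from correlated firing patterns can be illustrated with a toy sketch. This is not the paper's implementation: the function names `define_concept` and `detect_concept`, the thresholds, the intersection rule, and the 6-unit activation vectors are all assumptions made for illustration. The sketch treats a concept as the set of units that are active in every labeled example, and detects the concept in a new sample when most of those units fire again.

```python
from typing import Sequence, Set


def define_concept(examples: Sequence[Sequence[float]],
                   active_threshold: float = 0.5) -> Set[int]:
    """Return indices of units active in every labeled example (assumed rule)."""
    if not examples:
        raise ValueError("need at least one labeled example")
    concept = {i for i, a in enumerate(examples[0]) if a > active_threshold}
    for activation in examples[1:]:
        concept &= {i for i, a in enumerate(activation) if a > active_threshold}
    return concept


def detect_concept(activation: Sequence[float], concept: Set[int],
                   active_threshold: float = 0.5,
                   min_overlap: float = 0.8) -> bool:
    """Flag the concept when enough of its units are active in a new sample."""
    if not concept:
        return False
    hits = sum(1 for i in concept if activation[i] > active_threshold)
    return hits / len(concept) >= min_overlap


# Toy activations of a hypothetical 6-unit hidden layer (made-up numbers).
door_examples = [
    [0.9, 0.1, 0.8, 0.0, 0.7, 0.2],
    [0.8, 0.0, 0.9, 0.6, 0.9, 0.1],
]
door_units = define_concept(door_examples)

new_door = [0.7, 0.3, 0.95, 0.1, 0.8, 0.0]
not_door = [0.1, 0.9, 0.2, 0.8, 0.1, 0.7]
print(sorted(door_units))                    # units shared by both examples
print(detect_concept(new_door, door_units))  # overlaps the concept units
print(detect_concept(not_door, door_units))  # does not overlap
```

Because the concept is just a set of unit indices, it can be built from a single labeled example by passing a one-element list to `define_concept`; no gradient updates are involved, which is the sense in which such a mapping is "fast" in this sketch.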
