Virginijus Marcinkevičius

Hyperspectral Unmixing of Agricultural Images taken from UAV Using Adapted U-Net Architecture

Sep 29, 2024

Abstract: Hyperspectral unmixing is a method that extracts material (usually called endmember) data from the pixels of a hyperspectral data cube, along with their abundances. Because of the lower spatial resolution of hyperspectral sensors, the data in each pixel may contain mixed information from multiple endmembers. In this paper, we create a hyperspectral unmixing dataset from blueberry field data gathered by a hyperspectral camera mounted on a UAV. We also propose a hyperspectral unmixing algorithm based on the U-Net network architecture to achieve more accurate unmixing results on existing and newly created hyperspectral unmixing datasets.
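To make the idea of a mixed pixel concrete, the following is a minimal sketch that assumes the common linear mixing model, which the abstract itself does not specify. The function name `mix_pixel` and the two four-band spectra are hypothetical values chosen for illustration; they are not taken from the paper's dataset or its U-Net method.

```python
def mix_pixel(endmembers, abundances):
    """Return a mixed pixel spectrum under an assumed linear mixing model.

    Each band of the pixel is the abundance-weighted sum of the
    endmember spectra. Abundances must be non-negative and sum to one.
    """
    if len(endmembers) != len(abundances):
        raise ValueError("one abundance is required per endmember")
    if any(a < 0 for a in abundances) or abs(sum(abundances) - 1.0) > 1e-9:
        raise ValueError("abundances must be non-negative and sum to one")
    n_bands = len(endmembers[0])
    return [
        sum(a * spectrum[band] for a, spectrum in zip(abundances, endmembers))
        for band in range(n_bands)
    ]


# Hypothetical 4-band reflectance spectra (illustrative values only).
soil = [0.30, 0.35, 0.40, 0.45]
plant = [0.05, 0.10, 0.08, 0.60]

# A pixel covering 25% soil and 75% plant.
pixel = mix_pixel([soil, plant], [0.25, 0.75])
```

Unmixing is the inverse task: given only `pixel` (and many pixels like it), recover the endmember spectra and the abundances.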

DINO Pre-training for Vision-based End-to-end Autonomous Driving

Jul 15, 2024

Abstract: In this article, we focus on the pre-training of visual autonomous driving agents in the context of imitation learning. Current methods often rely on classification-based pre-training, which we hypothesise holds back the extension of implicit image understanding capabilities. We propose pre-training the visual encoder of a driving agent using the self-distillation with no labels (DINO) method, which relies on a self-supervised learning paradigm. Our experiments in the CARLA environment, in accordance with the Leaderboard benchmark, reveal that the proposed pre-training is more efficient than classification-based pre-training and is on par with the recently proposed pre-training based on visual place recognition (VPRPre).

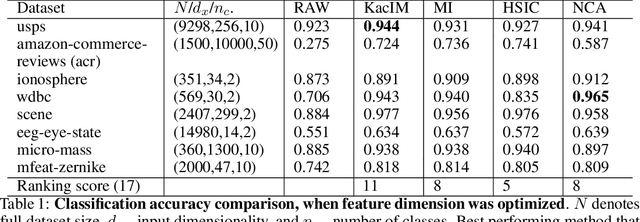

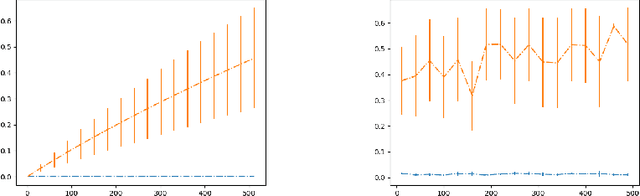

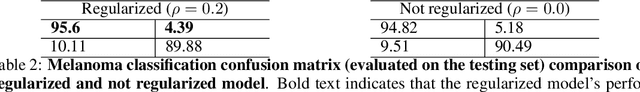

Measuring Statistical Dependencies via Maximum Norm and Characteristic Functions

Aug 16, 2022

Abstract: In this paper, we focus on the problem of statistical dependence estimation using characteristic functions. We propose a statistical dependence measure based on the maximum norm of the difference between the joint and product-marginal characteristic functions. The proposed measure can detect arbitrary statistical dependence between two random vectors of possibly different dimensions, is differentiable, and is easily integrable into modern machine learning and deep learning pipelines. We also conduct experiments with both simulated and real data. Our simulations show that the proposed method can measure statistical dependencies in high-dimensional, non-linear data and is less affected by the curse of dimensionality than previous work in this line of research. The experiments with real data demonstrate the potential applicability of our statistical measure in two different empirical inference scenarios, showing statistically significant improvement in the performance characteristics when applied to supervised feature extraction and deep neural network regularization. In addition, we provide a link to the accompanying open-source repository https://bit.ly/3d4ch5I.
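The quantity described above can be illustrated with empirical characteristic functions. The sketch below is a simplified assumption rather than the authors' implementation: it handles scalar variables only and takes the maximum over a small fixed grid of frequencies, whereas the abstract describes a differentiable measure for random vectors. The names `ecf_gap` and `max_norm_dependence` and the frequency grid are hypothetical.

```python
import cmath
import random


def ecf_gap(xs, ys, t, s):
    """|joint ECF - product of marginal ECFs| at the frequency pair (t, s)."""
    n = len(xs)
    joint = sum(cmath.exp(1j * (t * x + s * y)) for x, y in zip(xs, ys)) / n
    phi_x = sum(cmath.exp(1j * t * x) for x in xs) / n
    phi_y = sum(cmath.exp(1j * s * y) for y in ys) / n
    return abs(joint - phi_x * phi_y)


def max_norm_dependence(xs, ys, freqs):
    """Largest gap over a fixed frequency grid (an assumed simplification)."""
    return max(ecf_gap(xs, ys, t, s) for t in freqs for s in freqs)


rng = random.Random(0)
x = [rng.gauss(0.0, 1.0) for _ in range(500)]
noise = [rng.gauss(0.0, 1.0) for _ in range(500)]
freqs = [0.5 * k for k in range(-4, 5)]

# y = x^2 is uncorrelated with x but fully dependent on it.
dependent = max_norm_dependence(x, [v * v for v in x], freqs)
independent = max_norm_dependence(x, noise, freqs)
```

For independent samples the gap is close to zero at every frequency, up to sampling noise, so `dependent` should come out clearly larger than `independent` even though the relationship is non-linear.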

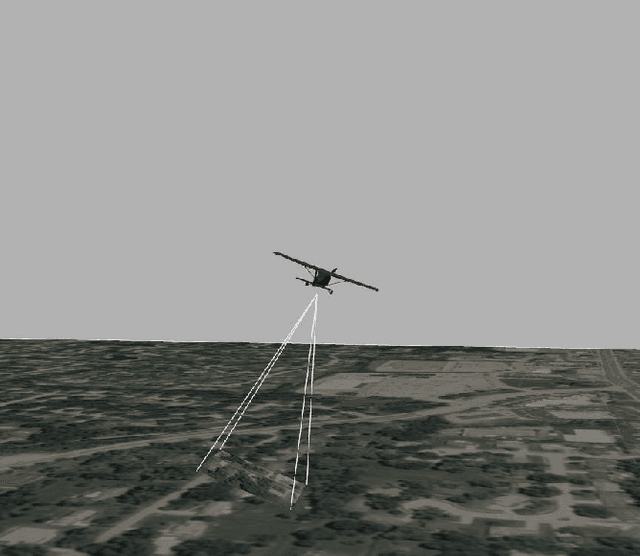

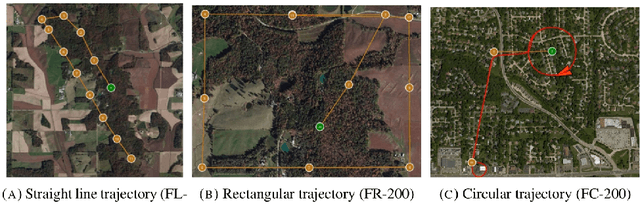

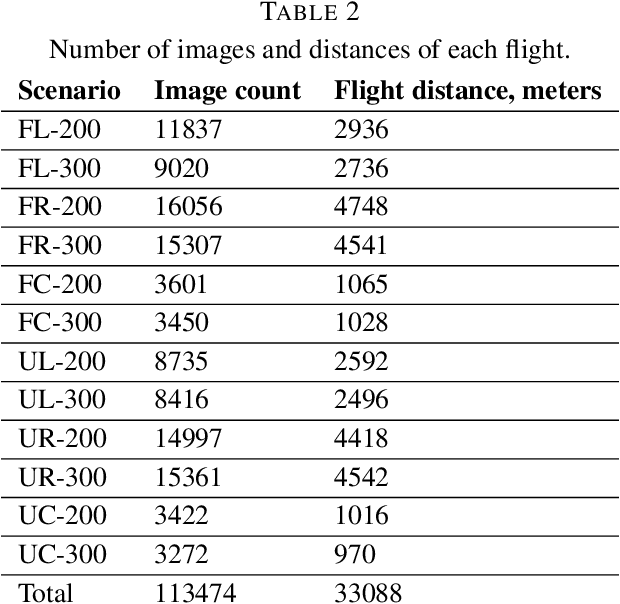

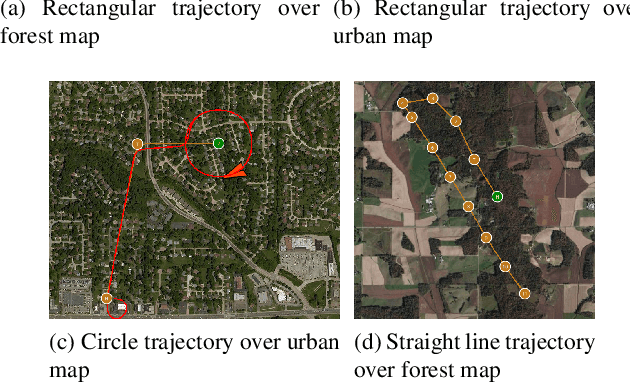

A data set of aerial imagery from robotics simulator for map-based localization systems benchmark

Oct 28, 2019

Abstract: Purpose: This paper presents a new dataset of Aerial Imagery from Robotics simulator (abbr. AIR). The AIR dataset aims to provide a starting point for localization system development and to become a typical benchmark for accuracy comparison of map-based localization algorithms, visual odometry, and SLAM for high-altitude flights. Design/methodology/approach: The presented dataset contains over 100 thousand aerial images captured from the Gazebo robotics simulator using orthophoto maps as a ground plane. Flights with 3 different trajectories are performed on maps from urban and forest environments at different altitudes, totaling over 33 kilometers of flight distance. Findings: The review of previous research shows that the presented dataset is the largest currently available public dataset with downward-facing camera imagery. Originality/value: This paper presents the problem of missing publicly available datasets for high-altitude (100–3000 meters) UAV flights. Current state-of-the-art research on map-based localization systems for UAVs depends on real-life test flights and custom simulated datasets for accuracy evaluation of the algorithms. The presented new dataset solves this problem and aims to help researchers improve and benchmark new algorithms for high-altitude flights.

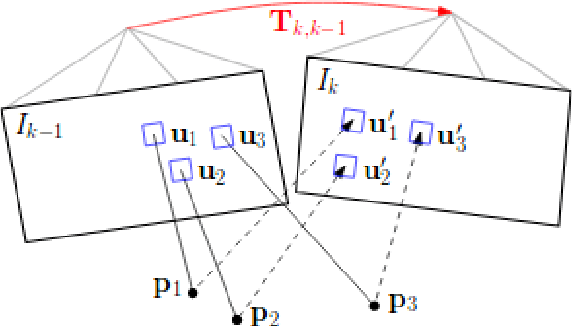

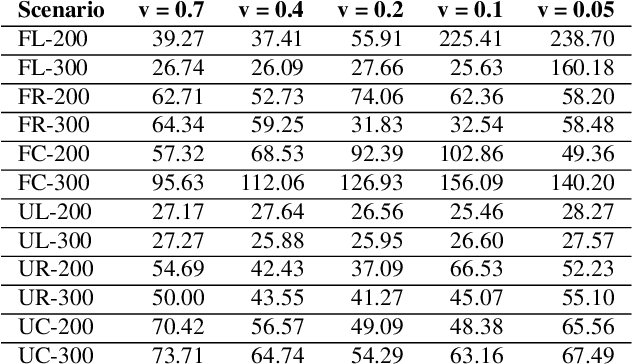

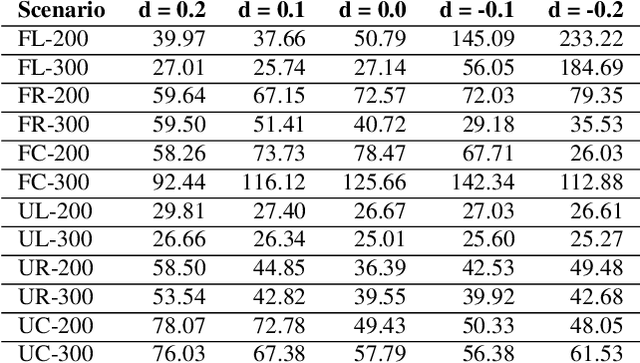

Robust GNSS Denied Localization for UAV Using Particle Filter and Visual Odometry

Oct 26, 2019

Abstract: Conventional autonomous Unmanned Air Vehicle (abbr. UAV) autopilot systems use a Global Navigation Satellite System (abbr. GNSS) signal for navigation. However, autopilot systems fail to navigate when the GNSS signal is lost or jammed. To solve this problem, information from other sensors, such as optical sensors, is used. Monocular Simultaneous Localization and Mapping algorithms have been developed over the last few years and have achieved state-of-the-art accuracy. Map matching localization approaches are also used for UAV localization relative to imagery from static maps such as Google Maps. Unfortunately, the accuracy and robustness of these algorithms are very dependent on up-to-date maps. The purpose of this research is to improve the accuracy and robustness of map-relative Particle Filter based localization using a downward-facing optical camera mounted on an autonomous aircraft. This research shows how the image similarity to likelihood conversion function impacts the results of the Particle Filter localization algorithm. Two parametric image similarity to likelihood conversion functions (logistic and rectifying) are proposed. A dataset of simulated aerial imagery is used for experiments. The experimental results show that the Particle Filter localization algorithm using the logistic function was able to surpass the accuracy of the state-of-the-art ORB-SLAM2 algorithm by 2.6 times. The algorithm is shown to navigate more accurately using up-to-date maps, with an average decrease in precision of 30% when using out-of-date maps.
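The role of a similarity to likelihood conversion function in a Particle Filter weight update can be sketched as follows. The abstract does not give the functional forms or parameters, so everything here is an assumption: the logistic form with `steepness` and `midpoint`, the thresholded "rectifying" form, and the helper `reweight` are hypothetical illustrations, not the paper's definitions.

```python
import math


def logistic_likelihood(similarity, steepness=10.0, midpoint=0.5):
    """Assumed logistic mapping from image similarity to likelihood."""
    return 1.0 / (1.0 + math.exp(-steepness * (similarity - midpoint)))


def rectified_likelihood(similarity, threshold=0.5, floor=1e-6):
    """Assumed rectifying mapping: near-zero below a threshold, linear above."""
    return max(similarity - threshold, floor)


def reweight(weights, similarities, to_likelihood):
    """Multiply particle weights by converted likelihoods and renormalize."""
    raw = [w * to_likelihood(s) for w, s in zip(weights, similarities)]
    total = sum(raw)
    return [r / total for r in raw]


# Four particles with equal prior weight and hypothetical similarity
# scores between the camera image and the map patch at each particle.
weights = [0.25, 0.25, 0.25, 0.25]
similarities = [0.2, 0.5, 0.7, 0.9]

posterior_logistic = reweight(weights, similarities, logistic_likelihood)
posterior_rectified = reweight(weights, similarities, rectified_likelihood)
```

In this sketch the choice of conversion function controls how sharply weight concentrates on the best-matching particles, which is the kind of effect the abstract reports as influencing localization results.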
