Rokas Jurevičius

A data set of aerial imagery from robotics simulator for map-based localization systems benchmark

Oct 28, 2019

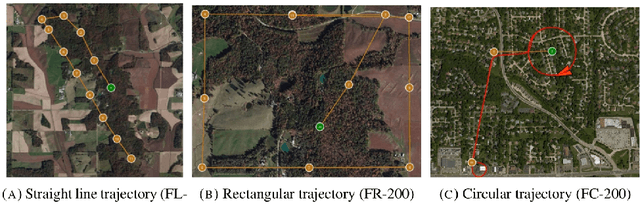

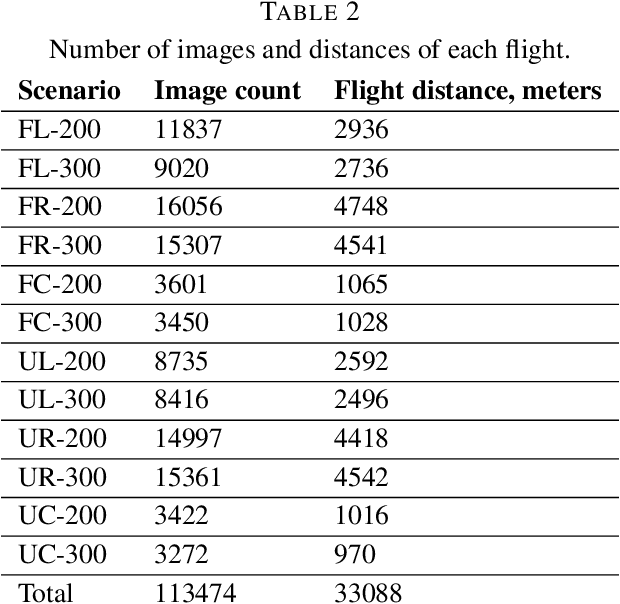

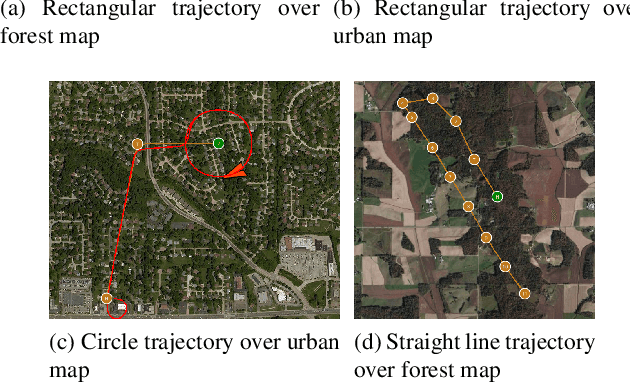

Abstract: Purpose: This paper presents a new dataset of Aerial Imagery from Robotics simulator (abbr. AIR). The AIR dataset aims to provide a starting point for localization system development and to become a common benchmark for comparing the accuracy of map-based localization algorithms, visual odometry, and SLAM for high-altitude flights. Design/methodology/approach: The dataset contains over 100 thousand aerial images captured from the Gazebo robotics simulator using orthophoto maps as a ground plane. Flights along 3 different trajectories are performed over maps of urban and forest environments at different altitudes, totaling over 33 kilometers of flight distance. Findings: A review of previous research shows that the presented dataset is the largest currently available public dataset with downward-facing camera imagery. Originality/value: This paper addresses the lack of publicly available datasets for high-altitude (100 to 3000 meters) UAV flights. Current state-of-the-art research on map-based localization systems for UAVs depends on real-life test flights and custom simulated datasets to evaluate algorithm accuracy. The presented dataset addresses this gap and aims to help researchers improve and benchmark new algorithms for high-altitude flights.

Robust GNSS Denied Localization for UAV Using Particle Filter and Visual Odometry

Oct 26, 2019

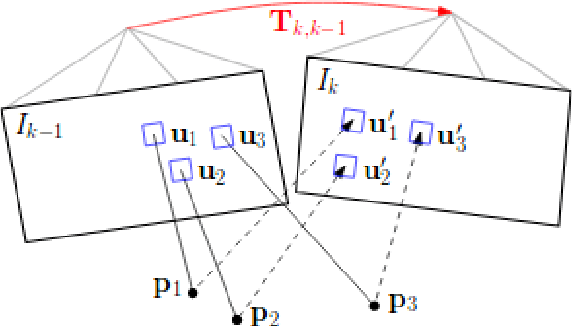

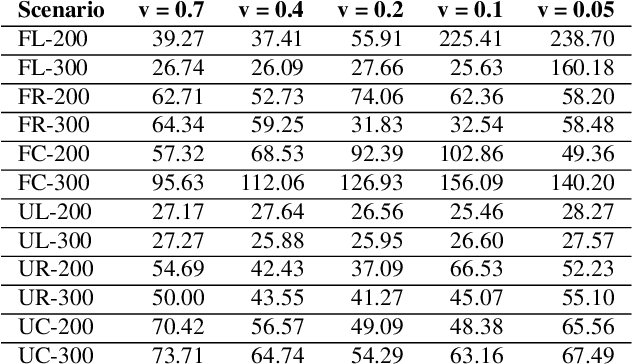

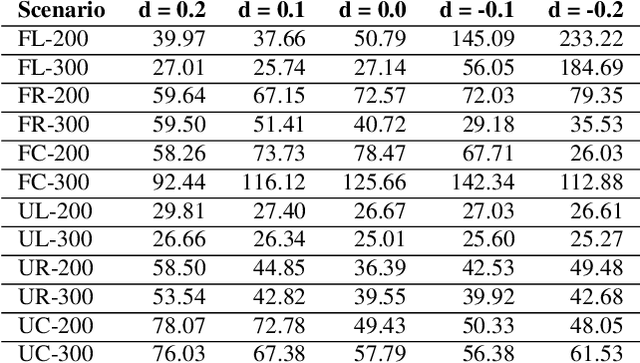

Abstract: Conventional autonomous Unmanned Air Vehicle (abbr. UAV) autopilot systems use the Global Navigation Satellite System (abbr. GNSS) signal for navigation. However, autopilot systems fail to navigate when the GNSS signal is lost or jammed. To solve this problem, information from other sensors, such as optical sensors, is used. Monocular Simultaneous Localization and Mapping algorithms have been developed over the last few years and have achieved state-of-the-art accuracy. Map-matching localization approaches are also used to localize a UAV relative to imagery from static maps such as Google Maps. Unfortunately, the accuracy and robustness of these algorithms depend heavily on up-to-date maps. The purpose of this research is to improve the accuracy and robustness of map-relative Particle Filter based localization using a downward-facing optical camera mounted on an autonomous aircraft. This research shows how the function that converts image similarity to likelihood impacts the results of the Particle Filter localization algorithm. Two parametric image-similarity-to-likelihood conversion functions (logistic and rectifying) are proposed. A dataset of simulated aerial imagery is used for the experiments. The experimental results show that the Particle Filter localization algorithm using the logistic function surpassed the accuracy of the state-of-the-art ORB-SLAM2 algorithm by a factor of 2.6. The algorithm is shown to navigate more accurately using up-to-date maps, with an average decrease in precision of 30% when using out-of-date maps.
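The idea of converting image similarity to a particle likelihood can be illustrated with a minimal sketch. The abstract does not give the exact form or parameters of the two functions, so everything below is an assumption made for illustration: the function names, the `midpoint`, `steepness`, `threshold`, and `floor` parameters, and the choice of a thresholded linear ramp as the "rectifying" variant are hypothetical and not the paper's definitions.

```python
import math


def logistic_likelihood(similarity, midpoint=0.5, steepness=10.0):
    """Map a similarity score to a likelihood in (0, 1) with a logistic curve.

    midpoint and steepness are hypothetical parameters, not values from the paper.
    """
    return 1.0 / (1.0 + math.exp(-steepness * (similarity - midpoint)))


def rectifying_likelihood(similarity, threshold=0.5, floor=1e-6):
    """Assumed 'rectifying' variant: near zero below threshold, linear above it.

    The small floor keeps every particle weight strictly positive.
    """
    return max(similarity - threshold, 0.0) + floor


def reweight(weights, similarities, to_likelihood):
    """One particle-filter measurement update: scale each weight, then normalize."""
    updated = [w * to_likelihood(s) for w, s in zip(weights, similarities)]
    total = sum(updated)
    return [u / total for u in updated]


# Three particles with equal prior weight and different map-match similarities.
prior = [1 / 3, 1 / 3, 1 / 3]
similarities = [0.2, 0.5, 0.9]
posterior = reweight(prior, similarities, logistic_likelihood)
```

With either function, particles whose camera view matches the map better receive a larger share of the normalized weight; the shape of the conversion function controls how sharply weak matches are suppressed.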
