Vineeth S. Bhaskara

GraN-GAN: Piecewise Gradient Normalization for Generative Adversarial Networks

Nov 04, 2021

Abstract: Modern generative adversarial networks (GANs) predominantly use piecewise linear activation functions in discriminators (or critics), including ReLU and LeakyReLU. Such models learn piecewise linear mappings, where each piece handles a subset of the input space, and the gradients per subset are piecewise constant. Under such a class of discriminator (or critic) functions, we present Gradient Normalization (GraN), a novel input-dependent normalization method, which guarantees a piecewise K-Lipschitz constraint in the input space. In contrast to spectral normalization, GraN does not constrain processing at the individual network layers, and, unlike gradient penalties, strictly enforces a piecewise Lipschitz constraint almost everywhere. Empirically, we demonstrate improved image generation performance across multiple datasets (incl. CIFAR-10/100, STL-10, LSUN bedrooms, and CelebA), GAN loss functions, and metrics. Further, we analyze altering the often untuned Lipschitz constant K in several standard GANs, not only attaining significant performance gains, but also finding connections between K and training dynamics, particularly in low-gradient loss plateaus, with the common Adam optimizer.
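The piecewise argument in this abstract can be illustrated with a small sketch. The abstract does not state the normalization formula, so the form used here, dividing the critic output by the norm of its input gradient and scaling by K, is an assumption, as are the toy network, its weights, and all function names. Within one linear piece the input gradient is constant, so the normalized output has input-gradient norm K there.

```python
import math

# Hypothetical one-hidden-layer ReLU critic with hand-picked weights (illustration only).
W1 = [[1.0, -2.0], [0.5, 1.5], [-1.0, 0.5]]
B1 = [0.1, -0.2, 0.3]
W2 = [1.0, -1.5, 2.0]
B2 = 0.05
PARAMS = (W1, B1, W2, B2)
X = [0.9, 0.2]  # a point well inside one linear piece of the critic


def relu_net(x, w1, b1, w2, b2):
    """Return (f(x), grad_x f(x)) for the piecewise linear critic."""
    pre = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(w1, b1)]
    active = [1.0 if p > 0 else 0.0 for p in pre]
    f = sum(v * a * p for v, a, p in zip(w2, active, pre)) + b2
    # Inside a linear piece the gradient is a fixed combination of active rows.
    grad = [sum(v * a * row[j] for v, a, row in zip(w2, active, w1))
            for j in range(len(x))]
    return f, grad


def gran(x, params, K=1.0, eps=1e-12):
    """Assumed normalization: g(x) = K * f(x) / (||grad_x f(x)|| + eps)."""
    f, grad = relu_net(x, *params)
    norm = math.sqrt(sum(g * g for g in grad))
    return K * f / (norm + eps)


def fd_grad_norm(fn, x, h=1e-5):
    """Norm of the central finite-difference gradient of fn at x."""
    total = 0.0
    for j in range(len(x)):
        up = list(x)
        down = list(x)
        up[j] += h
        down[j] -= h
        total += ((fn(up) - fn(down)) / (2 * h)) ** 2
    return math.sqrt(total)


raw_norm = fd_grad_norm(lambda x: relu_net(x, *PARAMS)[0], X)
normalized_norm = fd_grad_norm(lambda x: gran(x, PARAMS, K=2.0), X)
print(raw_norm, normalized_norm)
```

The raw critic's gradient norm depends on the weights, while the normalized output's gradient norm equals the chosen K away from the activation boundaries, without constraining any individual layer.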

Exploiting Uncertainty of Loss Landscape for Stochastic Optimization

May 30, 2019

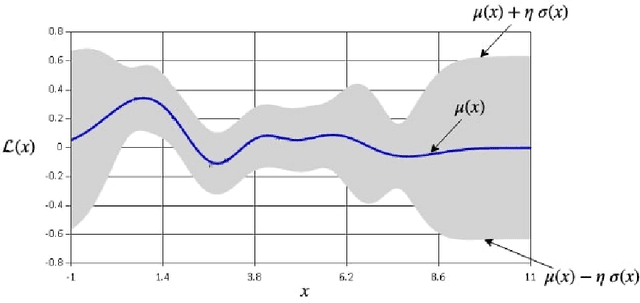

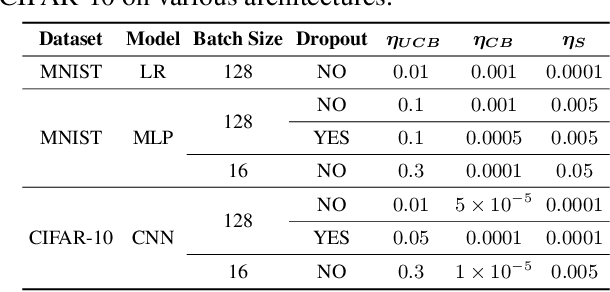

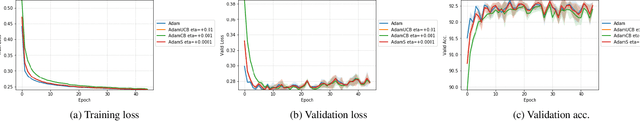

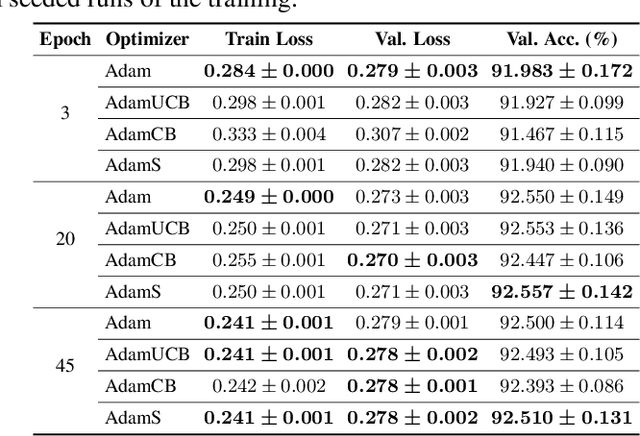

Abstract: We introduce novel variants of momentum by incorporating the variance of the stochastic loss function. The variance characterizes the confidence or uncertainty of the local features of the averaged loss surface across the i.i.d. subsets of the training data defined by the mini-batches. We show two applications of the gradient of the variance of the loss function. First, as a bias to the conventional momentum update to encourage conformity of the local features of the loss function (e.g. local minima) across mini-batches to improve generalization and the cumulative training progress made per epoch. Second, as an alternative direction for "exploration" in the parameter space, especially for non-convex objectives, that exploits both the optimistic and pessimistic views of the loss function in the face of uncertainty. We also introduce a novel data-driven stochastic regularization technique through the parameter update rule that is model-agnostic and compatible with arbitrary architectures. We further establish connections to probability distributions over loss functions and the REINFORCE policy gradient update with baseline in RL. Finally, we incorporate the proposed variants of momentum into Adam, and empirically show that our methods improve the rate of convergence of training in our experiments on the MNIST and CIFAR-10 datasets.
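The gradient of the loss variance mentioned in this abstract has a compact form that suggests where the REINFORCE connection could come from. The notation below is an assumption for illustration and is not taken from the paper: $L_i(\theta)$ is the loss on mini-batch $i$, $\mathbb{E}_i$ averages over mini-batches, and $\bar{L}(\theta) = \mathbb{E}_i[L_i(\theta)]$.

$$
\nabla_\theta \operatorname{Var}_i\!\left[L_i(\theta)\right]
= \nabla_\theta\!\left(\mathbb{E}_i\!\left[L_i(\theta)^2\right] - \bar{L}(\theta)^2\right)
= 2\,\mathbb{E}_i\!\left[\left(L_i(\theta) - \bar{L}(\theta)\right)\nabla_\theta L_i(\theta)\right]
$$

Each mini-batch gradient is weighted by how far its loss lies from the mean loss, which plays a role similar to a baseline-subtracted return in a policy gradient estimate.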

Emulating malware authors for proactive protection using GANs over a distributed image visualization of dynamic file behavior

Jul 30, 2018

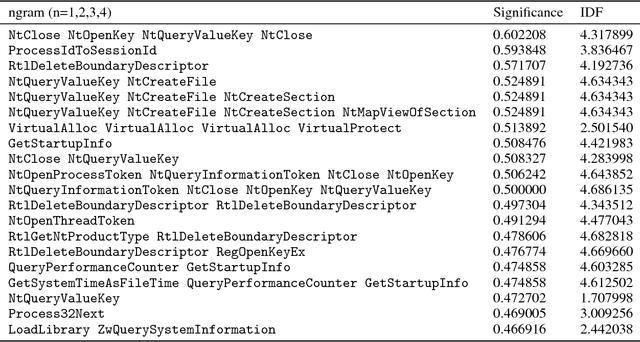

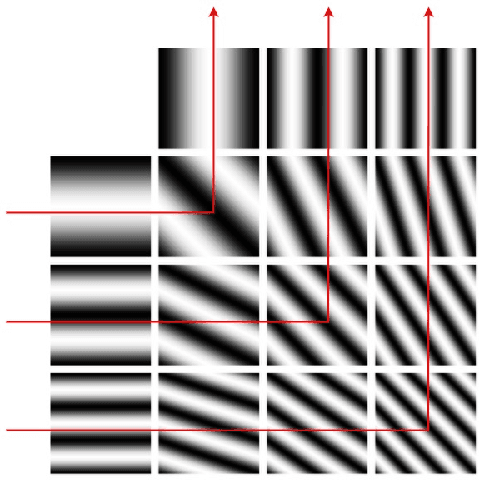

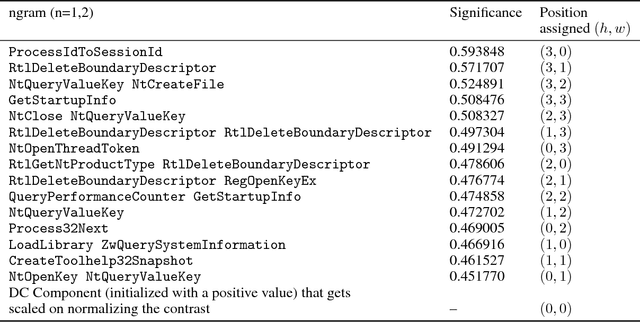

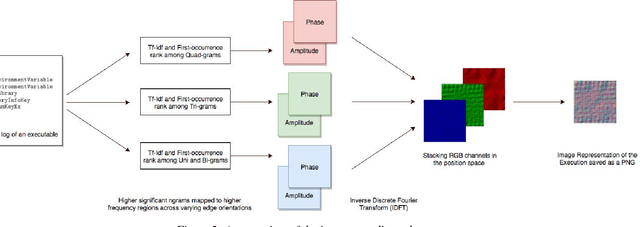

Abstract: Malware authors have always had the advantage of being able to adversarially test and augment their malicious code, before deploying the payload, using anti-malware products at their disposal. The anti-malware developers and threat experts, on the other hand, do not have the privilege of proactively tuning anti-malware products against zero-day attacks. This allows the malware authors to stay a step ahead of the anti-malware products, fundamentally biasing the cat-and-mouse game played by the two parties. In this paper, we propose a way that would enable machine learning based threat prevention models to bridge that gap by being able to tune against a deep generative adversarial network (GAN), which takes up the role of a malware author and generates new types of malware. The GAN is trained over a reversible distributed RGB image representation of known malware behaviors, encoding the sequence of API call n-grams and the corresponding term frequencies. The generated images represent synthetic malware that can be decoded back to the underlying API call sequence information. The image representation is demonstrated not only as a general technique for incorporating the priors needed to exploit convolutional neural network architectures for generative or discriminative modeling, but also as a visualization method for easy manual software or malware categorization, by having individual API n-gram information distributed across the image space. In addition, we propose using smart-definitions for detecting malware based on perceptual hashing of these images. Such hashes are potentially more effective than cryptographic hashes, which do not carry any meaningful similarity metric and hence do not generalize well.
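The contrast between perceptual and cryptographic hashes can be sketched on a toy image. The abstract does not say which perceptual hash is used, so the average hash below is a stand-in, and the small grayscale grids are hypothetical substitutes for the RGB behavior images described above.

```python
import hashlib


def average_hash(pixels):
    """Stand-in perceptual hash: one bit per pixel, set when above the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return [1 if p > mean else 0 for p in flat]


def hamming(a, b):
    """Number of differing bits between two equal-length bit lists."""
    return sum(x != y for x, y in zip(a, b))


def sha256_hex(pixels):
    """Cryptographic hash of the raw pixel bytes."""
    return hashlib.sha256(bytes(p for row in pixels for p in row)).hexdigest()


# Hypothetical 4x4 grayscale "behavior image" and two variants.
BASE = [[10, 200, 30, 220],
        [15, 210, 25, 230],
        [240, 20, 250, 5],
        [235, 12, 245, 8]]
NEAR = [[min(255, p + 3) for p in row] for row in BASE]  # slight perturbation
FAR = [[255 - p for p in row] for row in BASE]           # inverted pattern

print(hamming(average_hash(BASE), average_hash(NEAR)))  # small distance
print(hamming(average_hash(BASE), average_hash(FAR)))   # large distance
print(sha256_hex(BASE) == sha256_hex(NEAR))             # digests differ
```

The Hamming distance between perceptual hashes grows with visual dissimilarity, while the SHA-256 digests of the base and perturbed images simply differ and say nothing about how close the two inputs are.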
