Victor Araujo

Mitigating Bias in Facial Analysis Systems by Incorporating Label Diversity

Apr 13, 2022

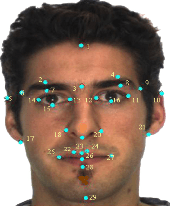

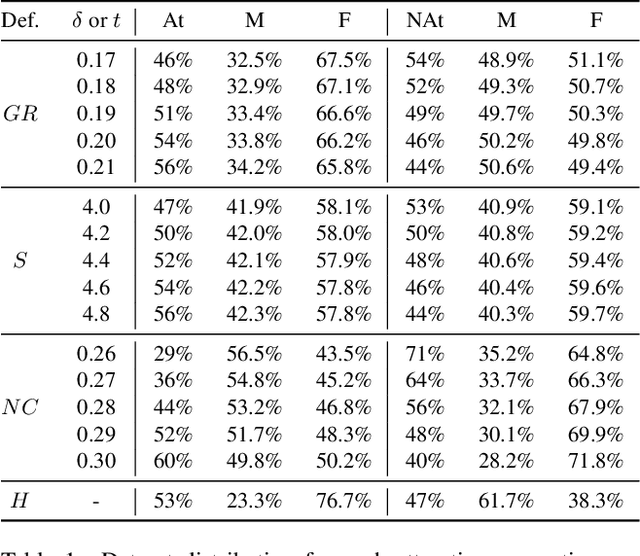

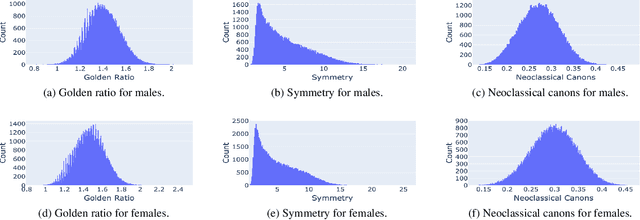

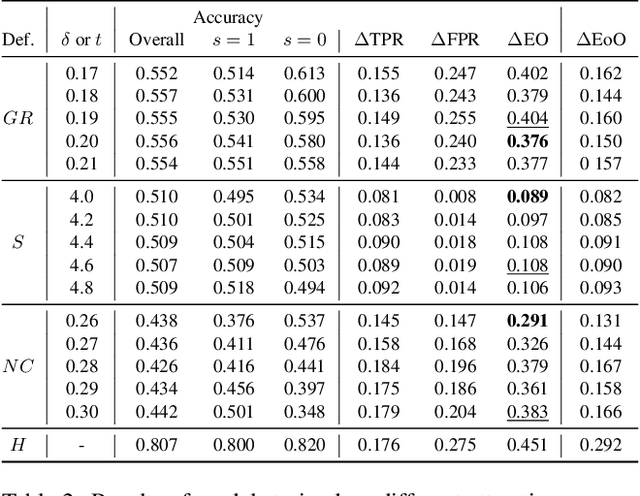

Abstract: Facial analysis models are increasingly used in real-world applications that have a significant impact on people's lives. However, as previously shown, models that automatically classify facial attributes may discriminate against protected groups, with potentially negative consequences for individuals and society. It is therefore critical to develop techniques that mitigate unintended biases in facial classifiers. In this work, we introduce a novel learning method that combines subjective human-based labels with objective annotations based on mathematical definitions of facial traits. Specifically, we generate new objective annotations from a large-scale human-annotated dataset, each capturing a different perspective of the analyzed facial trait. We then propose an ensemble learning method that combines individual models trained on different types of annotations. We provide an in-depth analysis of the annotation procedure and of the dataset distribution. Moreover, we empirically demonstrate that, by incorporating label diversity and without additional synthetic images, our method successfully mitigates unintended biases while maintaining significant accuracy on the downstream task.
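The abstract does not say how the individual models are combined, so the following is only a minimal sketch of one common ensembling scheme, soft voting, in which the class probabilities from several models are averaged. The function name `soft_vote`, the optional weights, and the three probability arrays are hypothetical and chosen for illustration; they are not taken from the paper.

```python
import numpy as np


def soft_vote(prob_list, weights=None):
    """Average class probabilities from several models (soft voting).

    prob_list: list of arrays, each of shape (n_samples, n_classes).
    weights:   optional per-model weights; uniform if omitted.
    Returns the averaged probabilities and the predicted class indices.
    """
    probs = np.stack(prob_list, axis=0)  # (n_models, n_samples, n_classes)
    if weights is None:
        weights = np.ones(probs.shape[0])
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()  # normalize so the weights sum to 1
    avg = np.tensordot(weights, probs, axes=1)  # (n_samples, n_classes)
    return avg, avg.argmax(axis=1)


# Hypothetical outputs of three models, each trained on a different
# annotation type (one human-labeled, two derived from geometric definitions).
p_human = np.array([[0.9, 0.1], [0.4, 0.6]])
p_geom_a = np.array([[0.6, 0.4], [0.3, 0.7]])
p_geom_b = np.array([[0.2, 0.8], [0.1, 0.9]])

avg, labels = soft_vote([p_human, p_geom_a, p_geom_b])
print(labels)
```

With uniform weights each annotation type contributes equally, so a model trained on one labeling perspective cannot dominate the prediction on its own. Whether the paper uses averaging, weighting, or another combination rule is not stated in the abstract.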

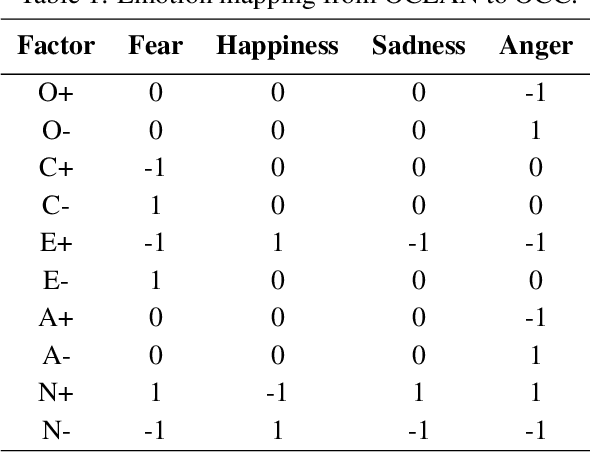

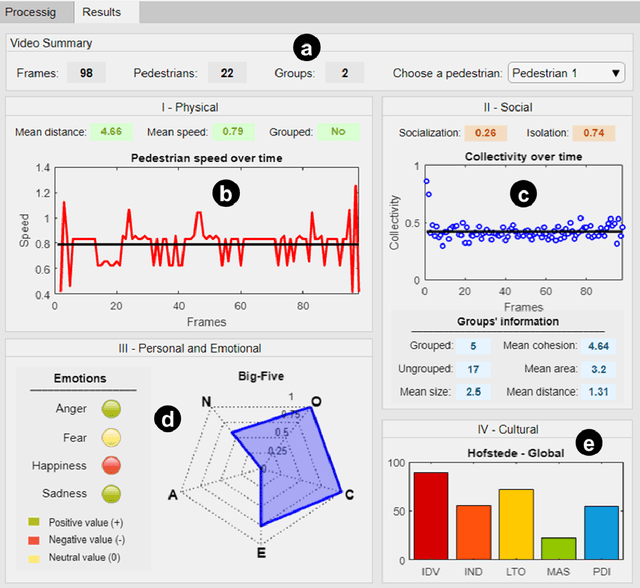

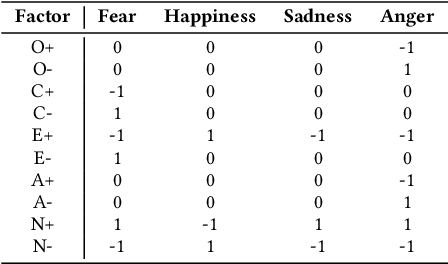

A Software to Detect OCC Emotion, Big-Five Personality and Hofstede Cultural Dimensions of Pedestrians from Video Sequences

Aug 18, 2019

Abstract: This paper presents a video analysis application that detects personality, emotion, and cultural aspects of pedestrians in video sequences, along with a visualizer of features. The proposed model considers a series of characteristics of the pedestrians and the crowd, such as the number and size of groups, distances, and speeds, and maps these characteristics to personalities, emotions, and cultural aspects, using the Cultural Dimensions of Hofstede (HCD), the Big-Five Personality Model (OCEAN), and the OCC Emotional Model. The main hypothesis is that there is a relationship between so-called intrinsic human variables (such as emotion) and the way people behave in space and time. The software was tested on a set of videos from different countries, and the results seem promising for identifying these three levels of psychological traits in the filmed sequences. In addition, the data of the people present in the videos can be viewed in a crowd viewer.

How much do you perceive this? An analysis on perceptions of geometric features, personalities and emotions in virtual humans

Apr 24, 2019

Abstract: This work evaluates people's perception of geometric features, personalities, and emotions in virtual humans. As a basis, we use a dataset containing tracking files of pedestrians captured from spontaneous videos, and we visualize the pedestrians as identical virtual humans, so that viewers focus on their behavior and are not distracted by other features. In addition to the tracking files containing their positions, the dataset also contains pedestrian emotions and personalities detected using Computer Vision and Pattern Recognition techniques. Our analysis asks whether subjects can perceive geometric features, such as distances and speeds, as well as emotions and personalities in video sequences when pedestrians are represented by virtual humans. A total of 73 people volunteered for the experiment. The analysis was divided into two parts: i) evaluation of the perception of geometric characteristics, such as density, angular variation, distances, and speeds, and ii) evaluation of personality and emotion perceptions. Results indicate that, even without explaining to the participants the concepts of each personality or emotion or how they were calculated (from geometric characteristics), in most cases participants perceived the personality and emotion expressed by the virtual agents in accordance with the available ground truth.
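The geometric characteristics named in the abstract (speeds, angular variation, distances) can be derived from tracked positions. The sketch below shows one plausible way to compute them; it is not the authors' definition. It assumes each track is an array of 2D positions sampled once per frame, and the function names, the `fps` parameter, and the sample tracks are all hypothetical.

```python
import numpy as np


def speeds(track, fps):
    """Per-step speed from an (n_frames, 2) array of positions.

    Units are position units per second, assuming `fps` frames per second.
    """
    steps = np.diff(track, axis=0)
    return np.linalg.norm(steps, axis=1) * fps


def angular_variation(track):
    """Mean absolute change in heading (radians) between consecutive steps."""
    steps = np.diff(track, axis=0)
    headings = np.arctan2(steps[:, 1], steps[:, 0])
    turns = np.diff(headings)
    turns = (turns + np.pi) % (2 * np.pi) - np.pi  # wrap to [-pi, pi)
    return float(np.mean(np.abs(turns))) if turns.size else 0.0


def mean_distance(track_a, track_b):
    """Mean Euclidean distance between two tracks of equal length."""
    return float(np.mean(np.linalg.norm(track_a - track_b, axis=1)))


# Hypothetical tracks: one pedestrian walks right, then turns upward;
# a companion follows the same path two units to the side.
walker = np.array([[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [2.0, 1.0]])
companion = walker + np.array([0.0, 2.0])

print(speeds(walker, fps=1.0))
print(angular_variation(walker))
print(mean_distance(walker, companion))
```

Density is omitted here because it depends on how the observed area is defined, which the abstract does not specify.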
