Vasudevan Lakshminarayanan

OW-SLR: Overlapping Windows on Semi-Local Region for Image Super-Resolution

Nov 16, 2023

Abstract: There has been considerable progress in implicit neural representation to upscale an image to any arbitrary resolution. However, existing methods are based on defining a function to predict the Red, Green and Blue (RGB) value from just four specific loci. Relying on just four loci is insufficient, as it leads to losing fine details from the neighboring region(s). We show that taking the semi-local region into account leads to an improvement in performance. In this paper, we propose applying a new technique called Overlapping Windows on Semi-Local Region (OW-SLR) to an image to obtain any arbitrary resolution by taking the coordinates of the semi-local region around a point in the latent space. This extracted detail is used to predict the RGB value of a point. We illustrate the technique by applying the algorithm to Optical Coherence Tomography-Angiography (OCT-A) images and show that it can upscale them to arbitrary resolutions. This technique outperforms the existing state-of-the-art methods when applied to the OCT500 dataset. OW-SLR provides better results for classifying healthy and diseased retinal images, such as normal and diabetic retinopathy cases, from the given set of OCT-A images. The project page is available at https://rishavbb.github.io/ow-slr/index.html

Direct Estimation of Pupil Parameters Using Deep Learning for Visible Light Pupillometry

May 10, 2023

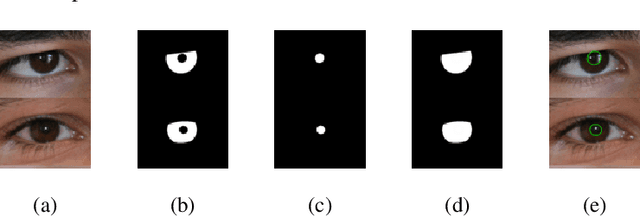

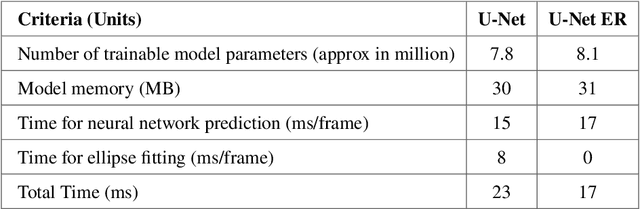

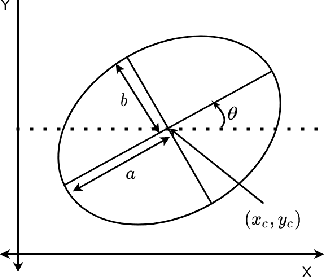

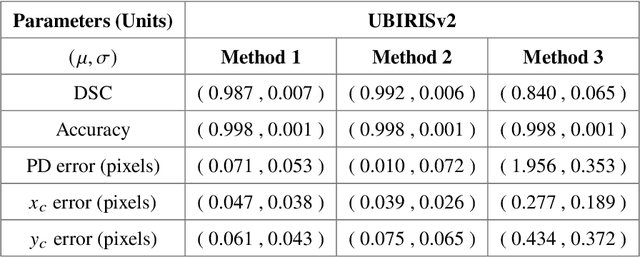

Abstract: The pupil reflex to variations in illumination and its associated dynamics are of importance in neurology and ophthalmology. This is typically measured using a near-infrared (IR) pupillometer to avoid Purkinje reflections that appear when strong Visible Light (VL) illumination is present. Previously we demonstrated the use of deep learning techniques to accurately detect the pupil pixels (segmentation mask) in VL images for performing VL pupillometry. Here, we present a method to obtain the parameters of the elliptical pupil boundary along with the segmentation mask. This eliminates the need for an additional, computationally expensive post-processing step of ellipse fitting and also improves segmentation accuracy. Using the time-varying ellipse parameters of the pupil, we can compute the dynamics of the Pupillary Light Reflex (PLR). We also present preliminary evaluations of our deep-learning algorithms on clinical data. This work is a significant push toward our goal of developing and validating a smartphone-based VL pupillometer that can be used in the field.
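As a rough illustration of how time-varying ellipse parameters could feed a PLR measurement, the sketch below converts per-frame semi-axes into pupil areas and reports the relative constriction. The function names, the use of the first frame as baseline, and the choice of relative area change as the summary metric are all assumptions made for this example; the abstract does not specify them.

```python
import math


def ellipse_area(semi_major, semi_minor):
    """Area of an ellipse from its two semi-axis lengths."""
    return math.pi * semi_major * semi_minor


def relative_constriction(semi_axes_per_frame):
    """Relative drop in pupil area from the first frame to the smallest frame.

    semi_axes_per_frame: list of (semi_major, semi_minor) tuples, one per frame.
    Assumption: the first frame is taken as the pre-stimulus baseline.
    """
    areas = [ellipse_area(a, b) for a, b in semi_axes_per_frame]
    baseline = areas[0]
    smallest = min(areas)
    return (baseline - smallest) / baseline


# Hypothetical semi-axes in millimetres for three frames.
frames = [(2.0, 2.0), (1.0, 1.0), (1.5, 1.5)]
print(round(relative_constriction(frames), 2))
```

Area is used here rather than a single diameter because an elliptical boundary has two axes; any other scalar derived from the ellipse parameters could be substituted.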

FAZSeg: A New User-Friendly Software for Quantification of the Foveal Avascular Zone

Nov 22, 2021

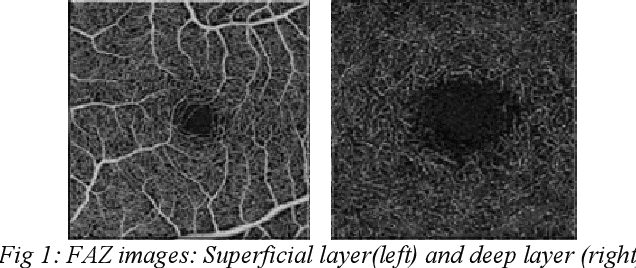

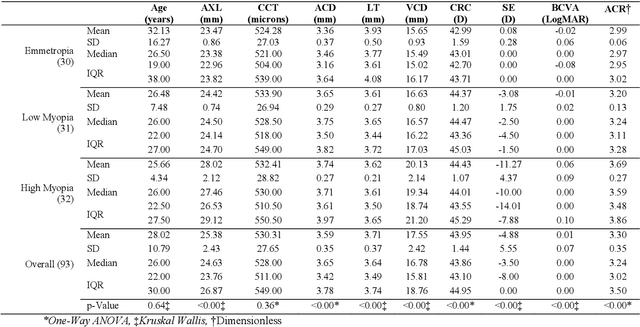

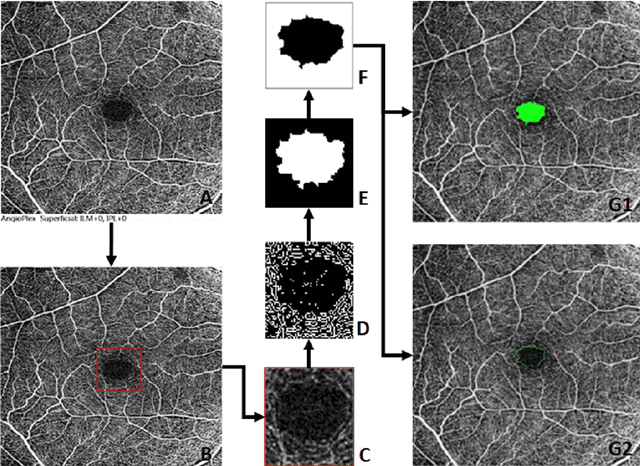

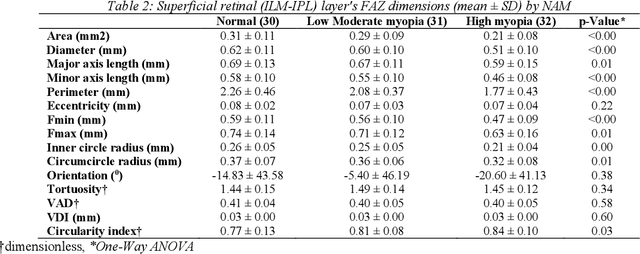

Abstract: Various ocular diseases and high myopia influence the dimensions of the Foveal Avascular Zone (FAZ), an anatomical reference point. Therefore, it is important to segment and quantify the FAZ's dimensions accurately. To the best of our knowledge, there is no automated tool or algorithm available to segment the FAZ's deep retinal layer. The paper describes a new open-access software with a user-friendly Graphical User Interface (GUI) and compares the results with the ground truth (manual segmentation).

Automated Detection and Diagnosis of Diabetic Retinopathy: A Comprehensive Survey

Jun 30, 2021

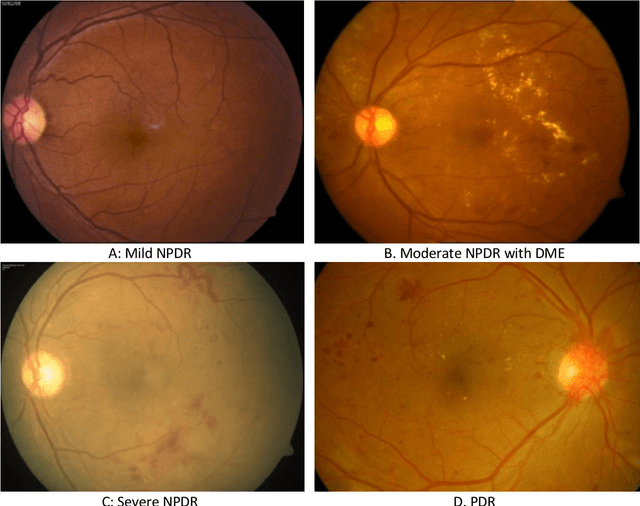

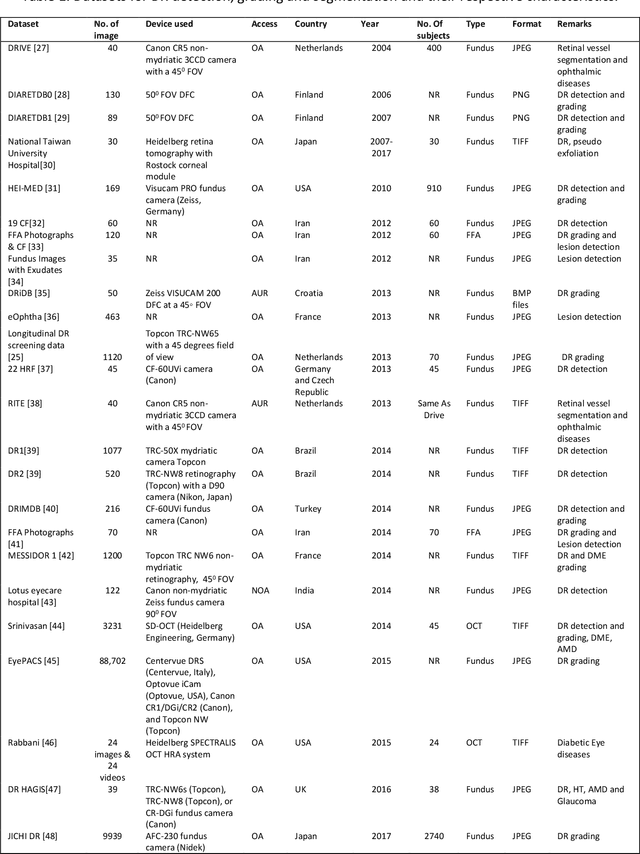

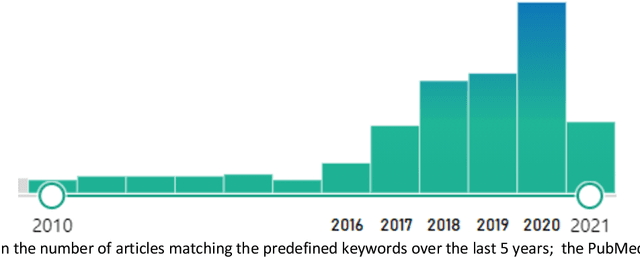

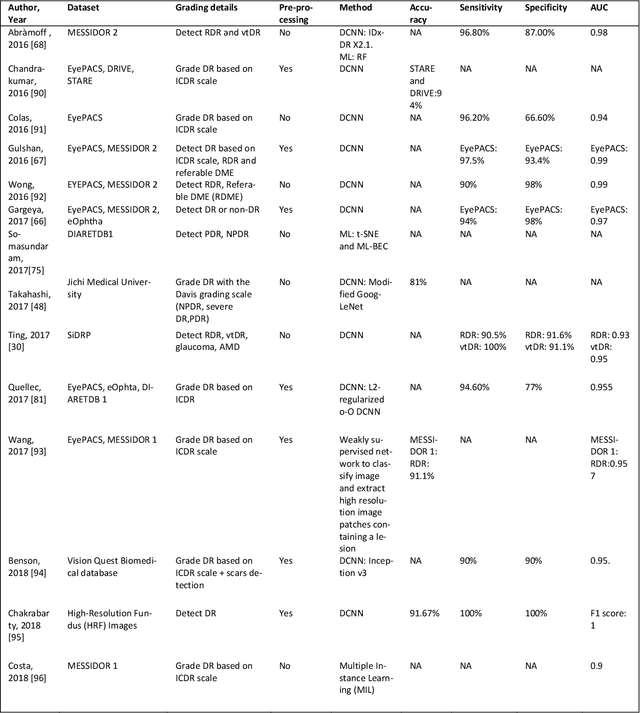

Abstract: Diabetic Retinopathy (DR) is a leading cause of vision loss in the world. In the past few years, Artificial Intelligence (AI) based approaches have been used to detect and grade DR. Early detection enables appropriate treatment and thus prevents vision loss. Both fundus and optical coherence tomography (OCT) images are used to image the retina. With deep learning/machine learning approaches it is possible to extract features from the images and detect the presence of DR. Multiple strategies are implemented to detect and grade the presence of DR using classification, segmentation, and hybrid techniques. This review covers the literature dealing with AI approaches to DR that have been published in the open literature over a five-year span (2016-2021). In addition, a comprehensive list of available DR datasets is reported. Both the PICO (P-patient, I-intervention, C-control, O-outcome) and Preferred Reporting Items for Systematic Review and Meta-Analysis (PRISMA) 2009 search strategies were employed. We summarize a total of 114 published articles which conformed to the scope of the review. In addition, a list of 43 major datasets is presented.

Rapid Classification of Glaucomatous Fundus Images

Feb 08, 2021

Abstract: We propose a new method for training convolutional neural networks which integrates reinforcement learning with supervised learning, and use it for transfer learning for classification of glaucoma from colored fundus images. The training method uses hill climbing techniques via two different climber types, viz. "random movement" and "random detection", integrated with a supervised learning model through a stochastic gradient descent with momentum (SGDM) model. The model was trained and tested using the Drishti GS and RIM-ONE-r2 datasets, which contain glaucomatous and normal fundus images. The performance metrics for prediction were tested by transfer learning on five CNN architectures, namely GoogLeNet, DenseNet-201, NASNet, VGG-19 and Inception-ResNet-v2. A fivefold classification was used for evaluating the performance, and high sensitivities were achieved while maintaining high accuracies. Of the models tested, the DenseNet-201 architecture performed the best in terms of sensitivity and area under the curve (AUC). This method of training allows transfer learning on small datasets and can be applied for tele-ophthalmology applications including training with local datasets.

Uncertainty aware and explainable diagnosis of retinal disease

Jan 26, 2021

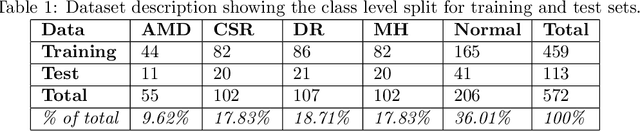

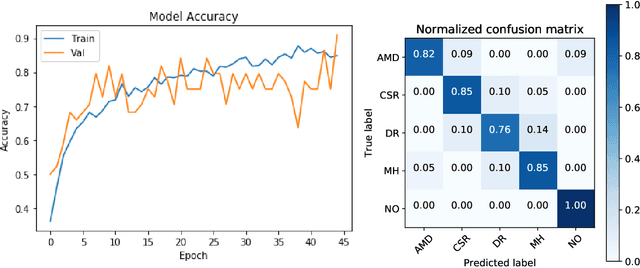

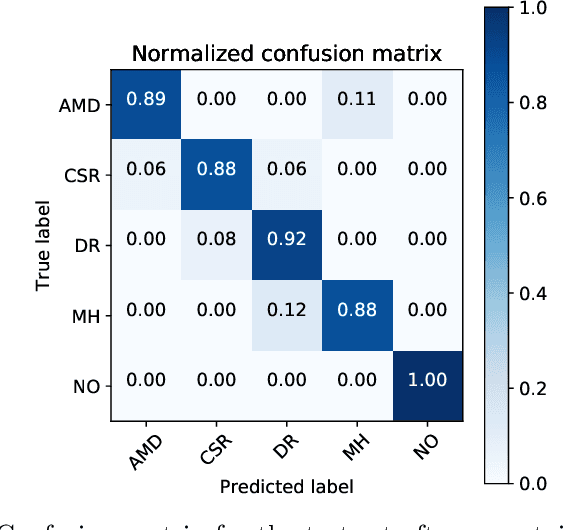

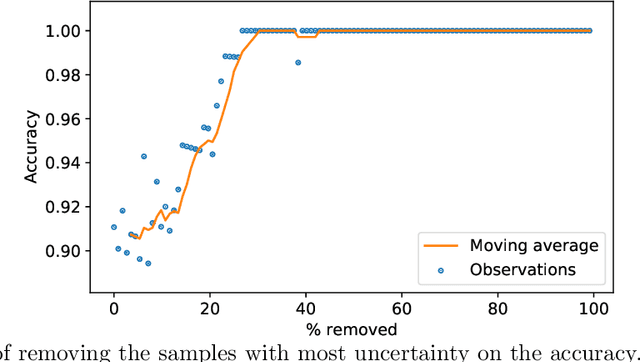

Abstract: Deep learning methods for ophthalmic diagnosis have shown considerable success in tasks like segmentation and classification. However, their widespread application is limited because the models are opaque and vulnerable to making a wrong decision in complicated cases. Explainability methods show the features that a system used to make a prediction, while uncertainty awareness is the ability of a system to highlight when it is not sure about the decision. This is one of the first studies using uncertainty and explanations for informed clinical decision making. We perform uncertainty analysis of a deep learning model for diagnosis of four retinal diseases - age-related macular degeneration (AMD), central serous retinopathy (CSR), diabetic retinopathy (DR), and macular hole (MH) - using images from a publicly available (OCTID) dataset. Monte Carlo (MC) dropout is used at test time to generate a distribution of parameters, and the predictions approximate the predictive posterior of a Bayesian model. A threshold is computed using the distribution, and uncertain cases can be referred to the ophthalmologist, thus avoiding an erroneous diagnosis. The features learned by the model are visualized using a proven attribution method from a previous study. The effects of uncertainty on model performance and the relationship between uncertainty and explainability are discussed in terms of clinical significance. The uncertainty information along with the heatmaps makes the system more trustworthy for use in clinical settings.
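The referral idea described in this abstract can be sketched as follows: class probabilities from several stochastic (dropout-enabled) forward passes are averaged, an uncertainty score is computed from the average, and cases above a threshold are flagged for review. This is only a minimal sketch under stated assumptions: predictive entropy as the uncertainty score, a threshold passed in by the caller rather than derived from a distribution, and the function name `summarize_mc_passes` are all choices made for illustration, not details taken from the paper.

```python
import math


def summarize_mc_passes(pass_probs, threshold):
    """Summarize class probabilities from repeated stochastic forward passes.

    pass_probs: list of probability vectors, one per dropout-enabled pass.
    threshold:  entropy value above which the case is referred (assumed given).
    Returns (predicted_class, predictive_entropy, refer_to_clinician).
    """
    n_passes = len(pass_probs)
    n_classes = len(pass_probs[0])
    # Average the per-pass probabilities to approximate the predictive posterior.
    mean = [sum(p[c] for p in pass_probs) / n_passes for c in range(n_classes)]
    # Predictive entropy of the averaged distribution (natural log).
    entropy = -sum(m * math.log(m) for m in mean if m > 0)
    predicted = max(range(n_classes), key=lambda c: mean[c])
    return predicted, entropy, entropy > threshold


# Hypothetical two-class outputs: passes that agree vs. passes that disagree.
print(summarize_mc_passes([[0.9, 0.1], [0.9, 0.1]], threshold=0.5))
print(summarize_mc_passes([[0.9, 0.1], [0.1, 0.9]], threshold=0.5))
```

In the second call the passes disagree, so the averaged distribution is close to uniform and the case is flagged, which is the behaviour the referral step relies on.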

DenseNet for Breast Tumor Classification in Mammographic Images

Jan 24, 2021

Abstract: Breast cancer is the most common invasive cancer in women, and the second main cause of death. Breast cancer screening is an efficient method to detect indeterminate breast lesions early. The common screening approaches for women are tomosynthesis and mammography images. However, traditional manual diagnosis requires an intense workload from pathologists, who are prone to diagnostic errors. Thus, the aim of this study is to build a deep convolutional neural network method for automatic detection, segmentation, and classification of breast lesions in mammography images. Based on deep learning, the Mask-CNN (RoIAlign) method was developed for feature selection and extraction, and the classification was carried out by the DenseNet architecture. Finally, the precision and accuracy of the model are evaluated by cross validation matrix and AUC curve. To summarize, the findings of this study may help improve diagnosis and efficiency in automatic tumor localization through medical image classification.

MRI Images, Brain Lesions and Deep Learning

Jan 14, 2021

Abstract: Medical brain image analysis is a necessary step in Computer Assisted/Aided Diagnosis (CAD) systems. Advancements in both hardware and software in the past few years have led to improved segmentation and classification of various diseases. In the present work, we review the published literature on systems and algorithms that allow for classification, identification, and detection of White Matter Hyperintensities (WMHs) in brain MRI images, specifically in cases of ischemic stroke and demyelinating diseases. For the selection criteria, we used bibliometric networks. Out of a total of 140 documents, we selected 38 articles that deal with the main objectives of this study. Based on the analysis and discussion of the reviewed documents, there is constant growth in the research and proposal of new deep learning models to achieve the highest accuracy and reliability in the segmentation of ischemic and demyelinating lesions. Models with high indicators (Dice Score, DSC: 0.99) were found, but with little practical application due to the use of small datasets and a lack of reproducibility. Therefore, the main conclusion is that multidisciplinary research groups should be established to overcome the gap between CAD developments and their complete utilization in the clinical environment.
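For readers unfamiliar with the Dice Score (DSC) cited above, it measures the overlap between a predicted and a reference segmentation as twice the intersection divided by the sum of the two mask sizes. The sketch below computes it for flattened binary masks; the function name and the convention of returning 1.0 when both masks are empty are assumptions made for this example.

```python
def dice_score(predicted_mask, reference_mask):
    """Dice similarity coefficient for two equal-length binary masks.

    Masks are flat sequences of 0/1 values (e.g. a flattened lesion mask).
    Assumption: two empty masks count as a perfect match (score 1.0).
    """
    intersection = sum(1 for p, r in zip(predicted_mask, reference_mask) if p and r)
    total = sum(1 for p in predicted_mask if p) + sum(1 for r in reference_mask if r)
    if total == 0:
        return 1.0
    return 2 * intersection / total


# Hypothetical 4-pixel masks: one pixel overlaps, three are marked in total.
print(dice_score([1, 1, 0, 0], [1, 0, 0, 0]))
```

A score of 0.99 therefore means the predicted and reference lesion masks coincide almost pixel for pixel, which is why such values on small datasets are treated with caution in the abstract.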

Deep Learning Based Computer-Aided Systems for Breast Cancer Imaging: A Critical Review

Sep 30, 2020

Abstract: This paper provides a critical review of the literature on deep learning applications in breast tumor diagnosis using ultrasound and mammography images. It also summarizes recent advances in computer-aided diagnosis (CAD) systems, which make use of new deep learning methods to automatically recognize images and improve the accuracy of diagnoses made by radiologists. This review is based upon literature published in the past decade (January 2010 to January 2020). The main findings in the classification process reveal that new DL-CAD methods are useful and effective screening tools for breast cancer, thus reducing the need for manual feature extraction. The breast tumor research community can utilize this survey as a basis for their current and future studies.

Quantitative and Qualitative Evaluation of Explainable Deep Learning Methods for Ophthalmic Diagnosis

Sep 26, 2020

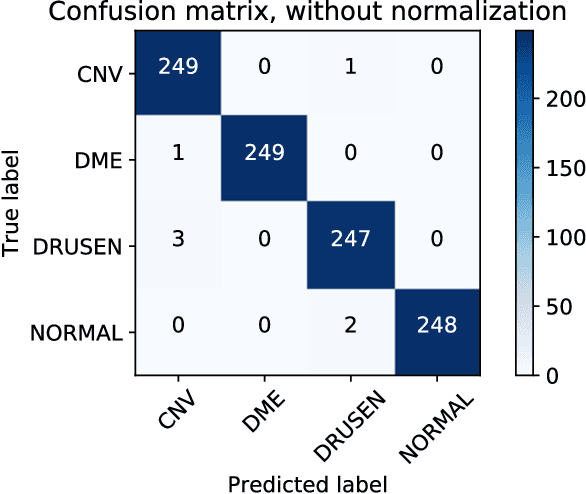

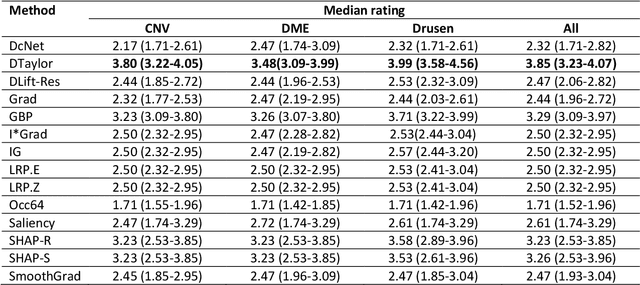

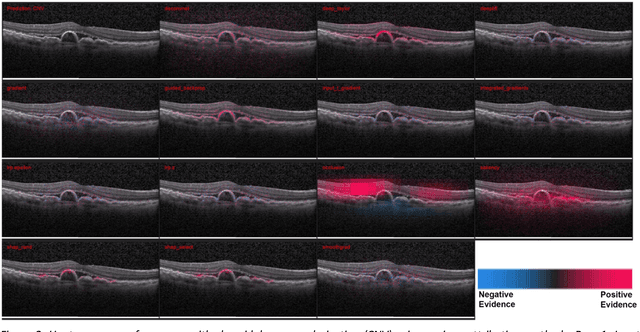

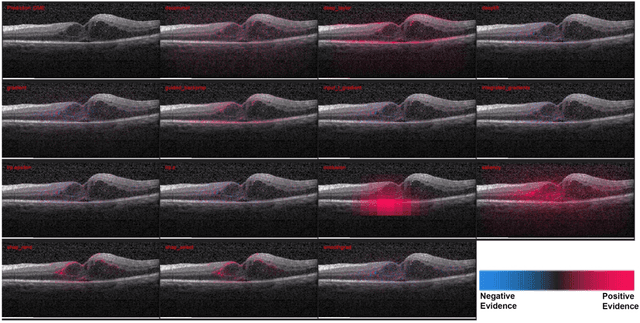

Abstract: Background: The lack of explanations for the decisions made by algorithms such as deep learning has hampered their acceptance by the clinical community despite highly accurate results on multiple problems. Recently, attribution methods have emerged for explaining deep learning models, and they have been tested on medical imaging problems. The performance of attribution methods is compared on standard machine learning datasets and not on medical images. In this study, we perform a comparative analysis to determine the most suitable explainability method for retinal OCT diagnosis. Methods: A commonly used deep learning model known as Inception v3 was trained to diagnose 3 retinal diseases - choroidal neovascularization (CNV), diabetic macular edema (DME), and drusen. The explanations from 13 different attribution methods were rated by a panel of 14 clinicians for clinical significance. Feedback was obtained from the clinicians regarding the current and future scope of such methods. Results: An attribution method based on a Taylor series expansion, called Deep Taylor, was rated the highest by clinicians with a median rating of 3.85/5. It was followed by two other attribution methods, Guided backpropagation and SHAP (SHapley Additive exPlanations). Conclusion: Explanations of deep learning models can make them more transparent for clinical diagnosis. This study compared different explanation methods in the context of retinal OCT diagnosis and found that the best performing method may not be the one considered best for other deep learning tasks. Overall, there was a high degree of acceptance from the clinicians surveyed in the study. Keywords: explainable AI, deep learning, machine learning, image processing, Optical coherence tomography, retina, Diabetic macular edema, Choroidal Neovascularization, Drusen
