Vania V. Estrela

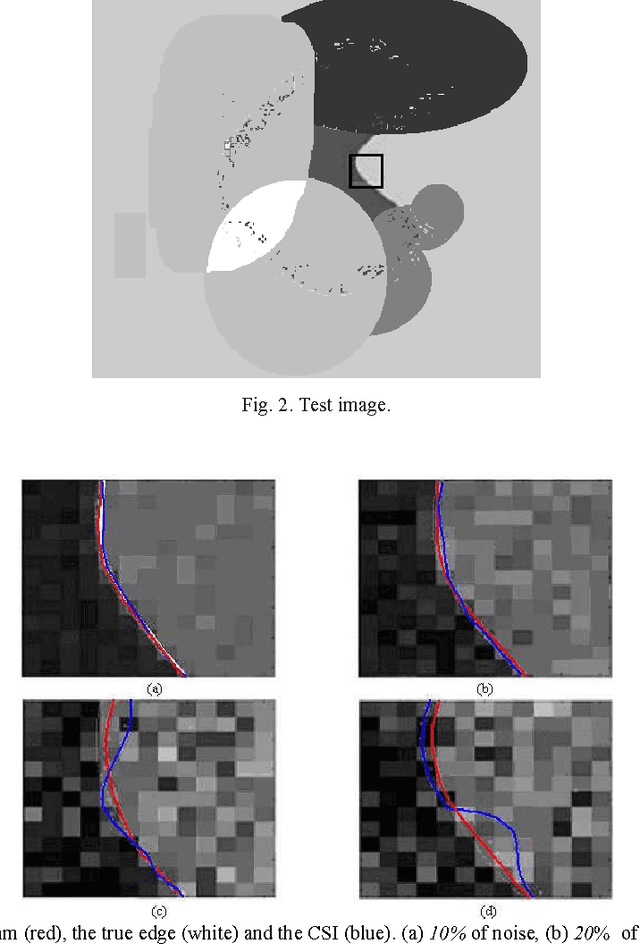

Oriented bounding boxes using multiresolution contours for fast interference detection of arbitrary geometry objects

Nov 11, 2016

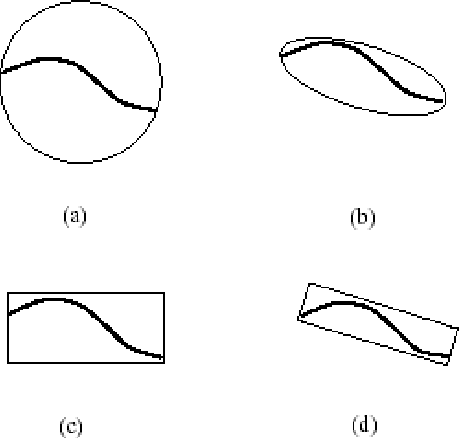

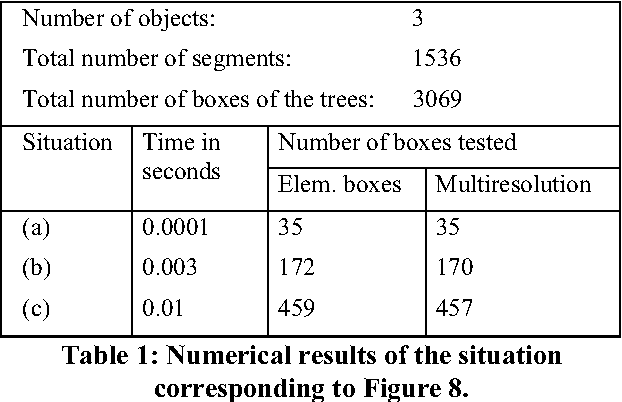

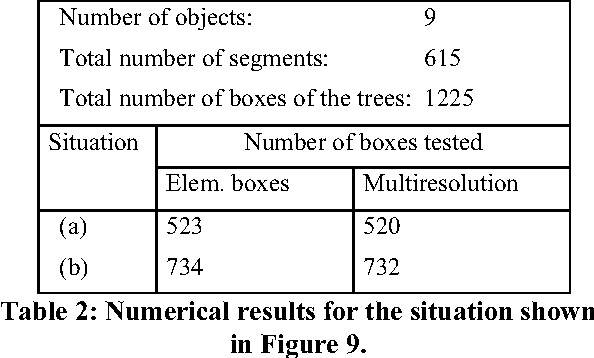

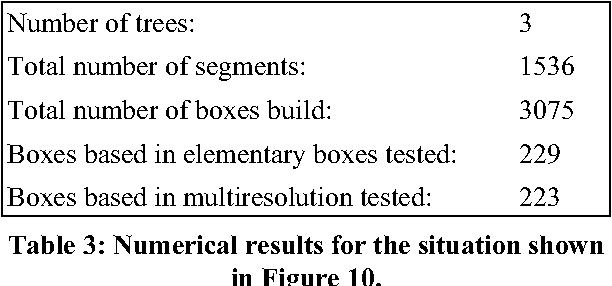

Abstract: Interference detection of arbitrary geometric objects is not a trivial task due to the heavy computational load imposed by implementation issues. Hierarchically structured bounding boxes help to quickly isolate the contour segments in interference. In this paper, a new approach is introduced to treat the interference detection problem involving the representation of arbitrarily shaped objects. The proposed method searches for the best possible way to represent contours by means of hierarchically structured rectangular oriented bounding boxes. The technique handles 2D object boundaries defined by closed B-spline curves with roughness details. Each oriented box is adapted and fitted to the segments of the contour using second-order statistical indicators computed from some elements of the contour segments in a multiresolution framework. The method is efficient and robust for real-time 2D animations, and it can deal with smooth curves as well as polygonal approximations. Results are presented to illustrate the performance of the new method.

* 8 pages, 10 figures
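The idea of fitting an oriented box from second-order statistics can be sketched as follows. This is a minimal illustration, not the paper's algorithm: it assumes a contour segment is given as a list of sampled 2D points, takes the box orientation from the principal axis of the sample covariance matrix, and ignores the B-spline representation and the multiresolution hierarchy. The name `fit_obb` is hypothetical.

```python
import math

def fit_obb(points):
    """Fit an oriented bounding box to 2D points from their mean and covariance.

    Returns (center, angle_in_radians, (half_length, half_width)).
    Illustrative sketch only; assumes at least two distinct points.
    """
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    # Second-order statistics: entries of the 2x2 covariance matrix.
    cxx = sum((x - mx) ** 2 for x, _ in points) / n
    cyy = sum((y - my) ** 2 for _, y in points) / n
    cxy = sum((x - mx) * (y - my) for x, y in points) / n
    # Orientation of the principal (largest-variance) axis.
    theta = 0.5 * math.atan2(2.0 * cxy, cxx - cyy)
    ux, uy = math.cos(theta), math.sin(theta)
    # Project the centred points onto the principal axis and its normal.
    along = [(x - mx) * ux + (y - my) * uy for x, y in points]
    across = [-(x - mx) * uy + (y - my) * ux for x, y in points]
    half_len = (max(along) - min(along)) / 2.0
    half_wid = (max(across) - min(across)) / 2.0
    # Box centre: the mean shifted to the midpoint of the projected extents.
    ca = (max(along) + min(along)) / 2.0
    cb = (max(across) + min(across)) / 2.0
    center = (mx + ca * ux - cb * uy, my + ca * uy + cb * ux)
    return center, theta, (half_len, half_wid)

# A contour segment sampled along a diagonal line.
segment = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0), (3.0, 3.0)]
center, angle, half_extents = fit_obb(segment)
```

For the diagonal segment the box is aligned with the line at 45 degrees and has near-zero width, whereas an axis-aligned box around the same points would be a 3 by 3 square.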

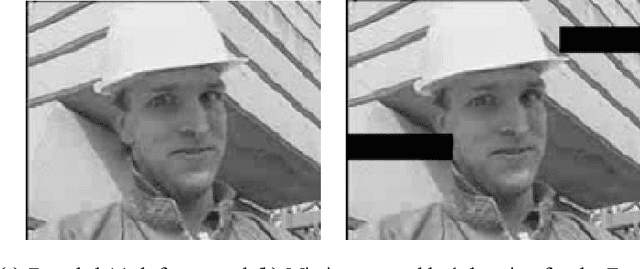

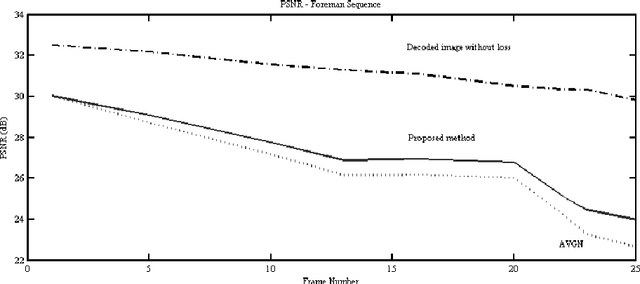

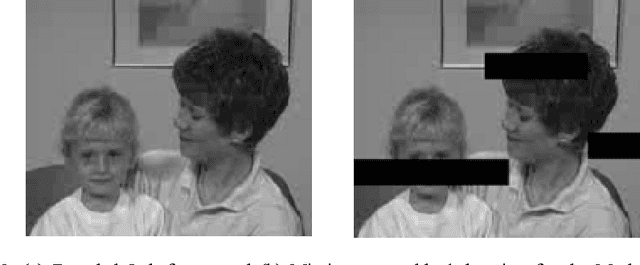

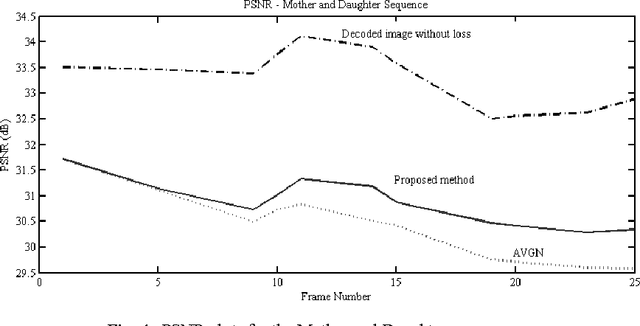

Error concealment by means of motion refinement and regularized Bregman divergence

Nov 10, 2016

Abstract: This work addresses the problem of error concealment in video transmission systems over noisy channels, employing Bregman divergences along with regularization. Error concealment aims to mitigate the effects of disturbances at the receiver caused by bit errors or cell loss in packet networks. Bregman regularization gives accurate answers after only a few iterations, with fast convergence, better accuracy, and stability. The technique is adaptive: the regularization functional is updated according to Bregman functions that change from iteration to iteration according to the nature of the neighborhood under study at iteration n. Numerical experiments show that high-quality regularization parameter estimates can be obtained. Convergence is sped up, and the estimation of the regularization parameter becomes less empirical and more automatic.

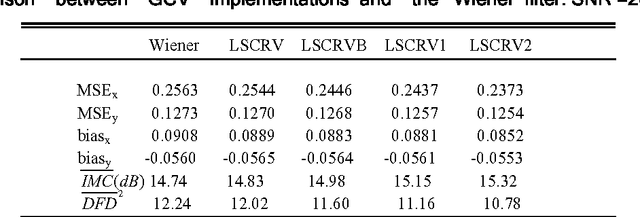

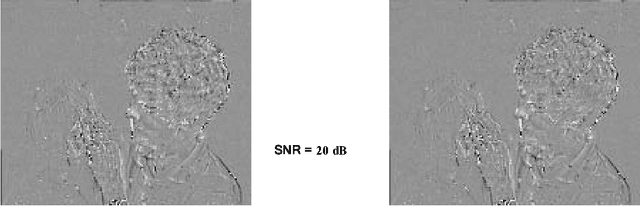

Regularized Pel-Recursive Motion Estimation Using Generalized Cross-Validation and Spatial Adaptation

Nov 04, 2016

Abstract: The computation of 2-D optical flow by means of regularized pel-recursive algorithms raises a host of issues, which include the treatment of outliers, motion discontinuities, and occlusion, among other problems. We propose a new approach that allows us to deal with these issues within a common framework. Our approach uses a technique called Generalized Cross-Validation to estimate the best regularization scheme for a given pixel. In our model, the regularization parameter is a matrix whose entries can account for diverse sources of error. The estimation of the motion vectors takes into consideration local properties of the image, following a spatially adaptive approach in which each moving pixel is assumed to have its own regularization matrix. Preliminary experiments indicate that this approach provides robust estimates of the optical flow.

Adaptive mixed norm optical flow estimation

Nov 03, 2016

Abstract: The pel-recursive computation of 2-D optical flow has been extensively studied in computer vision to estimate motion from image sequences, but it still raises a wealth of issues, such as the treatment of outliers, motion discontinuities and occlusion. It relies on spatio-temporal brightness variations due to motion. Our proposed adaptive regularized approach deals with these issues within a common framework. It relies on the use of a data-driven technique called Mixed Norm (MN) to estimate the best motion vector for a given pixel. In our model, various types of noise can be handled, representing different sources of error. The motion vector estimation takes into consideration local image properties and it results from the minimization of a mixed norm functional with a regularization parameter depending on the kurtosis. This parameter determines the relative importance of the fourth norm and makes the functional convex. The main advantage of the developed procedure is that no knowledge of the noise distribution is necessary. Experiments indicate that this approach provides robust estimates of the optical flow.

* 8 pages, 4 figures. arXiv admin note: text overlap with arXiv:1403.7365

EM-Based Mixture Models Applied to Video Event Detection

Oct 10, 2016

Abstract: Surveillance system (SS) development requires hi-tech support to overcome the shortcomings related to the massive quantity of visual information that SSs produce. Anything beyond limited human monitoring has become impossible because of its physical and economic implications, and a move toward automated surveillance is the only way out. For a computer vision system, automatic video event comprehension is a challenging task due to motion clutter, event understanding under complex scenes, multilevel semantic event inference, contextualization of events and views obtained from multiple cameras, unevenness of motion scales, shape changes, occlusions, and object interactions, among many other impairments. In recent years, state-of-the-art models for video event classification and recognition have included modeling events to discern context, detecting incidents with only one camera, low-level feature extraction and description, and high-level semantic event classification and recognition. Even so, it is still very burdensome to retrieve or label a specific video part relying solely on its content. Principal component analysis (PCA) has been widely known and used, but when combined with other techniques such as the expectation-maximization (EM) algorithm its computation becomes more efficient. This chapter introduces advances associated with Probabilistic PCA (PPCA) analysis of video events, and it also looks closely at ways and metrics to evaluate these less intensive EM implementations of PCA and kernel PCA (KPCA).

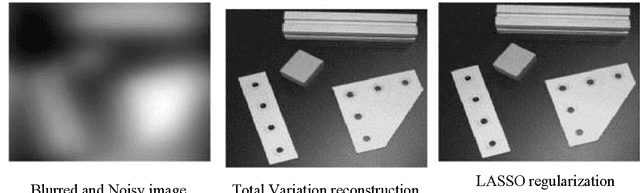

Total Variation Applications in Computer Vision

Mar 31, 2016

Abstract: The objectives of this chapter are: (i) to introduce a concise overview of regularization; (ii) to define and to explain the role of a particular type of regularization called total variation norm (TV-norm) in computer vision tasks; (iii) to set up a brief discussion on the mathematical background of TV methods; and (iv) to establish a relationship between models and a few existing methods to solve problems cast as TV-norm. For the most part, image-processing algorithms blur the edges of the estimated images; however, TV regularization preserves the edges with no prior information on the observed and the original images. The scalar regularization parameter λ controls the amount of regularization allowed and is essential to obtaining a high-quality regularized output. A wide-ranging review of several ways to put TV regularization into practice, together with its advantages and limitations, is presented.
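The edge-preserving behavior mentioned in the abstract can be illustrated with a small sketch. It assumes the simplest discrete setting, a single row of pixels, where the TV-norm is the sum of absolute differences between neighbors. The quadratic penalty is not from the abstract; it is included here only as a familiar smoothing penalty for comparison, and both function names are hypothetical.

```python
def tv_penalty(signal):
    """Discrete 1D total variation: sum of absolute neighbor differences."""
    return sum(abs(b - a) for a, b in zip(signal, signal[1:]))

def quadratic_penalty(signal):
    """Sum of squared neighbor differences (a typical smoothing penalty)."""
    return sum((b - a) ** 2 for a, b in zip(signal, signal[1:]))

# The same unit jump, once as a sharp edge and once blurred into a ramp.
sharp_edge = [0.0, 0.0, 1.0, 1.0]
blurred_edge = [0.0, 1.0 / 3.0, 2.0 / 3.0, 1.0]

for name, row in (("sharp", sharp_edge), ("blurred", blurred_edge)):
    print(name, tv_penalty(row), quadratic_penalty(row))
```

Both rows have essentially the same TV penalty, so a TV regularizer has no incentive to smear the edge, while the quadratic penalty is about three times lower for the blurred row and therefore favors blurring.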

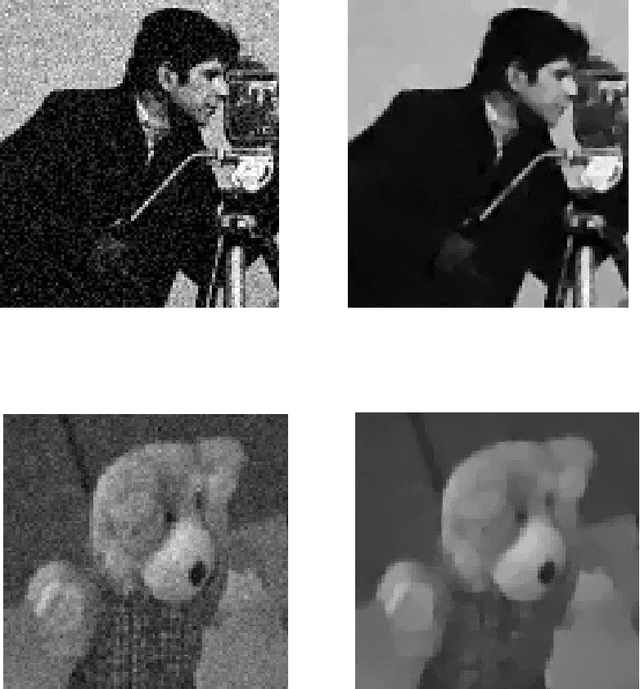

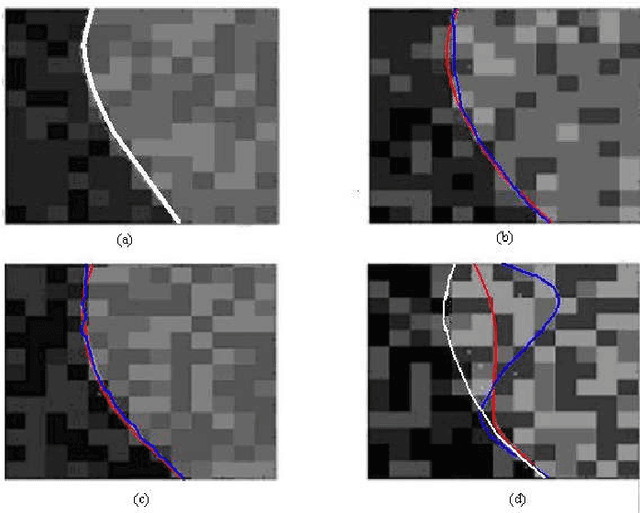

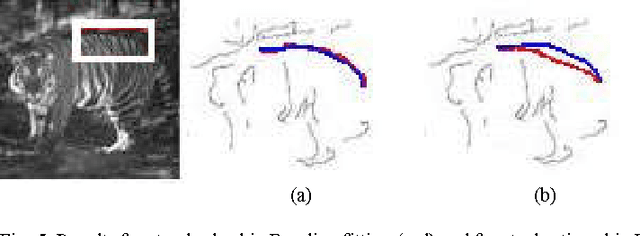

Sub-pixel accuracy edge fitting by means of B-spline

Mar 31, 2016

Abstract: Local perturbations around contours strongly disturb the final result of computer vision tasks. It is common to introduce a priori information in the estimation process. Improvement can be achieved via a deformable model such as the snake model. In recent works, the deformable contour is modeled by means of B-spline snakes, which allow local control, concise representation, and the use of fewer parameters. The estimation of the sub-pixel edges using a global B-spline model relies on the global determination of the contour within a maximum likelihood framework, using the observed data likelihood. This procedure guarantees that the noisiest data will be filtered out. The data likelihood is computed from the observation model, which includes both orientation and position information. Comparative experiments between this algorithm and classical spline interpolation have shown that the proposed algorithm outperforms the classical approach for Gaussian and Salt & Pepper noise.

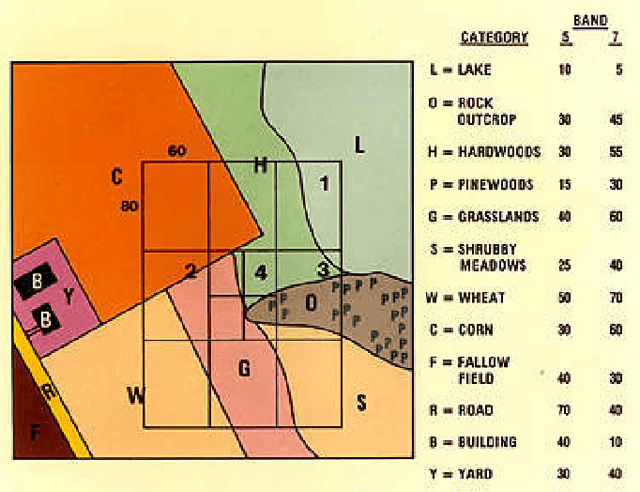

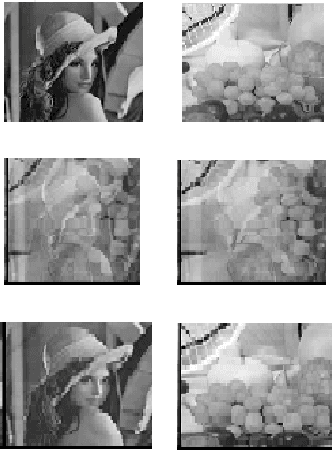

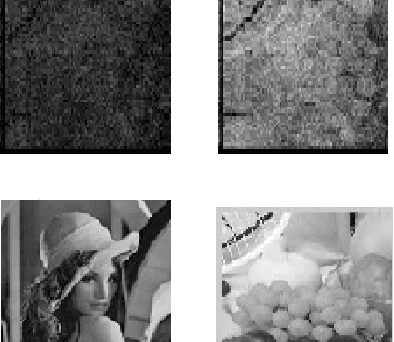

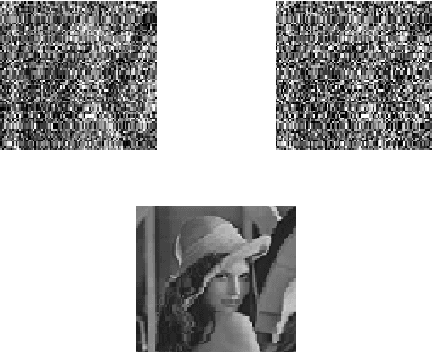

Blind signal separation and identification of mixtures of images

Mar 26, 2016

Abstract: In this paper, a fresh procedure to handle image mixtures by means of blind signal separation, relying on a combination of second-order and higher-order statistics techniques, is introduced. The problem of blind signal separation is reassigned to the wavelet domain. The key idea behind this method is that the image mixture can be decomposed into the sum of uncorrelated and/or independent sub-bands using the wavelet transform. Initially, the observed image is pre-whitened in the space domain. Afterwards, an initial separation matrix is estimated from the second-order statistics de-correlation model in the wavelet domain. Later, this matrix is used as the initial separation matrix for the higher-order statistics stage in order to find the best separation matrix. The suggested algorithm was tested using natural images. Experiments have confirmed that the proposed process provides promising outcomes in identifying an image from noisy mixtures of images.
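The pre-whitening step can be sketched for the simplest case of two observed mixtures. This is an illustration under stated assumptions, not the paper's procedure: each mixture is taken as a flattened list of pixel values, the two mixtures are assumed not to be perfectly correlated, and the wavelet-domain and higher-order stages are left out. The name `whiten_pair` is hypothetical.

```python
import math

def whiten_pair(x1, x2):
    """Whiten two mixtures so they have zero mean, unit variance, zero correlation.

    Uses the closed-form eigendecomposition of the 2x2 covariance matrix.
    Illustrative sketch; assumes the mixtures are not perfectly correlated.
    """
    n = len(x1)
    m1, m2 = sum(x1) / n, sum(x2) / n
    c1 = [v - m1 for v in x1]
    c2 = [v - m2 for v in x2]
    # Covariance matrix [[a, b], [b, c]] of the centred mixtures.
    a = sum(u * u for u in c1) / n
    c = sum(v * v for v in c2) / n
    b = sum(u * v for u, v in zip(c1, c2)) / n
    # Rotation angle that diagonalizes the covariance matrix.
    theta = 0.5 * math.atan2(2.0 * b, a - c)
    ct, st = math.cos(theta), math.sin(theta)
    # Eigenvalues: variances along the two rotated axes.
    lam1 = a * ct * ct + 2.0 * b * ct * st + c * st * st
    lam2 = a * st * st - 2.0 * b * ct * st + c * ct * ct
    # Rotate onto the eigenvectors, then rescale each axis to unit variance.
    z1 = [(ct * u + st * v) / math.sqrt(lam1) for u, v in zip(c1, c2)]
    z2 = [(-st * u + ct * v) / math.sqrt(lam2) for u, v in zip(c1, c2)]
    return z1, z2

# Two toy "images" (flattened) and two linear mixtures of them.
s1 = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
s2 = [1.0, 0.0, 1.0, 0.0, 1.0, 0.0]
x1 = [0.6 * p + 0.4 * q for p, q in zip(s1, s2)]
x2 = [0.3 * p + 0.7 * q for p, q in zip(s1, s2)]
z1, z2 = whiten_pair(x1, x2)
```

After whitening, the remaining unknown is only a rotation, which is what a later separation stage would have to estimate.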
