Valentin Thorey

RobustSleepNet: Transfer learning for automated sleep staging at scale

Jan 07, 2021

Abstract: Sleep disorder diagnosis relies on the analysis of polysomnography (PSG) records. Sleep stages are systematically determined as a preliminary step of this examination. In practice, sleep stage classification relies on the visual inspection of 30-second epochs of polysomnography signals. Numerous automatic approaches have been developed to replace this tedious and expensive task. Although these methods demonstrated better performance than human sleep experts on specific datasets, they remain largely unused in sleep clinics. The main reason is that each sleep clinic uses a specific PSG montage that most automatic approaches are unable to handle out-of-the-box. Moreover, even when the PSG montage is compatible, publications have shown that automatic approaches perform poorly on unseen data with different demographics. To address these issues, we introduce RobustSleepNet, a deep learning model for automatic sleep stage classification able to handle arbitrary PSG montages. We trained and evaluated this model in a leave-one-out-dataset fashion on a large corpus of 8 heterogeneous sleep staging datasets to make it robust to demographic changes. When evaluated on an unseen dataset, RobustSleepNet reaches 97% of the F1 of a model trained specifically on this dataset. We then show that finetuning RobustSleepNet, using a part of the unseen dataset, increases the F1 by 2% when compared to a model trained specifically for this dataset. Hence, RobustSleepNet unlocks the possibility to perform high-quality out-of-the-box automatic sleep staging with any clinical setup. It can also be finetuned to reach a state-of-the-art level of performance on a specific population.
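The leave-one-out-dataset protocol described in this abstract can be illustrated with a minimal sketch. It is not the authors' code: the dataset names and record ids are placeholders, and `leave_one_dataset_out` and `relative_f1` are hypothetical helpers introduced only to show how each dataset is held out in turn and how a figure such as "97% of the F1" would be computed as a ratio.

```python
from typing import Dict, Iterator, List, Tuple


def leave_one_dataset_out(
    datasets: Dict[str, List[str]],
) -> Iterator[Tuple[str, List[str], List[str]]]:
    """Yield (held_out_name, train_records, test_records) for each dataset.

    Every dataset is used once as the unseen test set while the records
    of all remaining datasets are pooled for training.
    """
    for held_out in datasets:
        train = [
            record
            for name, records in datasets.items()
            if name != held_out
            for record in records
        ]
        yield held_out, train, list(datasets[held_out])


def relative_f1(transfer_f1: float, reference_f1: float) -> float:
    """F1 of the transferred model as a fraction of the dataset-specific F1."""
    return transfer_f1 / reference_f1


# Hypothetical corpus: names and record ids are placeholders, not real data.
corpus = {
    "dataset_a": ["a1", "a2"],
    "dataset_b": ["b1"],
    "dataset_c": ["c1", "c2", "c3"],
}
splits = list(leave_one_dataset_out(corpus))
for held_out, train, test in splits:
    print(held_out, len(train), len(test))
```

Splitting by dataset rather than by record keeps every recording from the held-out clinic out of training, which is what makes the evaluation a test of transfer to an unseen setup.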

Dreem Open Datasets: Multi-Scored Sleep Datasets to compare Human and Automated sleep staging

Nov 12, 2019

Abstract: Sleep stage classification constitutes an important element of sleep disorder diagnosis. It relies on the visual inspection of polysomnography records by trained sleep technologists. Automated approaches have been designed to alleviate this resource-intensive task. However, such approaches are usually compared to a single human scorer annotation despite an inter-rater agreement of only about 85%. The present study introduces two publicly available datasets, DOD-H including 25 healthy volunteers and DOD-O including 55 patients suffering from obstructive sleep apnea (OSA). Both datasets have been scored by 5 sleep technologists from different sleep centers. We developed a framework to compare automated approaches to a consensus of multiple human scorers. Using this framework, we benchmarked and compared the main literature approaches. We also developed and benchmarked a new deep learning method, SimpleSleepNet, inspired by the current state of the art. We demonstrated that many methods can reach human-level performance on both datasets. SimpleSleepNet achieved an F1 of 89.9% vs 86.8% on average for human scorers on DOD-H, and an F1 of 88.3% vs 84.8% on DOD-O. Our study highlights that state-of-the-art automated sleep staging outperforms human scorer performance for healthy volunteers and patients suffering from OSA. Consideration could be given to using automated approaches in the clinical setting.
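One simple way to build a consensus from several scorers is an epoch-wise majority vote, sketched below. This is an assumption for illustration only: the abstract does not specify the consensus rule, and the tie-break used here (the tied label that appears first in scorer order) is arbitrary. The function name `consensus_hypnogram` and the toy labels are hypothetical.

```python
from collections import Counter
from typing import List, Sequence


def consensus_hypnogram(scorings: Sequence[Sequence[str]]) -> List[str]:
    """Epoch-wise majority vote over several scorers' hypnograms.

    Ties are broken in favour of the tied label that appears first in
    scorer order (an arbitrary choice made for this sketch).
    """
    if not scorings:
        raise ValueError("at least one scoring is required")
    n_epochs = len(scorings[0])
    if any(len(scoring) != n_epochs for scoring in scorings):
        raise ValueError("all scorings must cover the same epochs")

    consensus = []
    for epoch_labels in zip(*scorings):
        counts = Counter(epoch_labels)
        top_count = max(counts.values())
        consensus.append(
            next(label for label in epoch_labels if counts[label] == top_count)
        )
    return consensus


# Toy example: 5 scorers, 4 epochs (labels are illustrative only).
scorings = [
    ["W", "N1", "N2", "REM"],
    ["W", "N2", "N2", "REM"],
    ["W", "N1", "N3", "N1"],
    ["N1", "N2", "N2", "REM"],
    ["W", "N1", "N3", "REM"],
]
print(consensus_hypnogram(scorings))  # ['W', 'N1', 'N2', 'REM']
```

Comparing a model against such a consensus, rather than against one scorer, avoids penalizing it for disagreeing with a single annotator's idiosyncrasies.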

AI vs Humans for the diagnosis of sleep apnea

Jun 20, 2019

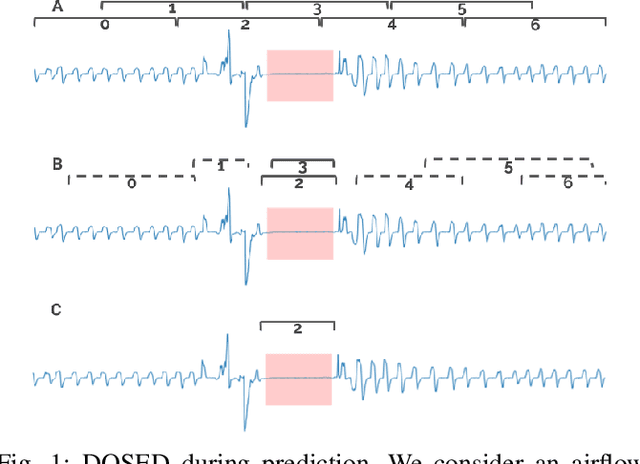

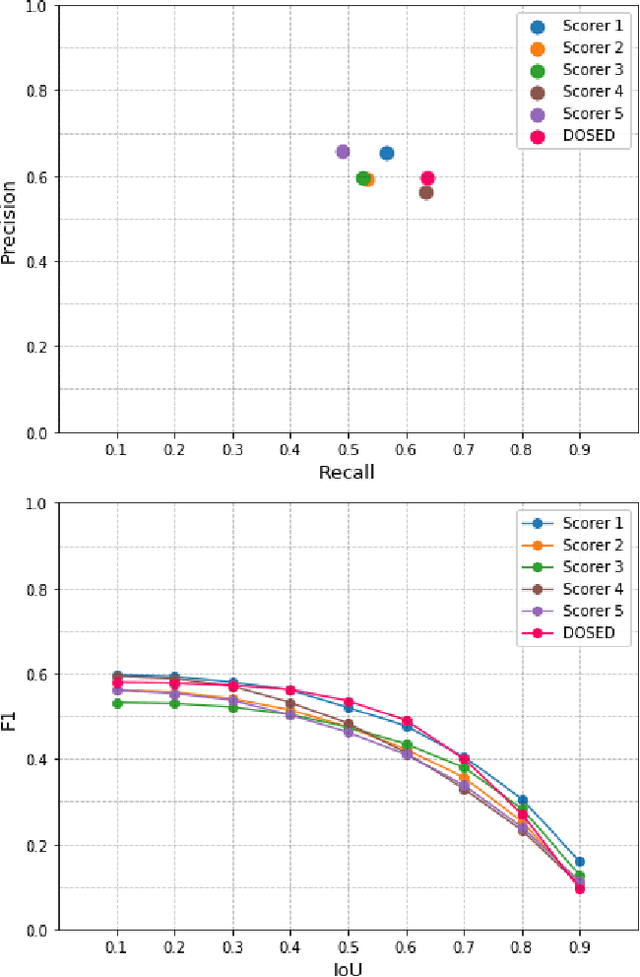

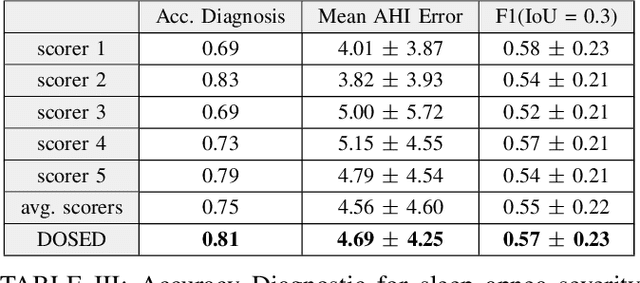

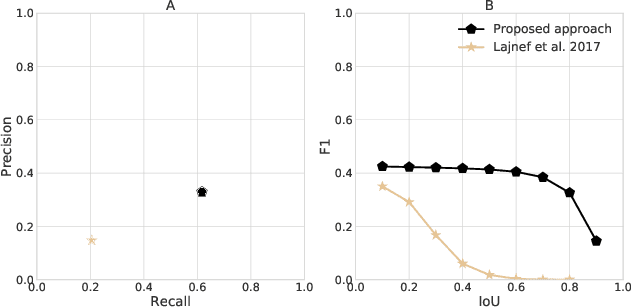

Abstract: Polysomnography (PSG) is the gold standard for diagnosing obstructive sleep apnea (OSA). It allows monitoring of breathing events throughout the night. The detection of these events is usually done by trained sleep experts. However, this task is tedious, highly time-consuming and subject to substantial inter-scorer variability. In this study, we adapted our state-of-the-art deep learning method for sleep event detection, DOSED, to the detection of sleep breathing events in PSG for the diagnosis of OSA. We used a dataset of 52 PSG recordings with apnea-hypopnea event scoring from 5 trained sleep experts. We assessed the performance of the automatic approach and compared it to the inter-scorer performance for both the diagnosis of OSA severity and, at the microscale, for the detection of single breathing events. We observed that human sleep experts reached an average accuracy of 75% while the automatic approach reached 81% for sleep apnea severity diagnosis. The F1 score for individual event detection was 0.55 for experts and 0.57 for the automatic approach, on average. These results demonstrate that the automatic approach can perform at a sleep expert level for the diagnosis of OSA.
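OSA severity is commonly derived from the apnea-hypopnea index (AHI), the number of scored breathing events per hour of sleep. The sketch below assumes the conventional adult cut-offs of 5, 15 and 30 events per hour; the abstract does not state which thresholds or severity classes the study used, so the function names and the class labels are illustrative assumptions.

```python
def apnea_hypopnea_index(n_events: int, total_sleep_time_hours: float) -> float:
    """Number of apnea and hypopnea events per hour of sleep."""
    if total_sleep_time_hours <= 0:
        raise ValueError("total sleep time must be positive")
    return n_events / total_sleep_time_hours


def osa_severity(ahi: float) -> str:
    """Map an AHI to a severity class using conventional adult cut-offs.

    The thresholds (5, 15, 30 events/hour) are an assumption of this
    sketch, not values taken from the abstract.
    """
    if ahi < 5:
        return "none"
    if ahi < 15:
        return "mild"
    if ahi < 30:
        return "moderate"
    return "severe"


# Hypothetical night: 84 scored events over 7 hours of sleep.
ahi = apnea_hypopnea_index(84, 7.0)
print(ahi, osa_severity(ahi))  # 12.0 mild
```

Because severity is a thresholded count, two scorers who disagree on a handful of borderline events can land in different classes, which is one reason severity accuracy and event-level F1 are reported separately.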

Towards a Flexible Deep Learning Method for Automatic Detection of Clinically Relevant Multi-Modal Events in the Polysomnogram

May 16, 2019

Abstract: Much attention has been given to automatic sleep staging algorithms in past years, but the detection of discrete events in sleep studies is also crucial for precise characterization of sleep patterns and possible diagnosis of sleep disorders. We propose here a deep learning model for automatic detection and annotation of arousals and leg movements. Both of these are commonly seen during normal sleep, while an excessive amount of either is linked to disrupted sleep patterns, excessive daytime sleepiness impacting quality of life, and various sleep disorders. Our model was trained on 1,485 subjects and tested on 1,000 separate recordings of sleep. We tested two different experimental setups and found optimal arousal detection was attained by including a recurrent neural network module in our default model with a dynamic default event window (F1 = 0.75), while optimal leg movement detection was attained using a static event window (F1 = 0.65). Our work shows promise while still allowing for improvements. Specifically, future research will explore the proposed model as a general-purpose sleep analysis model.

DOSED: a deep learning approach to detect multiple sleep micro-events in EEG signal

Dec 07, 2018

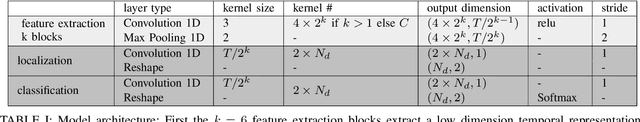

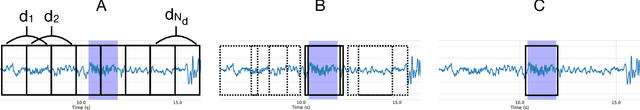

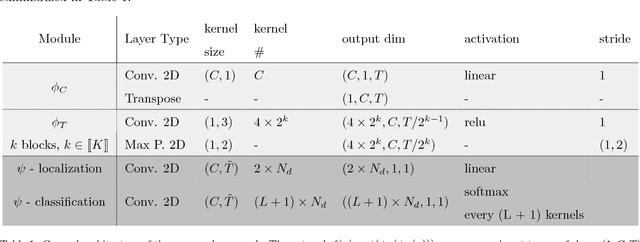

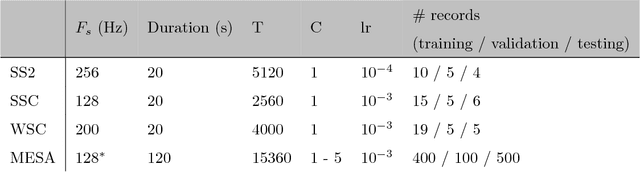

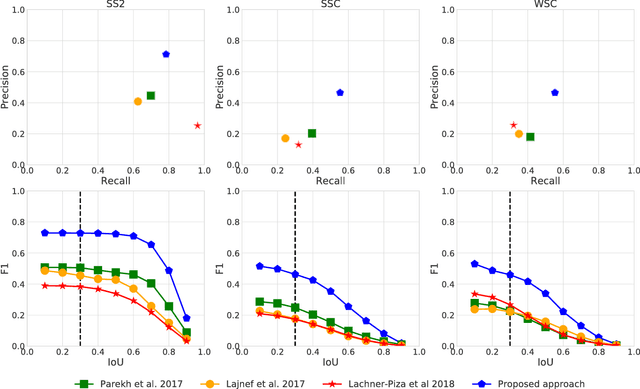

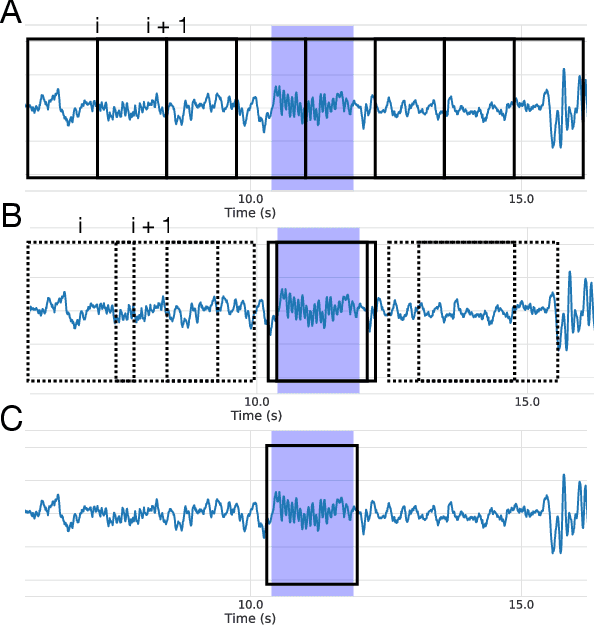

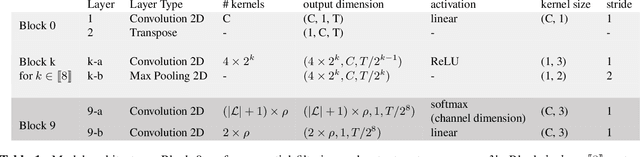

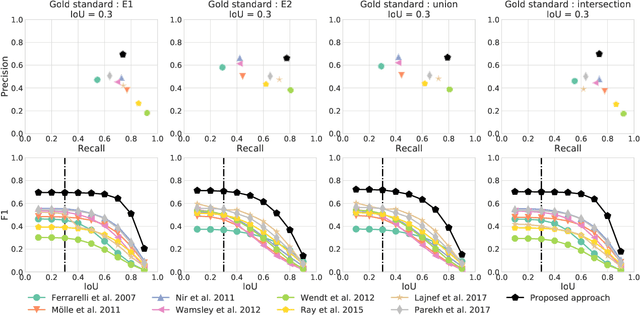

Abstract: Background: Electroencephalography (EEG) monitors brain activity during sleep and is used to identify sleep disorders. In sleep medicine, clinicians interpret raw EEG signals in so-called sleep stages, which are assigned by experts to every 30 s window of signal. For diagnosis, they also rely on shorter prototypical micro-architecture events which exhibit variable durations and shapes, such as spindles, K-complexes or arousals. Annotating such events is traditionally performed by a trained sleep expert, making the process time-consuming, tedious and subject to inter-scorer variability. To automate this procedure, various methods have been developed, yet these are event-specific and rely on the extraction of hand-crafted features. New method: We propose a novel deep learning architecture called Dreem One Shot Event Detector (DOSED). DOSED jointly predicts locations, durations and types of events in EEG time series. The proposed approach, applied here on sleep-related micro-architecture events, is inspired by object detectors developed for computer vision such as YOLO and SSD. It relies on a convolutional neural network that builds a feature representation from raw EEG signals, as well as two modules performing localization and classification respectively. Results and comparison with other methods: The proposed approach is tested on 4 datasets and 3 types of events (spindles, K-complexes, arousals) and compared to the current state-of-the-art detection algorithms. Conclusions: Results demonstrate the versatility of this new approach and improved performance compared to the current state-of-the-art detection methods.
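Detectors in the YOLO and SSD family are usually evaluated by matching predicted and annotated regions through their intersection over union (IoU). The sketch below shows a one-dimensional version for events on a time axis, followed by an event-level F1. It is an illustration under stated assumptions, not the DOSED implementation: the greedy matching, the `min_iou` threshold of 0.3, and the names `iou_1d` and `event_f1` are all hypothetical.

```python
from typing import List, Tuple

Event = Tuple[float, float]  # (start_s, end_s) in seconds


def iou_1d(a: Event, b: Event) -> float:
    """Intersection over union of two time intervals."""
    intersection = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - intersection
    return intersection / union if union > 0 else 0.0


def event_f1(
    predicted: List[Event], annotated: List[Event], min_iou: float = 0.3
) -> float:
    """Event-level F1 with greedy one-to-one matching on IoU.

    A prediction counts as a true positive if its best still-unmatched
    annotation overlaps it with IoU >= min_iou (threshold assumed here).
    """
    unmatched = list(annotated)
    true_positives = 0
    for pred in predicted:
        best = max(unmatched, key=lambda ev: iou_1d(pred, ev), default=None)
        if best is not None and iou_1d(pred, best) >= min_iou:
            unmatched.remove(best)
            true_positives += 1
    if true_positives == 0:
        return 0.0
    precision = true_positives / len(predicted)
    recall = true_positives / len(annotated)
    return 2 * precision * recall / (precision + recall)


# Toy example: one prediction overlaps an annotation, one does not.
annotated = [(10.0, 11.0), (20.0, 21.0)]
predicted = [(10.2, 11.2), (30.0, 31.0)]
print(event_f1(predicted, annotated))  # 0.5
```

Scoring at the event level, rather than per sample, rewards a detector for getting both the location and the duration of each event roughly right.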

A deep learning architecture to detect events in EEG signals during sleep

Jul 11, 2018

Abstract: Electroencephalography (EEG) during sleep is used by clinicians to evaluate various neurological disorders. In sleep medicine, it is relevant to detect macro-events (> 10 s) such as sleep stages, and micro-events (< 2 s) such as spindles and K-complexes. Annotating such events requires a trained sleep expert and is a time-consuming and tedious process with a large inter-scorer variability. Automatic algorithms have been developed to detect various types of events, but these are event-specific. We propose a deep learning method that jointly predicts locations, durations and types of events in EEG time series. It relies on a convolutional neural network that builds a feature representation from raw EEG signals. Numerical experiments demonstrate the efficiency of this new approach on various event detection tasks compared to current state-of-the-art, event-specific algorithms.
