DOSED: a deep learning approach to detect multiple sleep micro-events in EEG signal

Paper and Code

Dec 07, 2018

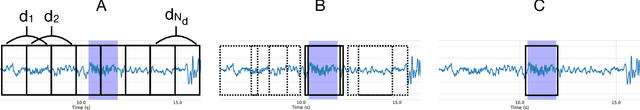

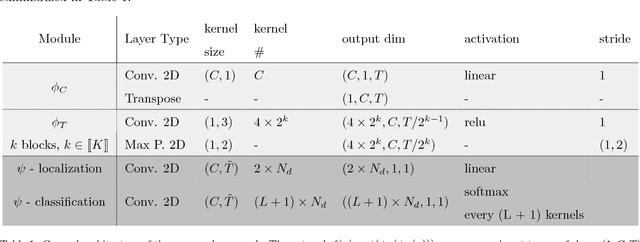

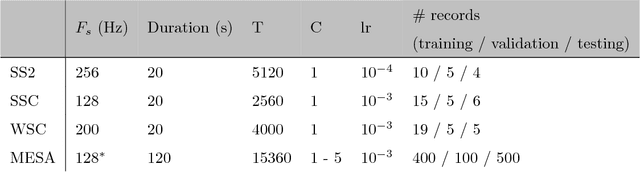

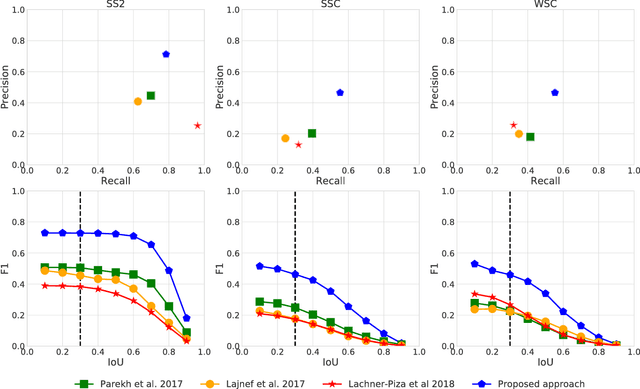

Background: Electroencephalography (EEG) monitors brain activity during sleep and is used to identify sleep disorders. In sleep medicine, clinicians interpret raw EEG signals in terms of so-called sleep stages, which experts assign to every 30 s window of signal. For diagnosis, they also rely on shorter prototypical micro-architecture events of variable duration and shape, such as spindles, K-complexes or arousals. Such events are traditionally annotated by a trained sleep expert, which makes the process time-consuming, tedious and subject to inter-scorer variability. Various methods have been developed to automate this procedure, yet they are event-specific and rely on the extraction of hand-crafted features.

New method: We propose a novel deep learning architecture called Dreem One Shot Event Detector (DOSED). DOSED jointly predicts locations, durations and types of events in EEG time series. The proposed approach, applied here to sleep-related micro-architecture events, is inspired by object detectors developed for computer vision such as YOLO and SSD. It relies on a convolutional neural network that builds a feature representation from raw EEG signals, as well as two modules that perform localization and classification respectively.

Results and comparison with other methods: The proposed approach is tested on 4 datasets and 3 types of events (spindles, K-complexes, arousals) and compared to the current state-of-the-art detection algorithms.

Conclusions: Results demonstrate the versatility of this new approach and improved performance compared to the current state-of-the-art detection methods.
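To make the detector analogy concrete, the sketch below shows how SSD-style post-processing might look when transposed from 2D boxes to 1D time intervals: candidate events are tiled over a window, each receives a score, and overlapping candidates are pruned by non-maximum suppression. This is an illustration only, not the DOSED implementation. The function names, the tiling scheme, the 50% overlap and the 0.3 IoU threshold are all assumptions, and the scores are hand-written stand-ins for the output of a classification module.

```python
def iou_1d(a, b):
    """Intersection over union of two (start, end) intervals, in seconds."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - inter
    return inter / union if union > 0 else 0.0


def default_events(window_s, duration_s, overlap=0.5):
    """Tile a window with fixed-duration candidate events.

    Hypothetical tiling scheme: consecutive candidates overlap by `overlap`.
    """
    step = duration_s * (1.0 - overlap)
    events = []
    start = 0.0
    # Small tolerance so the last candidate is kept despite float rounding.
    while start + duration_s <= window_s + 1e-9:
        events.append((start, start + duration_s))
        start += step
    return events


def non_max_suppression(events, scores, iou_threshold=0.3):
    """Keep the highest-scoring events, dropping any that overlap a kept one."""
    order = sorted(range(len(events)), key=lambda i: scores[i], reverse=True)
    kept = []
    for i in order:
        if all(iou_1d(events[i], events[j]) <= iou_threshold for j in kept):
            kept.append(i)
    return [(events[i], scores[i]) for i in kept]


# Usage: three 1 s candidates over a 2 s window, with made-up scores.
candidates = default_events(window_s=2.0, duration_s=1.0)
detections = non_max_suppression(candidates, scores=[0.9, 0.8, 0.7])
```

In a full detector, a localization module would additionally adjust the center and duration of each candidate before suppression; that step is omitted here to keep the sketch short.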
