Vaibhav B Sinha

DANTE: Deep AlterNations for Training nEural networks

Feb 01, 2019

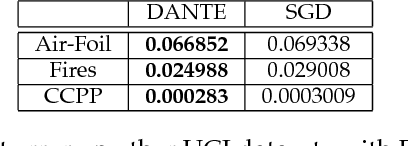

Abstract: We present DANTE, a novel method for training neural networks using the alternating minimization principle. DANTE provides an alternative perspective to the gradient-based backpropagation techniques commonly used to train deep networks. It uses an adaptation of quasi-convexity to cast training a neural network as a bi-quasi-convex optimization problem. We show that for neural network configurations with both differentiable (e.g. sigmoid) and non-differentiable (e.g. ReLU) activation functions, we can perform the alternations very effectively. DANTE can also be extended to networks with multiple hidden layers. In experiments on standard datasets, neural networks trained using the proposed method were competitive with traditional backpropagation techniques, both in the quality of the solution and in training speed.
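
The alternating minimization idea in the abstract can be illustrated with a minimal sketch, assuming a network with one sigmoid hidden layer, a linear output layer, and a mean squared error loss. This is not DANTE itself: the names `alternate`, `grads`, and `loss`, the plain gradient steps, the step size, and the "keep a step only if it lowers the loss" safeguard are all assumptions made for illustration, and the paper's quasi-convex subproblem solver is not reproduced here.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss(X, Y, W1, W2):
    # Mean squared error of a one-hidden-layer network with linear output.
    return float(np.mean((sigmoid(X @ W1) @ W2 - Y) ** 2))

def grads(X, Y, W1, W2):
    # Gradients of the loss above with respect to each weight matrix.
    H = sigmoid(X @ W1)
    err = 2.0 * (H @ W2 - Y) / Y.size
    gW2 = H.T @ err
    gW1 = X.T @ ((err @ W2.T) * H * (1.0 - H))
    return gW1, gW2

def alternate(X, Y, hidden=3, rounds=10, inner_steps=20, lr=0.1, seed=0):
    # Hypothetical helper: alternate between the two layers, updating one
    # while the other is held fixed.
    rng = np.random.default_rng(seed)
    W1 = 0.1 * rng.standard_normal((X.shape[1], hidden))
    W2 = 0.1 * rng.standard_normal((hidden, Y.shape[1]))
    history = [loss(X, Y, W1, W2)]
    for _round in range(rounds):
        for layer in (2, 1):  # output layer first, then hidden layer
            for _step in range(inner_steps):
                gW1, gW2 = grads(X, Y, W1, W2)
                cand1 = W1 - lr * gW1 if layer == 1 else W1
                cand2 = W2 - lr * gW2 if layer == 2 else W2
                # Safeguard (an assumption of this sketch): accept the step
                # only if it lowers the loss.
                if loss(X, Y, cand1, cand2) < loss(X, Y, W1, W2):
                    W1, W2 = cand1, cand2
        history.append(loss(X, Y, W1, W2))
    return W1, W2, history
```

Calling `alternate(X, X)` on a small random matrix `X` trains the network as an autoencoder; `history` records the loss after each full round of alternations, which makes it easy to see how much each round contributes.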

Fast Dawid-Skene: A Fast Vote Aggregation Scheme for Sentiment Classification

Sep 07, 2018

Abstract: Many real-world problems can now be effectively solved using supervised machine learning. A major roadblock is often the lack of an adequate quantity of labeled data for training. A possible solution is to assign the task of labeling data to a crowd, and then infer the true label using aggregation methods. A well-known approach for aggregation is the Dawid-Skene (DS) algorithm, which is based on the principle of Expectation-Maximization (EM). We propose a new simple, yet effective, EM-based algorithm, which can be interpreted as a "hard" version of DS, that allows much faster convergence while maintaining similar accuracy in aggregation. We show the use of this algorithm as a quick and effective technique for online, real-time sentiment annotation. We also prove that our algorithm converges to the estimated labels at a linear rate. Our experiments on standard datasets show a significant speedup in the time taken for aggregation, up to $\sim$8x over Dawid-Skene and $\sim$6x over other fast EM methods, at competitive accuracy. The code for the implementation of the algorithms can be found at https://github.com/GoodDeeds/Fast-Dawid-Skene
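
One way to read the "hard" version of DS described above is as an EM loop in which the E-step assigns each item a single most likely label instead of a distribution over labels. The following is a minimal sketch under that reading, not the implementation from the linked repository: the function name `hard_dawid_skene`, the `(item, worker, label)` input format, the majority-vote initialization, and the `smoothing` constant are assumptions made for illustration.

```python
import numpy as np

def hard_dawid_skene(votes, n_classes, max_iter=50, smoothing=1e-2):
    """votes: iterable of (item, worker, label) integer triples."""
    votes = np.asarray(votes, dtype=int)
    items, workers, labels = votes[:, 0], votes[:, 1], votes[:, 2]
    n_items, n_workers = items.max() + 1, workers.max() + 1

    # Initialise the label estimates with a majority vote per item.
    counts = np.zeros((n_items, n_classes))
    np.add.at(counts, (items, labels), 1)
    est = counts.argmax(axis=1)

    for _ in range(max_iter):
        # M-step: class priors and per-worker confusion matrices,
        # counted from the current hard labels (with additive smoothing).
        prior = np.bincount(est, minlength=n_classes) + smoothing
        prior = prior / prior.sum()
        conf = np.full((n_workers, n_classes, n_classes), smoothing)
        np.add.at(conf, (workers, est[items], labels), 1)
        conf /= conf.sum(axis=2, keepdims=True)

        # Hard E-step: pick the class with the highest log-posterior.
        log_post = np.tile(np.log(prior), (n_items, 1))
        for c in range(n_classes):
            np.add.at(log_post, (items, c), np.log(conf[workers, c, labels]))
        new_est = log_post.argmax(axis=1)

        # Stop once the hard labels no longer change.
        if np.array_equal(new_est, est):
            break
        est = new_est
    return est
```

For example, with three workers labeling three items, `hard_dawid_skene([(0, 0, 1), (0, 1, 1), (0, 2, 1), (1, 0, 0), (1, 1, 0), (1, 2, 0), (2, 0, 1), (2, 1, 1), (2, 2, 0)], n_classes=2)` returns one aggregated label per item. Because the labels are discrete, the loop can stop as soon as an iteration leaves them unchanged.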
