Vage Taamazyan

Michael Pokorny

Humanity's Last Exam

Jan 24, 2025

Abstract: Benchmarks are important tools for tracking the rapid advancements in large language model (LLM) capabilities. However, benchmarks are not keeping pace in difficulty: LLMs now achieve over 90% accuracy on popular benchmarks like MMLU, limiting informed measurement of state-of-the-art LLM capabilities. In response, we introduce Humanity's Last Exam (HLE), a multi-modal benchmark at the frontier of human knowledge, designed to be the final closed-ended academic benchmark of its kind with broad subject coverage. HLE consists of 3,000 questions across dozens of subjects, including mathematics, humanities, and the natural sciences. HLE is developed globally by subject-matter experts and consists of multiple-choice and short-answer questions suitable for automated grading. Each question has a known solution that is unambiguous and easily verifiable, but cannot be quickly answered via internet retrieval. State-of-the-art LLMs demonstrate low accuracy and calibration on HLE, highlighting a significant gap between current LLM capabilities and the expert human frontier on closed-ended academic questions. To inform research and policymaking upon a clear understanding of model capabilities, we publicly release HLE at https://lastexam.ai.

Collision Avoidance Metric for 3D Camera Evaluation

May 16, 2024

Abstract: 3D cameras have emerged as a critical source of information for applications in robotics and autonomous driving. These cameras provide robots with the ability to capture and utilize point clouds, enabling them to navigate their surroundings and avoid collisions with other objects. However, current standard camera evaluation metrics often fail to consider the specific application context. These metrics typically focus on measures like Chamfer distance (CD) or Earth Mover's Distance (EMD), which may not directly translate to performance in real-world scenarios. To address this limitation, we propose a novel metric for point cloud evaluation, specifically designed to assess the suitability of 3D cameras for the critical task of collision avoidance. This metric incorporates application-specific considerations and provides a more accurate measure of a camera's effectiveness in ensuring safe robot navigation.
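For readers unfamiliar with the Chamfer distance mentioned in this abstract, the following is a minimal sketch of one common convention (symmetric, using mean squared nearest-neighbor distances). It is not the collision avoidance metric proposed in the paper, and the function name `chamfer_distance` is an illustrative assumption; other works use unsquared distances or sums instead of means.

```python
import numpy as np


def chamfer_distance(a: np.ndarray, b: np.ndarray) -> float:
    """Symmetric Chamfer distance between point clouds a (N, 3) and b (M, 3).

    Assumed convention: mean squared distance from each point to its
    nearest neighbor in the other cloud, summed over both directions.
    """
    # Pairwise squared Euclidean distances, shape (N, M).
    diff = a[:, None, :] - b[None, :, :]
    d2 = np.sum(diff ** 2, axis=-1)
    # Nearest neighbor in b for each point of a, and vice versa.
    a_to_b = d2.min(axis=1).mean()
    b_to_a = d2.min(axis=0).mean()
    return float(a_to_b + b_to_a)


reference = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
shifted = reference + np.array([0.0, 0.0, 1.0])  # same cloud moved 1 unit in z
print(chamfer_distance(reference, reference))
print(chamfer_distance(reference, shifted))
```

Because the score averages over all points, a few missing points on a small obstacle can change it very little, which is one way such a metric may fail to reflect collision risk.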

Shape from Mixed Polarization

Jun 11, 2016

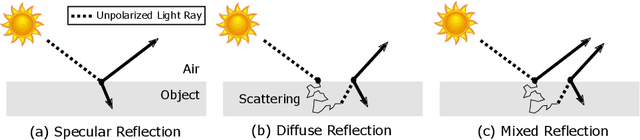

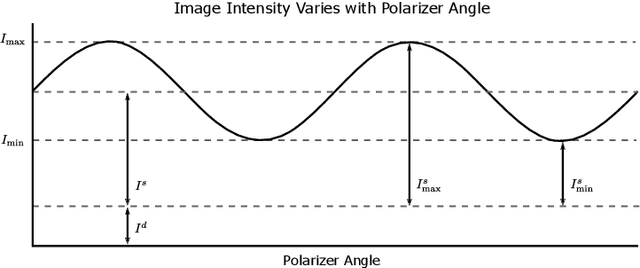

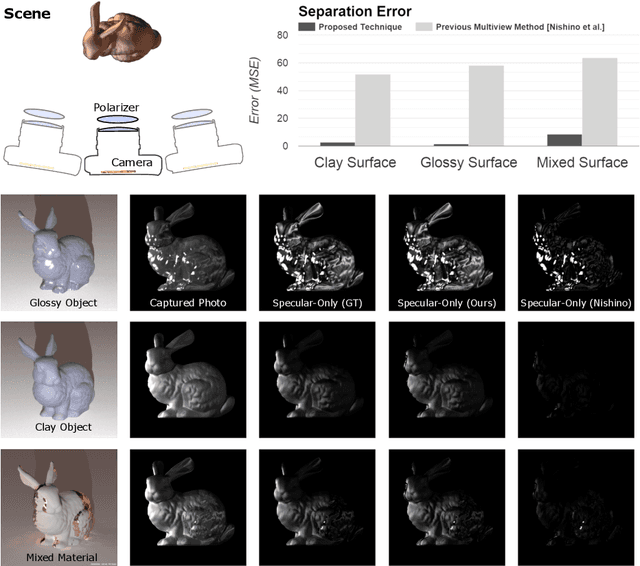

Abstract: Shape from Polarization (SfP) estimates surface normals using photos captured at different polarizer rotations. Fundamentally, the SfP model assumes that light is reflected either diffusely or specularly. However, this model is not valid for many real-world surfaces exhibiting a mixture of diffuse and specular properties. To address this challenge, previous methods have used a sequential solution: first, use an existing algorithm to separate the scene into diffuse and specular components, then apply the appropriate SfP model. In this paper, we propose a new method that jointly uses viewpoint and polarization data to holistically separate diffuse and specular components, recover refractive index, and ultimately recover 3D shape. By involving the physics of polarization in the separation process, we demonstrate competitive results with a benchmark method, while recovering additional information (e.g. refractive index).

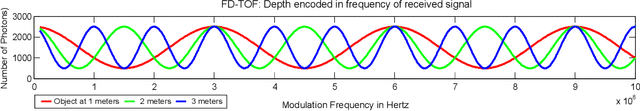

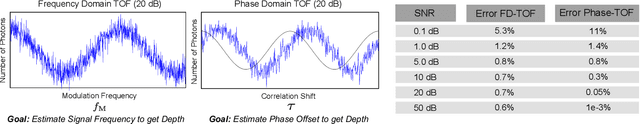

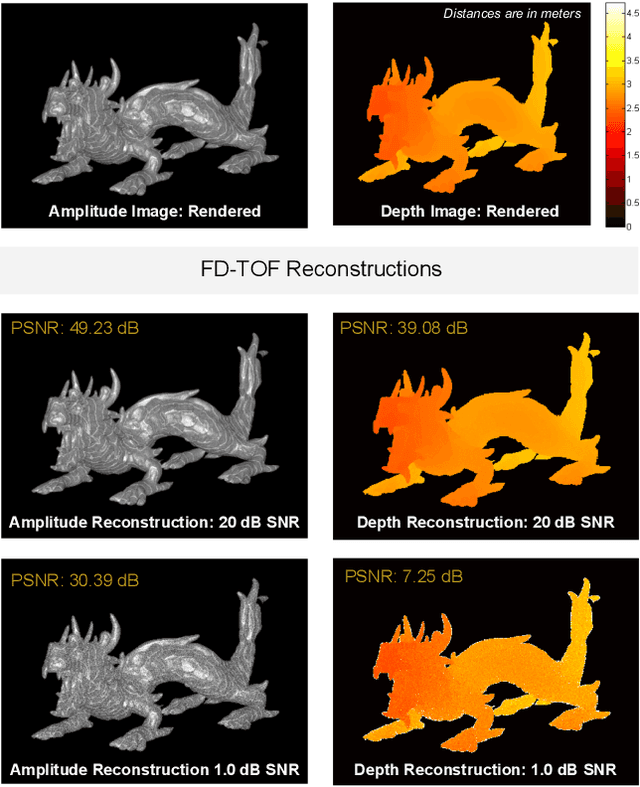

Frequency Domain TOF: Encoding Object Depth in Modulation Frequency

Mar 05, 2015

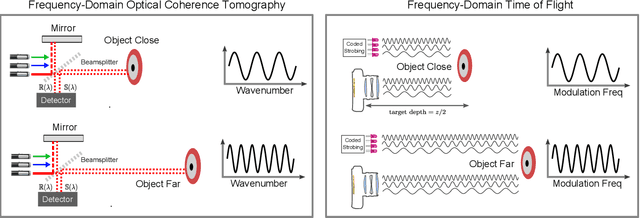

Abstract: Time of flight cameras may emerge as the 3-D sensor of choice. Today, time of flight sensors use phase-based sampling, where the phase delay between emitted and received high-frequency signals encodes distance. In this paper, we present a new time of flight architecture that relies only on frequency; we refer to this technique as frequency-domain time of flight (FD-TOF). Inspired by optical coherence tomography (OCT), FD-TOF excels when frequency bandwidth is high. With the increasing frequency of TOF sensors, new challenges to time of flight sensing continue to emerge. At high frequencies, FD-TOF offers several potential benefits over phase-based time of flight methods.
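As background for the phase-based sampling that this abstract contrasts with, the sketch below shows the standard relation between phase delay and distance for a continuous-wave sensor, d = c·φ / (4π·f), together with the resulting unambiguous range c / (2f). It illustrates the conventional phase-based approach only, not the FD-TOF method of the paper; the function names and the 30 MHz example frequency are illustrative assumptions.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def phase_to_distance(phase_rad: float, mod_freq_hz: float) -> float:
    """Distance implied by a round-trip phase delay at one modulation frequency."""
    # The signal travels to the object and back, hence 4*pi rather than 2*pi.
    return SPEED_OF_LIGHT * phase_rad / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz: float) -> float:
    """Largest distance measurable before the phase wraps past 2*pi."""
    return SPEED_OF_LIGHT / (2.0 * mod_freq_hz)


freq = 30e6  # assumed example modulation frequency, 30 MHz
print(phase_to_distance(math.pi, freq))  # half of the unambiguous range
print(unambiguous_range(freq))
```

Raising the modulation frequency shortens the unambiguous range, so phase wrapping becomes more of a concern for phase-based sensors at high frequencies.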
