Utkarsh J. Dang

Multivariate response and parsimony for Gaussian cluster-weighted models

Feb 26, 2016

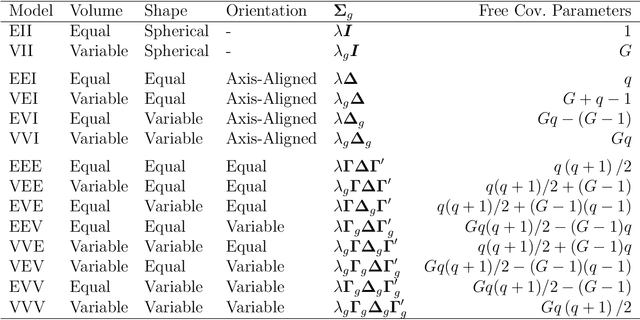

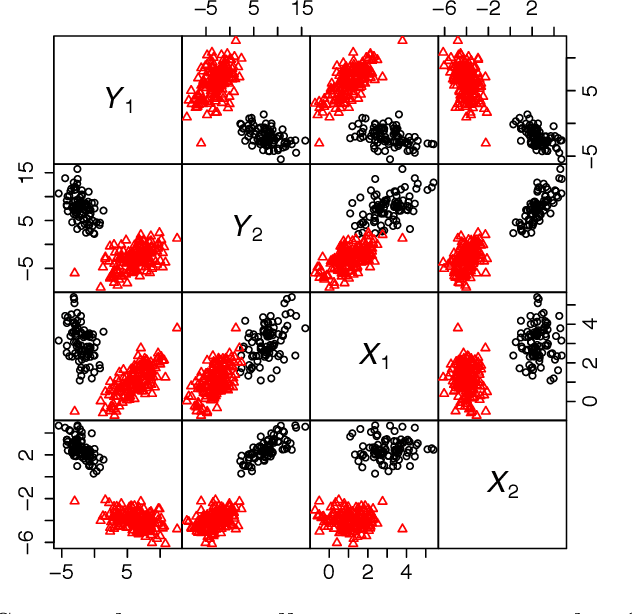

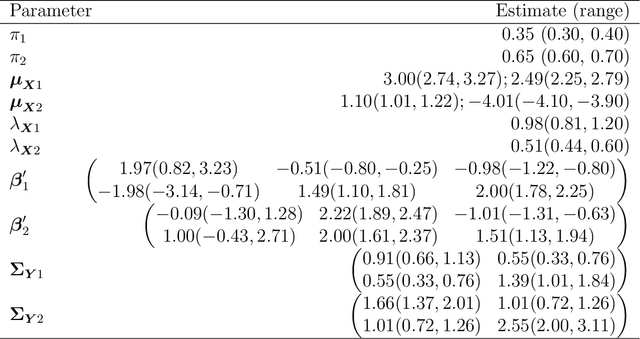

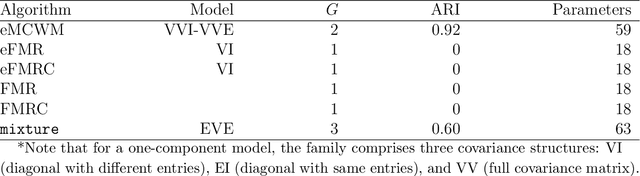

Abstract: A family of parsimonious Gaussian cluster-weighted models is presented. This family concerns a multivariate extension to cluster-weighted modelling that can account for correlations between multivariate responses. Parsimony is attained by constraining parts of an eigen-decomposition imposed on the component covariance matrices. A sufficient condition for identifiability is provided and an expectation-maximization algorithm is presented for parameter estimation. Model performance is investigated on both synthetic and classical real data sets and compared with some popular approaches. Finally, accounting for linear dependencies in the presence of a linear regression structure is shown to offer better performance, vis-à-vis clustering, over existing methodologies.

Families of Parsimonious Finite Mixtures of Regression Models

Dec 02, 2013

Abstract: Finite mixtures of regression models offer a flexible framework for investigating heterogeneity in data with functional dependencies. These models can be conveniently used for unsupervised learning on data with clear regression relationships. We extend such models by imposing an eigen-decomposition on the multivariate error covariance matrix. By constraining parts of this decomposition, we obtain families of parsimonious mixtures of regressions and mixtures of regressions with concomitant variables. These families of models account for correlations between multiple responses. An expectation-maximization algorithm is presented for parameter estimation and performance is illustrated on simulated and real data.
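
Both abstracts rely on an eigen-decomposition of a covariance matrix whose parts are then constrained. The abstracts do not state the parameterization, so the sketch below assumes the common volume/shape/orientation form, Sigma = lambda * Gamma * Delta * Gamma^T, with Delta diagonal and of determinant one. The function name `decompose_covariance` and the example matrix are hypothetical and serve only as illustration.

```python
import numpy as np


def decompose_covariance(sigma):
    """Split a symmetric positive-definite matrix into (lambda, Gamma, Delta).

    Assumed form (not taken from the abstracts):
        sigma = lambda * Gamma @ Delta @ Gamma.T
    lambda : scalar volume, |sigma|^(1/p)
    Gamma  : orthogonal matrix of eigenvectors (orientation)
    Delta  : diagonal matrix with determinant 1 (shape)
    """
    eigvals, eigvecs = np.linalg.eigh(sigma)
    # eigh returns eigenvalues in ascending order; sort them descending.
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    p = sigma.shape[0]
    lam = np.prod(eigvals) ** (1.0 / p)
    delta = np.diag(eigvals / lam)
    return lam, eigvecs, delta


# Hypothetical 2x2 covariance matrix, used only for illustration.
sigma = np.array([[4.0, 1.2],
                  [1.2, 1.0]])
lam, gamma, delta = decompose_covariance(sigma)
rebuilt = lam * gamma @ delta @ gamma.T
```

Under this assumed form, a parsimonious family would arise from holding some of `lam`, `gamma`, or `delta` equal across mixture components, or fixing them to simpler structures, which reduces the number of free covariance parameters.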
