Urh Primožič

P(Expression

Dec 02, 2022

Abstract: Probabilistic context-free grammars have a long-term record of use as generative models in machine learning and symbolic regression. When used for symbolic regression, they generate algebraic expressions. We define the latter as equivalence classes of strings derived by a grammar and address the problem of calculating the probability of deriving a given expression with a given grammar. We show that the problem is undecidable in general. We then present specific grammars for generating linear, polynomial, and rational expressions, where algorithms for calculating the probability of a given expression exist. For those grammars, we design algorithms for calculating the exact probability and efficient approximation with arbitrary precision.
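To make the idea of an expression as an equivalence class of strings concrete, here is a minimal sketch in Python. The toy grammar, the rule probabilities `P_RECURSE` and `P_X`, and both function names are assumptions chosen for illustration; they are not taken from the paper. The grammar is unambiguous, so each string has exactly one derivation, and the probability of the expression `n_x*x + n_y*y` is the sum over all strings that contain `n_x` copies of `x` and `n_y` copies of `y`.

```python
from itertools import product
from math import comb, isclose

# Toy grammar (hypothetical, for illustration only):
#   E -> E '+' V  [P_RECURSE]   |  V    [1 - P_RECURSE]
#   V -> 'x'      [P_X]         |  'y'  [1 - P_X]
P_RECURSE = 0.4
P_X = 0.7


def string_probability(terms):
    """Probability of deriving one specific string, given as a tuple of variables."""
    n = len(terms)
    # n - 1 applications of E -> E '+' V, then one application of E -> V.
    prob = P_RECURSE ** (n - 1) * (1 - P_RECURSE)
    for t in terms:
        prob *= P_X if t == "x" else 1 - P_X
    return prob


def expression_probability(n_x, n_y):
    """Sum string probabilities over every string equal to n_x*x + n_y*y."""
    total = 0.0
    for terms in product("xy", repeat=n_x + n_y):
        # Addition is commutative, so only the number of x's matters.
        if terms.count("x") == n_x:
            total += string_probability(terms)
    return total


# The strings "x+y" and "y+x" both denote the expression x + y.
p_expr = expression_probability(1, 1)

# Closed form for this toy grammar: number of orderings times one string's probability.
p_closed = comb(2, 1) * P_RECURSE * (1 - P_RECURSE) * P_X * (1 - P_X)
assert isclose(p_expr, p_closed)
```

In this toy case every equivalence class is finite, so brute-force enumeration works. Grammars with richer operations can have infinitely many strings per expression, which is where exact algorithms or approximations of the kind the abstract mentions become necessary.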

Unsupervised Feature Ranking via Attribute Networks

Nov 25, 2021

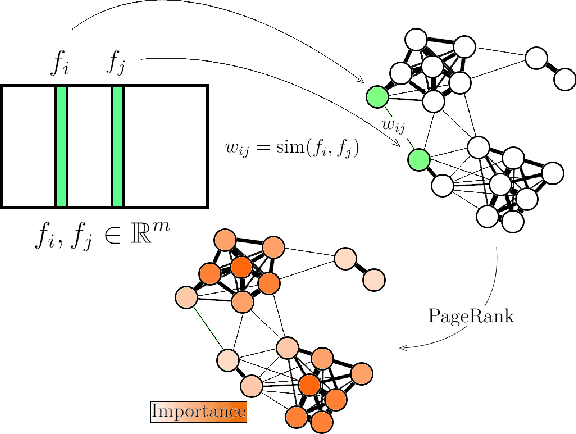

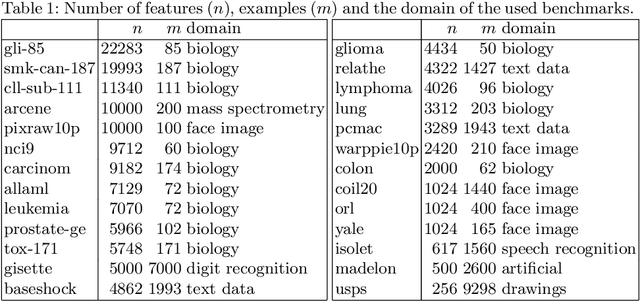

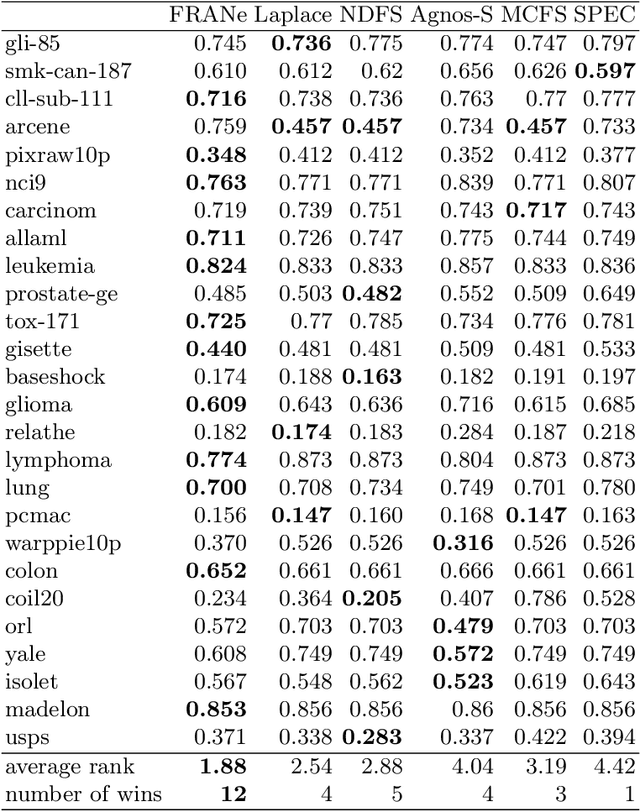

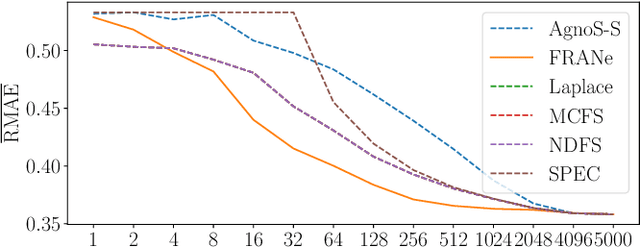

Abstract: The need for learning from unlabeled data is increasing in contemporary machine learning. Methods for unsupervised feature ranking, which identify the most important features in such data, are thus gaining attention, and so are their applications in studying high-throughput biological experiments or user bases for recommender systems. We propose FRANe (Feature Ranking via Attribute Networks), an unsupervised algorithm capable of finding key features in a given unlabeled data set. FRANe is based on ideas from network reconstruction and network analysis. FRANe performs better than state-of-the-art competitors, as we empirically demonstrate on a large collection of benchmarks. Moreover, we provide a time complexity analysis of FRANe, further demonstrating its scalability. Finally, FRANe offers as a result the interpretable relational structures used to derive the feature importances.

* For online material and the Python package, see https://github.com/FRANe-team/FRANe
