Ulrike Hahn

Birkbeck College London, Department of Psychological Science, London

Can counterfactual explanations of AI systems' predictions skew lay users' causal intuitions about the world? If so, can we correct for that?

May 12, 2022

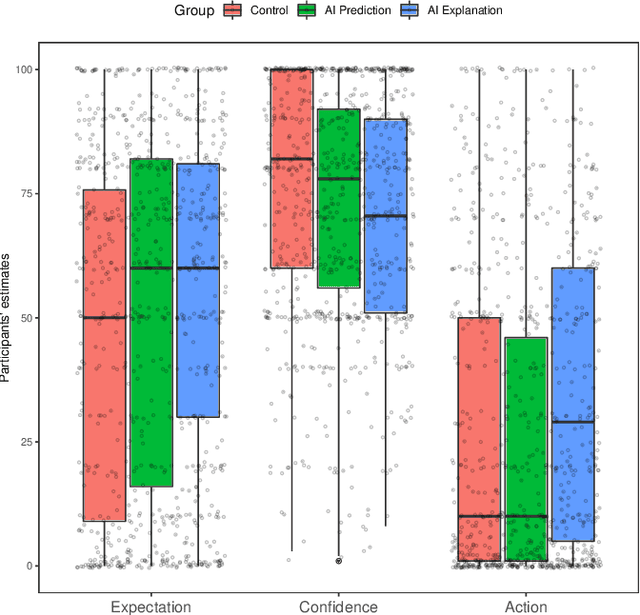

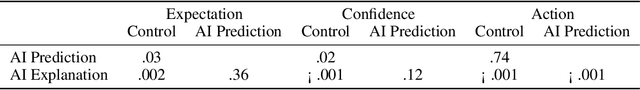

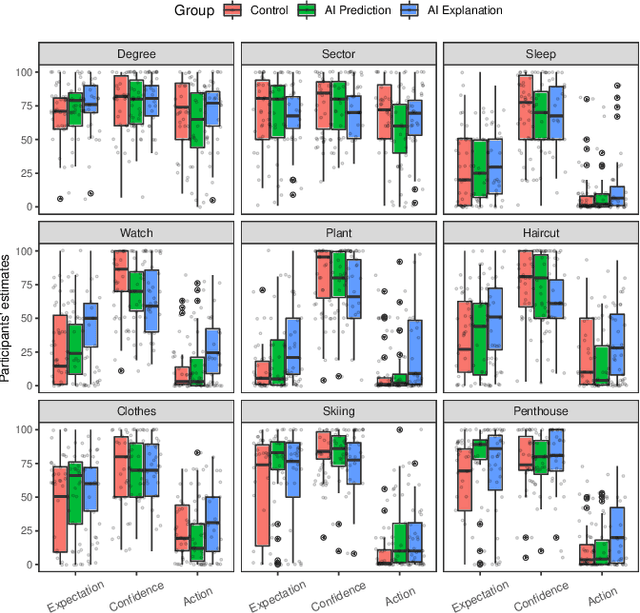

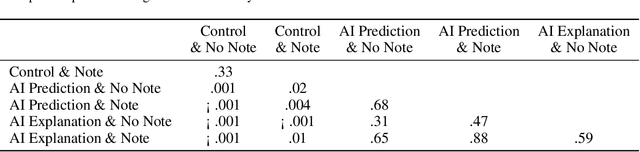

Abstract: Counterfactual (CF) explanations have been employed as one of the modes of explainability in explainable AI, both to increase the transparency of AI systems and to provide recourse. Cognitive science and psychology, however, have pointed out that people regularly use CFs to express causal relationships. Most AI systems are only able to capture associations or correlations in data, so interpreting them as causal would not be justified. In this paper, we present two experiments (total N = 364) exploring the effects of CF explanations of AI systems' predictions on lay people's causal beliefs about the real world. In Experiment 1 we found that providing CF explanations of an AI system's predictions does indeed (unjustifiably) affect people's causal beliefs regarding the factors/features the AI uses, and that people are more likely to view them as causal factors in the real world. Inspired by the literature on misinformation and health warning messaging, Experiment 2 tested whether we can correct for the unjustified change in causal beliefs. We found that pointing out that AI systems capture correlations and not necessarily causal relationships can attenuate the effects of CF explanations on people's causal beliefs.

BARD: A structured technique for group elicitation of Bayesian networks to support analytic reasoning

Mar 02, 2020

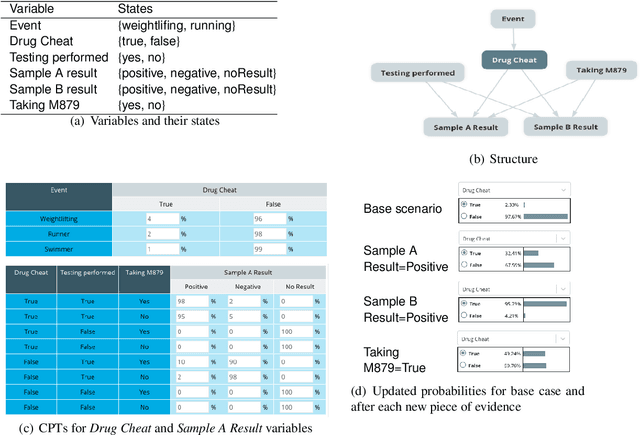

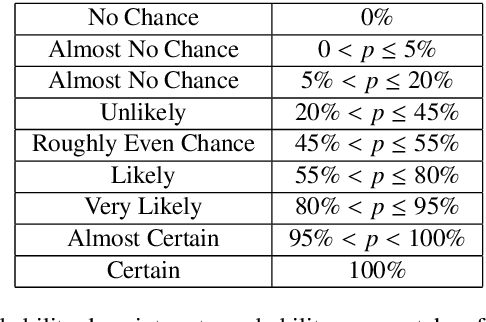

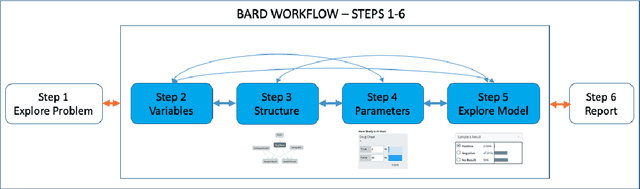

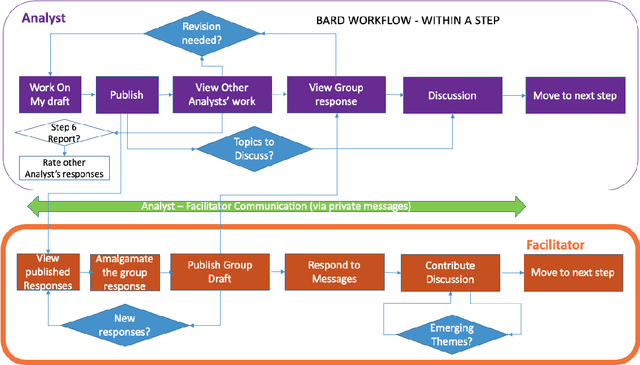

Abstract: In many complex, real-world situations, problem solving and decision making require effective reasoning about causation and uncertainty. However, human reasoning in these cases is prone to confusion and error. Bayesian networks (BNs) are an artificial intelligence technology that models uncertain situations, supporting probabilistic and causal reasoning and decision making. However, to date, BN methodologies and software require significant upfront training, do not provide much guidance on the model building process, and do not support collaboratively building BNs. BARD (Bayesian ARgumentation via Delphi) is both a methodology and an expert system that utilises (1) BNs as the underlying structured representations for better argument analysis, (2) a multi-user web-based software platform and Delphi-style social processes to assist with collaboration, and (3) short, high-quality e-courses on demand, a highly structured process to guide BN construction, and a variety of helpful tools to assist in building and reasoning with BNs, including an automated explanation tool to assist effective report writing. The result is an end-to-end online platform, with associated online training, for groups without prior BN expertise to understand and analyse a problem, build a model of its underlying probabilistic causal structure, validate and reason with the causal model, and use it to produce a written analytic report. Initial experimental results demonstrate that BARD aids in problem solving, reasoning and collaboration.
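As a rough illustration of the kind of probabilistic causal reasoning a BN supports, the sketch below computes a posterior by enumeration in a three-node network. It is not taken from BARD: the network (rain and sprinkler as causes of wet grass), the variable names and all probabilities are hypothetical values chosen only for this example.

```python
from itertools import product

# Hypothetical priors and conditional probability table (illustrative only).
P_RAIN = 0.2
P_SPRINKLER = 0.1
# P(wet | rain, sprinkler), keyed by (rain, sprinkler).
P_WET = {
    (True, True): 0.99,
    (True, False): 0.9,
    (False, True): 0.8,
    (False, False): 0.0,
}


def joint(rain, sprinkler, wet):
    """Joint probability P(rain, sprinkler, wet) under the network's factorisation."""
    p_causes = (P_RAIN if rain else 1 - P_RAIN) * (
        P_SPRINKLER if sprinkler else 1 - P_SPRINKLER
    )
    p_wet = P_WET[(rain, sprinkler)]
    return p_causes * (p_wet if wet else 1 - p_wet)


def posterior_rain_given_wet():
    """P(rain | wet), obtained by summing the joint over the unobserved sprinkler."""
    numerator = sum(joint(True, s, True) for s in (True, False))
    evidence = sum(joint(r, s, True) for r, s in product((True, False), repeat=2))
    return numerator / evidence


if __name__ == "__main__":
    print(f"P(rain | wet grass) = {posterior_rain_given_wet():.3f}")
```

Enumeration is only practical for very small networks; it is used here because it makes the factorisation explicit.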

The Temporal Dynamics of Belief-based Updating of Epistemic Trust: Light at the End of the Tunnel?

Dec 24, 2019

Abstract: We start with the distinction between outcome- and belief-based Bayesian models of the sequential update of agents' beliefs and of the subjective reliability of sources (trust). We then focus on discussing the influential Bayesian model of belief-based trust update by Eric Olsson, which models dichotomic events and explicitly represents anti-reliability. After sketching some disastrous recent results for this perhaps most promising model of belief update, we show new simulation results for the temporal dynamics of learning belief with and without trust update and with and without communication. The results seem to shed at least a somewhat more positive light on the communicating-and-trust-updating agents. This may be a light at the end of the tunnel of belief-based models of trust updating, but the interpretation of the clear findings is much less clear.

Where Defaults Don't Help: the Case of the German Plural System

May 13, 1996

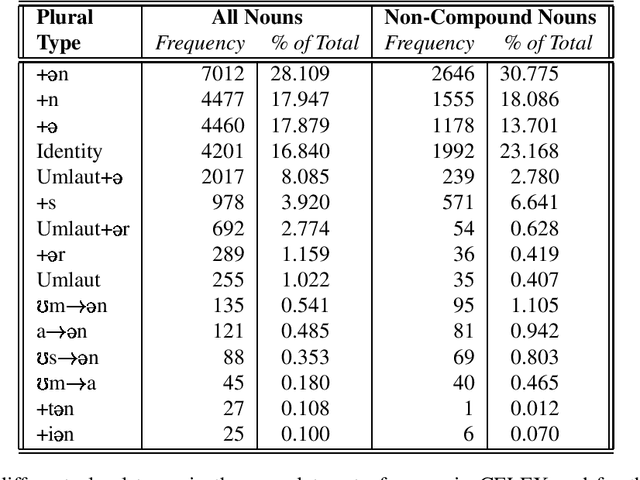

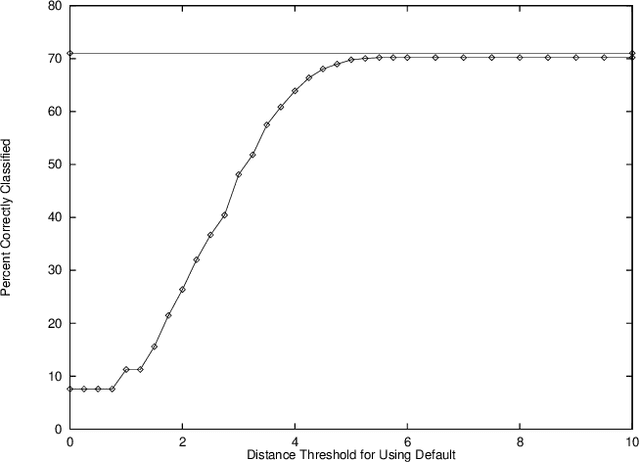

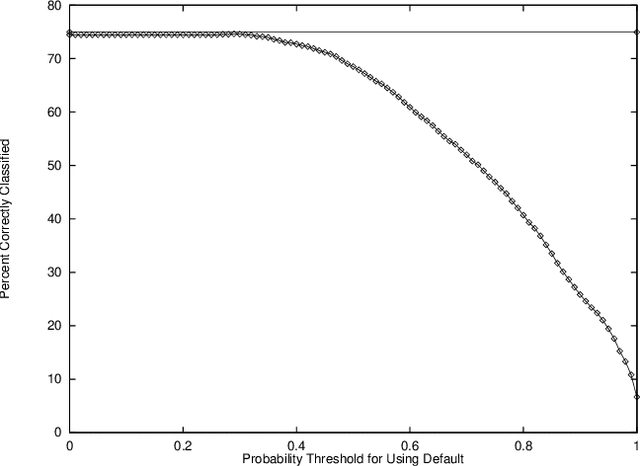

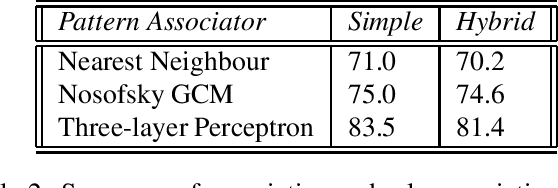

Abstract: The German plural system has become a focal point for conflicting theories of language, both linguistic and cognitive. We present simulation results with three simple classifiers - an ordinary nearest neighbour algorithm, Nosofsky's 'Generalized Context Model' (GCM) and a standard, three-layer backprop network - predicting the plural class from a phonological representation of the singular in German. Though these are absolutely 'minimal' models, in terms of architecture and input information, they nevertheless do remarkably well. The nearest neighbour predicts the correct plural class with an accuracy of 72% for a set of 24,640 nouns from the CELEX database. With a subset of 8,598 (non-compound) nouns, the nearest neighbour, the GCM and the network score 71.0%, 75.0% and 83.5%, respectively, on novel items. Furthermore, they outperform a hybrid, 'pattern-associator + default rule', model, as proposed by Marcus et al. (1995), on this data set.
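To make the first two classifiers concrete, here is a minimal sketch of a nearest neighbour rule and a simplified GCM-style choice rule. It is not the authors' implementation: the binary feature tuples, the class labels, the Hamming distance and the sensitivity parameter `c` are all assumptions made for illustration, and the attention weights and response biases of the full GCM are omitted.

```python
import math
from collections import defaultdict


def hamming(a, b):
    """Count the positions at which two equal-length feature tuples differ."""
    return sum(x != y for x, y in zip(a, b))


def nearest_neighbour(item, exemplars):
    """Return the class label of the stored exemplar closest to `item`."""
    _, label = min(exemplars, key=lambda ex: hamming(item, ex[0]))
    return label


def gcm_probabilities(item, exemplars, c=1.0):
    """Simplified GCM-style choice rule.

    Similarity to each exemplar decays exponentially with distance,
    exp(-c * d); the probability of a class is its summed similarity
    divided by the summed similarity over all classes.
    """
    evidence = defaultdict(float)
    for features, label in exemplars:
        evidence[label] += math.exp(-c * hamming(item, features))
    total = sum(evidence.values())
    return {label: s / total for label, s in evidence.items()}


# Hypothetical toy exemplars: (feature tuple, plural class label).
EXEMPLARS = [
    ((1, 0, 0, 1), "-en"),
    ((1, 0, 1, 1), "-en"),
    ((0, 1, 0, 0), "-e"),
    ((0, 1, 1, 0), "-e"),
    ((0, 0, 1, 0), "-s"),
]

if __name__ == "__main__":
    novel = (1, 0, 0, 0)
    print(nearest_neighbour(novel, EXEMPLARS))
    print(gcm_probabilities(novel, EXEMPLARS))
```

The nearest neighbour rule commits to the single closest exemplar, whereas the GCM-style rule pools graded evidence from every stored exemplar, so the two can disagree when a class has many moderately similar members.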
