Kevin B. Korb

Causal KL: Evaluating Causal Discovery

Nov 11, 2021

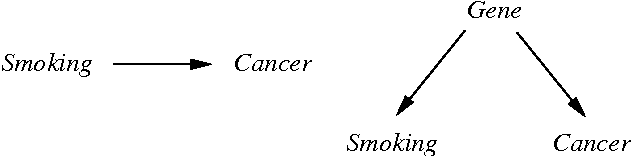

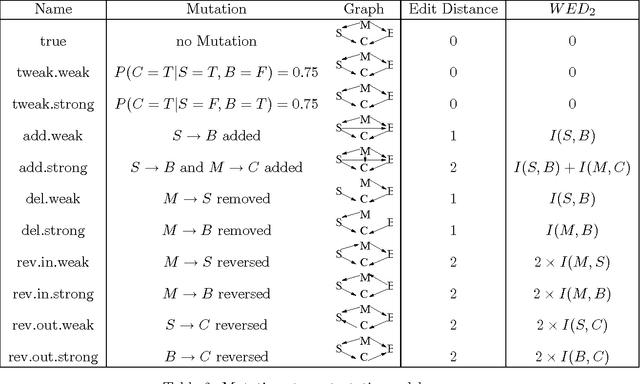

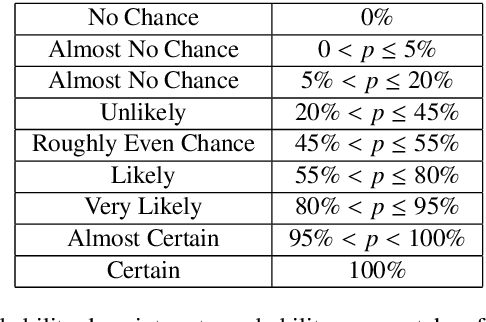

Abstract: The two most commonly used criteria for assessing causal model discovery with artificial data are edit-distance and Kullback-Leibler divergence, measured from the true model to the learned model. Both of these metrics maximally reward the true model. However, we argue that they are both insufficiently discriminating in judging the relative merits of false models. Edit distance, for example, fails to distinguish between strong and weak probabilistic dependencies. KL divergence, on the other hand, rewards equally all statistically equivalent models, regardless of their different causal claims. We propose an augmented KL divergence, which we call Causal KL (CKL), which takes into account causal relationships which distinguish between observationally equivalent models. Results are presented for three variants of CKL, showing that Causal KL works well in practice.

BARD: A structured technique for group elicitation of Bayesian networks to support analytic reasoning

Mar 02, 2020

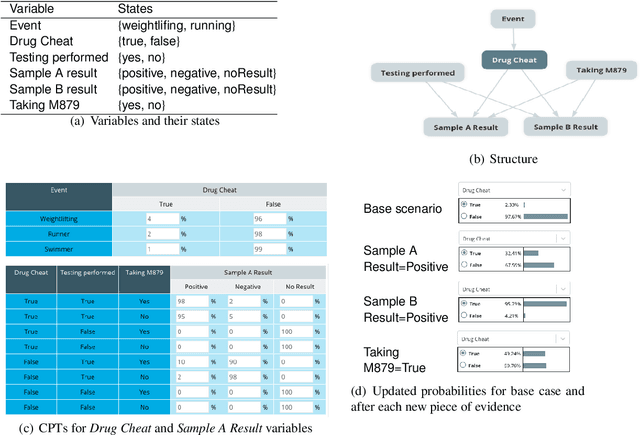

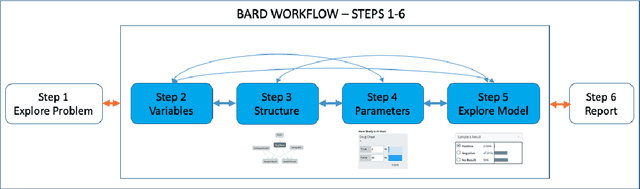

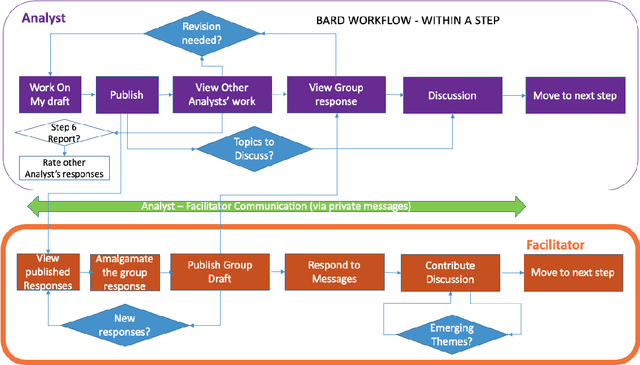

Abstract: In many complex, real-world situations, problem solving and decision making require effective reasoning about causation and uncertainty. However, human reasoning in these cases is prone to confusion and error. Bayesian networks (BNs) are an artificial intelligence technology that models uncertain situations, supporting probabilistic and causal reasoning and decision making. However, to date, BN methodologies and software require significant upfront training, do not provide much guidance on the model building process, and do not support collaboratively building BNs. BARD (Bayesian ARgumentation via Delphi) is both a methodology and an expert system that utilises (1) BNs as the underlying structured representations for better argument analysis, (2) a multi-user web-based software platform and Delphi-style social processes to assist with collaboration, and (3) short, high-quality e-courses on demand, a highly structured process to guide BN construction, and a variety of helpful tools to assist in building and reasoning with BNs, including an automated explanation tool to assist effective report writing. The result is an end-to-end online platform, with associated online training, for groups without prior BN expertise to understand and analyse a problem, build a model of its underlying probabilistic causal structure, validate and reason with the causal model, and use it to produce a written analytic report. Initial experimental results demonstrate that BARD aids in problem solving, reasoning and collaboration.

Latent Variable Discovery Using Dependency Patterns

Jul 22, 2016

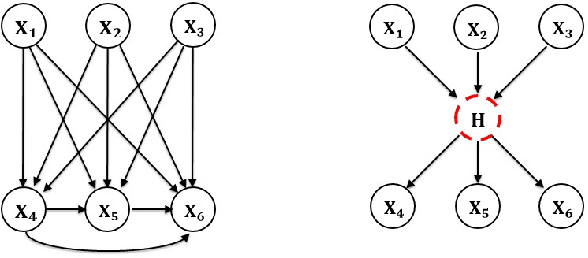

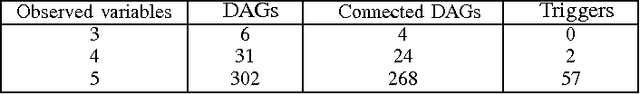

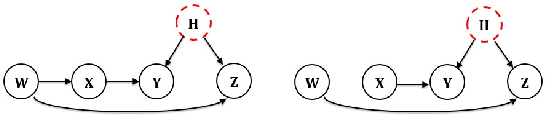

Abstract: The causal discovery of Bayesian networks is an active and important research area, and it is based upon searching the space of causal models for those which can best explain a pattern of probabilistic dependencies shown in the data. However, some of those dependencies are generated by causal structures involving variables which have not been measured, i.e., latent variables. Some such patterns of dependency "reveal" themselves, in that no model based solely upon the observed variables can explain them as well as a model using a latent variable. That is what latent variable discovery is based upon. Here we did a search for finding them systematically, so that they may be applied in latent variable discovery in a more rigorous fashion.

Learning Bayesian Networks with Restricted Causal Interactions

Jan 23, 2013

Abstract: A major problem for the learning of Bayesian networks (BNs) is the exponential number of parameters needed for conditional probability tables. Recent research reduces this complexity by modeling local structure in the probability tables. We examine the use of log-linear local models. While log-linear models in this context are not new (Whittaker, 1990; Buntine, 1991; Neal, 1992; Heckerman and Meek, 1997), for structure learning they are generally subsumed under a naive Bayes model. We describe an alternative interpretation, and use a Minimum Message Length (MML) (Wallace, 1987) metric for structure learning of networks exhibiting causal independence, which we term first-order networks (FONs). We also investigate local model selection on a node-by-node basis.

Bayesian Poker

Jan 23, 2013

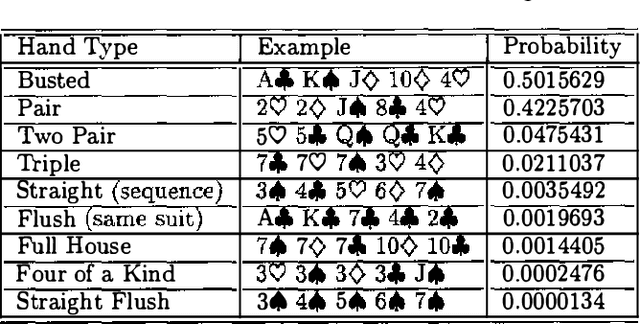

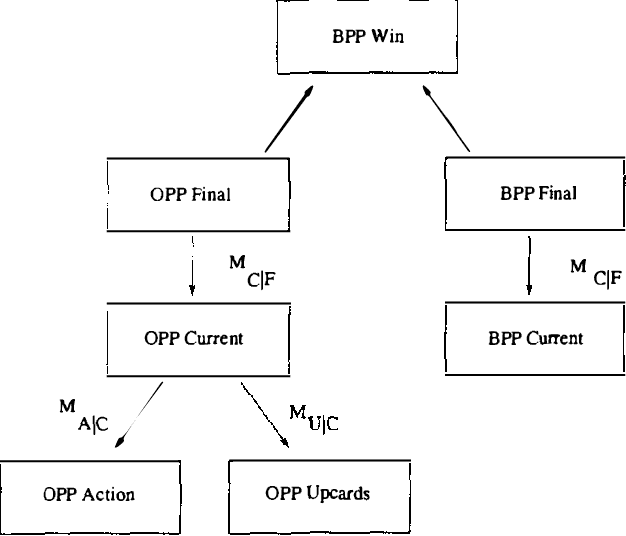

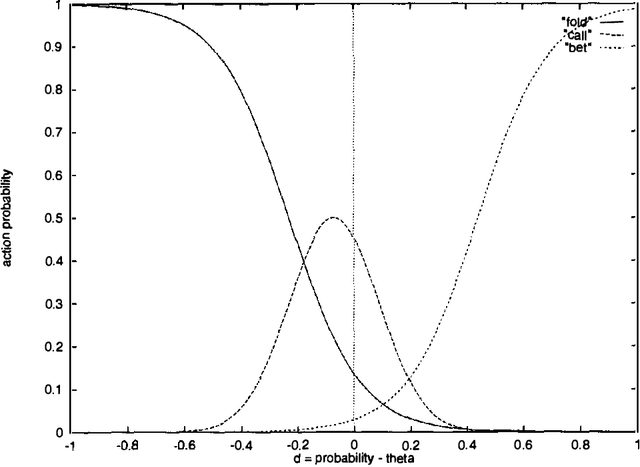

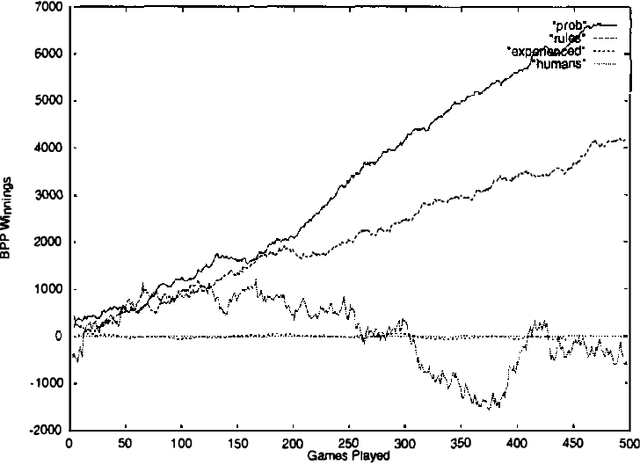

Abstract: Poker is ideal for testing automated reasoning under uncertainty. It introduces uncertainty both by physical randomization and by incomplete information about opponents' hands. Another source of uncertainty is the limited information available to construct psychological models of opponents, their tendencies to bluff, play conservatively, reveal weakness, etc. and the relation between their hand strengths and betting behaviour. All of these uncertainties must be assessed accurately and combined effectively for any reasonable level of skill in the game to be achieved, since good decision making is highly sensitive to those tasks. We describe our Bayesian Poker Program (BPP), which uses a Bayesian network to model the program's poker hand, the opponent's hand and the opponent's playing behaviour conditioned upon the hand, and betting curves which govern play given a probability of winning. The history of play with opponents is used to improve BPP's understanding of their behaviour. We compare BPP experimentally with: a simple rule-based system; a program which depends exclusively on hand probabilities (i.e., without opponent modeling); and with human players. BPP has shown itself to be an effective player against all these opponents, barring the better humans. We also sketch out some likely ways of improving play.
