Udunna C Anazodo

Resource-Efficient Glioma Segmentation on Sub-Saharan MRI

Sep 11, 2025

Abstract: Gliomas are the most prevalent type of primary brain tumors, and their accurate segmentation from MRI is critical for diagnosis, treatment planning, and longitudinal monitoring. However, the scarcity of high-quality annotated imaging data in Sub-Saharan Africa (SSA) poses a significant challenge for deploying advanced segmentation models in clinical workflows. This study introduces a robust and computationally efficient deep learning framework tailored for resource-constrained settings. We leveraged a 3D Attention UNet architecture augmented with residual blocks and enhanced through transfer learning from pre-trained weights on the BraTS 2021 dataset. Our model was evaluated on 95 MRI cases from the BraTS-Africa dataset, a benchmark for glioma segmentation in SSA MRI data. Despite the limited data quality and quantity, our approach achieved Dice scores of 0.76 for the Enhancing Tumor (ET), 0.80 for Necrotic and Non-Enhancing Tumor Core (NETC), and 0.85 for Surrounding Non-enhancing FLAIR Hyperintensity (SNFH). These results demonstrate the generalizability of the proposed model and its potential to support clinical decision making in low-resource settings. The compact architecture, approximately 90 MB, and sub-minute per-volume inference time on consumer-grade hardware further underscore its practicality for deployment in SSA health systems. This work contributes toward closing the gap in equitable AI for global health by empowering underserved regions with high-performing and accessible medical imaging solutions.
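
The per-region Dice scores quoted in this abstract measure the overlap between a predicted mask and a reference mask for one tumor sub-region at a time. The following is a minimal sketch of that metric, not the authors' evaluation code. It assumes the segmentation has been flattened to a sequence of integer labels; the function name `dice_score`, the label IDs, and the "absent in both counts as 1.0" convention are illustrative assumptions.

```python
def dice_score(pred, truth, label):
    """Dice overlap for one label between two equally sized, flattened label maps.

    Dice = 2 * |P ∩ T| / (|P| + |T|), where P and T are the sets of voxels
    assigned `label` in the prediction and the reference, respectively.
    """
    if len(pred) != len(truth):
        raise ValueError("pred and truth must contain the same number of voxels")

    pred_pos = [p == label for p in pred]
    truth_pos = [t == label for t in truth]

    intersection = sum(p and t for p, t in zip(pred_pos, truth_pos))
    total = sum(pred_pos) + sum(truth_pos)

    # Assumed convention: a label absent from both masks counts as a perfect match.
    if total == 0:
        return 1.0
    return 2.0 * intersection / total


# Toy example with hypothetical label IDs (0 = background, 1..3 = tumor sub-regions).
pred = [0, 1, 1, 2, 3, 3]
truth = [0, 1, 2, 2, 3, 0]
scores = {label: dice_score(pred, truth, label) for label in (1, 2, 3)}
```

In practice the same formula would be applied to full 3D volumes with an array library; the flat-list form here only keeps the sketch dependency-free.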

Parameter-efficient Fine-tuning for improved Convolutional Baseline for Brain Tumor Segmentation in Sub-Saharan Africa Adult Glioma Dataset

Dec 18, 2024

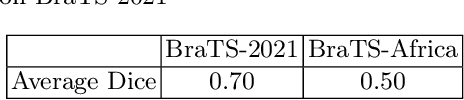

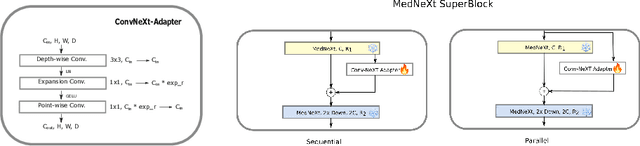

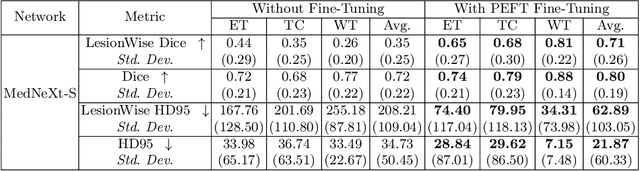

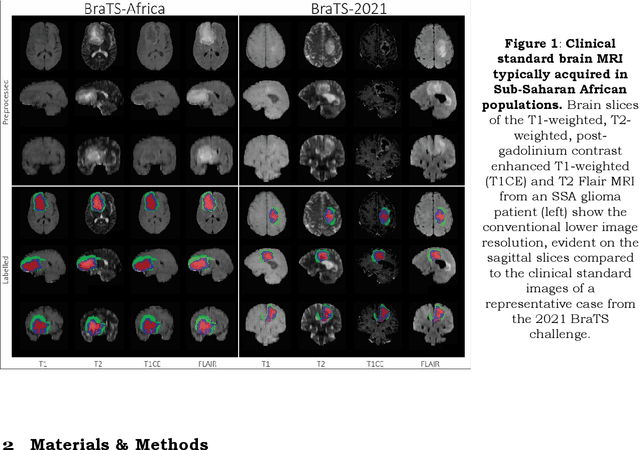

Abstract: Automating brain tumor segmentation using deep learning methods is an ongoing challenge in medical imaging. Multiple lingering issues exist, including domain shift and application in low-resource settings, which bring a unique set of challenges including scarcity of data. As a step towards solving these specific problems, we propose Convolutional adapter-inspired Parameter-efficient Fine-tuning (PEFT) of the MedNeXt architecture. To validate our idea, we show that our method performs comparably to full fine-tuning with the added benefit of reduced training compute, using BraTS-2021 as the pre-training dataset and BraTS-Africa as the fine-tuning dataset. BraTS-Africa is a small dataset (60 train / 35 validation) from the Sub-Saharan African population with a marked shift in MRI quality compared to BraTS-2021 (1251 train samples). We first show that models trained on the BraTS-2021 dataset do not generalize well to BraTS-Africa, as shown by a 20% reduction in mean Dice on BraTS-Africa validation samples. Then, we show that PEFT can leverage both the BraTS-2021 and BraTS-Africa datasets to obtain a mean Dice of 0.80, compared to 0.72 when trained only on BraTS-Africa. Finally, we show that PEFT (0.80 mean Dice) results in comparable performance to full fine-tuning (0.77 mean Dice), which may suggest that PEFT is better on average, but the boxplots show that full fine-tuning results in much lower variance in performance. Nevertheless, on disaggregation of the Dice metrics, we find that the model has a tendency to oversegment, as shown by high specificity (0.99) compared to relatively low sensitivity (0.75). The source code is available at https://github.com/CAMERA-MRI/SPARK2024/tree/main/PEFT_MedNeXt
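
The sensitivity and specificity figures mentioned in this abstract are voxel-wise rates derived from the confusion counts of a binary mask. The sketch below shows one way to compute them; it is not taken from the linked repository, and the function name and toy data are assumptions chosen only so that the output lands near the reported 0.75 and 0.99.

```python
def sensitivity_specificity(pred, truth):
    """Voxel-wise sensitivity and specificity for two flattened binary masks.

    sensitivity = TP / (TP + FN): fraction of true tumor voxels that were found.
    specificity = TN / (TN + FP): fraction of background voxels left as background.
    """
    if len(pred) != len(truth):
        raise ValueError("pred and truth must contain the same number of voxels")

    tp = fp = tn = fn = 0
    for p, t in zip(pred, truth):
        p, t = bool(p), bool(t)
        if p and t:
            tp += 1
        elif p and not t:
            fp += 1
        elif not p and t:
            fn += 1
        else:
            tn += 1

    # A rate is undefined (NaN) when its denominator is empty.
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity


# Toy data (hypothetical): 4 tumor voxels, 96 background voxels.
truth = [1] * 4 + [0] * 96
pred = [1, 1, 1, 0] + [1] + [0] * 95  # one tumor voxel missed, one false positive
sens, spec = sensitivity_specificity(pred, truth)
```

Because background voxels vastly outnumber tumor voxels in brain MRI, specificity tends to sit close to 1 even when sensitivity is only moderate, which is why the two are usually reported together.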

Towards SAMBA: Segment Anything Model for Brain Tumor Segmentation in Sub-Saharan African Populations

Dec 19, 2023

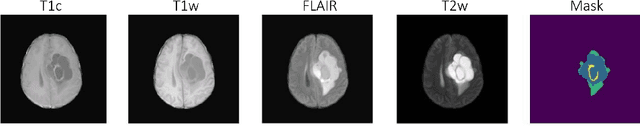

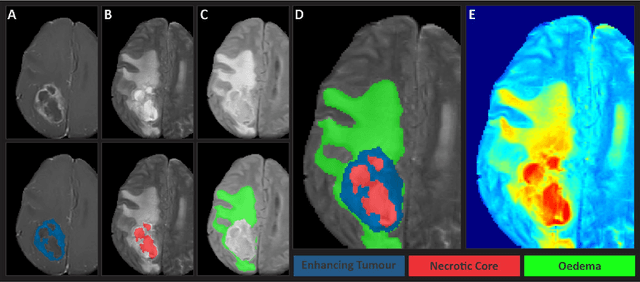

Abstract: Gliomas, the most prevalent primary brain tumors, require precise segmentation for diagnosis and treatment planning. However, this task poses significant challenges, particularly in the African population, where limited access to high-quality imaging data hampers algorithm performance. In this study, we propose an innovative approach combining the Segment Anything Model (SAM) and a voting network for multi-modal glioma segmentation. By fine-tuning SAM with bounding box-guided prompts (SAMBA), we adapt the model to the complexities of African datasets. Our ensemble strategy, utilizing multiple modalities and views, produces a robust consensus segmentation, addressing intra-tumoral heterogeneity. Although the low quality of scans presents difficulties, our methodology has the potential to profoundly impact clinical practice in resource-limited settings such as Africa, improving treatment decisions and advancing neuro-oncology research. Furthermore, successful application to other brain tumor types and lesions in the future holds promise for a broader transformation in neurological imaging, improving healthcare outcomes across all settings. This study was conducted on the Brain Tumor Segmentation (BraTS) Challenge Africa (BraTS-Africa) dataset, which provides a valuable resource for addressing challenges specific to resource-limited settings, particularly the African population, and facilitating the development of effective and more generalizable segmentation algorithms. To illustrate our approach's potential, our experiments on the BraTS-Africa dataset yielded compelling results, with SAM attaining a Dice coefficient of 86.6 for binary segmentation and 60.4 for multi-class segmentation.
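
The consensus step described in this abstract fuses predictions from several modalities and views into one segmentation. The abstract refers to a voting network, whose details are not given here; the sketch below substitutes a plain per-voxel majority vote as a simple stand-in. The function name, the flattened-label representation, and the tie-breaking rule are all assumptions.

```python
from collections import Counter


def majority_vote(predictions):
    """Fuse several flattened label maps by per-voxel majority vote.

    `predictions` is a list of equally sized label sequences, e.g. one per
    modality or view. Ties are broken by picking the smallest label ID
    (an arbitrary, assumed rule that favors background when it is label 0).
    """
    if not predictions:
        raise ValueError("at least one prediction is required")
    n_voxels = len(predictions[0])
    if any(len(p) != n_voxels for p in predictions):
        raise ValueError("all predictions must contain the same number of voxels")

    fused = []
    for votes in zip(*predictions):
        counts = Counter(votes)
        top = max(counts.values())
        fused.append(min(label for label, count in counts.items() if count == top))
    return fused


# Toy example: three hypothetical per-modality predictions over four voxels.
per_modality = [
    [0, 1, 2, 2],
    [0, 1, 1, 2],
    [1, 1, 2, 0],
]
consensus = majority_vote(per_modality)
```

A learned voting network can weight modalities unequally, which a fixed majority vote cannot; the sketch only illustrates the idea of per-voxel consensus.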

The Brain Tumor Segmentation Challenge 2023: Glioma Segmentation in Sub-Saharan Africa Patient Population

May 30, 2023

Abstract: Gliomas are the most common type of primary brain tumors. Although gliomas are relatively rare, they are among the deadliest types of cancer, with a survival time of less than 2 years after diagnosis. Gliomas are challenging to diagnose, hard to treat, and inherently resistant to conventional therapy. Years of extensive research to improve diagnosis and treatment of gliomas have decreased mortality rates across the Global North, while chances of survival among individuals in low- and middle-income countries (LMICs) remain unchanged and are significantly worse in Sub-Saharan Africa (SSA) populations. Long-term survival with glioma is associated with the identification of appropriate pathological features on brain MRI and confirmation by histopathology. Since 2012, the Brain Tumor Segmentation (BraTS) Challenge has evaluated state-of-the-art machine learning methods to detect, characterize, and classify gliomas. However, it is unclear whether the state-of-the-art methods can be widely implemented in SSA, given the extensive use of lower-quality MRI technology, which produces poor image contrast and resolution, and, more importantly, the propensity for late presentation of disease at advanced stages as well as the unique characteristics of gliomas in SSA (i.e., suspected higher rates of gliomatosis cerebri). Thus, the BraTS-Africa Challenge provides a unique opportunity to include brain MRI glioma cases from SSA in global efforts through the BraTS Challenge to develop and evaluate computer-aided-diagnostic (CAD) methods for the detection and characterization of glioma in resource-limited settings, where CAD tools are more likely to transform healthcare.
