Bijay Adhikari

From Development to Deployment of AI-assisted Telehealth and Screening for Vision- and Hearing-threatening diseases in resource-constrained settings: Field Observations, Challenges and Way Forward

Sep 19, 2025

Abstract: Vision- and hearing-threatening diseases cause preventable disability, especially in resource-constrained settings (RCS) with few specialists and limited screening infrastructure. Large-scale AI-assisted screening and telehealth have the potential to expand early detection, but practical deployment is challenging in paper-based workflows, and little documented field experience exists to build upon. We provide insights into the challenges and ways forward from development to adoption of scalable AI-assisted telehealth and screening in such settings. Specifically, we find that iterative, interdisciplinary collaboration through early prototyping, shadow deployment, and continuous feedback is important for building shared understanding and for reducing usability hurdles when transitioning from paper-based to AI-ready workflows. We find public datasets and AI models highly useful despite their poor performance due to domain shift. In addition, we find a need for automated AI-based image quality checks to capture gradable images for robust screening in high-volume camps. Our field learnings stress the importance of treating AI development and workflow digitization as an end-to-end, iterative co-design process. By documenting these practical challenges and lessons learned, we aim to address the gap in contextual, actionable field knowledge for building real-world AI-assisted telehealth and mass-screening programs in RCS.

Parameter-efficient Fine-tuning for improved Convolutional Baseline for Brain Tumor Segmentation in Sub-Saharan Africa Adult Glioma Dataset

Dec 18, 2024

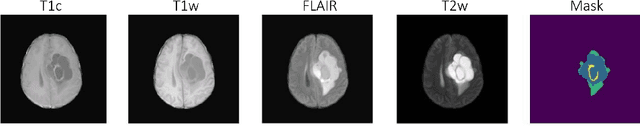

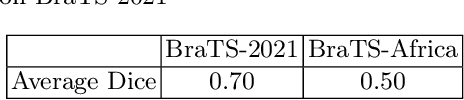

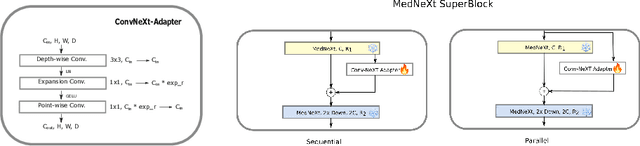

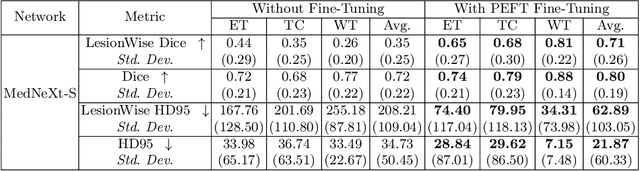

Abstract: Automating brain tumor segmentation using deep learning methods is an ongoing challenge in medical imaging. Several issues remain open, including domain shift and application in low-resource settings, which bring their own challenges such as data scarcity. As a step towards solving these specific problems, we propose convolutional adapter-inspired Parameter-efficient Fine-tuning (PEFT) of the MedNeXt architecture. To validate our idea, we show that our method performs comparably to full fine-tuning, with the added benefit of reduced training compute, using BraTS-2021 as the pre-training dataset and BraTS-Africa as the fine-tuning dataset. BraTS-Africa is a small dataset (60 train / 35 validation) from the Sub-Saharan African population with a marked shift in MRI quality compared to BraTS-2021 (1251 train samples). We first show that models trained on the BraTS-2021 dataset do not generalize well to BraTS-Africa, as shown by a 20% reduction in mean Dice on BraTS-Africa validation samples. Then, we show that PEFT can leverage both the BraTS-2021 and BraTS-Africa datasets to obtain a mean Dice of 0.80, compared to 0.72 when trained only on BraTS-Africa. Finally, we show that PEFT (0.80 mean Dice) performs comparably to full fine-tuning (0.77 mean Dice). This may suggest that PEFT is better on average, but the boxplots show that full fine-tuning results in much lower variance in performance. Nevertheless, on disaggregating the Dice metrics, we find that the model has a tendency to oversegment, as shown by high specificity (0.99) compared to relatively low sensitivity (0.75). The source code is available at https://github.com/CAMERA-MRI/SPARK2024/tree/main/PEFT_MedNeXt
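The Dice, sensitivity, and specificity figures quoted in the abstract are all derived from the same voxel-wise confusion counts. The following is a minimal sketch of those standard definitions for a single binary mask, not the authors' evaluation code. The function names and the toy masks are hypothetical, and the masks are assumed to be flattened to sequences of 0/1 labels.

```python
def confusion_counts(pred, truth):
    """Count TP, FP, FN, TN over two equal-length sequences of 0/1 labels."""
    if len(pred) != len(truth):
        raise ValueError("pred and truth must have the same length")
    tp = fp = fn = tn = 0
    for p, t in zip(pred, truth):
        if p and t:
            tp += 1          # tumor voxel correctly predicted
        elif p and not t:
            fp += 1          # background voxel predicted as tumor
        elif t:
            fn += 1          # tumor voxel missed by the prediction
        else:
            tn += 1          # background voxel correctly left out
    return tp, fp, fn, tn


def dice(tp, fp, fn):
    """Dice = 2*TP / (2*TP + FP + FN); defined as 1.0 when both masks are empty."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 1.0


def sensitivity(tp, fn):
    """Fraction of true tumor voxels that were predicted: TP / (TP + FN)."""
    return tp / (tp + fn) if (tp + fn) else 1.0


def specificity(tn, fp):
    """Fraction of background voxels left unlabeled: TN / (TN + FP)."""
    return tn / (tn + fp) if (tn + fp) else 1.0


# Toy example (hypothetical data): 4 tumor voxels, 6 background voxels.
truth = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

tp, fp, fn, tn = confusion_counts(pred, truth)
print(dice(tp, fp, fn), sensitivity(tp, fn), specificity(tn, fp))
```

Because background voxels vastly outnumber tumor voxels in a brain MRI volume, specificity tends to sit close to 1 in practice, which is why it is usually read alongside sensitivity and Dice, not on its own.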
