Udesh Gunarathna

Intelligent Autonomous Intersection Management

Feb 09, 2022

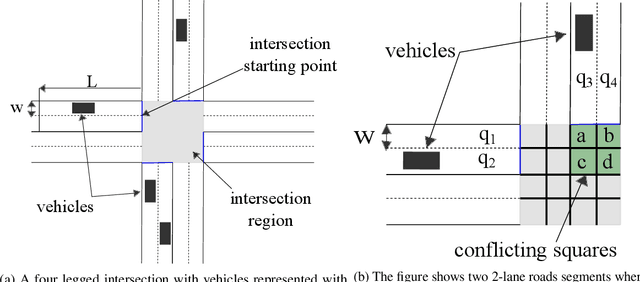

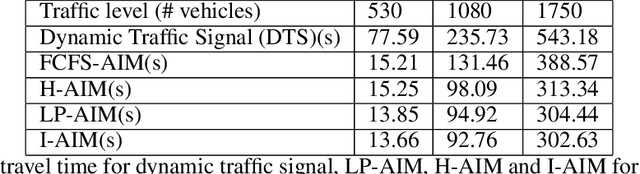

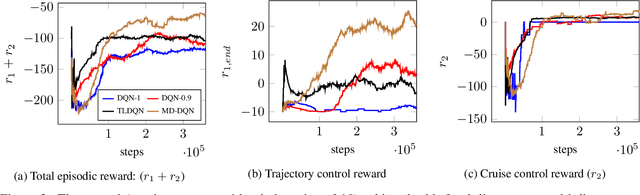

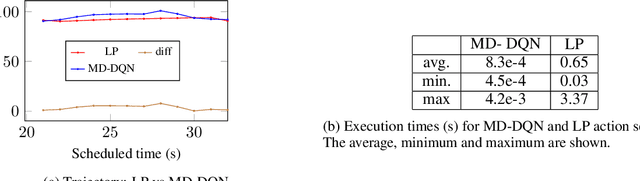

Abstract: Connected Autonomous Vehicles will make autonomous intersection management a reality, replacing traditional traffic signal control. Autonomous intersection management requires adjusting the timing and speed of vehicles arriving at an intersection so that they pass through it without collisions. Because of its computational complexity, this problem has been studied only in settings where the times at which vehicles arrive near the intersection are known beforehand, which limits the applicability of these solutions to real-time deployment. To solve the real-time autonomous traffic intersection management problem, we propose a reinforcement learning (RL) based multiagent architecture and a novel RL algorithm coined multi-discount Q-learning. In multi-discount Q-learning, we introduce a simple yet effective way to solve a Markov Decision Process that preserves both short-term and long-term goals, which is crucial for collision-free speed control. Our empirical results show that our RL-based multiagent solution can efficiently achieve near-optimal performance in minimizing travel time through an intersection.
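The abstract does not spell out how multi-discount Q-learning works, so the following is only a minimal sketch of the general idea its name suggests: ordinary tabular Q-learning that keeps one value table per discount factor, so a small discount tracks short-term consequences (for example an imminent collision penalty) while a large one tracks long-term goals (for example total travel time). The class name `MultiDiscountQ`, the choice of discount factors, and the rule that actions are scored by a weighted sum of the per-discount values are all assumptions for illustration, not the paper's algorithm.

```python
import random
from collections import defaultdict


class MultiDiscountQ:
    """Hypothetical sketch: tabular Q-learning with one value table per discount factor."""

    def __init__(self, n_actions, gammas=(0.0, 0.99), weights=None, alpha=0.1):
        self.n_actions = n_actions
        self.gammas = list(gammas)
        # Assumption: actions are scored by a weighted sum of the per-discount Q-values.
        if weights is None:
            weights = [1.0 / len(self.gammas)] * len(self.gammas)
        self.weights = list(weights)
        self.alpha = alpha
        # self.q[k][state][action] is the estimate under discount self.gammas[k].
        self.q = [defaultdict(lambda: [0.0] * n_actions) for _ in self.gammas]

    def combined(self, state):
        """Weighted sum of the per-discount action values for `state`."""
        return [
            sum(w * q[state][a] for w, q in zip(self.weights, self.q))
            for a in range(self.n_actions)
        ]

    def act(self, state, epsilon=0.0, rng=random):
        """Epsilon-greedy action on the combined values (ties go to the lowest index)."""
        if rng.random() < epsilon:
            return rng.randrange(self.n_actions)
        values = self.combined(state)
        return max(range(self.n_actions), key=values.__getitem__)

    def update(self, state, action, reward, next_state, done):
        """Standard Q-learning update, applied once per discount factor."""
        for gamma, q in zip(self.gammas, self.q):
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += self.alpha * (target - q[state][action])


# Two transitions: s0 -(action 1, reward 0)-> s1 -(action 1, reward 1)-> terminal.
agent = MultiDiscountQ(n_actions=2, gammas=(0.0, 0.5), alpha=1.0)
agent.update("s1", 1, 1.0, "goal", done=True)
agent.update("s0", 1, 0.0, "s1", done=False)
print(agent.combined("s0"))  # [0.0, 0.25]
print(agent.act("s0"))       # 1
```

With a discount of 0 the table for `s0` sees no value in action 1 (its reward is delayed), while the discount-0.5 table does; the combined score still prefers action 1, which is the kind of short-term/long-term balance the abstract describes.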

Solving Dynamic Graph Problems with Multi-Attention Deep Reinforcement Learning

Jan 13, 2022

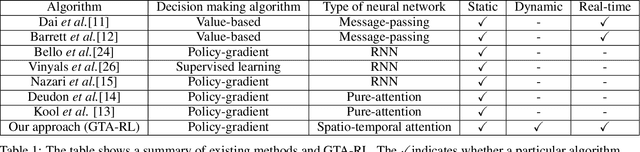

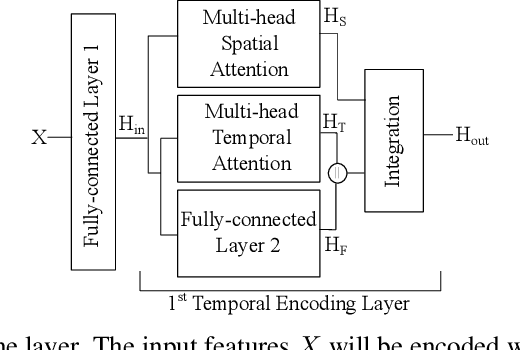

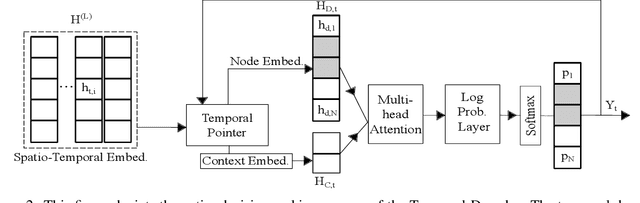

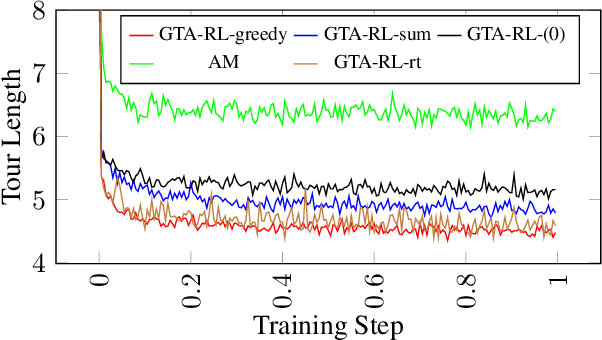

Abstract: Graph problems such as the traveling salesman problem or finding minimal Steiner trees are widely studied and used in data engineering and computer science. In real-world applications, the features of the graph typically change over time, which makes finding a solution challenging. Dynamic versions of many graph problems are key to a plethora of real-world problems in transportation, telecommunication, and social networks. In recent years, using deep learning techniques to find heuristic solutions for NP-hard graph combinatorial problems has gained much interest, as these learned heuristics can find near-optimal solutions efficiently. However, most existing methods for learning heuristics focus on static graph problems. The dynamic nature makes NP-hard graph problems much more challenging to learn, and existing methods fail to find reasonable solutions. In this paper, we propose a novel architecture named Graph Temporal Attention with Reinforcement Learning (GTA-RL) to learn heuristic solutions for graph-based dynamic combinatorial optimization problems. The GTA-RL architecture consists of an encoder that embeds the temporal features of a combinatorial problem instance and a decoder that dynamically focuses on the embedded features to find a solution to that instance. We then extend the architecture to learn heuristics for the real-time version of combinatorial optimization problems, where not all input features of a problem are known a priori but instead become known in real time. Our experiments against several state-of-the-art learning-based algorithms and optimal solvers show that our approach outperforms the learning-based approaches in effectiveness and the optimal solvers in efficiency on dynamic and real-time graph combinatorial optimization.
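The abstract describes a decoder that "dynamically focuses" on embedded features. As a rough illustration only, the sketch below shows a generic masked-attention decoding step for a tour-building problem: at each step the current node's embedding is the query, already visited nodes are masked out, and the node with the highest attention weight is chosen next. To reflect a dynamic graph, each step reads a per-step snapshot of node embeddings. There is no learned encoder or trained weights here; the embeddings are supplied directly, and the function names `masked_attention` and `greedy_decode` are hypothetical, not GTA-RL's actual design.

```python
import math


def masked_attention(query, keys, visited):
    """Scaled dot-product attention weights over nodes, with visited nodes masked out."""
    scale = math.sqrt(len(query))
    scores = [
        float("-inf") if seen else sum(q * k for q, k in zip(query, key)) / scale
        for key, seen in zip(keys, visited)
    ]
    best = max(scores)  # finite as long as at least one node is unvisited
    weights = [0.0 if s == float("-inf") else math.exp(s - best) for s in scores]
    total = sum(weights)
    return [w / total for w in weights]


def greedy_decode(embeddings_over_time, start=0):
    """Build a tour; embeddings_over_time[t][i] is node i's (hypothetical) embedding at step t."""
    n = len(embeddings_over_time[0])
    visited = [False] * n
    visited[start] = True
    tour = [start]
    for t in range(1, n):
        # Node features may change over time, so step t reads snapshot t
        # (reusing the last snapshot if fewer than n are given).
        emb = embeddings_over_time[min(t, len(embeddings_over_time) - 1)]
        probs = masked_attention(emb[tour[-1]], emb, visited)
        nxt = max(range(n), key=probs.__getitem__)
        visited[nxt] = True
        tour.append(nxt)
    return tour


snapshots = [
    [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]],  # step 0
    [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]],  # step 1
    [[1.0, 0.0], [0.2, 1.0], [0.0, 0.0]],  # step 2: node features have changed
]
print(greedy_decode(snapshots))  # [0, 2, 1]
```

Greedy selection is used only to keep the sketch deterministic; an RL-trained decoder would typically sample from these weights during training.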

Dynamic Graph Configuration with Reinforcement Learning for Connected Autonomous Vehicle Trajectories

Oct 14, 2019

Abstract: Traditional traffic optimization solutions assume that the graph structure of road networks is static, missing opportunities for further traffic flow optimization. We are interested in optimizing traffic flows as a new type of graph-based problem, where the graph structure of a road network can adapt to traffic conditions in real time. In particular, we focus on the dynamic configuration of traffic-lane directions, which can help balance the usage of traffic lanes in opposite directions. The rise of connected autonomous vehicles offers an opportunity to apply this type of dynamic traffic optimization at a large scale. However, existing techniques for optimizing lane directions are not suitable for dynamic traffic environments because of their high computational complexity and static nature. In this paper, we propose an efficient traffic optimization solution, called Coordinated Learning-based Lane Allocation (CLLA), which is suitable for dynamic configuration of lane directions. CLLA uses a two-layer multi-agent architecture, in which the bottom-layer agents use a machine learning technique to find a suitable configuration of lane directions around individual road intersections. The lane-direction changes proposed by the learning agents are then coordinated at a higher level to reduce their negative impact on other parts of the road network. Our experimental results show that CLLA can significantly reduce the average travel time in congested road networks. We believe our method is general enough to be applied to other types of networks as well.
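To make the two-layer idea concrete, here is a minimal sketch of what a higher-level coordination step could look like. It assumes each bottom-layer agent has already produced a lane-direction proposal with an estimated local gain and an estimated cost to the rest of the network (the learning that produces these numbers is not modeled). The coordinator then accepts proposals greedily by net benefit and allows at most one change per road segment, so two intersections cannot reconfigure the same road in conflicting ways. The `LaneProposal` fields and this conflict rule are assumptions for illustration, not CLLA's actual coordination mechanism.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LaneProposal:
    """A lane-direction change suggested by a bottom-layer (intersection-level) agent."""
    intersection: str     # agent that made the proposal
    road: str             # road segment whose lane directions would change
    lanes_forward: int    # proposed number of lanes in the segment's forward direction
    local_gain: float     # agent's estimated benefit near its own intersection (assumed given)
    external_cost: float  # assumed estimate of the harm to other parts of the network


def coordinate(proposals):
    """Upper layer: accept at most one net-beneficial proposal per road, best first."""
    accepted = {}
    ranked = sorted(proposals, key=lambda p: p.local_gain - p.external_cost, reverse=True)
    for p in ranked:
        if p.local_gain - p.external_cost <= 0:
            break  # proposals are sorted, so none of the remaining ones helps either
        if p.road not in accepted:  # the best proposal for a road wins any conflict
            accepted[p.road] = p
    return accepted


proposals = [
    LaneProposal("A", "r1", lanes_forward=3, local_gain=5.0, external_cost=1.0),
    LaneProposal("B", "r1", lanes_forward=1, local_gain=2.0, external_cost=0.5),
    LaneProposal("B", "r2", lanes_forward=2, local_gain=1.0, external_cost=3.0),
]
plan = coordinate(proposals)
print({road: p.lanes_forward for road, p in plan.items()})  # {'r1': 3}
```

Here intersection B's change to `r1` is dropped because A's conflicting change on the same road has a larger net benefit, and B's change to `r2` is rejected because its estimated cost elsewhere outweighs its local gain.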
