Tucker Hybinette Balch

Collusion Resistant Federated Learning with Oblivious Distributed Differential Privacy

Feb 20, 2022

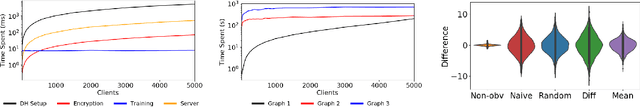

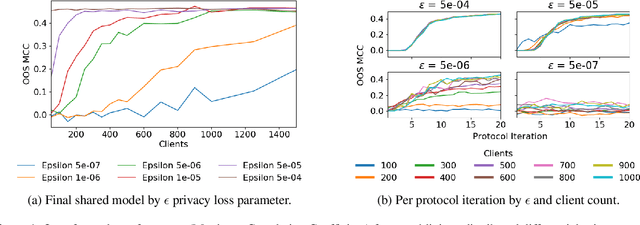

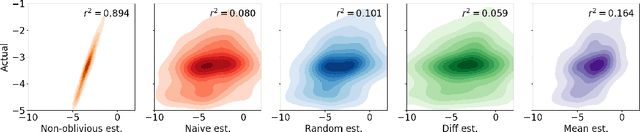

Abstract: Privacy-preserving federated learning enables a population of distributed clients to jointly learn a shared model while keeping client training data private, even from an untrusted server. Prior works do not provide efficient solutions that protect against collusion attacks in which parties collaborate to expose an honest client's model parameters. We present an efficient mechanism based on oblivious distributed differential privacy that is the first to protect against such client collusion, including the "Sybil" attack in which a server preferentially selects compromised devices or simulates fake devices. We leverage the novel privacy mechanism to construct a secure federated learning protocol and prove the security of that protocol. We conclude with empirical analysis of the protocol's execution speed, learning accuracy, and privacy performance on two data sets within a realistic simulation of 5,000 distributed network clients.

Intra-day Equity Price Prediction using Deep Learning as a Measure of Market Efficiency

Aug 22, 2019

Abstract: In finance, the weak form of the Efficient Market Hypothesis asserts that historic stock price and volume data cannot inform predictions of future prices. In this paper we show that, to the contrary, future intra-day stock prices could be predicted effectively until 2009. We demonstrate this using two different profitable machine learning-based trading strategies. However, the effectiveness of both approaches diminishes over time, and neither of them is profitable after 2009. We present our implementation and results in detail for the period 2003-2017 and propose a novel idea: the use of such flexible machine learning methods as an objective measure of relative market efficiency. We conclude with a candidate explanation, comparing our returns over time with high-frequency trading volume, and suggest concrete steps for further investigation.
