Tsai-Ching Lu

Most Relevant Explanation in Bayesian Networks

Jan 16, 2014

Abstract: A major inference task in Bayesian networks is explaining why some variables are observed in their particular states using a set of target variables. Existing methods for solving this problem often generate explanations that are either too simple (underspecified) or too complex (overspecified). In this paper, we introduce a method called Most Relevant Explanation (MRE) which finds a partial instantiation of the target variables that maximizes the generalized Bayes factor (GBF) as the best explanation for the given evidence. Our study shows that GBF has several theoretical properties that enable MRE to automatically identify the most relevant target variables in forming its explanation. In particular, conditional Bayes factor (CBF), defined as the GBF of a new explanation conditioned on an existing explanation, provides a soft measure of the degree of relevance of the variables in the new explanation in explaining the evidence given the existing explanation. As a result, MRE is able to automatically prune less relevant variables from its explanation. We also show that CBF is able to capture well the explaining-away phenomenon that is often represented in Bayesian networks. Moreover, we define two dominance relations between the candidate solutions and use the relations to generalize MRE to find a set of top explanations that is both diverse and representative. Case studies on several benchmark diagnostic Bayesian networks show that MRE is often able to find explanatory hypotheses that are not only precise but also concise.
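As a rough illustration of the idea in this abstract, the sketch below scores every partial instantiation of two target variables by its generalized Bayes factor, taken here as GBF(x; e) = P(e | x) / P(e | not x), and returns the highest-scoring one. The toy network (independent binary causes `A` and `B` with a common effect `E`), all probabilities, and the function names are assumptions made up for this example; the exhaustive search is only practical for tiny models and is not the authors' algorithm.

```python
from itertools import combinations, product

# Hypothetical toy model (not from the paper): two independent binary
# causes A and B, and one observed effect E.
P_A = {1: 0.1, 0: 0.9}
P_B = {1: 0.2, 0: 0.8}
# P(E=1 | A, B), keyed by (a, b)
P_E1 = {(1, 1): 0.95, (1, 0): 0.9, (0, 1): 0.3, (0, 0): 0.01}
TARGETS = ("A", "B")


def prob(assign, e=None):
    """Return P(assign), or P(assign, E=e) when e is given.

    Target variables missing from `assign` are summed out.
    """
    total = 0.0
    for a, b in product((0, 1), repeat=2):
        full = {"A": a, "B": b}
        if any(full[k] != v for k, v in assign.items()):
            continue
        p = P_A[a] * P_B[b]
        if e is not None:
            p *= P_E1[(a, b)] if e == 1 else 1.0 - P_E1[(a, b)]
        total += p
    return total


def gbf(x, e=1):
    """Generalized Bayes factor P(e | x) / P(e | not x)."""
    p_x = prob(x)
    p_xe = prob(x, e)
    p_e = prob({}, e)
    p_e_given_x = p_xe / p_x
    p_e_given_not_x = (p_e - p_xe) / (1.0 - p_x)
    return p_e_given_x / p_e_given_not_x


def mre(e=1):
    """Brute-force search over all non-empty partial instantiations."""
    best = None
    for r in range(1, len(TARGETS) + 1):
        for names in combinations(TARGETS, r):
            for values in product((0, 1), repeat=r):
                x = dict(zip(names, values))
                score = gbf(x, e)
                if best is None or score > best[1]:
                    best = (x, score)
    return best


if __name__ == "__main__":
    explanation, score = mre()
    print(explanation, round(score, 2))
```

With these made-up numbers, the single-variable explanation `{"A": 1}` scores higher than the full instantiation `{"A": 1, "B": 1}`, which mirrors the pruning of less relevant variables that the abstract describes.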

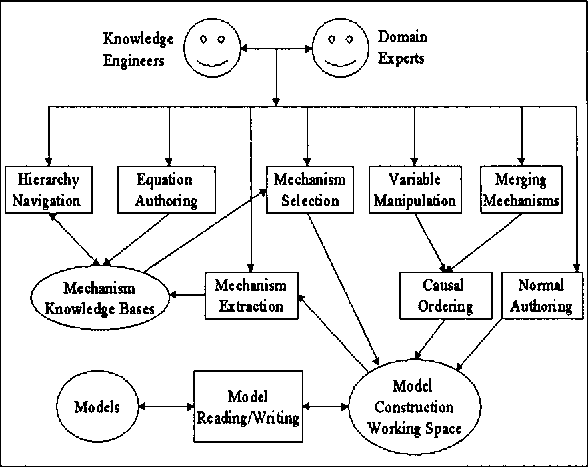

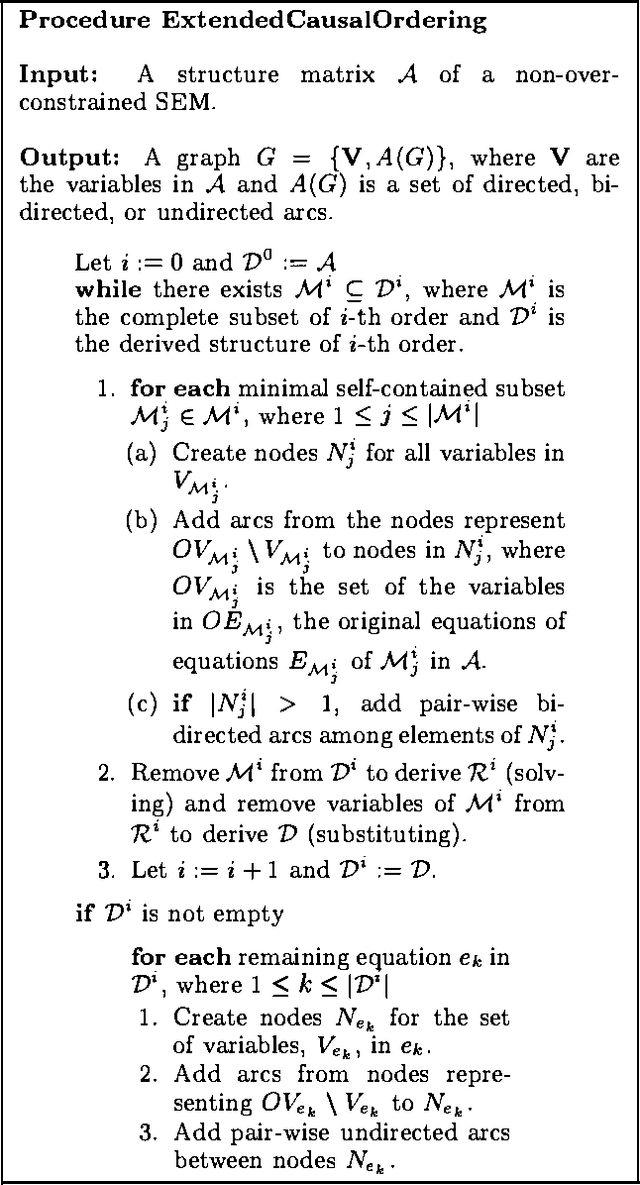

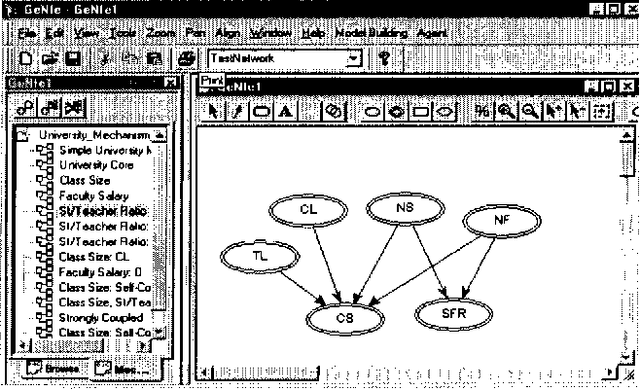

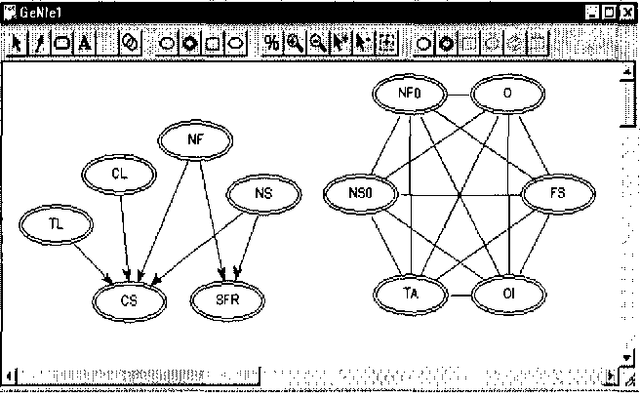

Causal Mechanism-based Model Construction

Jan 16, 2013

Abstract: We propose a framework for building graphical causal models that is based on the concept of causal mechanisms. Causal models are intuitive for human users and, more importantly, support the prediction of the effect of manipulation. We describe an implementation of the proposed framework as an interactive model construction module, ImaGeNIe, in SMILE (Structural Modeling, Inference, and Learning Engine) and in GeNIe (SMILE's Windows user interface).

Annealed MAP

Jul 11, 2012

Abstract: Maximum a Posteriori assignment (MAP) is the problem of finding the most probable instantiation of a set of variables given partial evidence on the other variables in a Bayesian network. MAP has been shown to be an NP-hard problem [22], even for constrained networks, such as polytrees [18]. Hence, previous approaches often fail to yield any results for MAP problems in large complex Bayesian networks. To address this problem, we propose the AnnealedMAP algorithm, a simulated annealing-based MAP algorithm. The AnnealedMAP algorithm simulates a non-homogeneous Markov chain whose invariant function is a probability density that concentrates itself on the modes of the target density. We tested this algorithm on several real Bayesian networks. The results show that, while maintaining good quality of the MAP solutions, the AnnealedMAP algorithm is also able to solve many problems that are beyond the reach of previous approaches.
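The general idea of annealing toward a MAP assignment can be sketched as follows. This is a generic simulated-annealing loop, not the AnnealedMAP algorithm itself: the chain network (`X1 -> X2 -> X3 -> E`), its probabilities, the geometric cooling schedule, and every function name are assumptions invented for illustration. The MAP variables are `X1` and `X3`, `X2` is summed out, and `E=1` is the evidence.

```python
import random
from itertools import product

# Hypothetical chain X1 -> X2 -> X3 -> E with made-up parameters.
P_X1 = {1: 0.4, 0: 0.6}
P_X2_1 = {1: 0.8, 0: 0.1}  # P(X2=1 | X1)
P_X3_1 = {1: 0.7, 0: 0.2}  # P(X3=1 | X2)
P_E_1 = {1: 0.9, 0: 0.05}  # P(E=1 | X3)


def bern(p_one, value):
    """Probability of `value` for a binary variable with P(1) = p_one."""
    return p_one if value == 1 else 1.0 - p_one


def score(x1, x3):
    """P(X1=x1, X3=x3, E=1), with the hidden variable X2 summed out."""
    total = 0.0
    for x2 in (0, 1):
        total += P_X1[x1] * bern(P_X2_1[x1], x2) * bern(P_X3_1[x2], x3)
    return total * P_E_1[x3]


def anneal_map(steps=2000, t_start=1.0, t_end=0.05, seed=0):
    """Simulated annealing over assignments to the MAP variables (X1, X3)."""
    rng = random.Random(seed)
    current = (0, 0)
    best = current
    for i in range(steps):
        # Geometric cooling from t_start down to t_end (an assumed schedule).
        t = t_start * (t_end / t_start) ** (i / (steps - 1))
        # Propose flipping one randomly chosen MAP variable.
        idx = rng.randrange(2)
        proposal = list(current)
        proposal[idx] = 1 - proposal[idx]
        proposal = tuple(proposal)
        # Accept with probability min(1, (p_new / p_old) ** (1 / t)).
        ratio = score(*proposal) / score(*current)
        if ratio >= 1.0 or rng.random() < ratio ** (1.0 / t):
            current = proposal
        if score(*current) > score(*best):
            best = current
    return best


if __name__ == "__main__":
    print(anneal_map())
```

As the temperature falls, the acceptance rule increasingly rejects downhill moves, so the chain concentrates on high-probability assignments. On a problem this small, exhaustive enumeration is of course cheaper; the loop is only meant to show the mechanics.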

Most Relevant Explanation: Properties, Algorithms, and Evaluations

May 09, 2012

Abstract: Most Relevant Explanation (MRE) is a method for finding multivariate explanations for given evidence in Bayesian networks [12]. This paper studies the theoretical properties of MRE and develops an algorithm for finding multiple top MRE solutions. Our study shows that MRE relies on an implicit soft relevance measure in automatically identifying the most relevant target variables and pruning less relevant variables from an explanation. The soft measure also enables MRE to capture the intuitive phenomenon of explaining away encoded in Bayesian networks. Furthermore, our study shows that the solution space of MRE has a special lattice structure which yields interesting dominance relations among the solutions. A K-MRE algorithm based on these dominance relations is developed for generating a set of top solutions that are more representative. Our empirical results show that MRE methods are promising approaches for explanation in Bayesian networks.
