Trung-Dung Hoang

Label-free estimation of clinically relevant performance metrics under distribution shifts

Jul 30, 2025

Abstract: Performance monitoring is essential for safe clinical deployment of image classification models. However, because ground-truth labels are typically unavailable in the target dataset, direct assessment of real-world model performance is infeasible. State-of-the-art performance estimation methods address this by leveraging confidence scores to estimate the target accuracy. Despite being a promising direction, the established methods mainly estimate the model's accuracy and are rarely evaluated in a clinical domain, where strong class imbalances and dataset shifts are common. Our contributions are twofold: First, we introduce generalisations of existing performance prediction methods that directly estimate the full confusion matrix. Then, we benchmark their performance on chest x-ray data in real-world distribution shifts as well as simulated covariate and prevalence shifts. The proposed confusion matrix estimation methods reliably predicted clinically relevant counting metrics on medical images under distribution shifts. However, our simulated shift scenarios exposed important failure modes of current performance estimation techniques, calling for a better understanding of real-world deployment contexts when implementing these performance monitoring techniques for postmarket surveillance of medical AI models.
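To make the idea of label-free confusion matrix estimation concrete, here is a minimal sketch. It is not the paper's method: it assumes the classifier's softmax outputs are well calibrated on the target data, and it simply accumulates each sample's class probabilities into the column of its predicted class. The function name `estimate_confusion_matrix` and the toy probabilities are hypothetical.

```python
import numpy as np

def estimate_confusion_matrix(probs):
    """Estimate a confusion matrix from confidence scores alone.

    probs: array of shape (n_samples, n_classes) with predicted class
    probabilities, ASSUMED to be calibrated (an assumption of this sketch).
    Returns a matrix whose entry [i, j] is the expected number of samples
    with true class i that the model predicts as class j.
    """
    probs = np.asarray(probs, dtype=float)
    n_classes = probs.shape[1]
    preds = probs.argmax(axis=1)           # hard predictions
    one_hot = np.eye(n_classes)[preds]     # shape (n_samples, n_classes)
    # Sum p(y = i | x) over all samples predicted as class j.
    return probs.T @ one_hot

# Toy example: three unlabeled target samples, two classes.
toy_probs = [[0.9, 0.1], [0.2, 0.8], [0.6, 0.4]]
cm = estimate_confusion_matrix(toy_probs)

# Counting metrics follow from the estimated matrix.
estimated_accuracy = np.trace(cm) / cm.sum()
estimated_sensitivity = cm[1, 1] / cm[1, :].sum()  # recall for class 1
```

If the calibration assumption fails under shift, the estimate degrades accordingly, which is consistent with the failure modes the abstract mentions for simulated shift scenarios.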

A Real-Time Digital Twin for Type 1 Diabetes using Simulation-Based Inference

Jul 02, 2025

Abstract: Accurately estimating parameters of physiological models is essential to achieving reliable digital twins. For Type 1 Diabetes, this is particularly challenging due to the complexity of glucose-insulin interactions. Traditional methods based on Markov Chain Monte Carlo struggle with high-dimensional parameter spaces and fit parameters from scratch at inference time, making them slow and computationally expensive. In this study, we propose a Simulation-Based Inference approach based on Neural Posterior Estimation to efficiently capture the complex relationships between meal intake, insulin, and glucose level, providing faster, amortized inference. Our experiments demonstrate that SBI not only outperforms traditional methods in parameter estimation but also generalizes better to unseen conditions, offering real-time posterior inference with reliable uncertainty quantification.

Subgroup Performance Analysis in Hidden Stratifications

Mar 13, 2025

Abstract: Machine learning (ML) models may suffer from significant performance disparities between patient groups. Identifying such disparities by monitoring performance at a granular level is crucial for safely deploying ML to each patient. Traditional subgroup analysis based on metadata can expose performance disparities only if the available metadata (e.g., patient sex) sufficiently reflects the main reasons for performance variability, which is not common. Subgroup discovery techniques that identify cohesive subgroups based on learned feature representations appear as a potential solution: They could expose hidden stratifications and provide more granular subgroup performance reports. However, subgroup discovery is challenging to evaluate even as a standalone task, as ground truth stratification labels do not exist in real data. Subgroup discovery has thus neither been applied nor evaluated for the application of subgroup performance monitoring. Here, we apply subgroup discovery for performance monitoring in chest x-ray and skin lesion classification. We propose novel evaluation strategies and show that a simplified subgroup discovery method without access to classification labels or metadata can expose larger performance disparities than traditional metadata-based subgroup analysis. We provide the first compelling evidence that subgroup discovery can serve as an important tool for comprehensive performance validation and monitoring of trustworthy AI in medicine.

Learning to Discretize Denoising Diffusion ODEs

May 24, 2024

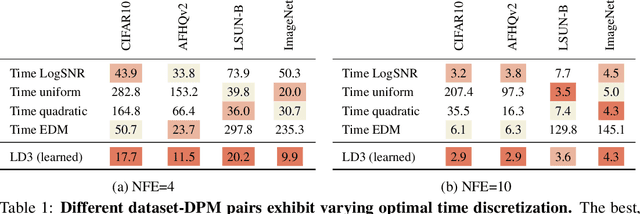

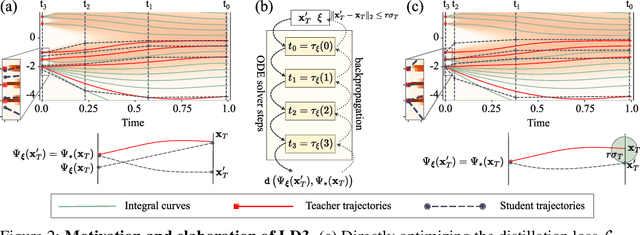

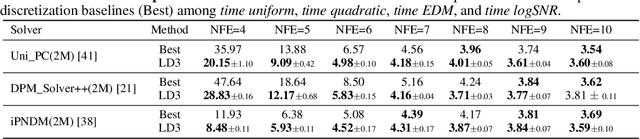

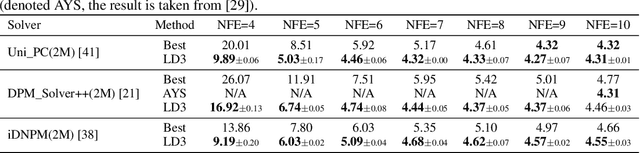

Abstract: Diffusion Probabilistic Models (DPMs) are powerful generative models showing competitive performance in various domains, including image synthesis and 3D point cloud generation. However, sampling from pre-trained DPMs involves multiple neural function evaluations (NFE) to transform Gaussian noise samples into images, resulting in higher computational costs compared to single-step generative models such as GANs or VAEs. Therefore, a crucial problem is to reduce NFE while preserving generation quality. To this end, we propose LD3, a lightweight framework for learning time discretization while sampling from the diffusion ODE encapsulated by DPMs. LD3 can be combined with various diffusion ODE solvers and consistently improves performance without retraining resource-intensive neural networks. We demonstrate analytically and empirically that LD3 enhances sampling efficiency compared to distillation-based methods, without the extensive computational overhead. We evaluate our method with extensive experiments on 5 datasets, covering unconditional and conditional sampling in both pixel-space and latent-space DPMs. For example, in about 5 minutes of training on a single GPU, our method reduces the FID score from 6.63 to 2.68 on CIFAR10 (7 NFE), and in around 20 minutes, decreases the FID from 8.51 to 5.03 on class-conditional ImageNet-256 (5 NFE). LD3 complements distillation methods, offering a more efficient approach to sampling from pre-trained diffusion models.
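As a rough illustration of what "time discretization" means for an ODE solver, the sketch below runs an explicit Euler solver over an arbitrary time grid. It is not LD3 and involves no diffusion model: the toy ODE dx/dt = -x, the function name `euler_solve`, and both grids are assumptions chosen for illustration. The point is only that the grid is a free input to the solver, and each grid interval costs one function evaluation.

```python
import numpy as np

def euler_solve(f, x0, time_grid):
    """Integrate dx/dt = f(x, t) with explicit Euler over a given time grid.

    time_grid: 1-D sequence of time points; one evaluation of f is spent
    per interval, so len(time_grid) - 1 plays the role of the NFE budget.
    """
    x = float(x0)
    for t_cur, t_next in zip(time_grid[:-1], time_grid[1:]):
        x = x + (t_next - t_cur) * f(x, t_cur)
    return x

def toy_drift(x, t):
    # Toy stand-in for a learned drift; the exact solution is x0 * exp(-t).
    return -x

uniform_grid = np.linspace(0.0, 1.0, 5)        # 4 equal steps
custom_grid = np.array([0.0, 0.5, 1.0])        # 2 steps, hand-picked

x_uniform = euler_solve(toy_drift, 1.0, uniform_grid)
x_custom = euler_solve(toy_drift, 1.0, custom_grid)
exact = np.exp(-1.0)
```

Under a fixed step budget, different grids give different errors against `exact`. A learned discretization would treat the interior grid points as trainable parameters rather than fixing them by hand.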
