Trung Quang Tran

CAP-GAN: Towards Adversarial Robustness with Cycle-consistent Attentional Purification

Feb 17, 2021

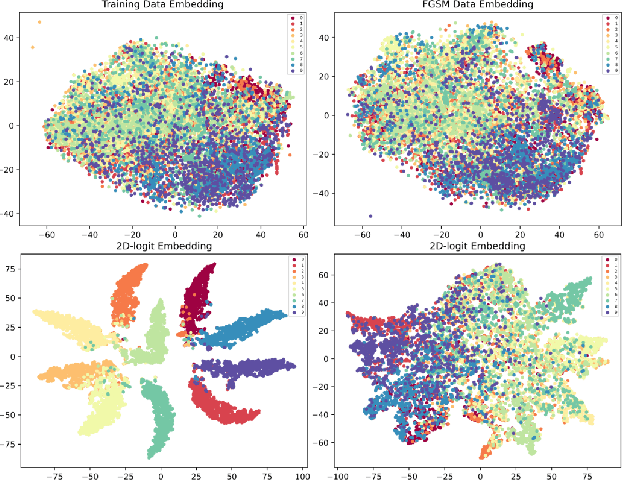

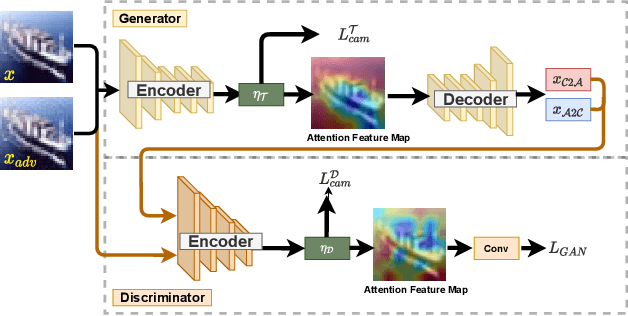

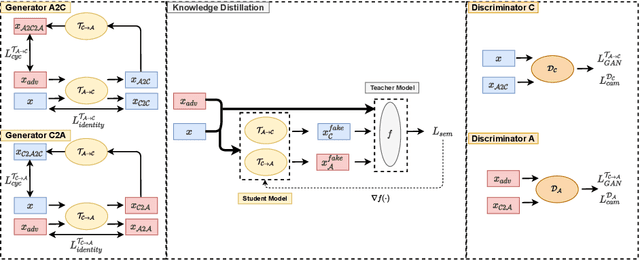

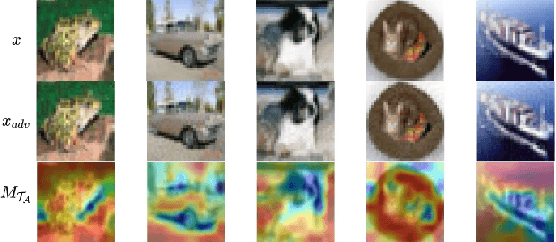

Abstract: Adversarial attacks aim to fool a target classifier with imperceptible perturbations. Adversarial examples, which are carefully crafted with malicious intent, can lead to erroneous predictions and, in turn, to catastrophic accidents. To mitigate the effects of adversarial attacks, we propose a novel purification model called CAP-GAN. CAP-GAN takes into account both pixel-level and feature-level consistency to achieve reasonable purification under cycle-consistent learning. Specifically, we utilize a guided attention module and knowledge distillation to convey meaningful information to the purification model. Once the model is fully trained, inputs are projected into the purification model and transformed into clean-like images. We vary the capacity of the adversary to evaluate robustness against various types of attack strategies. On the CIFAR-10 dataset, CAP-GAN outperforms other pre-processing-based defenses under both black-box and white-box settings.
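The abstract describes CAP-GAN as a pre-processing defense: an input is purified first, only the purified image reaches the classifier, and training is guided by pixel-level and feature-level consistency. The minimal sketch below illustrates those two ideas under stated assumptions: images and features are flat lists of floats, and the helper names (`defended_predict`, `consistency_loss`), the L1 pixel term, the squared-error feature term, and the weight `lam` are hypothetical choices for illustration, not the architecture or loss used in the paper.

```python
# Hypothetical sketch, not the CAP-GAN implementation: images and features are
# flat lists of floats, and the loss terms and weight `lam` are assumptions.
from typing import Callable, List

Vector = List[float]


def defended_predict(x: Vector,
                     purifier: Callable[[Vector], Vector],
                     classifier: Callable[[Vector], int]) -> int:
    """Pre-processing defense: purify the input, then classify the result."""
    return classifier(purifier(x))


def l1(a: Vector, b: Vector) -> float:
    """Mean absolute difference (an assumed pixel-level consistency term)."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def mse(a: Vector, b: Vector) -> float:
    """Mean squared difference (an assumed feature-level consistency term)."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def consistency_loss(purified: Vector, clean: Vector,
                     feat_purified: Vector, feat_clean: Vector,
                     lam: float = 1.0) -> float:
    """Pixel-level consistency plus `lam` times feature-level consistency."""
    return l1(purified, clean) + lam * mse(feat_purified, feat_clean)


# Toy stand-ins: clipping to [0, 1] plays the purifier, and a threshold on the
# pixel sum plays the classifier.
def clip(x: Vector) -> Vector:
    return [min(max(v, 0.0), 1.0) for v in x]


def threshold_classifier(x: Vector) -> int:
    return int(sum(x) > 1.0)


print(defended_predict([1.5, 0.2], clip, threshold_classifier))  # 1
print(consistency_loss([0.0, 0.5, 1.0], [0.0, 0.5, 0.5],
                       [1.0, 2.0], [1.0, 0.0], lam=0.5))
```

Keeping the purifier and classifier as separate callables mirrors the pre-processing framing: the classifier itself is left unchanged, and robustness comes only from what the purifier does to the input.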

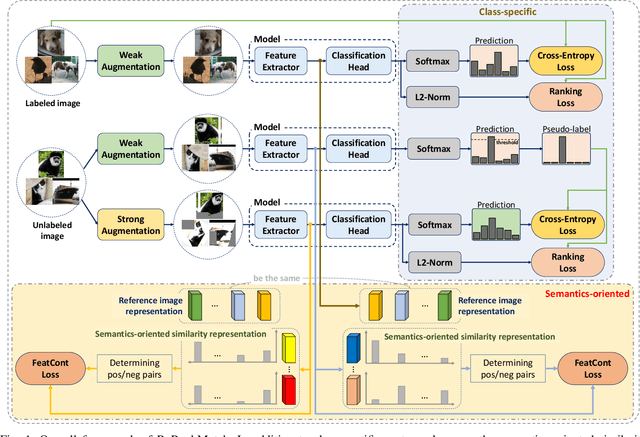

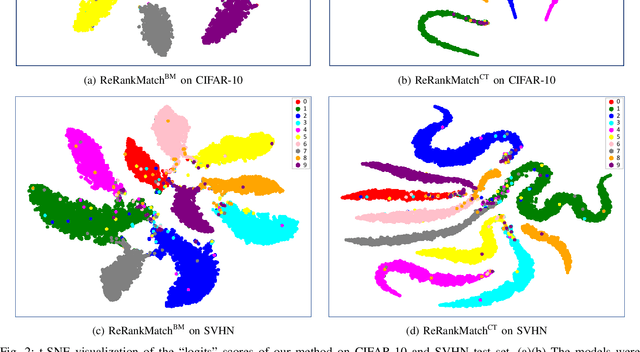

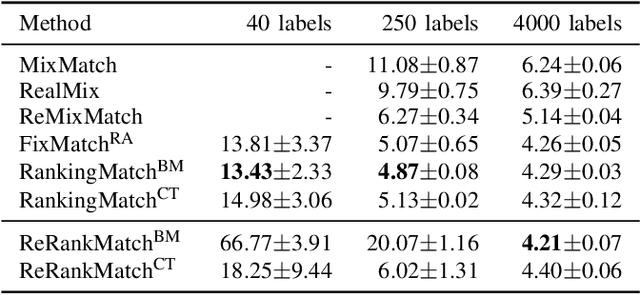

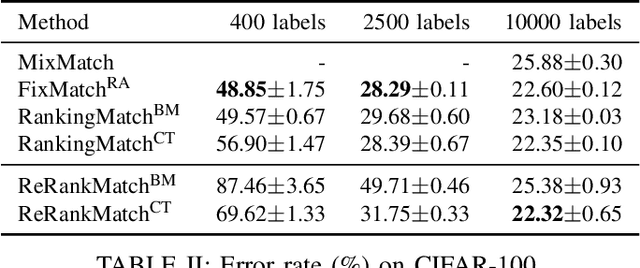

ReRankMatch: Semi-Supervised Learning with Semantics-Oriented Similarity Representation

Feb 12, 2021

Abstract: This paper proposes integrating semantics-oriented similarity representation into RankingMatch, a recently proposed semi-supervised learning method. Our method, dubbed ReRankMatch, aims to handle the case in which labeled and unlabeled data have non-overlapping categories. ReRankMatch encourages the model to produce similar image representations for samples likely to belong to the same class. We evaluate our method on various datasets, including CIFAR-10, CIFAR-100, SVHN, STL-10, and Tiny ImageNet. We obtain promising results (4.21% error rate on CIFAR-10 with 4000 labels, 22.32% error rate on CIFAR-100 with 10000 labels, and 2.19% error rate on SVHN with 1000 labels) when the amount of labeled data is sufficient to learn semantics-oriented similarity representation.
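ReRankMatch's stated goal is to make the representations of samples that likely belong to the same class similar to each other. The sketch below shows one generic way to express that goal, assuming that "likely the same class" means both samples have a confident pseudo-label (maximum predicted probability at or above a hypothetical `threshold`) and that the two pseudo-labels agree. The `1 - cosine similarity` penalty and the function names are illustrative assumptions, not the loss defined in the paper.

```python
# Hypothetical sketch, not the ReRankMatch loss: pull together embeddings of
# sample pairs whose confident pseudo-labels agree. Assumes nonzero embeddings.
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity of two nonzero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def argmax(p: Sequence[float]) -> int:
    """Index of the largest probability (the pseudo-label)."""
    return max(range(len(p)), key=lambda k: p[k])


def pair_similarity_loss(embeddings: List[List[float]],
                         probs: List[List[float]],
                         threshold: float = 0.9) -> float:
    """Average (1 - cosine) over pairs whose confident pseudo-labels agree."""
    total, count = 0.0, 0
    n = len(embeddings)
    for i in range(n):
        for j in range(i + 1, n):
            # Skip pairs where either prediction is not confident enough.
            if max(probs[i]) < threshold or max(probs[j]) < threshold:
                continue
            # Only pairs predicted to share a class are pulled together.
            if argmax(probs[i]) != argmax(probs[j]):
                continue
            total += 1.0 - cosine(embeddings[i], embeddings[j])
            count += 1
    return total / count if count else 0.0


emb = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
probs = [[0.95, 0.05], [0.92, 0.08], [0.5, 0.5]]
print(pair_similarity_loss(emb, probs))  # 1.0
```

In the usage example, only the first two samples are confident and share a pseudo-label, and their embeddings are orthogonal, so the loss is maximal for that pair; the third sample is ignored because its prediction falls below the threshold.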
