Trent Lewis

Vis-CRF, A Classical Receptive Field Model for VISION

Nov 17, 2020

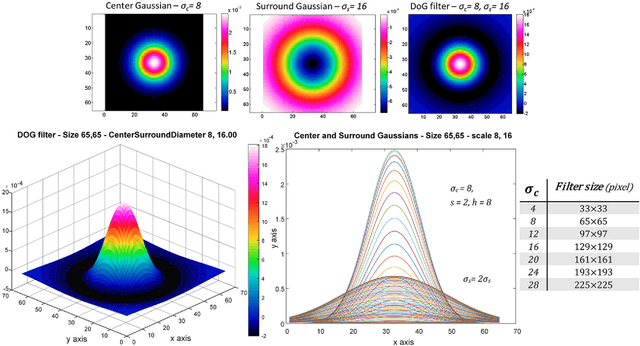

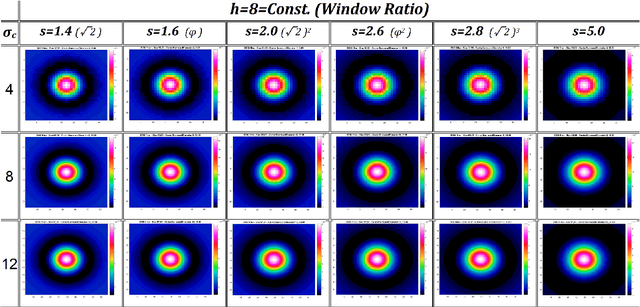

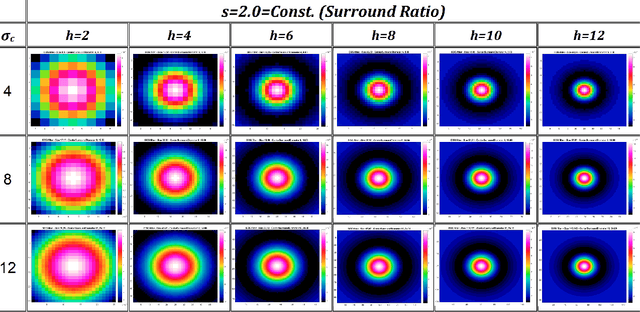

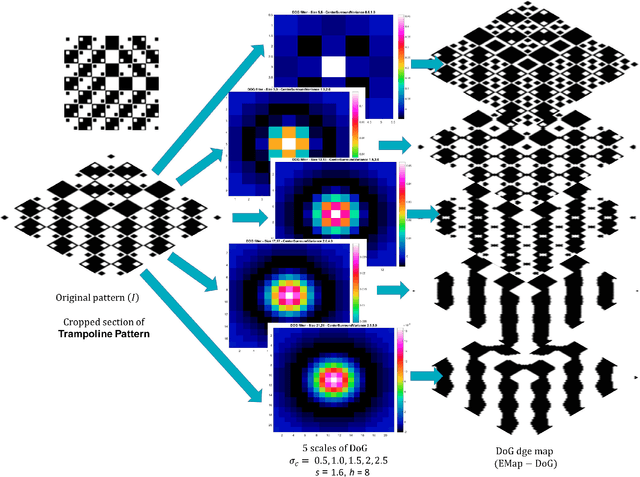

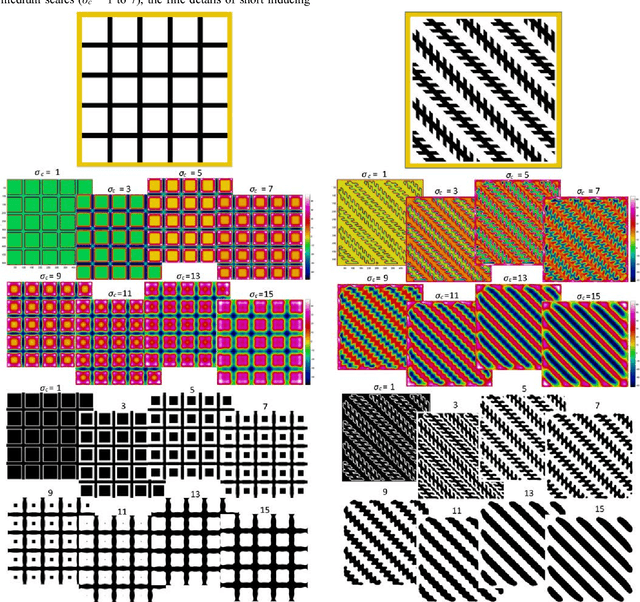

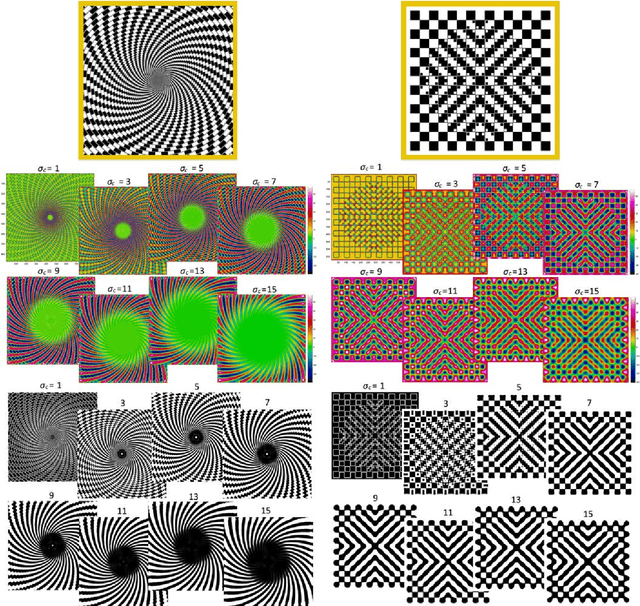

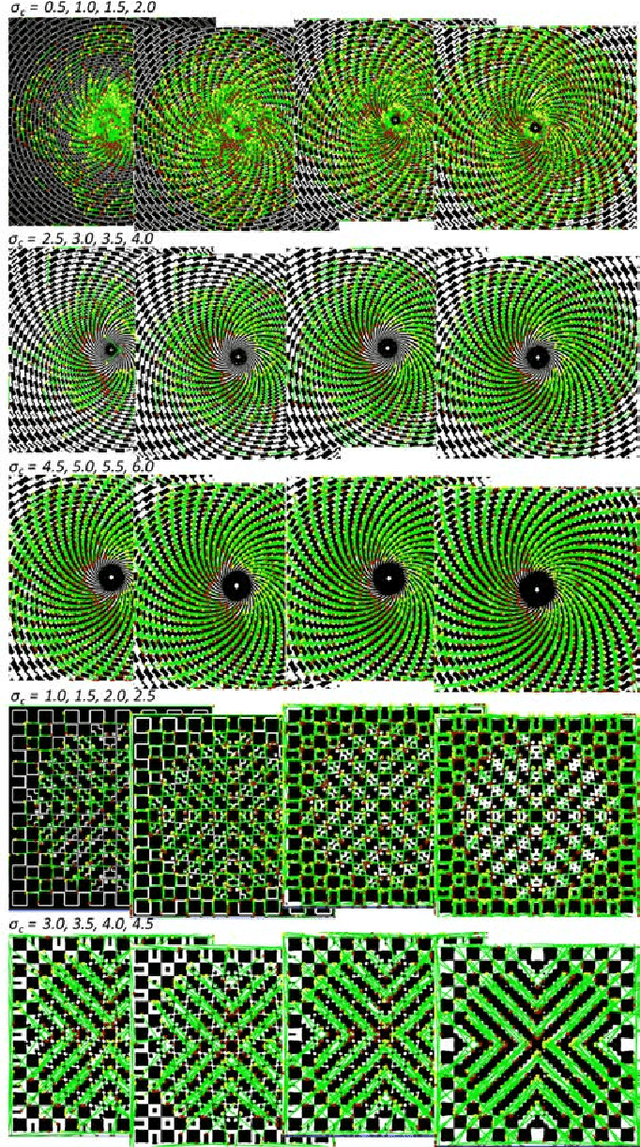

Abstract: Over the last decade, a variety of new neurophysiological experiments have led to new insights into how, when and where retinal processing takes place, and into the nature of the retinal encoding sent to the cortex for further processing. Based on these neurobiological discoveries, our previous work provided computer simulation evidence suggesting that geometrical illusions are explained, in part, by the interaction of multiscale visual processing performed in the retina. The output of our retinal stage model, named Vis-CRF, is presented here for a sample natural image and for several types of Tilt Illusion, in which the final tilt percept arises from multiscale processing by Difference of Gaussians (DoG) filters and the perceptual interaction of foreground and background elements (Nematzadeh and Powers, 2019; Nematzadeh, 2018; Nematzadeh, Powers and Lewis, 2017; Nematzadeh, Lewis and Powers, 2015).
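The abstract gives no implementation details for Vis-CRF. As a minimal sketch of the general idea of multiscale DoG processing, the following NumPy code builds a stack of DoG edge maps for a grayscale image. It assumes an isotropic centre Gaussian of scale σ_c and a surround Gaussian of scale 2σ_c (the `surround_ratio` of 2 and the list of scales are our assumptions). The function names are hypothetical, and this is not the Vis-CRF implementation.

```python
import numpy as np


def gaussian_kernel_1d(sigma: float) -> np.ndarray:
    """Normalised 1-D Gaussian sampled out to +/- 3 sigma."""
    radius = max(1, int(np.ceil(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-x**2 / (2 * sigma**2))
    return k / k.sum()


def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Isotropic Gaussian blur, done separably (rows, then columns) with edge padding."""
    k = gaussian_kernel_1d(sigma)
    r = len(k) // 2
    padded = np.pad(img.astype(float), r, mode="edge")

    def conv(v):
        # 'valid' mode trims the padding back off
        return np.convolve(v, k, mode="valid")

    rows = np.apply_along_axis(conv, 1, padded)  # shape (H + 2r, W)
    return np.apply_along_axis(conv, 0, rows)    # shape (H, W)


def dog_response(img, sigma_c, surround_ratio=2.0):
    """Centre Gaussian minus surround Gaussian: an ON-centre receptive field."""
    return gaussian_blur(img, sigma_c) - gaussian_blur(img, surround_ratio * sigma_c)


def multiscale_dog(img, sigmas, surround_ratio=2.0):
    """Stack of DoG edge maps, one per centre scale (hypothetical helper)."""
    return np.stack([dog_response(img, s, surround_ratio) for s in sigmas])


# Usage: a bright square on a dark background.
img = np.zeros((64, 64))
img[16:48, 16:48] = 1.0
stack = multiscale_dog(img, sigmas=[1.0, 2.0, 3.0])
print(stack.shape)                                  # (3, 64, 64)
print(stack[0, 32, 16] > 0, stack[0, 32, 15] < 0)   # True True
```

Each slice of `stack` is the edge map at one scale. The positive response just inside the square's border and the negative response just outside it are the centre-surround signature. Blurring is done separably because a 2-D isotropic Gaussian is the product of two 1-D Gaussians, which keeps the sketch short and dependency-free beyond NumPy.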

Informing Computer Vision with Optical Illusions

Feb 08, 2019

Abstract: Illusions are fascinating and immediately catch people's attention and interest, but they are also valuable for the insights they give into human cognition and perception. A good theory of human perception should be able to explain illusions, and a correct theory will actually give quantifiable results. We investigate here the efficiency of a computational filtering model, utilised for modelling the lateral inhibition of retinal ganglion cells, and their responses to a range of Geometric Illusions, using isotropic Difference of Gaussian (DoG) filters. This study explores the way in which illusions have been explained and shows how a simple standard model of vision based on classical receptive fields can predict the existence of these illusions as well as the degree of effect. A fundamental contribution of this work is to link bottom-up processes to higher-level perception and cognition, consistent with Marr's theory of vision and edge map representation.
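For reference, an isotropic DoG filter is commonly written as the difference between a narrow centre Gaussian and a wider surround Gaussian. Here σ is the centre scale, s > 1 is the surround-to-centre ratio, I is the input image and R_σ the filtered response; these symbols are our notation, not taken from the paper:

$$
\mathrm{DoG}_{\sigma,s}(x,y)=\frac{1}{2\pi\sigma^{2}}\,e^{-\frac{x^{2}+y^{2}}{2\sigma^{2}}}-\frac{1}{2\pi s^{2}\sigma^{2}}\,e^{-\frac{x^{2}+y^{2}}{2s^{2}\sigma^{2}}},
\qquad
R_{\sigma}(x,y)=\left(I*\mathrm{DoG}_{\sigma,s}\right)(x,y)
$$

Both Gaussians integrate to 1, so the filter integrates to 0: a uniform region of I produces no response, and only luminance changes such as edges do. This is the sense in which the surround inhibits the centre.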

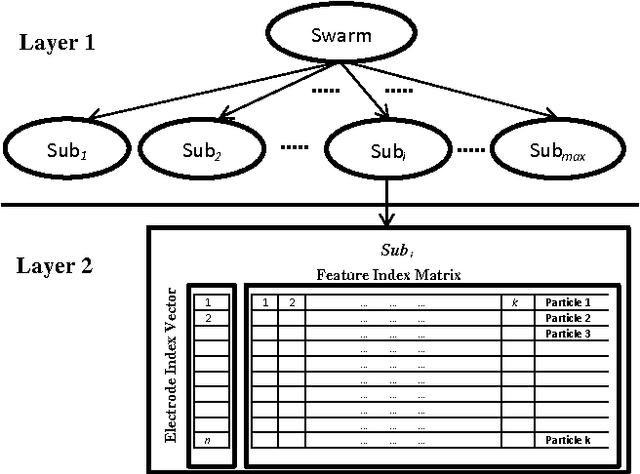

Reducing training requirements through evolutionary based dimension reduction and subject transfer

Feb 06, 2016

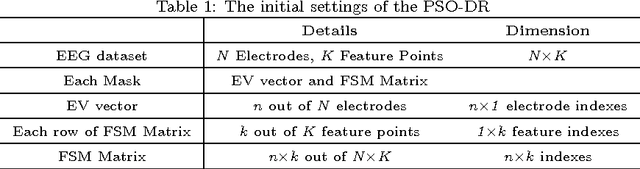

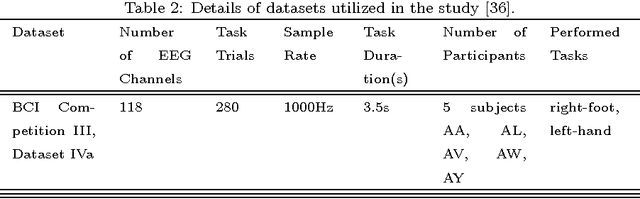

Abstract: Training Brain Computer Interface (BCI) systems to understand the intention of a subject through Electroencephalogram (EEG) data currently requires multiple training sessions with a subject to develop the expertise needed to distinguish signals for different tasks. Conventionally, the subject is trained in a training and calibration stage during which feedback is presented to the subject. This training session can take several hours, which is not appropriate for on-line EEG-based BCI systems. An alternative approach is to train the classifiers on previous recording sessions of the same person, or of other subjects who performed the same tasks (subject transfer). The main aim of this study is to develop a methodology that allows the use of data from other subjects while reducing the dimensionality of the data. The study investigates several possibilities for reducing the training and calibration period required for subjects and classifiers, and addresses the impact of i) evolutionary subject transfer and ii) adapting previously trained methods (retraining) using other subjects' data. Our results suggest that 40% of the target subject's data is sufficient for training the classifier. Our results also indicate the superiority of the approaches that incorporated evolutionary subject transfer and highlight the feasibility of adapting a system trained on other subjects.
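The abstract does not specify the evolutionary algorithm, features or classifier used. As a rough sketch under those gaps, the following NumPy code shows one common way to combine the two ideas: a genetic algorithm searches over binary feature masks (dimension reduction), while the classifier is trained on pooled data from hypothetical source subjects plus 40% of a hypothetical target subject's training trials (subject transfer). The synthetic data, the nearest-class-mean classifier, `evolve_mask` and all GA settings are illustrative assumptions, not the study's method.

```python
import numpy as np

N_FEATURES = 20
INFORMATIVE = 4  # hypothetical: only the first 4 features carry class information


def make_subject(rng, n, shift=0.0):
    """Synthetic two-class feature vectors for one (hypothetical) subject."""
    y = rng.integers(0, 2, size=n)
    X = rng.normal(0.0, 1.0, size=(n, N_FEATURES))
    # Class-dependent offset on the informative features, plus a subject-specific shift.
    X[:, :INFORMATIVE] += np.where(y[:, None] == 1, 1.5, -1.5) + shift
    return X, y


def nearest_centroid_accuracy(X_tr, y_tr, X_te, y_te, mask):
    """Accuracy of a nearest-class-mean classifier restricted to the masked features."""
    if not mask.any():
        return 0.0
    A, B = X_tr[:, mask], X_te[:, mask]
    centroids = np.stack([A[y_tr == c].mean(axis=0) for c in (0, 1)])
    dists = ((B[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return float((dists.argmin(axis=1) == y_te).mean())


def tournament(pop, scores, rng, k=3):
    """Return the fittest of k randomly chosen individuals."""
    idx = rng.choice(len(pop), size=k, replace=False)
    return pop[idx[scores[idx].argmax()]]


def evolve_mask(X_tr, y_tr, X_val, y_val, rng, pop_size=30, generations=25,
                mutation_rate=0.05, feature_penalty=0.01):
    """Genetic algorithm over binary feature masks (hypothetical settings)."""
    def fitness(mask):
        acc = nearest_centroid_accuracy(X_tr, y_tr, X_val, y_val, mask)
        return acc - feature_penalty * mask.sum()  # trade accuracy against dimensionality

    pop = rng.random((pop_size, N_FEATURES)) < 0.5
    for _ in range(generations):
        scores = np.array([fitness(m) for m in pop])
        children = [pop[scores.argmax()].copy()]  # elitism: keep the best mask
        while len(children) < pop_size:
            a, b = tournament(pop, scores, rng), tournament(pop, scores, rng)
            child = np.where(rng.random(N_FEATURES) < 0.5, a, b)  # uniform crossover
            child ^= rng.random(N_FEATURES) < mutation_rate       # bit-flip mutation
            children.append(child)
        pop = np.array(children)
    scores = np.array([fitness(m) for m in pop])
    best = pop[scores.argmax()]
    return best, nearest_centroid_accuracy(X_tr, y_tr, X_val, y_val, best)


rng = np.random.default_rng(0)
# Subject transfer: pool labelled trials from three other (source) subjects ...
sources = [make_subject(rng, 100, shift=s) for s in (-0.2, 0.0, 0.2)]
X_tgt, y_tgt = make_subject(rng, 150, shift=0.1)
n_keep = int(0.4 * 100)  # ... plus 40% of the target's 100 training trials
X_tr = np.vstack([X for X, _ in sources] + [X_tgt[:n_keep]])
y_tr = np.concatenate([y for _, y in sources] + [y_tgt[:n_keep]])
X_val, y_val = X_tgt[100:], y_tgt[100:]  # held-out target trials

best_mask, acc = evolve_mask(X_tr, y_tr, X_val, y_val, rng)
print(np.flatnonzero(best_mask), round(acc, 2))
```

The accuracy returned here is measured on the same held-out target trials the GA optimises against, so it is optimistic; a fair comparison would score the final mask on a further, untouched split. The `feature_penalty` term is what pushes the search toward smaller masks.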