Torsten Krauß

University of Würzburg

TwinBreak: Jailbreaking LLM Security Alignments based on Twin Prompts

Jun 09, 2025

Abstract: Machine learning is advancing rapidly, with applications bringing notable benefits, such as improvements in translation and code generation. Models like ChatGPT, powered by Large Language Models (LLMs), are increasingly integrated into daily life. However, alongside these benefits, LLMs also introduce social risks. Malicious users can exploit LLMs by submitting harmful prompts, such as requests for instructions for illegal activities. To mitigate this, models often include a security mechanism that automatically rejects such harmful prompts. However, this mechanism can be bypassed through LLM jailbreaks. Current jailbreaks often require significant manual effort, incur high computational costs, or result in excessive model modifications that may degrade regular utility. We introduce TwinBreak, an innovative safety alignment removal method. Building on the idea that the safety mechanism operates like an embedded backdoor, TwinBreak identifies and prunes the parameters responsible for this functionality. By focusing on the most relevant model layers, TwinBreak performs a fine-grained analysis of the parameters essential to model utility and safety. TwinBreak is the first method to analyze intermediate outputs from prompts with high structural and content similarity to isolate safety parameters. We present the TwinPrompt dataset containing 100 such twin prompts. Experiments confirm TwinBreak's effectiveness, achieving 89% to 98% success rates with minimal computational requirements across 16 LLMs from five vendors.
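The idea of comparing intermediate outputs for near-identical prompts can be illustrated with a toy sketch. This is not TwinBreak's actual procedure, which the abstract does not specify; it only assumes that per-unit activations are available for each harmful prompt and its harmless twin. The function names, the mean-absolute-gap score, and the toy numbers are all hypothetical.

```python
def rank_units_by_twin_gap(harmful_acts, harmless_acts):
    """Rank hidden units by how differently they react to the two twins.

    harmful_acts[i][j] is the activation of unit j for the harmful prompt of
    twin pair i; harmless_acts[i][j] is the same for its harmless twin.
    The scoring rule (mean absolute gap) is an illustrative assumption.
    """
    n_pairs = len(harmful_acts)
    n_units = len(harmful_acts[0])
    gaps = [
        sum(abs(harmful_acts[i][j] - harmless_acts[i][j]) for i in range(n_pairs)) / n_pairs
        for j in range(n_units)
    ]
    # Units with the largest gap come first.
    return sorted(range(n_units), key=lambda j: gaps[j], reverse=True)


def prune_mask(n_units, ranked, k):
    """Return a 0/1 mask that zeroes the k top-ranked units."""
    drop = set(ranked[:k])
    return [0.0 if j in drop else 1.0 for j in range(n_units)]


# Toy data: unit 1 reacts strongly only to the harmful twin.
harmful = [[0.1, 2.0, 0.5], [0.2, 1.8, 0.4]]
harmless = [[0.1, 0.1, 0.5], [0.25, 0.2, 0.45]]

ranked = rank_units_by_twin_gap(harmful, harmless)
mask = prune_mask(3, ranked, k=1)
print(ranked[0], mask)
```

Because the twins share structure and content, units that respond similarly to both are presumably tied to general utility, while a large gap hints at safety-specific behavior. That reading is the intuition the abstract suggests, not a verified property of the method.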

ClearMark: Intuitive and Robust Model Watermarking via Transposed Model Training

Oct 25, 2023

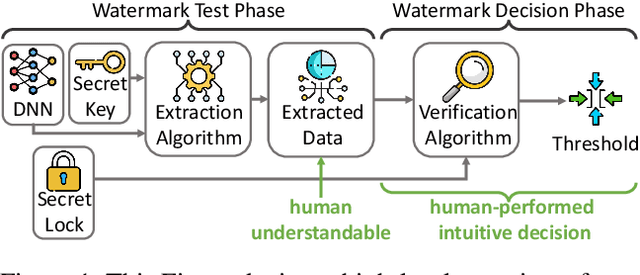

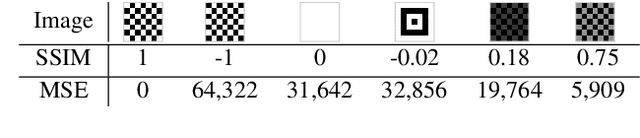

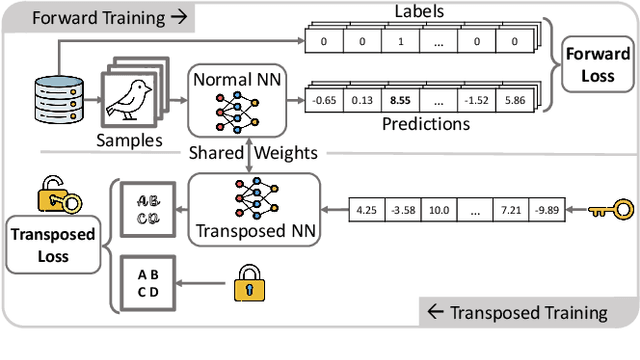

Abstract: Due to costly efforts during data acquisition and model training, Deep Neural Networks (DNNs) belong to the intellectual property of the model creator. Hence, unauthorized use, theft, or modification may lead to legal repercussions. Existing DNN watermarking methods for ownership proof are often non-intuitive, embed human-invisible marks, require trust in algorithmic assessment that lacks human-understandable attributes, and rely on rigid thresholds, making them susceptible to failure in cases of partial watermark erasure. This paper introduces ClearMark, the first DNN watermarking method designed for intuitive human assessment. ClearMark embeds visible watermarks, enabling human decision-making without rigid value thresholds while allowing technology-assisted evaluations. ClearMark defines a transposed model architecture that allows the model to be used in a backward fashion to interweave the watermark with the main task within all model parameters. Compared to existing watermarking methods, ClearMark produces visual watermarks that are easy for humans to understand without requiring complex verification algorithms or strict thresholds. The watermark is embedded within all model parameters and entangled with the main task, exhibiting superior robustness. It shows an 8,544-bit watermark capacity comparable to the strongest existing work. Crucially, ClearMark's effectiveness is model- and dataset-agnostic, and resilient against adversarial model manipulations, as demonstrated in a comprehensive study performed with four datasets and seven architectures.

Avoid Adversarial Adaption in Federated Learning by Multi-Metric Investigations

Jun 06, 2023

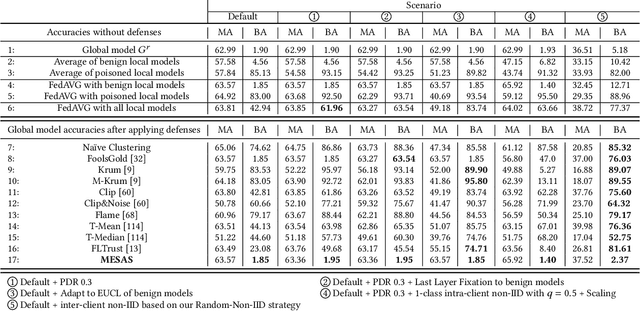

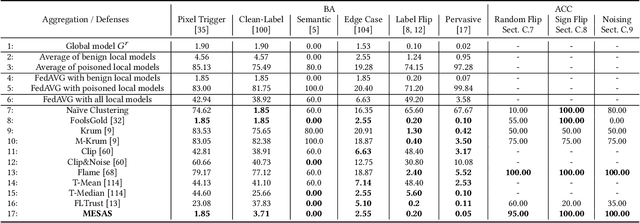

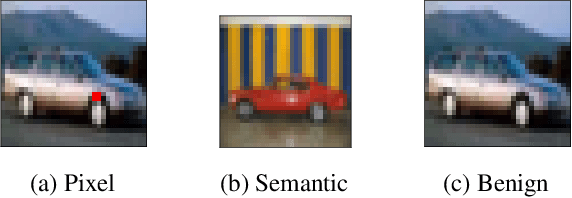

Abstract: Federated Learning (FL) trains machine learning models on data distributed across multiple devices, avoiding data transfer to a central location. This improves privacy, reduces communication costs, and enhances model performance. However, FL is prone to poisoning attacks, which can be untargeted, aiming to reduce the model performance, or targeted, so-called backdoors, which add adversarial behavior that can be triggered with appropriately crafted inputs. Because they strive for stealthiness, backdoor attacks are harder to deal with. Mitigation techniques against poisoning attacks rely on monitoring certain metrics and filtering malicious model updates. However, previous works did not consider real-world adversaries and data distributions. To support our statement, we define a new notion of strong adaptive adversaries that can simultaneously adapt to multiple objectives, and we demonstrate through extensive tests that existing defense methods can be circumvented in this adversary model. We also demonstrate that existing defenses have limited effectiveness when no assumptions are made about underlying data distributions. To address realistic scenarios and adversary models, we propose Metric-Cascades (MESAS), a new defense that leverages multiple detection metrics simultaneously for the filtering of poisoned model updates. This approach forces adaptive attackers into a heavy multi-objective optimization problem, and our evaluation with nine backdoors and three datasets shows that even our strong adaptive attacker cannot evade MESAS's detection. We show that MESAS outperforms existing defenses in distinguishing backdoors from distortions originating from different data distributions within and across the clients. Overall, MESAS is the first defense that is robust against strong adaptive adversaries and is effective in real-world data scenarios while introducing a low overhead of 24.37s on average.
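The general idea of filtering updates on several metrics at once can be sketched as follows. This is only an illustration under stated assumptions and not the MESAS algorithm: the abstract does not name its metrics or statistical tests, so the two metrics used here (L2 norm and cosine distance to the coordinate-wise median), the modified z-score rule, and the 3.5 threshold are all stand-ins chosen for the example.

```python
import math
from statistics import median


def l2_norm(v):
    return math.sqrt(sum(x * x for x in v))


def cosine_distance(a, b):
    na, nb = l2_norm(a), l2_norm(b)
    if na == 0 or nb == 0:
        return 1.0
    return 1.0 - sum(x * y for x, y in zip(a, b)) / (na * nb)


def mad_outliers(values, threshold=3.5):
    """Indices whose modified z-score (based on the median absolute deviation) exceeds threshold."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return {i for i, v in enumerate(values) if v != med}
    return {i for i, v in enumerate(values) if 0.6745 * abs(v - med) / mad > threshold}


def filter_updates(updates, threshold=3.5):
    """Return indices of updates that are not flagged by any metric."""
    dim = len(updates[0])
    # Robust reference direction: coordinate-wise median of all updates.
    reference = [median(u[i] for u in updates) for i in range(dim)]
    metrics = {
        "l2_norm": [l2_norm(u) for u in updates],
        "cosine_to_median": [cosine_distance(u, reference) for u in updates],
    }
    rejected = set()
    for values in metrics.values():
        rejected |= mad_outliers(values, threshold)
    return [i for i in range(len(updates)) if i not in rejected]


# Toy flattened updates: indices 0-4 are benign, 5 is scaled, 6 changes direction.
updates = [
    [1.0, 1.0], [1.1, 1.0], [1.0, 1.1], [0.85, 1.0], [1.0, 0.85],
    [10.0, 10.0],
    [1.0, -1.0],
]
kept = filter_updates(updates)
print(kept)
```

In this toy run the scaled update is caught only by the norm check and the redirected update only by the cosine check, which is why an attacker would have to stay inconspicuous on every metric at the same time.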

Close the Gate: Detecting Backdoored Models in Federated Learning based on Client-Side Deep Layer Output Analysis

Oct 14, 2022

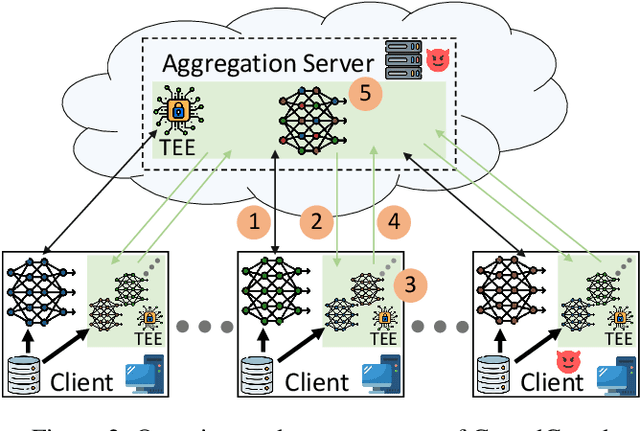

Abstract: Federated Learning (FL) is a scheme for collaboratively training Deep Neural Networks (DNNs) with multiple data sources from different clients. Instead of sharing the data, each client trains the model locally, resulting in improved privacy. However, recently so-called targeted poisoning attacks have been proposed that allow individual clients to inject a backdoor into the trained model. Existing defenses against these backdoor attacks either rely on techniques like Differential Privacy to mitigate the backdoor, or analyze the weights of the individual models and apply outlier detection methods, which restricts these defenses to certain data distributions. However, adding noise to the models' parameters or excluding benign outliers might also reduce the accuracy of the collaboratively trained model. Additionally, allowing the server to inspect the clients' models creates a privacy risk due to existing knowledge extraction methods. We propose CrowdGuard, a model filtering defense that mitigates backdoor attacks by leveraging the clients' data to analyze the individual models before the aggregation. To prevent data leaks, the server sends the individual models to secure enclaves running in client-located Trusted Execution Environments. To effectively distinguish benign and poisoned models, even if the data of different clients are not independently and identically distributed (non-IID), we introduce a novel metric called HLBIM to analyze the outputs of the DNN's hidden layers. We show that the applied significance-based detection algorithm can effectively detect poisoned models, even in non-IID scenarios.
