Toni Mancini

Optimizing Fault-Tolerant Quality-Guaranteed Sensor Deployments for UAV Localization in Critical Areas via Computational Geometry

Dec 05, 2023Abstract:The increasing spreading of small commercial Unmanned Aerial Vehicles (UAVs, aka drones) presents serious threats for critical areas such as airports, power plants, governmental and military facilities. In fact, such UAVs can easily disturb or jam radio communications, collide with other flying objects, perform espionage activity, and carry offensive payloads, e.g., weapons or explosives. A central problem when designing surveillance solutions for the localization of unauthorized UAVs in critical areas is to decide how many triangulating sensors to use, and where to deploy them to optimise both coverage and cost effectiveness. In this article, we compute deployments of triangulating sensors for UAV localization, optimizing a given blend of metrics, namely: coverage under multiple sensing quality levels, cost-effectiveness, fault-tolerance. We focus on large, complex 3D regions, which exhibit obstacles (e.g., buildings), varying terrain elevation, different coverage priorities, constraints on possible sensors placement. Our novel approach relies on computational geometry and statistical model checking, and enables the effective use of off-the-shelf AI-based black-box optimizers. Moreover, our method allows us to compute a closed-form, analytical representation of the region uncovered by a sensor deployment, which provides the means for rigorous, formal certification of the quality of the latter. We show the practical feasibility of our approach by computing optimal sensor deployments for UAV localization in two large, complex 3D critical regions, the Rome Leonardo Da Vinci International Airport (FCO) and the Vienna International Center (VIC), using NOMAD as our state-of-the-art underlying optimization engine. Results show that we can compute optimal sensor deployments within a few hours on a standard workstation and within minutes on a small parallel infrastructure.

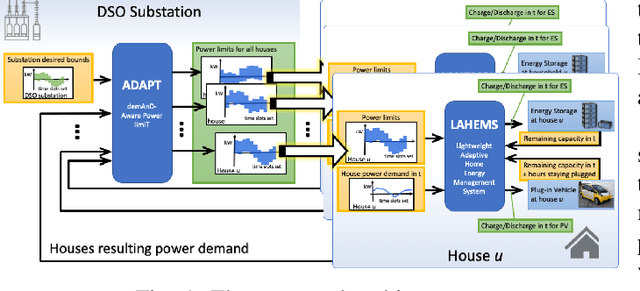

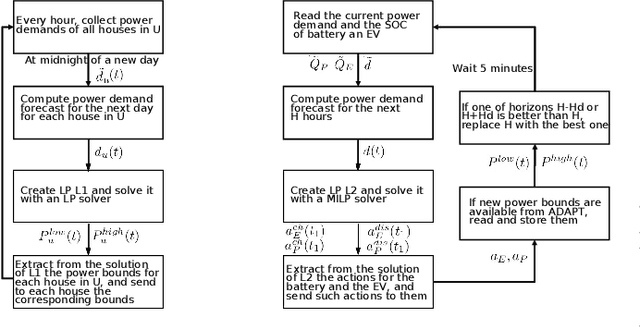

A Two-Layer Near-Optimal Strategy for Substation Constraint Management via Home Batteries

Aug 15, 2021

Abstract:Within electrical distribution networks, substation constraints management requires that aggregated power demand from residential users is kept within suitable bounds. Efficiency of substation constraints management can be measured as the reduction of constraints violations w.r.t. unmanaged demand. Home batteries hold the promise of enabling efficient and user-oblivious substation constraints management. Centralized control of home batteries would achieve optimal efficiency. However, it is hardly acceptable by users, since service providers (e.g., utilities or aggregators) would directly control batteries at user premises. Unfortunately, devising efficient hierarchical control strategies, thus overcoming the above problem, is far from easy. We present a novel two-layer control strategy for home batteries that avoids direct control of home devices by the service provider and at the same time yields near-optimal substation constraints management efficiency. Our simulation results on field data from 62 households in Denmark show that the substation constraints management efficiency achieved with our approach is at least 82% of the one obtained with a theoretical optimal centralized strategy.

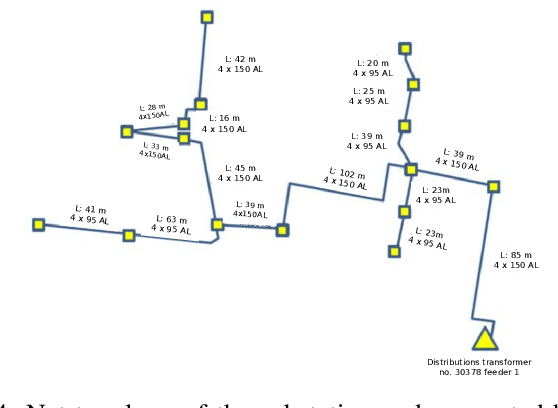

MILP, pseudo-boolean, and OMT solvers for optimal fault-tolerant placements of relay nodes in mission critical wireless networks

Jun 20, 2021

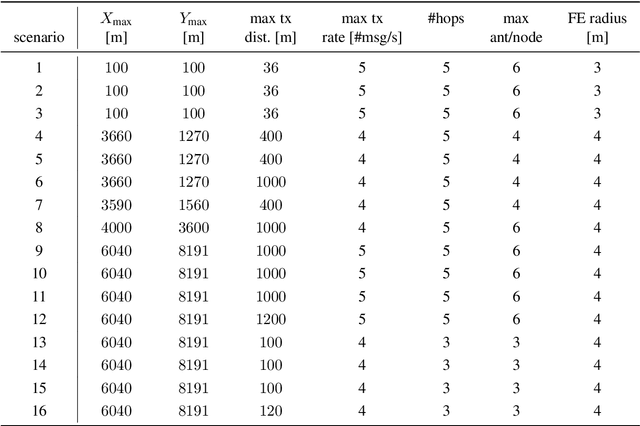

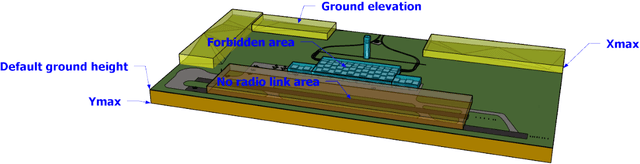

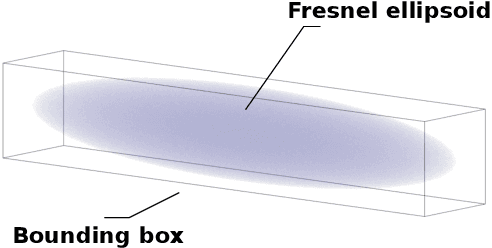

Abstract:In critical infrastructures like airports, much care has to be devoted in protecting radio communication networks from external electromagnetic interference. Protection of such mission-critical radio communication networks is usually tackled by exploiting radiogoniometers: at least three suitably deployed radiogoniometers, and a gateway gathering information from them, permit to monitor and localise sources of electromagnetic emissions that are not supposed to be present in the monitored area. Typically, radiogoniometers are connected to the gateway through relay nodes. As a result, some degree of fault-tolerance for the network of relay nodes is essential in order to offer a reliable monitoring. On the other hand, deployment of relay nodes is typically quite expensive. As a result, we have two conflicting requirements: minimise costs while guaranteeing a given fault-tolerance. In this paper, we address the problem of computing a deployment for relay nodes that minimises the relay node network cost while at the same time guaranteeing proper working of the network even when some of the relay nodes (up to a given maximum number) become faulty (fault-tolerance). We show that, by means of a computation-intensive pre-processing on a HPC infrastructure, the above optimisation problem can be encoded as a 0/1 Linear Program, becoming suitable to be approached with standard Artificial Intelligence reasoners like MILP, PB-SAT, and SMT/OMT solvers. Our problem formulation enables us to present experimental results comparing the performance of these three solving technologies on a real case study of a relay node network deployment in areas of the Leonardo da Vinci Airport in Rome, Italy.

* 33 pages, 11 figures

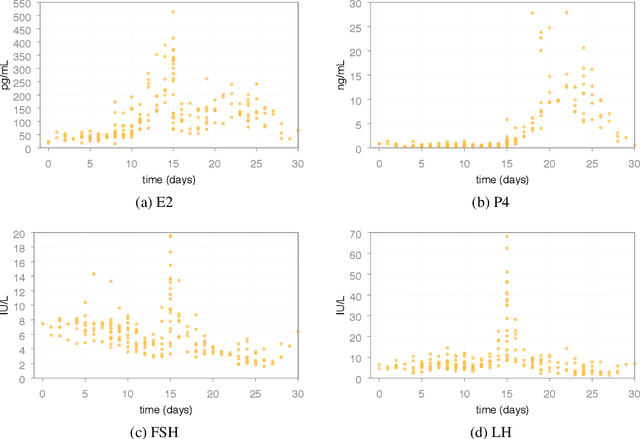

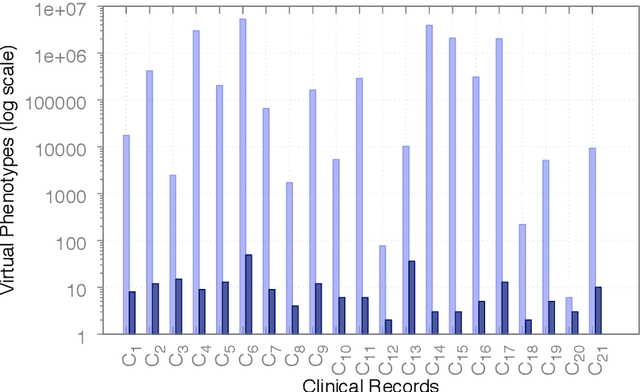

Optimal personalised treatment computation through in silico clinical trials on patient digital twins

Jun 20, 2021

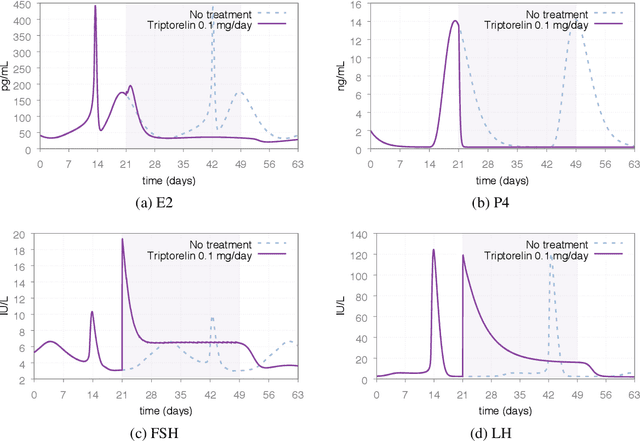

Abstract:In Silico Clinical Trials (ISTC), i.e., clinical experimental campaigns carried out by means of computer simulations, hold the promise to decrease time and cost for the safety and efficacy assessment of pharmacological treatments, reduce the need for animal and human testing, and enable precision medicine. In this paper we present methods and an algorithm that, by means of extensive computer simulation--based experimental campaigns (ISTC) guided by intelligent search, optimise a pharmacological treatment for an individual patient (precision medicine). e show the effectiveness of our approach on a case study involving a real pharmacological treatment, namely the downregulation phase of a complex clinical protocol for assisted reproduction in humans.

* 31 pages, 9 figures

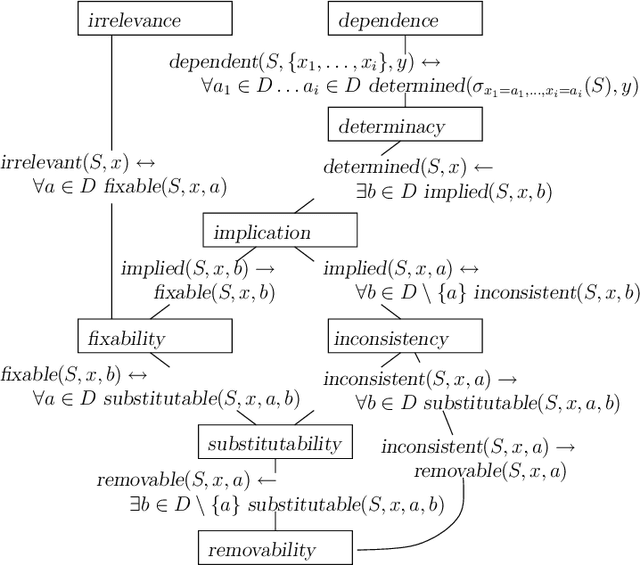

A Unifying Framework for Structural Properties of CSPs: Definitions, Complexity, Tractability

Jan 15, 2014

Abstract:Literature on Constraint Satisfaction exhibits the definition of several structural properties that can be possessed by CSPs, like (in)consistency, substitutability or interchangeability. Current tools for constraint solving typically detect such properties efficiently by means of incomplete yet effective algorithms, and use them to reduce the search space and boost search. In this paper, we provide a unifying framework encompassing most of the properties known so far, both in CSP and other fields literature, and shed light on the semantical relationships among them. This gives a unified and comprehensive view of the topic, allows new, unknown, properties to emerge, and clarifies the computational complexity of the various detection problems. In particular, among the others, two new concepts, fixability and removability emerge, that come out to be the ideal characterisations of values that may be safely assigned or removed from a variables domain, while preserving problem satisfiability. These two notions subsume a large number of known properties, including inconsistency, substitutability and others. Because of the computational intractability of all the property-detection problems, by following the CSP approach we then determine a number of relaxations which provide sufficient conditions for their tractability. In particular, we exploit forms of language restrictions and local reasoning.

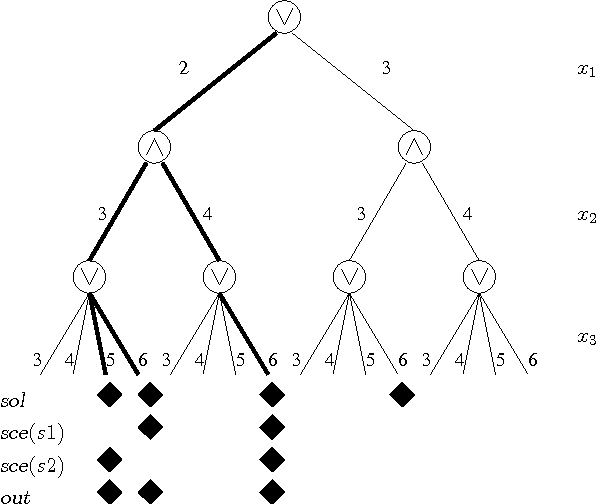

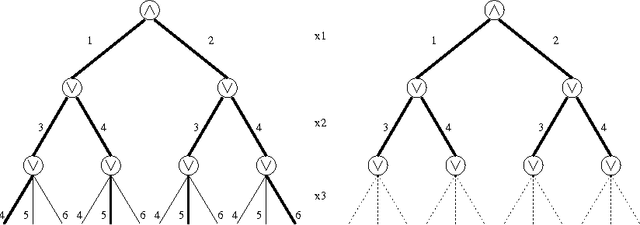

Generalizing Consistency and other Constraint Properties to Quantified Constraints

May 24, 2007

Abstract:Quantified constraints and Quantified Boolean Formulae are typically much more difficult to reason with than classical constraints, because quantifier alternation makes the usual notion of solution inappropriate. As a consequence, basic properties of Constraint Satisfaction Problems (CSP), such as consistency or substitutability, are not completely understood in the quantified case. These properties are important because they are the basis of most of the reasoning methods used to solve classical (existentially quantified) constraints, and one would like to benefit from similar reasoning methods in the resolution of quantified constraints. In this paper, we show that most of the properties that are used by solvers for CSP can be generalized to quantified CSP. This requires a re-thinking of a number of basic concepts; in particular, we propose a notion of outcome that generalizes the classical notion of solution and on which all definitions are based. We propose a systematic study of the relations which hold between these properties, as well as complexity results regarding the decision of these properties. Finally, and since these problems are typically intractable, we generalize the approach used in CSP and propose weaker, easier to check notions based on locality, which allow to detect these properties incompletely but in polynomial time.

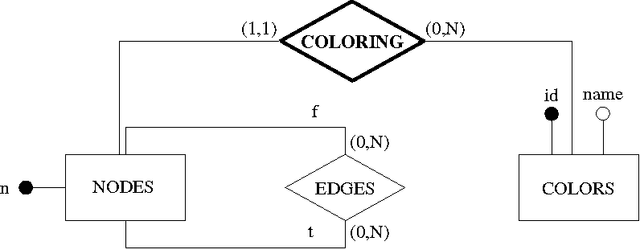

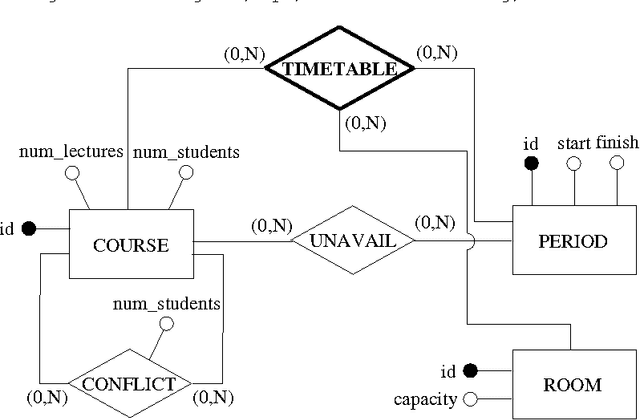

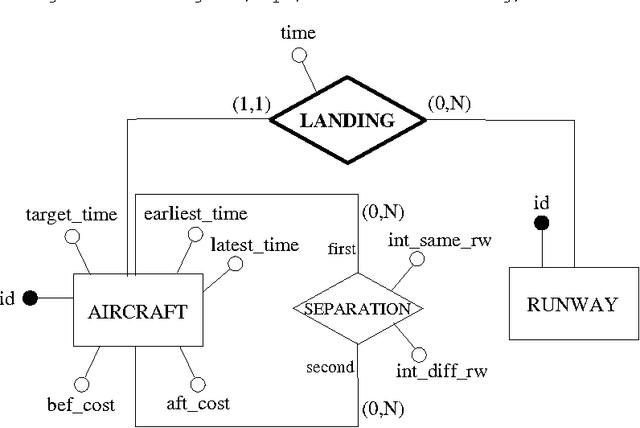

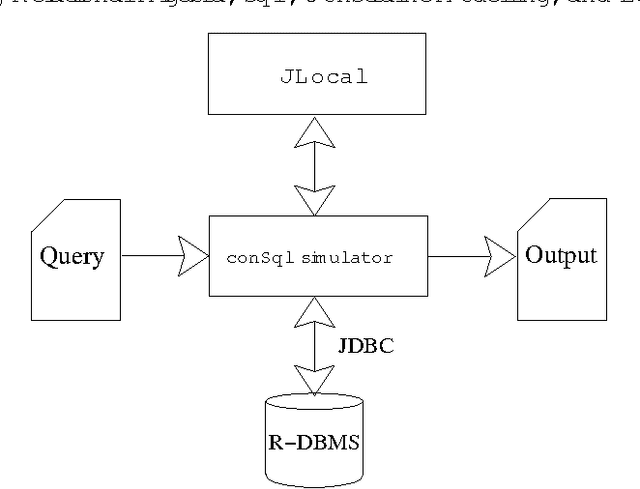

Combining Relational Algebra, SQL, Constraint Modelling, and Local Search

Jan 11, 2006

Abstract:The goal of this paper is to provide a strong integration between constraint modelling and relational DBMSs. To this end we propose extensions of standard query languages such as relational algebra and SQL, by adding constraint modelling capabilities to them. In particular, we propose non-deterministic extensions of both languages, which are specially suited for combinatorial problems. Non-determinism is introduced by means of a guessing operator, which declares a set of relations to have an arbitrary extension. This new operator results in languages with higher expressive power, able to express all problems in the complexity class NP. Some syntactical restrictions which make data complexity polynomial are shown. The effectiveness of both extensions is demonstrated by means of several examples. The current implementation, written in Java using local search techniques, is described. To appear in Theory and Practice of Logic Programming (TPLP)

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge