Tomer Barak

Investigating learning-independent abstract reasoning in artificial neural networks

Jul 25, 2024

Abstract:Humans are capable of solving complex abstract reasoning tests. Whether this ability reflects a learning-independent inference mechanism applicable to any novel unlearned problem or whether it is a manifestation of extensive training throughout life is an open question. Addressing this question in humans is challenging because it is impossible to control their prior training. However, assuming a similarity between the cognitive processing of Artificial Neural Networks (ANNs) and humans, the extent to which training is required for ANNs' abstract reasoning is informative about this question in humans. Previous studies demonstrated that ANNs can solve abstract reasoning tests. However, this success required extensive training. In this study, we examined the learning-independent abstract reasoning of ANNs. Specifically, we evaluated their performance without any pretraining, with the ANNs' weights randomly initialized and changing only during the process of problem solving. We found that naive ANN models can solve non-trivial visual reasoning tests, similar to those used to evaluate human learning-independent reasoning. We further studied the mechanisms that support this ability. Our results suggest the possibility of learning-independent abstract reasoning that does not require extensive training.

Naive Few-Shot Learning: Sequence Consistency Evaluation

May 24, 2022

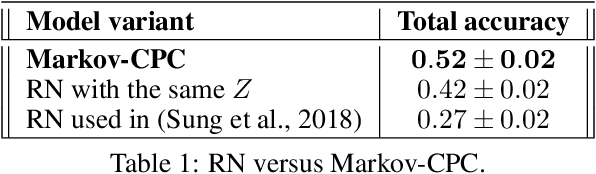

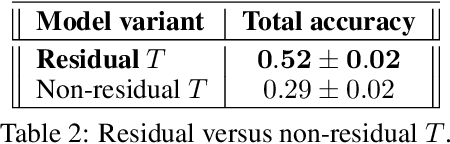

Abstract:Cognitive psychologists often use the term $\textit{fluid intelligence}$ to describe the ability of humans to solve novel tasks without any prior training. In contrast to humans, deep neural networks can perform cognitive tasks only after extensive (pre-)training with a large number of relevant examples. Motivated by fluid intelligence research in the cognitive sciences, we built a benchmark task, which we call sequence consistency evaluation (SCE), that can be used to address this gap. Solving the SCE task requires the ability to extract simple rules from sequences, a basic computation that is required for solving various intelligence tests in humans. We tested $\textit{untrained}$ (naive) deep learning models on the SCE task. Specifically, we compared Relation Networks (RN) and Contrastive Predictive Coding (CPC), two models that can extract simple rules from sequences, and found that the latter, which imposes a structure on the predictable rule, does better. We further found that simple networks fare better in this task than complex ones. Finally, we show that this approach can be used for security camera anomaly detection without any prior training.

Naive Artificial Intelligence

Sep 04, 2020

Abstract:In the cognitive sciences, it is common to distinguish between crystal intelligence, the ability to utilize knowledge acquired through past learning or experience, and fluid intelligence, the ability to solve novel problems without relying on prior knowledge. Under this distinction, extensively trained deep networks that can play chess or Go exhibit crystal but not fluid intelligence. In humans, fluid intelligence is typically studied and quantified using intelligence tests. Previous studies have shown that deep networks can solve some forms of intelligence tests, but only after extensive training. Here we present a computational model that solves intelligence tests without any prior training. This ability is based on continual inductive reasoning and is implemented by deep unsupervised latent-prediction networks. Our work demonstrates the potential fluid intelligence of deep networks. Finally, we propose that the computational principles underlying our approach can be used to model fluid intelligence in the cognitive sciences.
