Naive Few-Shot Learning: Sequence Consistency Evaluation

May 24, 2022

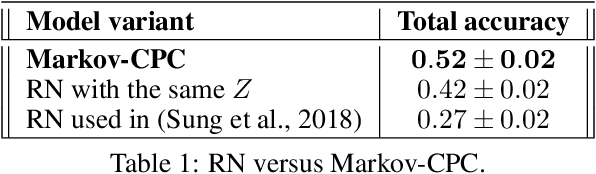

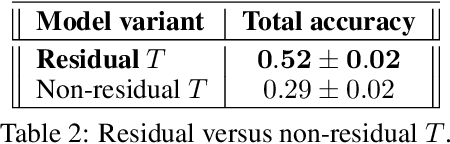

Cognitive psychologists often use the term *fluid intelligence* to describe the ability of humans to solve novel tasks without any prior training. In contrast to humans, deep neural networks can perform cognitive tasks only after extensive (pre-)training on a large number of relevant examples. Motivated by fluid intelligence research in the cognitive sciences, we built a benchmark task, which we call sequence consistency evaluation (SCE), that can be used to address this gap. Solving the SCE task requires the ability to extract simple rules from sequences, a basic computation that is required for solving various intelligence tests in humans. We tested *untrained* (naive) deep learning models on the SCE task. Specifically, we compared Relation Networks (RN) and Contrastive Predictive Coding (CPC), two models that can extract simple rules from sequences, and found that the latter, which imposes a structure on the predictable rule, does better. We further found that simple networks fare better on this task than complex ones. Finally, we show that this approach can be used for anomaly detection in security camera footage without any prior training.
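The abstract does not spell out the SCE input format or the internals of the RN and CPC models, so the following is only a toy sketch of the general "extract a rule from the context, then judge which continuation is consistent with it" idea. It assumes sequences are lists of numbers and that the rule is a linear trend. The functions `predict_next`, `score_candidates`, and `pick_consistent` are hypothetical names. The code is neither RN nor CPC: it swaps in a least-squares line fit as a stand-in for a predictor. Like the naive setting described above, it uses no training data beyond the sequence itself.

```python
from typing import List, Sequence


def predict_next(context: Sequence[float]) -> float:
    """Extrapolate the next element with an ordinary least-squares line fit.

    Assumption (toy stand-in): the hidden "rule" is a linear trend over positions.
    """
    n = len(context)
    if n < 2:
        raise ValueError("need at least two context elements")
    xs = range(n)
    mean_x = (n - 1) / 2
    mean_y = sum(context) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, context))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return intercept + slope * n  # value predicted at the next position


def score_candidates(context: Sequence[float], candidates: Sequence[float]) -> List[float]:
    """Inconsistency score per candidate: absolute prediction error (lower is better)."""
    pred = predict_next(context)
    return [abs(c - pred) for c in candidates]


def pick_consistent(context: Sequence[float], candidates: Sequence[float]) -> int:
    """Index of the candidate most consistent with the rule inferred from context."""
    scores = score_candidates(context, candidates)
    return min(range(len(candidates)), key=scores.__getitem__)


if __name__ == "__main__":
    print(pick_consistent([2, 4, 6, 8], [9, 10, 12]))  # → 1
```

The same prediction-error score could, under the same toy assumptions, flag an anomalous frame as the one that deviates most from what the preceding frames predict. A real system would replace the line fit with a learned or random-feature model.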