Tom White

VISTA: A Panoramic View of Neural Representations

Dec 03, 2024

Abstract: We present VISTA (Visualization of Internal States and Their Associations), a novel pipeline for visually exploring and interpreting neural network representations. VISTA addresses the challenge of analyzing vast multidimensional spaces in modern machine learning models by mapping representations into a semantic 2D space. The resulting collages visually reveal patterns and relationships within internal representations. We demonstrate VISTA's utility by applying it to sparse autoencoder latents, uncovering new properties and interpretations. We review the VISTA methodology, present findings from our case study (https://got.drib.net/latents/), and discuss implications for neural network interpretability across various domains of machine learning.

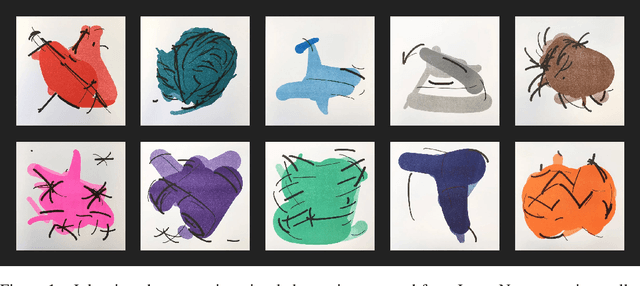

Shared Visual Abstractions

Nov 19, 2019

Abstract: This paper presents abstract art that is created by neural networks and is broadly recognizable across various computer vision systems. The existence of abstract forms that trigger specific labels independent of neural architecture or training set suggests convolutional neural networks build shared visual representations for the categories they understand. Computer vision classifiers encountering these drawings often produce strong responses for specific labels, in extreme cases stronger than for all examples from the validation set. By surveying human subjects, we confirm that these abstract artworks are also broadly recognizable by people, suggesting visual representations triggered by these drawings are shared across human and computer vision systems.

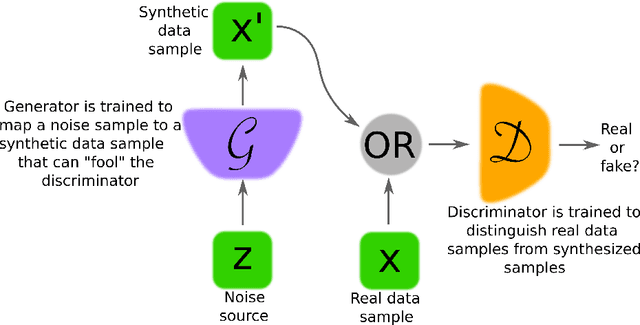

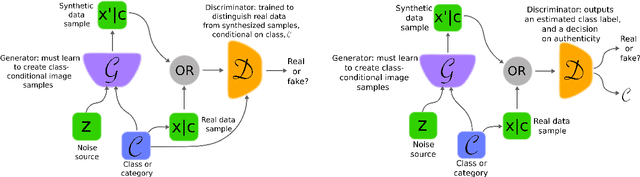

Generative Adversarial Networks: An Overview

Oct 19, 2017

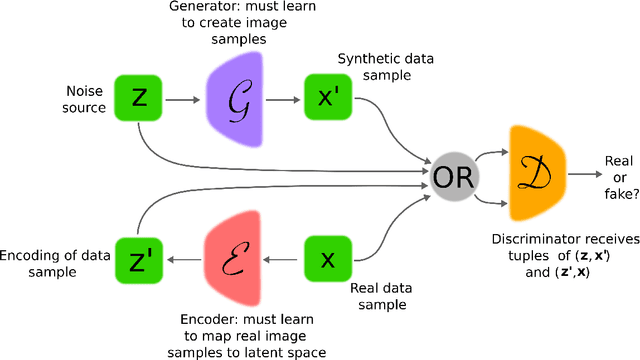

Abstract: Generative adversarial networks (GANs) provide a way to learn deep representations without extensively annotated training data. They achieve this by deriving backpropagation signals through a competitive process involving a pair of networks. The representations that can be learned by GANs may be used in a variety of applications, including image synthesis, semantic image editing, style transfer, image super-resolution and classification. The aim of this review paper is to provide an overview of GANs for the signal processing community, drawing on familiar analogies and concepts where possible. In addition to identifying different methods for training and constructing GANs, we also point to remaining challenges in their theory and application.

Sampling Generative Networks

Dec 06, 2016

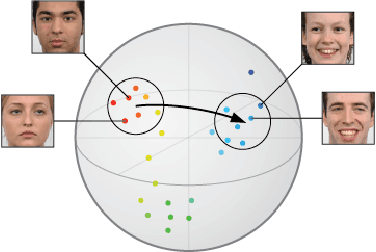

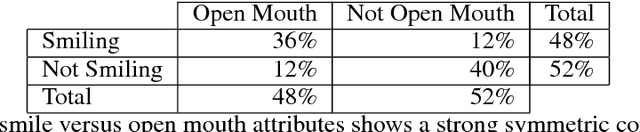

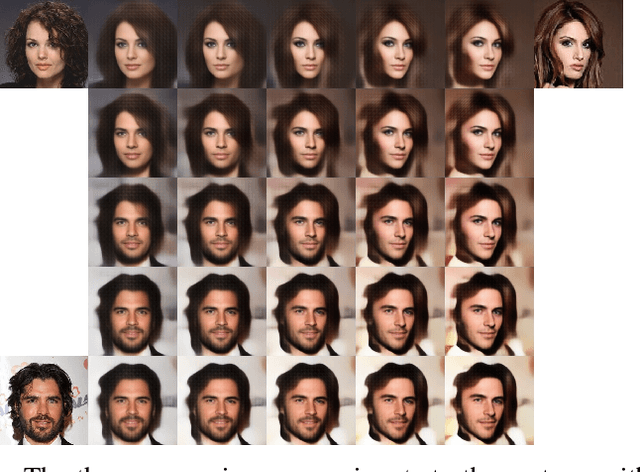

Abstract: We introduce several techniques for sampling and visualizing the latent spaces of generative models. Replacing linear interpolation with spherical linear interpolation prevents divergence from a model's prior distribution and produces sharper samples. J-Diagrams and MINE grids are introduced as visualizations of manifolds created by analogies and nearest neighbors. We demonstrate two new techniques for deriving attribute vectors: bias-corrected vectors with data replication and synthetic vectors with data augmentation. Binary classification using attribute vectors is presented as a technique supporting quantitative analysis of the latent space. Most techniques are intended to be independent of model type, and examples are shown on both Variational Autoencoders and Generative Adversarial Networks.
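
As a rough illustration of the interpolation idea mentioned in the abstract above, the following is a minimal sketch of spherical linear interpolation between two latent vectors, using the standard textbook formula. It is not taken from the paper's code: the function name `slerp`, its argument order, and the fallback to linear interpolation for nearly parallel vectors are assumptions made for this example.

```python
import numpy as np

def slerp(t, v0, v1):
    """Spherical linear interpolation between latent vectors v0 and v1.

    Hypothetical helper for illustration; t is in [0, 1].
    """
    v0 = np.asarray(v0, dtype=float)
    v1 = np.asarray(v1, dtype=float)
    # Angle between the two vectors, computed from their normalized dot product.
    cos_omega = np.dot(v0, v1) / (np.linalg.norm(v0) * np.linalg.norm(v1))
    omega = np.arccos(np.clip(cos_omega, -1.0, 1.0))
    sin_omega = np.sin(omega)
    # Assumption: fall back to plain linear interpolation when the vectors
    # are (nearly) parallel and the spherical formula is ill-conditioned.
    if np.isclose(sin_omega, 0.0):
        return (1.0 - t) * v0 + t * v1
    w0 = np.sin((1.0 - t) * omega) / sin_omega
    w1 = np.sin(t * omega) / sin_omega
    return w0 * v0 + w1 * v1

# Compare the midpoint of the two interpolation schemes for unit vectors.
a = np.array([1.0, 0.0])
b = np.array([0.0, 1.0])
mid_slerp = slerp(0.5, a, b)
mid_lerp = 0.5 * a + 0.5 * b
print(np.linalg.norm(mid_slerp), np.linalg.norm(mid_lerp))
```

For two orthogonal unit vectors, the spherical midpoint keeps unit length while the linear midpoint is shorter, which is one way to see why a linear path can pass through regions that samples from the prior rarely occupy.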
