Tom Chou

An efficient Wasserstein-distance approach for reconstructing jump-diffusion processes using parameterized neural networks

Jun 03, 2024

Abstract:We analyze the Wasserstein distance ($W$-distance) between two probability distributions associated with two multidimensional jump-diffusion processes. Specifically, we analyze a temporally decoupled squared $W_2$-distance, which provides both upper and lower bounds associated with the discrepancies in the drift, diffusion, and jump amplitude functions between the two jump-diffusion processes. Then, we propose a temporally decoupled squared $W_2$-distance method for efficiently reconstructing unknown jump-diffusion processes from data using parameterized neural networks. We further show its performance can be enhanced by utilizing prior information on the drift function of the jump-diffusion process. The effectiveness of our proposed reconstruction method is demonstrated across several examples and applications.
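As a rough illustration of the idea of a temporally decoupled squared $W_2$-distance, the sketch below handles only the one-dimensional case with equal sample counts, where the squared $W_2$ distance between two empirical distributions reduces to the mean squared difference of the sorted samples. The function names, the uniform time step `dt`, and the Riemann-sum approximation of the time integral are assumptions made for this example and are not taken from the paper, which treats multidimensional processes.

```python
from typing import Sequence


def squared_w2_1d(x: Sequence[float], y: Sequence[float]) -> float:
    """Squared W2 distance between two 1D empirical distributions.

    Assumes both samples have the same (nonzero) size, in which case the
    optimal coupling simply pairs the sorted samples.
    """
    if len(x) != len(y) or len(x) == 0:
        raise ValueError("samples must be non-empty and of equal size")
    xs, ys = sorted(x), sorted(y)
    return sum((a - b) ** 2 for a, b in zip(xs, ys)) / len(xs)


def time_decoupled_squared_w2(
    traj_a: Sequence[Sequence[float]],
    traj_b: Sequence[Sequence[float]],
    dt: float,
) -> float:
    """Riemann sum over time of the per-time squared W2 distance.

    traj_a[i] and traj_b[i] hold the samples of each process at time t_i.
    The distributions are compared at each time point separately, rather
    than as distributions over whole trajectories (hypothetical helper).
    """
    if len(traj_a) != len(traj_b):
        raise ValueError("trajectories must share the same time grid")
    return dt * sum(squared_w2_1d(a, b) for a, b in zip(traj_a, traj_b))
```

For example, `time_decoupled_squared_w2([[0, 1], [0, 1]], [[1, 2], [2, 3]], dt=0.5)` sums a per-time distance of 1 and 4 and scales by the time step. In a reconstruction setting, a quantity of this kind could serve as a loss between observed samples and samples generated by a parameterized model, though the sketch above is not differentiable as written.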

Squared Wasserstein-2 Distance for Efficient Reconstruction of Stochastic Differential Equations

Jan 21, 2024

Abstract:We provide an analysis of the squared Wasserstein-2 ($W_2$) distance between two probability distributions associated with two stochastic differential equations (SDEs). Based on this analysis, we propose the use of squared $W_2$ distance-based loss functions in the reconstruction of SDEs from noisy data. To establish the practicality of our Wasserstein distance-based loss functions, we performed numerical experiments that demonstrate the efficiency of our method in reconstructing SDEs that arise across a number of applications.

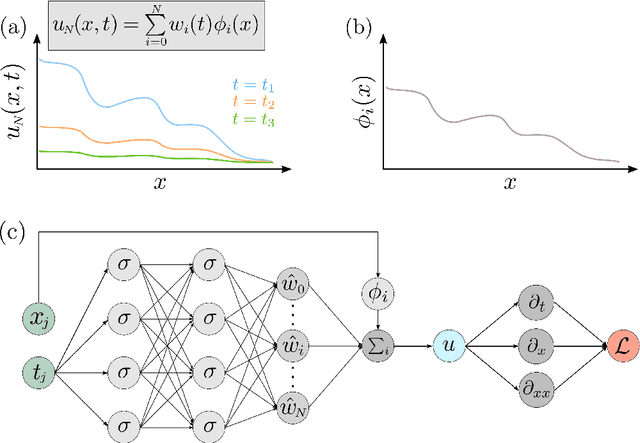

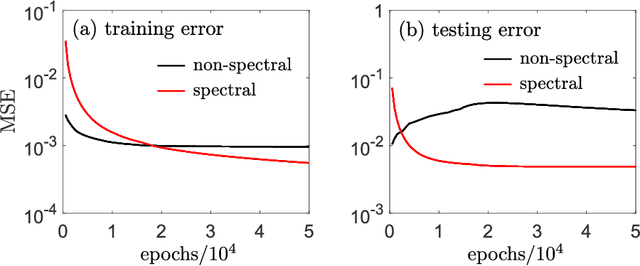

A Spectral Approach for Learning Spatiotemporal Neural Differential Equations

Sep 28, 2023

Abstract:Rapidly developing machine learning methods have stimulated research interest in computationally reconstructing differential equations (DEs) from observational data, which may provide additional insight into underlying causative mechanisms. In this paper, we propose a novel neural-ODE based method that uses spectral expansions in space to learn spatiotemporal DEs. The major advantage of our spectral neural DE learning approach is that it does not rely on spatial discretization, thus allowing the target spatiotemporal equations to contain long-range, nonlocal spatial interactions that act on unbounded spatial domains. Our spectral approach is shown to be as accurate as some of the latest machine learning approaches for learning PDEs operating on bounded domains. By developing a spectral framework for learning both PDEs and integro-differential equations, we extend machine learning methods to apply to unbounded DEs and a larger class of problems.

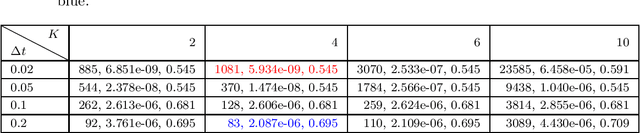

Spectrally Adapted Physics-Informed Neural Networks for Solving Unbounded Domain Problems

Feb 06, 2022

Abstract:Solving analytically intractable partial differential equations (PDEs) that involve at least one variable defined in an unbounded domain requires efficient numerical methods that accurately resolve the dependence of the PDE on that variable over several orders of magnitude. Unbounded domain problems arise in various application areas and solving such problems is important for understanding multi-scale biological dynamics, resolving physical processes at long time scales and distances, and performing parameter inference in engineering problems. In this work, we combine two classes of numerical methods: (i) physics-informed neural networks (PINNs) and (ii) adaptive spectral methods. The numerical methods that we develop take advantage of the ability of physics-informed neural networks to easily implement high-order numerical schemes to efficiently solve PDEs. We then show how recently introduced adaptive techniques for spectral methods can be integrated into PINN-based PDE solvers to obtain numerical solutions of unbounded domain problems that cannot be efficiently approximated by standard PINNs. Through a number of examples, we demonstrate the advantages of the proposed spectrally adapted PINNs (s-PINNs) over standard PINNs in approximating functions, solving PDEs, and estimating model parameters from noisy observations in unbounded domains.

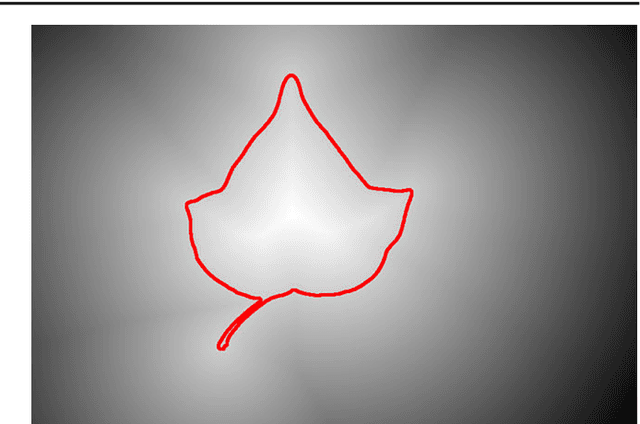

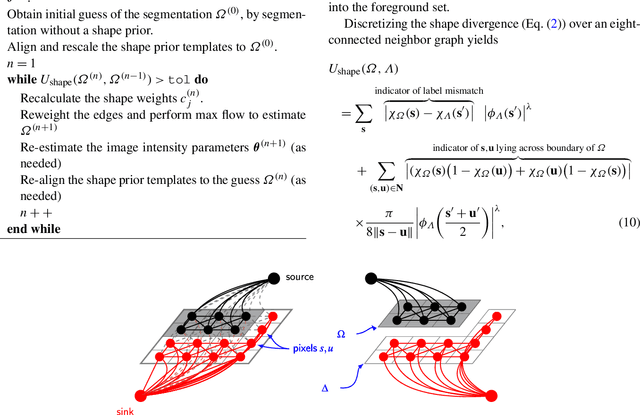

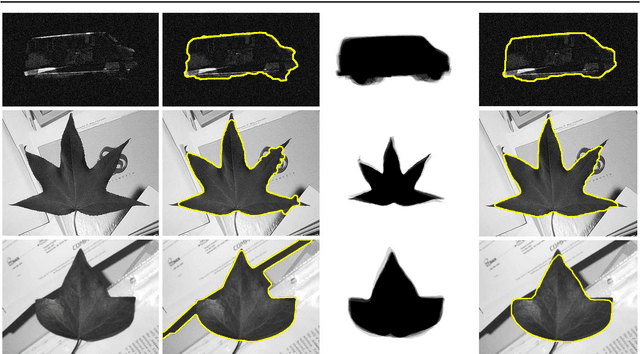

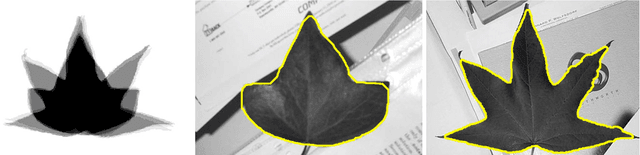

Iterative graph cuts for image segmentation with a nonlinear statistical shape prior

Feb 22, 2013

Abstract:Shape-based regularization has proven to be a useful method for delineating objects within noisy images where one has prior knowledge of the shape of the targeted object. When a collection of possible shapes is available, the specification of a shape prior using kernel density estimation is a natural technique. Unfortunately, energy functionals arising from kernel density estimation are of a form that makes them impossible to directly minimize using efficient optimization algorithms such as graph cuts. Our main contribution is to show how one may recast the energy functional into a form that is minimizable iteratively and efficiently using graph cuts.
