Tiaoqiao Liu

Automatic Short Answer Grading via Multiway Attention Networks

Sep 23, 2019

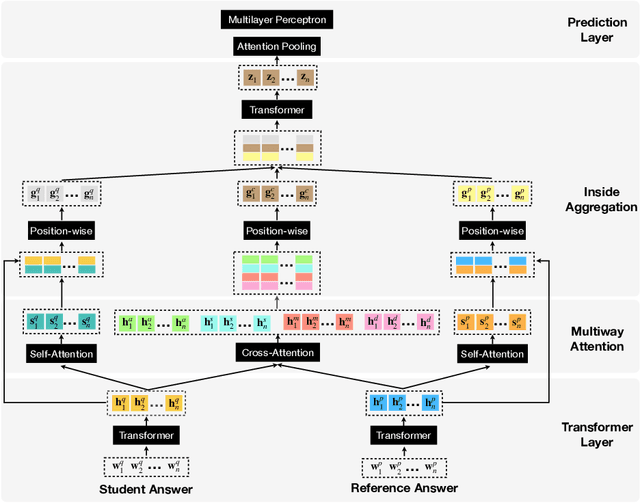

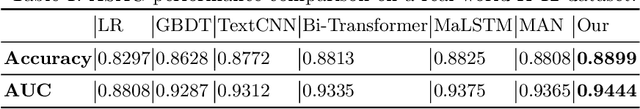

Abstract: Automatic short answer grading (ASAG), which autonomously scores student answers against reference answers, offers teaching professionals a cost-effective and consistent approach to grading and can reduce their monotonous and tedious workloads. However, ASAG is a very challenging task for two reasons: (1) student answers are free text, which requires deep semantic understanding; and (2) the questions are usually open-ended and span many domains in K-12 scenarios. In this paper, we propose a generalized end-to-end ASAG learning framework that aims to (1) autonomously extract linguistic information from both student and reference answers; and (2) accurately model the semantic relations between free-text student and reference answers in open-ended domains. The proposed ASAG model is evaluated on a large real-world K-12 dataset and can outperform state-of-the-art baselines on various evaluation metrics.
