Tianyuan Chang

Tongue image constitution recognition based on Complexity Perception method

Mar 01, 2018

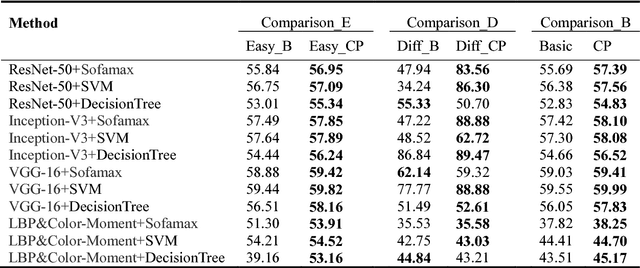

Abstract: Background and Objective: In China, body constitution is closely related to the physiological and pathological functions of the human body and determines a person's tendency toward disease, which makes it of great importance for clinical treatment. Tongue diagnosis, a key part of inspection in Traditional Chinese Medicine, is an important way to recognize the type of constitution. To deploy a tongue image constitution recognition system on non-invasive mobile devices and achieve fast, efficient, and accurate constitution recognition, an efficient method is required to handle the challenges of such complex environments.

Methods: In this work, we perform tongue area detection, tongue area calibration, and constitution classification using methods based on deep convolutional neural networks. Because environmental conditions vary, the distribution of the images is uneven, which harms classification performance. To solve this problem, we propose a method that divides the dataset into two subsets according to the complexity of individual instances and classifies each subset separately, which improves classification accuracy. To evaluate the proposed method, we conduct experiments on tongue datasets of three sizes, using a deep convolutional neural network and a traditional digital image analysis method, respectively, to extract features from the tongue images. The proposed method is combined with each of the base classifiers Softmax, SVM, and Decision Tree.

Results: The experimental results show that our proposed method improves classification accuracy by 1.135% on average and achieves a constitution classification accuracy of 59.99%.

Conclusions: Experimental results on three datasets show that the proposed method can effectively improve the accuracy of tongue constitution recognition.
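The abstract does not say how the complexity of an individual instance is measured. As an illustration only, the sketch below swaps in a common instance-hardness measure, k-nearest-neighbour disagreement (the fraction of an instance's k nearest neighbours whose label differs from its own), and uses it to split a dataset into an easy and a complex subset. The functions `knn_disagreement` and `split_by_complexity`, as well as the values of `k` and `threshold`, are hypothetical and are not taken from the paper.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def knn_disagreement(X: Sequence[Vector], y: Sequence[int], k: int = 3) -> List[float]:
    """Hypothetical complexity score: the fraction of each instance's k nearest
    neighbours (excluding itself) whose label differs from its own.
    Higher scores mean the instance is harder to classify."""
    scores = []
    for i, xi in enumerate(X):
        # Sort the other instances by Euclidean distance; ties break by index.
        dists = sorted((math.dist(xi, xj), j) for j, xj in enumerate(X) if j != i)
        neighbours = [j for _, j in dists[:k]]
        disagree = sum(1 for j in neighbours if y[j] != y[i])
        scores.append(disagree / k)
    return scores


def split_by_complexity(
    X: Sequence[Vector], y: Sequence[int], k: int = 3, threshold: float = 0.5
) -> Tuple[List[int], List[int]]:
    """Return (easy_indices, complex_indices); the threshold is an assumption."""
    scores = knn_disagreement(X, y, k)
    easy = [i for i, s in enumerate(scores) if s < threshold]
    hard = [i for i, s in enumerate(scores) if s >= threshold]
    return easy, hard


if __name__ == "__main__":
    # Toy 2-D features: the last point is labelled 1 but lies among class-0 points.
    X = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5], [0.5, 0.5]]
    y = [0, 0, 0, 1, 1, 1, 1]
    print(split_by_complexity(X, y))
```

On the toy data, only the mislabelled-looking point at `[0.5, 0.5]` lands in the complex subset. Each subset would then be handed to its own base classifier (Softmax, SVM, or Decision Tree in the paper).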

Facial Expression Recognition Based on Complexity Perception Classification Algorithm

Mar 01, 2018

Abstract: Facial expression recognition (FER) has always been a challenging problem in computer vision. Differences in how emotions are expressed and uncontrolled environmental factors lead to inconsistent complexity across FER samples and to variability between expression categories, an issue that most facial expression recognition systems overlook. To address this problem, we present a simple and efficient CNN model to extract facial features and propose a complexity perception classification (CPC) algorithm for FER. The CPC algorithm divides the dataset into an easy classification sample subspace and a complex classification sample subspace by evaluating how difficult each sample's facial features are to classify. Experimental results on the Fer2013 and CK-plus datasets demonstrate the algorithm's effectiveness and its superiority over other state-of-the-art approaches.
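A minimal sketch of how a CPC-style pipeline could be wired at inference time, under stated assumptions: each training sample already carries an easy/complex flag (from any complexity measure), a router learns to assign new samples to one of the two subspaces, and a separate classifier is trained on each subspace. The class names `NearestCentroid` and `ComplexityPerceptionClassifier` are hypothetical, and the nearest-centroid model is a dependency-free stand-in for the CNN features and base classifiers used in the paper.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence

Vector = Sequence[float]


class NearestCentroid:
    """Minimal nearest-centroid classifier, used here as a stand-in base classifier."""

    def fit(self, X: Sequence[Vector], y: Sequence[int]) -> "NearestCentroid":
        sums: Dict[int, List[float]] = {}
        counts: Dict[int, int] = defaultdict(int)
        for x, label in zip(X, y):
            if label not in sums:
                sums[label] = [0.0] * len(x)
            for d, v in enumerate(x):
                sums[label][d] += v
            counts[label] += 1
        # Each class is represented by the mean of its training vectors.
        self.centroids = {lbl: [s / counts[lbl] for s in vec] for lbl, vec in sums.items()}
        return self

    def predict_one(self, x: Vector) -> int:
        return min(self.centroids, key=lambda lbl: math.dist(x, self.centroids[lbl]))


class ComplexityPerceptionClassifier:
    """Hypothetical CPC-style pipeline: a router picks the easy (0) or complex (1)
    subspace for each sample, and a per-subspace classifier predicts the label."""

    def fit(self, X: Sequence[Vector], y: Sequence[int], is_complex: Sequence[bool]):
        flags = [int(c) for c in is_complex]
        self.router = NearestCentroid().fit(X, flags)
        self.experts: Dict[int, NearestCentroid] = {}
        for flag in (0, 1):
            idx = [i for i, f in enumerate(flags) if f == flag]
            if not idx:
                raise ValueError("both easy and complex subsets must be non-empty")
            self.experts[flag] = NearestCentroid().fit([X[i] for i in idx], [y[i] for i in idx])
        return self

    def predict(self, X: Sequence[Vector]) -> List[int]:
        # Route each sample to a subspace, then classify it with that subspace's model.
        return [self.experts[self.router.predict_one(x)].predict_one(x) for x in X]
```

For example, with easy samples near y = 0 and complex samples near y = 10, `ComplexityPerceptionClassifier().fit(X, y, is_complex).predict([[0.2, 0.5], [1.2, 10.4]])` routes the first point to the easy model and the second to the complex model. Any base classifier with `fit`/`predict_one` methods could replace `NearestCentroid` in this sketch.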
