Tianxiang Liu

A successive difference-of-convex approximation method for a class of nonconvex nonsmooth optimization problems

May 26, 2018

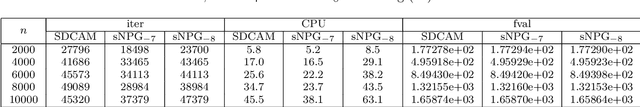

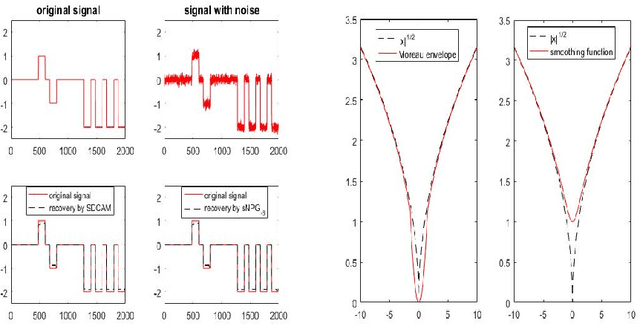

Abstract: We consider a class of nonconvex nonsmooth optimization problems whose objective is the sum of a smooth function and a finite number of nonnegative proper closed possibly nonsmooth functions (whose proximal mappings are easy to compute), some of which are further composed with linear maps. Problems of this kind arise naturally in various applications when different regularizers are introduced to induce simultaneous structures in the solutions. Solving these problems, however, can be challenging because of the coupled nonsmooth functions: the proximal mapping of their sum can be hard to compute, so standard first-order methods such as the proximal gradient algorithm cannot be applied efficiently. In this paper, we propose a successive difference-of-convex approximation method for solving such problems. In this algorithm, we approximate the nonsmooth functions by their Moreau envelopes in each iteration. Making use of the simple observation that Moreau envelopes of nonnegative proper closed functions are continuous *difference-of-convex* functions, we can then approximately minimize the approximation function by first-order methods with suitable majorization techniques. These first-order methods can be implemented efficiently thanks to the fact that the proximal mapping of *each* nonsmooth function is easy to compute. Under suitable assumptions, we prove that the sequence generated by our method is bounded and any accumulation point is a stationary point of the objective. We also discuss how our method can be applied to concrete applications such as nonconvex fused regularized optimization problems and simultaneously structured matrix optimization problems, and illustrate the performance numerically for these two specific applications.
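The observation that a Moreau envelope is a difference of convex functions can be checked on a one-dimensional toy case. The sketch below is illustrative only and is not taken from the paper: it assumes the nonnegative nonconvex function is the scalar ℓ0 penalty (0 at zero, 1 elsewhere), and the names `l0`, `prox_l0`, `moreau_envelope`, and `dc_parts` are hypothetical. It uses the standard identity that the envelope with parameter `lam` equals `x^2/(2*lam)` minus the convex function `sup_y { x*y/lam - f(y) - y^2/(2*lam) }`.

```python
def l0(y):
    """Scalar l0 penalty: nonnegative, closed, nonconvex (illustrative choice)."""
    return 0.0 if y == 0.0 else 1.0


def prox_l0(x, lam):
    """Hard thresholding: a minimizer of l0(y) + (x - y)^2 / (2 * lam)."""
    return x if x * x > 2.0 * lam else 0.0


def moreau_envelope(x, lam):
    """Envelope value, evaluated at a proximal point."""
    y = prox_l0(x, lam)
    return l0(y) + (x - y) ** 2 / (2.0 * lam)


def dc_parts(x, lam):
    """Return (g, h), both convex in x, with envelope = g - h.

    g is the quadratic x^2 / (2 * lam); h is the supremum over y of
    x*y/lam - l0(y) - y^2/(2*lam), which here reduces to max(g - 1, 0).
    """
    g = x * x / (2.0 * lam)
    h = max(g - 1.0, 0.0)
    return g, h


if __name__ == "__main__":
    lam = 0.5
    for x in (-3.0, -0.5, 0.0, 1.0, 2.5):
        g, h = dc_parts(x, lam)
        print(x, moreau_envelope(x, lam), g - h)
```

Only the proximal mapping of the function itself is needed to evaluate the envelope, which matches the abstract's remark about the proximal mapping of each nonsmooth function being easy to compute.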

A refined convergence analysis of pDCA$_e$ with applications to simultaneous sparse recovery and outlier detection

Apr 19, 2018

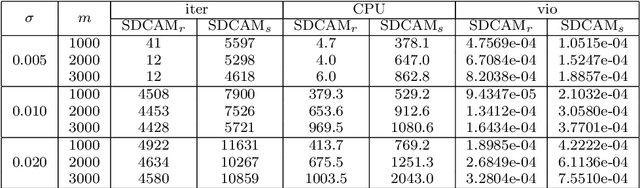

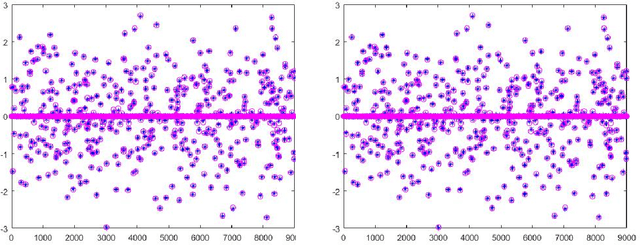

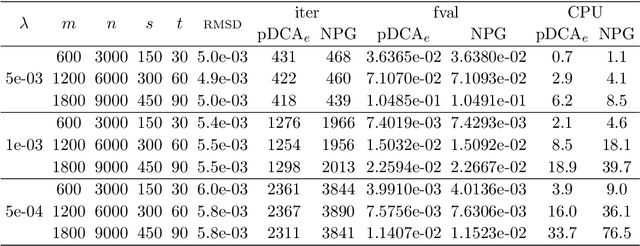

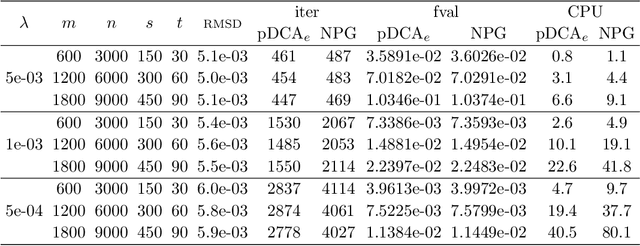

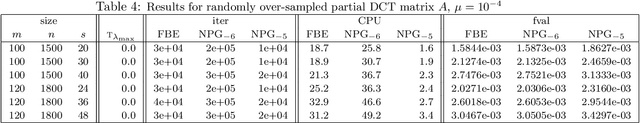

Abstract: We consider the problem of minimizing a difference-of-convex (DC) function, which can be written as the sum of a smooth convex function with Lipschitz gradient, a proper closed convex function and a continuous possibly nonsmooth concave function. We refine the convergence analysis in [38] for the proximal DC algorithm with extrapolation (pDCA$_e$) and show that the whole sequence generated by the algorithm is convergent when the objective is level-bounded, *without* imposing differentiability assumptions on the concave part. Our analysis is based on a new potential function, which we assume to be a Kurdyka-Łojasiewicz (KL) function. We also establish a relationship between our KL assumption and the one used in [38]. Finally, we demonstrate how the pDCA$_e$ can be applied to a class of simultaneous sparse recovery and outlier detection problems arising from robust compressed sensing in signal processing and least trimmed squares regression in statistics. Specifically, we show that the objectives of these problems can be written as level-bounded DC functions whose concave parts are *typically nonsmooth*. Moreover, for a large class of loss functions and regularizers, the KL exponent of the corresponding potential function is shown to be 1/2, which implies that the pDCA$_e$ is locally linearly convergent when applied to these problems. Our numerical experiments show that the pDCA$_e$ usually outperforms the proximal DC algorithm with nonmonotone linesearch [24, Appendix A] in both CPU time and solution quality for this particular application.
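A proximal DC iteration with extrapolation can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the test problem (least squares with an ℓ1 minus ℓ2 regularizer) is chosen for simplicity rather than taken from the paper's application, the extrapolation parameter `beta` is held constant at a hypothetical value of 0.5, and the Lipschitz constant is replaced by the squared Frobenius norm of `A` as a safe upper bound. All function names are hypothetical.

```python
import math


def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def rmatvec(A, r):
    """Compute A^T r."""
    return [sum(A[i][j] * r[i] for i in range(len(A))) for j in range(len(A[0]))]


def objective(A, b, lam, x):
    """0.5 * ||Ax - b||^2 + lam * (||x||_1 - ||x||_2)."""
    r = [ri - bi for ri, bi in zip(matvec(A, x), b)]
    l1 = sum(abs(v) for v in x)
    l2 = math.sqrt(sum(v * v for v in x))
    return 0.5 * sum(v * v for v in r) + lam * (l1 - l2)


def soft_threshold(v, t):
    """Proximal mapping of t * |.| at v."""
    return math.copysign(max(abs(v) - t, 0.0), v)


def pdca_e(A, b, lam, x0, beta=0.5, iters=200):
    # Assumption: squared Frobenius norm as an upper bound on the
    # Lipschitz constant of the gradient of 0.5 * ||Ax - b||^2.
    L = sum(a * a for row in A for a in row)
    x_prev, x = list(x0), list(x0)
    for _ in range(iters):
        # Subgradient of the convex function lam * ||x||_2 at x.
        nrm = math.sqrt(sum(v * v for v in x))
        xi = [lam * v / nrm for v in x] if nrm > 0.0 else [0.0] * len(x)
        # Extrapolated point.
        y = [xk + beta * (xk - xp) for xk, xp in zip(x, x_prev)]
        grad = rmatvec(A, [ri - bi for ri, bi in zip(matvec(A, y), b)])
        # Proximal step on lam * ||.||_1 with step size 1 / L.
        x_prev = x
        x = [soft_threshold(yk - (g - s) / L, lam / L)
             for yk, g, s in zip(y, grad, xi)]
    return x


if __name__ == "__main__":
    A = [[1.0, 0.0, 2.0], [0.0, 1.0, 1.0]]
    b = [1.0, 2.0]
    x = pdca_e(A, b, lam=0.1, x0=[0.0, 0.0, 0.0])
    print(x, objective(A, b, 0.1, x))
```

The concave part is handled only through a subgradient of its negative, so no differentiability of that part is used, which is the setting the abstract emphasizes.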

Further properties of the forward-backward envelope with applications to difference-of-convex programming

Oct 18, 2016

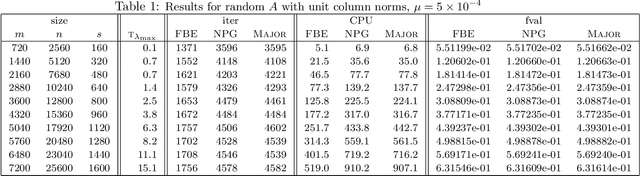

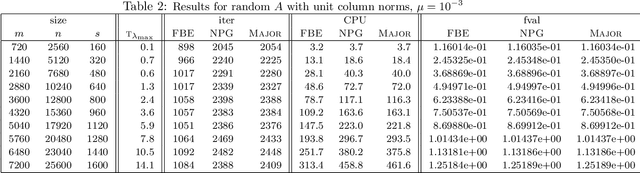

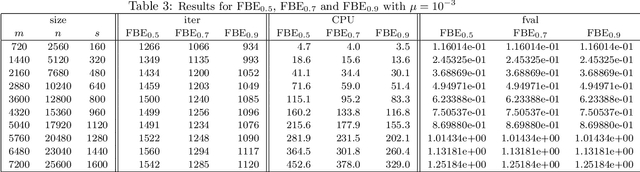

Abstract: In this paper, we further study the forward-backward envelope first introduced in [28] and [30] for problems whose objective is the sum of a proper closed convex function and a twice continuously differentiable possibly nonconvex function with Lipschitz continuous gradient. We derive sufficient conditions on the original problem for the corresponding forward-backward envelope to be a level-bounded and Kurdyka-Łojasiewicz function with an exponent of $\frac12$; these results are important for the efficient minimization of the forward-backward envelope by classical optimization algorithms. In addition, we demonstrate how to minimize some difference-of-convex regularized least squares problems by minimizing a suitably constructed forward-backward envelope. Our preliminary numerical results on randomly generated instances of large-scale $\ell_{1-2}$ regularized least squares problems [37] illustrate that an implementation of this approach with a limited-memory BFGS scheme usually outperforms standard first-order methods such as the nonmonotone proximal gradient method in [35].
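For orientation, the forward-backward envelope of $f + g$ with parameter $\gamma$ is commonly written as $f(x) - \frac{\gamma}{2}\|\nabla f(x)\|^2 + g^{\gamma}(x - \gamma \nabla f(x))$, where $g^{\gamma}$ is the Moreau envelope of $g$. The sketch below evaluates this formula on a scalar toy problem; it assumes $f(x) = \frac12 (x - 3)^2$ and $g(x) = \lambda |x|$, which are illustrative choices and not the problems studied in the paper, and all function names are hypothetical.

```python
import math


def f(x):
    return 0.5 * (x - 3.0) ** 2


def grad_f(x):
    return x - 3.0


def g(x, lam):
    return lam * abs(x)


def prox_g(u, gamma, lam):
    """Soft thresholding: proximal mapping of gamma * lam * |.| at u."""
    return math.copysign(max(abs(u) - gamma * lam, 0.0), u)


def moreau_g(u, gamma, lam):
    """Moreau envelope of g with parameter gamma, evaluated at u."""
    z = prox_g(u, gamma, lam)
    return g(z, lam) + (z - u) ** 2 / (2.0 * gamma)


def fbe(x, gamma, lam):
    """Forward-backward envelope of f + g at x."""
    d = grad_f(x)
    return f(x) - 0.5 * gamma * d * d + moreau_g(x - gamma * d, gamma, lam)


if __name__ == "__main__":
    gamma, lam = 0.5, 1.0
    for x in (-1.0, 0.0, 2.0, 4.0):
        print(x, fbe(x, gamma, lam), f(x) + g(x, lam))
```

In this toy case the envelope never exceeds the original objective, and the two agree at the minimizer `x = 2.0`, where the forward-backward step leaves `x` unchanged. Because the envelope is real-valued everywhere, smooth-type schemes such as the limited-memory BFGS approach mentioned in the abstract can be applied to it.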
