Thomas Vaessen

More to Less: Enhanced Health Recognition in the Wild with Reduced Modality of Wearable Sensors

Feb 16, 2022

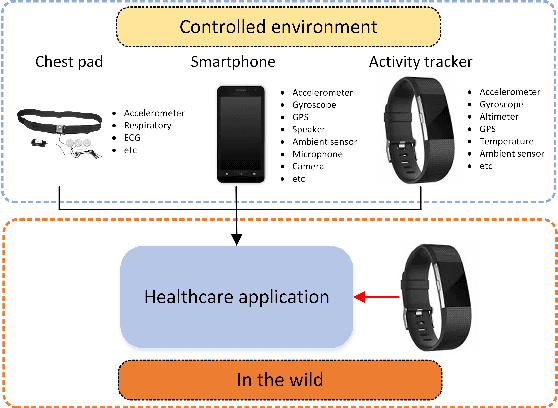

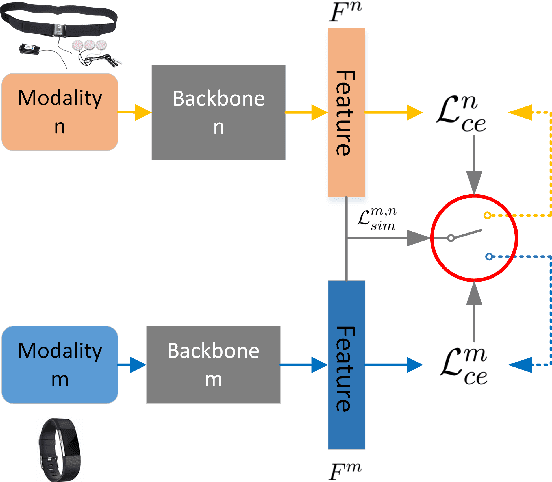

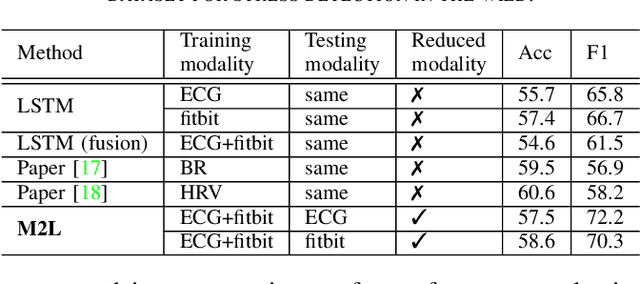

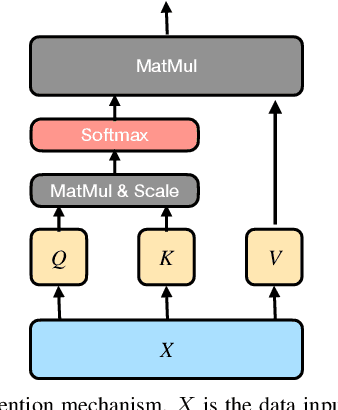

Abstract: Accurately recognizing health-related conditions from wearable data is crucial for improved healthcare outcomes. To improve recognition accuracy, various approaches have focused on how to effectively fuse information from multiple sensors. Fusing multiple sensors is common in many applications but may not always be feasible in real-world settings. For example, although combining bio-signals from multiple sensors (e.g., a chest pad sensor and a wrist wearable sensor) has been proven effective for improving performance, wearing multiple devices might be impractical in free-living contexts. To address this challenge, we propose an effective more to less (M2L) learning framework that improves testing performance with reduced sensors by leveraging the complementary information of multiple modalities during training. More specifically, different sensors may carry different but complementary information, and our model is designed to enforce collaboration among modalities, encouraging positive knowledge transfer and suppressing negative knowledge transfer, so that a better representation is learned for each individual modality. Our experimental results show that our framework achieves performance comparable to that obtained with the full set of modalities. Our code and results will be available at https://github.com/compwell-org/More2Less.git.

Modality Fusion Network and Personalized Attention in Momentary Stress Detection in the Wild

Jul 21, 2021

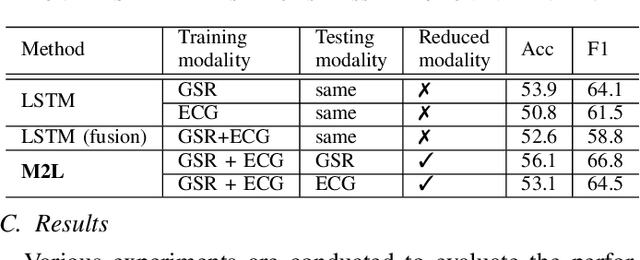

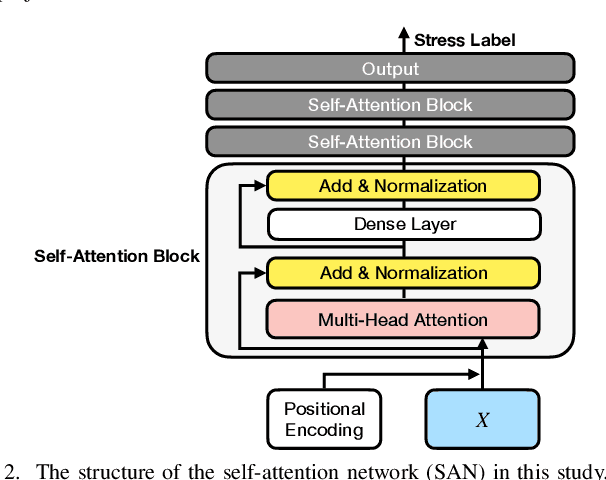

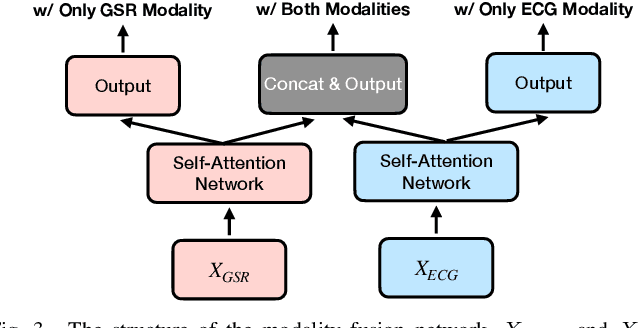

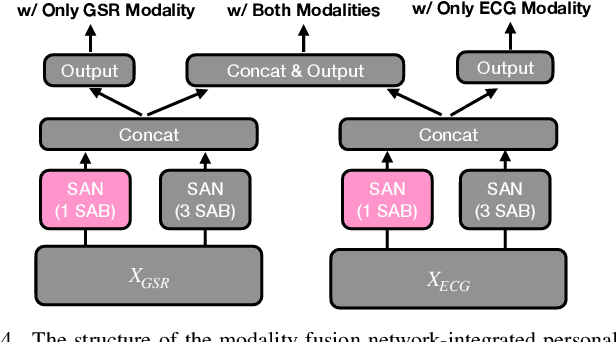

Abstract: Multimodal wearable physiological data collected in daily life have been used to estimate self-reported stress labels. However, missing data modalities during data collection make it challenging to leverage all the collected samples. In addition, heterogeneous sensor data and labels among individuals make it harder to build robust stress detection models. In this paper, we propose a modality fusion network (MFN) to train models and infer self-reported binary stress labels under both complete and incomplete modality conditions. We also apply a personalized attention (PA) strategy to leverage personalized representations alongside the generalized one-size-fits-all model. We evaluated our methods on a multimodal wearable sensor dataset (N=41) including galvanic skin response (GSR) and electrocardiogram (ECG). Compared with the baseline method, which uses the samples with complete modalities, the MFN improved the F1 score by 1.6%. The proposed PA strategy, in turn, achieved a 2.3% higher stress detection F1 score and an approximately 70% reduction in personalized model parameter size (9.1 MB) compared with the previous state-of-the-art transfer learning strategy (29.3 MB).
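
As a quick check of the reported size reduction, the relative saving can be worked out from the two parameter sizes quoted in the abstract (9.1 MB for PA, 29.3 MB for the transfer learning strategy). The symbols below are illustrative labels, not notation from the paper:

```latex
\[
\text{reduction}
  = \frac{S_{\mathrm{TL}} - S_{\mathrm{PA}}}{S_{\mathrm{TL}}}
  = \frac{29.3 - 9.1}{29.3}
  = \frac{20.2}{29.3}
  \approx 0.689
\]
```

That is roughly 69%, consistent with the "approximately 70%" figure stated above.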
