Thomas Reid

In-Simulation Testing of Deep Learning Vision Models in Autonomous Robotic Manipulators

Oct 25, 2024

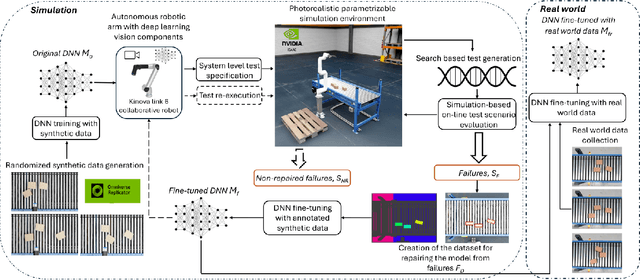

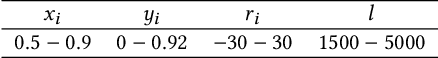

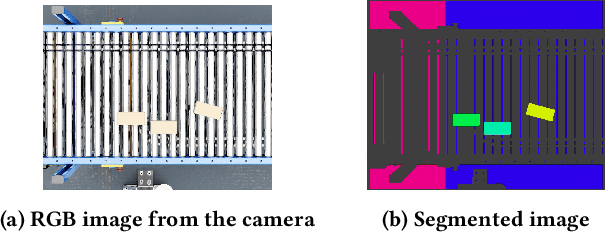

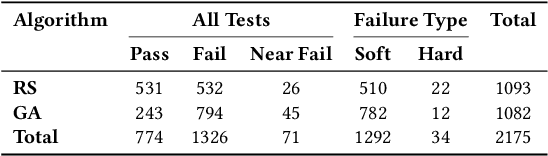

Abstract: Testing autonomous robotic manipulators is challenging due to the complex software interactions between vision and control components. A crucial element of modern robotic manipulators is the deep learning-based object detection model. Creating and assessing this model requires real-world data, which can be hard to collect and label, especially when the hardware setup is not available. Current techniques primarily focus on using synthetic data to train deep neural networks (DNNs) and on identifying failures through offline or online simulation-based testing. However, it remains unclear how to exploit the identified failures to uncover design flaws early on, and how to leverage the DNN optimized in simulation to accelerate the engineering of the DNN for real-world tasks. To address these challenges, we propose the MARTENS (Manipulator Robot Testing and Enhancement in Simulation) framework, which integrates a photorealistic NVIDIA Isaac Sim simulator with evolutionary search to identify critical scenarios, with the aim of improving the deep learning vision model and uncovering system design flaws. Evaluation on two industrial case studies demonstrated that MARTENS effectively reveals robotic manipulator system failures, detecting 25% to 50% more failures with greater diversity compared to random test generation. The model trained and repaired using the MARTENS approach achieved mean average precision (mAP) scores of 0.91 and 0.82 on real-world images with no prior retraining. Further fine-tuning on real-world images for a few epochs (fewer than 10) increased the mAP to 0.95 and 0.89 for the first and second use cases, respectively. In contrast, a model trained solely on real-world data achieved mAPs of 0.8 and 0.75 for use case 1 and use case 2 after more than 25 epochs.
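
The idea of using evolutionary search to find critical scenarios can be illustrated with a minimal sketch. Everything here is an assumption made for illustration and is not the MARTENS implementation: `detection_score` is a toy stand-in for running the simulator and the vision model, the scenario is reduced to two hypothetical parameters (light level and object distance, both in [0, 1]), and the search is a plain (mu + lambda) loop with no diversity mechanism.

```python
import random

def detection_score(scenario):
    """Hypothetical stand-in for a simulator run plus detector evaluation.
    Returns a score in [0, 1]; lower means the detector is closer to failing."""
    light, distance = scenario
    # Toy surface: dim light and large distance both degrade the score.
    return max(0.0, min(1.0, 0.2 + 0.8 * light - 0.5 * distance))

def mutate(scenario, rng, sigma=0.1):
    """Gaussian mutation, clipped to the assumed [0, 1] parameter range."""
    return tuple(min(1.0, max(0.0, x + rng.gauss(0.0, sigma))) for x in scenario)

def evolve_failures(generations=30, pop_size=20, threshold=0.3, seed=0):
    """Search for scenarios whose score falls below the failure threshold."""
    rng = random.Random(seed)
    population = [(rng.random(), rng.random()) for _ in range(pop_size)]
    for _ in range(generations):
        offspring = [mutate(p, rng) for p in population]
        # (mu + lambda) selection: keep the scenarios with the lowest scores.
        population = sorted(population + offspring, key=detection_score)[:pop_size]
    return [s for s in population if detection_score(s) < threshold]

failures = evolve_failures()
print(f"{len(failures)} failing scenarios found")
```

In a real setup the score would come from the simulated system, and the failing scenarios would be fed back as additional training data or as evidence of a design flaw.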

Trimming the Risk: Towards Reliable Continuous Training for Deep Learning Inspection Systems

Sep 13, 2024

Abstract: The industry increasingly relies on deep learning (DL) technology for manufacturing inspections, which are challenging to automate with rule-based machine vision algorithms. DL-powered inspection systems derive defect patterns from labeled images, combining human-like agility with the consistency of a computerized system. However, finite labeled datasets often fail to encompass all natural variations, necessitating Continuous Training (CT) to regularly adjust the models with recent data. Effective CT requires fresh labeled samples from the original distribution; otherwise, self-generated labels can lead to silent performance degradation. To mitigate this risk, we develop a robust CT-based maintenance approach that updates DL models using reliable data selections through a two-stage filtering process. The initial stage filters out low-confidence predictions, as the model inherently discredits them. The second stage uses variational auto-encoders and histograms to generate image embeddings that capture latent and pixel characteristics, then rejects inputs with substantially shifted embeddings as drifted data with erroneous overconfidence. The original DL model is then fine-tuned on the filtered inputs while being validated on a mixture of recent production and original datasets. This strategy mitigates catastrophic forgetting and ensures the model adapts effectively to new operational conditions. Evaluations on industrial inspection systems for popsicle stick prints and glass bottles using critical real-world datasets showed that less than 9% of erroneous self-labeled data are retained after filtering and used for fine-tuning, improving model performance on production data by up to 14% without compromising its results on original validation data.
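
The two-stage filtering described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the authors' pipeline: the variational auto-encoder is omitted and only a pixel-intensity histogram is used as the embedding, and the thresholds `min_conf` and `max_shift`, the sample dictionary layout, and all function names are hypothetical.

```python
import math

def histogram_embedding(pixels, bins=4):
    """Normalized intensity histogram for pixel values in [0, 1]."""
    counts = [0] * bins
    for p in pixels:
        counts[min(int(p * bins), bins - 1)] += 1
    return [c / len(pixels) for c in counts]

def l2(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def select_reliable(samples, reference_embedding, min_conf=0.9, max_shift=0.5):
    """Keep self-labeled samples that pass both filtering stages.
    samples: list of dicts with 'pixels', 'confidence' and 'label' keys."""
    kept = []
    for s in samples:
        # Stage 1: drop predictions the model itself is unsure about.
        if s["confidence"] < min_conf:
            continue
        # Stage 2: drop confident predictions on inputs whose embedding
        # has drifted too far from the original data.
        if l2(histogram_embedding(s["pixels"]), reference_embedding) > max_shift:
            continue
        kept.append(s)
    return kept

reference = histogram_embedding([0.1, 0.3, 0.6, 0.9])
samples = [
    {"pixels": [0.1, 0.4, 0.6, 0.8], "confidence": 0.95, "label": "ok"},
    {"pixels": [0.1, 0.4, 0.6, 0.8], "confidence": 0.50, "label": "defect"},
    {"pixels": [0.95, 0.95, 0.95, 0.95], "confidence": 0.99, "label": "ok"},
]
reliable = select_reliable(samples, reference)
```

Only the first sample survives here: the second is rejected for low confidence and the third for a shifted histogram despite its high confidence, which is the overconfident-drift case the second stage targets.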

SmOOD: Smoothness-based Out-of-Distribution Detection Approach for Surrogate Neural Networks in Aircraft Design

Sep 07, 2022

Abstract: The aircraft industry is constantly striving for more efficient design optimization methods in terms of human effort, computation time, and resource consumption. Hybrid surrogate optimization maintains high-quality results while providing rapid design assessments when both the surrogate model and the switch mechanism for eventually transitioning to the high-fidelity (HF) model are calibrated properly. Feedforward neural networks (FNNs) can capture highly nonlinear input-output mappings, yielding efficient surrogates for aircraft performance factors. However, FNNs often fail to generalize over out-of-distribution (OOD) samples, which hinders their adoption in critical aircraft design optimization. Through SmOOD, our smoothness-based out-of-distribution detection approach, we propose to co-design a model-dependent OOD indicator with the optimized FNN surrogate, to produce a trustworthy surrogate model with selective but credible predictions. Unlike conventional uncertainty-grounded methods, SmOOD exploits inherent smoothness properties of the HF simulations to effectively expose OODs by revealing their suspicious sensitivities, thereby avoiding over-confident uncertainty estimates on OOD samples. By using SmOOD, only high-risk OOD inputs are forwarded to the HF model for re-evaluation, leading to more accurate results at a low overhead cost. Three aircraft performance models are investigated. Results show that FNN-based surrogates outperform their Gaussian Process counterparts in terms of predictive performance. Moreover, SmOOD covers on average 85% of actual OODs across all the study cases. When SmOOD and FNN surrogates are deployed together in hybrid surrogate optimization settings, they yield a 34.65% decrease in error rate and a 58.36-fold computational speed-up.
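
The routing idea, forwarding only suspiciously sensitive inputs to the HF model, can be sketched as below. This is a toy illustration and not the SmOOD indicator itself: the abstract does not specify how sensitivity is measured, so a finite-difference slope is assumed here, and `max_slope`, the toy `surrogate`, and the toy `hf_model` are all hypothetical.

```python
def local_sensitivity(model, x, eps=1e-3):
    """Largest absolute finite-difference slope of model around input x."""
    base = model(x)
    slopes = []
    for i in range(len(x)):
        shifted = list(x)
        shifted[i] += eps
        slopes.append(abs(model(shifted) - base) / eps)
    return max(slopes)

def hybrid_predict(surrogate, hf_model, x, max_slope):
    """Use the surrogate unless its local sensitivity looks implausible
    for a smooth HF simulation; in that case fall back to the HF model."""
    if local_sensitivity(surrogate, x) > max_slope:
        return hf_model(x), "hf"
    return surrogate(x), "surrogate"

def hf_model(x):
    """Toy smooth 'high-fidelity' function."""
    return x[0] ** 2

def surrogate(x):
    """Toy surrogate: accurate for |x| <= 1, erratic outside that range."""
    v = x[0]
    if abs(v) <= 1.0:
        return v * v
    return v * v + 40.0 * (abs(v) - 1.0)

in_range = hybrid_predict(surrogate, hf_model, [0.5], max_slope=5.0)
out_of_range = hybrid_predict(surrogate, hf_model, [2.0], max_slope=5.0)
```

The in-range query is answered by the cheap surrogate, while the out-of-range query trips the sensitivity check and is re-evaluated with the expensive function.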

Physics-Guided Adversarial Machine Learning for Aircraft Systems Simulation

Sep 07, 2022

Abstract: In the context of aircraft system performance assessment, deep learning technologies make it possible to quickly infer models from experimental measurements, with less detailed system knowledge than is usually required by physics-based modeling. However, this inexpensive model development also comes with new challenges regarding model trustworthiness. This work presents a novel approach, physics-guided adversarial machine learning (ML), that improves confidence in the physics consistency of the model. The approach first performs a physics-guided adversarial testing phase to search for test inputs that reveal behavioral system inconsistencies while still falling within the range of foreseeable operational conditions. It then proceeds with physics-informed adversarial training to teach the model the system-related physics domain foreknowledge by iteratively reducing the unwanted output deviations on the previously uncovered counterexamples. Empirical evaluation on two aircraft system performance models shows the effectiveness of our adversarial ML approach in exposing physical inconsistencies of both models and in improving their propensity to be consistent with physics domain knowledge.
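
The testing phase can be illustrated with a minimal sketch that looks for counterexamples inside an operational range. The assumptions are mine rather than the paper's: the physics rule is a hypothetical monotonicity property (increasing the input must never decrease the output), plain random search replaces the adversarial search, and `learned_model` is a toy function rather than a trained network. The training phase is not shown.

```python
import math
import random

def find_counterexamples(model, bounds, n_trials=2000, delta=0.05, seed=0):
    """Random search within bounds for inputs where the model violates the
    assumed monotonicity rule: model(x + delta) must not be below model(x)."""
    rng = random.Random(seed)
    low, high = bounds
    found = []
    for _ in range(n_trials):
        # Sample so that x + delta stays inside the operational range.
        x = rng.uniform(low, high - delta)
        if model(x + delta) < model(x):
            found.append(x)
    return found

def learned_model(x):
    """Toy model with a spurious oscillation that breaks monotonicity."""
    return x + 0.3 * math.sin(6.0 * x)

def consistent_model(x):
    """Toy model that respects the monotonicity rule everywhere."""
    return 2.0 * x

violations = find_counterexamples(learned_model, (0.0, 3.0))
clean = find_counterexamples(consistent_model, (0.0, 3.0))
```

The inputs collected in `violations` play the role of the counterexamples on which a subsequent training phase would penalize the inconsistent outputs.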
