Thomas Eisenbarth

Beyond Accuracy: Characterizing Code Comprehension Capabilities in (Large) Language Models

Jan 19, 2026

Abstract:Large Language Models (LLMs) are increasingly integrated into software engineering workflows, yet current benchmarks provide only coarse performance summaries that obscure the diverse capabilities and limitations of these models. This paper investigates whether LLMs' code-comprehension performance aligns with traditional human-centric software metrics or instead reflects distinct, non-human regularities. We introduce a diagnostic framework that reframes code understanding as a binary input-output consistency task, enabling the evaluation of classification and generative models. Using a large-scale dataset, we correlate model performance with traditional, human-centric complexity metrics, such as lexical size, control-flow complexity, and abstract syntax tree structure. Our analyses reveal minimal correlation between human-defined metrics and LLM success (AUROC 0.63), while shadow models achieve substantially higher predictive performance (AUROC 0.86), capturing complex, partially predictable patterns beyond traditional software measures. These findings suggest that LLM comprehension reflects model-specific regularities only partially accessible through either human-designed or learned features, emphasizing the need for benchmark methodologies that move beyond aggregate accuracy and toward instance-level diagnostics, while acknowledging fundamental limits in predicting correct outcomes.
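The AUROC values quoted in this abstract measure how well a score separates instances the model solved from instances it failed. As a minimal sketch of that metric (not the paper's evaluation code), the pairwise-ranking definition can be written in plain Python. The function name `auroc` and the sample data are hypothetical and chosen only for illustration.

```python
def auroc(scores, labels):
    """Probability that a random positive is scored above a random negative.

    Ties between a positive and a negative count as half a win.
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUROC needs at least one positive and one negative label")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical data: a predictor's confidence that the LLM answers each
# instance correctly, and whether the LLM actually did (1) or did not (0).
predicted_success = [0.9, 0.7, 0.6, 0.4, 0.2]
model_was_correct = [1, 1, 0, 1, 0]
print(auroc(predicted_success, model_was_correct))
```

A value of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation, which is the scale on which 0.63 and 0.86 should be read.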

Prompt Pirates Need a Map: Stealing Seeds helps Stealing Prompts

Sep 11, 2025

Abstract:Diffusion models have significantly advanced text-to-image generation, enabling the creation of highly realistic images conditioned on textual prompts and seeds. Given the considerable intellectual and economic value embedded in such prompts, prompt theft poses a critical security and privacy concern. In this paper, we investigate prompt-stealing attacks targeting diffusion models. We reveal that numerical optimization-based prompt recovery methods are fundamentally limited as they do not account for the initial random noise used during image generation. We identify and exploit a noise-generation vulnerability (CWE-339), prevalent in major image-generation frameworks, originating from PyTorch's restriction of seed values to a range of $2^{32}$ when generating the initial random noise on CPUs. Through a large-scale empirical analysis conducted on images shared via the popular platform CivitAI, we demonstrate that approximately 95% of these images' seed values can be effectively brute-forced in 140 minutes per seed using our seed-recovery tool, SeedSnitch. Leveraging the recovered seed, we propose PromptPirate, a genetic algorithm-based optimization method explicitly designed for prompt stealing. PromptPirate surpasses state-of-the-art methods, i.e., PromptStealer, P2HP, and CLIP-Interrogator, achieving an 8-11% improvement in LPIPS similarity. Furthermore, we introduce straightforward and effective countermeasures that render seed stealing, and thus optimization-based prompt stealing, ineffective. We have disclosed our findings responsibly and initiated coordinated mitigation efforts with the developers to address this critical vulnerability.
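To illustrate why a small seed range makes the initial noise recoverable, here is a toy sketch of exhaustive seed search. It is not SeedSnitch: Python's `random` module stands in for the framework's noise generator, the seed space is shrunk to a hypothetical 16 bits so the loop finishes quickly, and the attacker is assumed to observe the noise values directly, which is a simplification of the real setting where only the generated image is visible.

```python
import random

SEED_BITS = 16  # toy seed space (assumption); the abstract describes a 2**32 range


def initial_noise(seed, n=4):
    """Deterministically derive n Gaussian noise values from a seed."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


def recover_seed(observed, seed_bits=SEED_BITS):
    """Try every seed in the range and return the first one that reproduces the noise."""
    for candidate in range(2 ** seed_bits):
        if initial_noise(candidate, len(observed)) == observed:
            return candidate
    return None


secret_seed = 48_271                 # hypothetical seed chosen by the image creator
leaked = initial_noise(secret_seed)  # what the toy attacker gets to observe
recovered = recover_seed(leaked)
print(recovered == secret_seed)
```

The search cost grows linearly with the number of possible seeds, so the same loop becomes impractical once the seed space is large enough.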

AutoStub: Genetic Programming-Based Stub Creation for Symbolic Execution

Sep 10, 2025

Abstract:Symbolic execution is a powerful technique for software testing, but suffers from limitations when encountering external functions, such as native methods or third-party libraries. Existing solutions often require additional context, expensive SMT solvers, or manual intervention to approximate these functions through symbolic stubs. In this work, we propose a novel approach to automatically generate symbolic stubs for external functions during symbolic execution that leverages Genetic Programming. When the symbolic executor encounters an external function, AutoStub generates training data by executing the function on randomly generated inputs and collecting the outputs. Genetic Programming then derives expressions that approximate the behavior of the function, serving as symbolic stubs. These automatically generated stubs allow the symbolic executor to continue the analysis without manual intervention, enabling the exploration of program paths that were previously intractable. We demonstrate that AutoStub can automatically approximate external functions with over 90% accuracy for 55% of the functions evaluated, and can infer language-specific behaviors that reveal edge cases crucial for software testing.

* 2025 HUMIES finalist
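The two-step idea in the AutoStub abstract (sample input-output pairs from an opaque function, then search for an expression that reproduces them) can be sketched as follows. This is not the AutoStub implementation: exhaustive enumeration over a tiny, hypothetical expression grammar replaces Genetic Programming here, and `external_function`, `UNARY`, and `CONSTANTS` are illustrative assumptions.

```python
import itertools
import random


def external_function(x):
    """Stand-in for an opaque native method the symbolic executor cannot analyze."""
    return abs(x) + 1


def sample_io(func, n=200, lo=-1000, hi=1000, seed=0):
    """Run the opaque function on random integers and record (input, output) pairs."""
    rng = random.Random(seed)
    inputs = [rng.randint(lo, hi) for _ in range(n)]
    return [(x, func(x)) for x in inputs]


# Hypothetical search space: one unary operation on x plus one small constant.
UNARY = {"x": lambda x: x, "-x": lambda x: -x, "abs(x)": abs, "x*x": lambda x: x * x}
CONSTANTS = [-1, 0, 1, 2]


def candidates():
    """Yield (readable name, callable) for every expression in the toy grammar."""
    for (name, f), c in itertools.product(UNARY.items(), CONSTANTS):
        yield f"{name} + {c}", (lambda x, f=f, c=c: f(x) + c)


def best_stub(samples):
    """Return the candidate expression with the highest exact-match accuracy."""
    def accuracy(expr):
        return sum(expr(x) == y for x, y in samples) / len(samples)

    return max(((name, accuracy(fn)) for name, fn in candidates()), key=lambda t: t[1])


stub_name, stub_accuracy = best_stub(sample_io(external_function))
print(stub_name, stub_accuracy)
```

An evolutionary search would explore a far larger expression space than this enumeration can, but the fitness signal (agreement with sampled outputs) plays the same role.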

Non-omniscient backdoor injection with a single poison sample: Proving the one-poison hypothesis for linear regression and linear classification

Aug 07, 2025

Abstract:Backdoor injection attacks are a threat to machine learning models that are trained on large data collected from untrusted sources; these attacks enable attackers to inject malicious behavior into the model that can be triggered by specially crafted inputs. Prior work has established bounds on the success of backdoor attacks and their impact on the benign learning task; however, an open question is how much poison data is needed for a successful backdoor attack. Typical attacks either use few samples but require extensive information about the data points, or need to poison many data points. In this paper, we formulate the one-poison hypothesis: An adversary with one poison sample and limited background knowledge can inject a backdoor with zero backdooring-error and without significantly impacting the benign learning task performance. Moreover, we prove the one-poison hypothesis for linear regression and linear classification. For adversaries that utilize a direction that is unused by the benign data distribution for the poison sample, we show that the resulting model is functionally equivalent to a model where the poison was excluded from training. We build on prior work on statistical backdoor learning to show that in all other cases, the impact on the benign learning task is still limited. We also validate our theoretical results experimentally with realistic benchmark data sets.
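The case of a poison sample placed along a direction the benign data never uses can be illustrated with a two-feature toy regression. This is a sketch under stated assumptions, not the paper's construction or proof: the data, the trigger value, and the helper names are hypothetical, and a tiny ridge term `lam` is added only so that the clean system stays solvable when the second feature is always zero.

```python
def ridge_fit_2d(samples, lam=1e-6):
    """Solve (X^T X + lam * I) w = X^T y for two features via Cramer's rule."""
    a = b = d = r0 = r1 = 0.0
    for (x0, x1), y in samples:
        a += x0 * x0
        b += x0 * x1
        d += x1 * x1
        r0 += x0 * y
        r1 += x1 * y
    a += lam
    d += lam
    det = a * d - b * b
    return ((d * r0 - b * r1) / det, (a * r1 - b * r0) / det)


def predict(w, x):
    """Linear model without intercept."""
    return w[0] * x[0] + w[1] * x[1]


# Benign data only ever uses feature 0 (feature 1 is always zero).
benign = [((float(x), 0.0), 3.0 * x) for x in range(1, 11)]
# A single poison sample that lives entirely in the unused direction.
poison = ((0.0, 1.0), 100.0)

w_clean = ridge_fit_2d(benign)
w_poisoned = ridge_fit_2d(benign + [poison])

benign_input = (5.0, 0.0)
trigger_input = (0.0, 1.0)
print(predict(w_clean, benign_input), predict(w_poisoned, benign_input))
print(predict(w_clean, trigger_input), predict(w_poisoned, trigger_input))
```

Because the poison is orthogonal to every benign sample, the normal equations decouple: the weight on feature 0 is fitted from benign data alone, while the weight on feature 1 is determined by the poison sample. Both models therefore agree on benign inputs and differ only when the trigger direction is active.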

Trace Gadgets: Minimizing Code Context for Machine Learning-Based Vulnerability Prediction

Apr 18, 2025

Abstract:As the number of web applications and API endpoints exposed to the Internet continues to grow, so does the number of exploitable vulnerabilities. Manually identifying such vulnerabilities is tedious. Meanwhile, static security scanners tend to produce many false positives. While machine learning-based approaches are promising, they typically perform well only in scenarios where training and test data are closely related. A key challenge for ML-based vulnerability detection is providing suitable and concise code context, as excessively long contexts negatively affect the code comprehension capabilities of machine learning models, particularly smaller ones. This work introduces Trace Gadgets, a novel code representation that minimizes code context by removing non-related code. Trace Gadgets precisely capture the statements that cover the path to the vulnerability. As input for ML models, Trace Gadgets provide a minimal but complete context, thereby improving the detection performance. Moreover, we collect a large-scale dataset generated from real-world applications with manually curated labels to further improve the performance of ML-based vulnerability detectors. Our results show that state-of-the-art machine learning models perform best when using Trace Gadgets compared to previous code representations, surpassing the detection capabilities of industry-standard static scanners such as GitHub's CodeQL by at least 4% on a fully unseen dataset. By applying our framework to real-world applications, we identify and report previously unknown vulnerabilities in widely deployed software.

OCEAN: Open-World Contrastive Authorship Identification

Dec 06, 2024

Abstract:In an era where cyberattacks increasingly target the software supply chain, the ability to accurately attribute code authorship in binary files is critical to improving cybersecurity measures. We propose OCEAN, a contrastive learning-based system for function-level authorship attribution. OCEAN is the first framework to explore code authorship attribution on compiled binaries in an open-world and extreme scenario, where two code samples from unknown authors are compared to determine if they are developed by the same author. To evaluate OCEAN, we introduce new realistic datasets: CONAN, to improve the performance of authorship attribution systems in real-world use cases, and SNOOPY, to increase the robustness of the evaluation of such systems. We use CONAN to train our model and evaluate on SNOOPY, a fully unseen dataset, resulting in an AUROC score of 0.86 even when using high compiler optimizations. We further show that CONAN improves performance by 7% compared to the previously used Google Code Jam dataset. Additionally, OCEAN outperforms previous methods in their settings, achieving a 10% improvement over state-of-the-art SCS-Gan in scenarios analyzing source code. Furthermore, OCEAN can detect code injections from an unknown author in a software update, underscoring its value for securing software supply chains.

Dynamic Frequency-Based Fingerprinting Attacks against Modern Sandbox Environments

Apr 17, 2024

Abstract:The cloud computing landscape has evolved significantly in recent years, embracing various sandboxes to meet the diverse demands of modern cloud applications. These sandboxes encompass container-based technologies like Docker and gVisor, microVM-based solutions like Firecracker, and security-centric sandboxes relying on Trusted Execution Environments (TEEs) such as Intel SGX and AMD SEV. However, the practice of placing multiple tenants on shared physical hardware raises security and privacy concerns, most notably side-channel attacks. In this paper, we investigate the possibility of fingerprinting containers through CPU frequency reporting sensors in Intel and AMD CPUs. One key enabler of our attack is that the current CPU frequency information can be accessed by user-space attackers. We demonstrate that Docker images exhibit a unique frequency signature, enabling the distinction of different containers with up to 84.5% accuracy even when multiple containers are running simultaneously on different cores. Additionally, we assess the effectiveness of our attack when performed against several sandboxes deployed in cloud environments, including Google's gVisor, AWS' Firecracker, and TEE-based platforms like Gramine (utilizing Intel SGX) and AMD SEV. Our empirical results show that these attacks can also be carried out successfully against all of these sandboxes in less than 40 seconds, with an accuracy of over 70% in all cases. Finally, we propose a noise injection-based countermeasure to mitigate the proposed attack on cloud environments.

DASH: Accelerating Distributed Private Machine Learning Inference with Arithmetic Garbled Circuits

Feb 13, 2023

Abstract:The adoption of machine learning solutions is rapidly increasing across all parts of society. Cloud service providers such as Amazon Web Services, Microsoft Azure and the Google Cloud Platform aggressively expand their Machine-Learning-as-a-Service offerings. While the widespread adoption of machine learning has huge potential for both research and industry, the large-scale evaluation of possibly sensitive data on untrusted platforms bears inherent data security and privacy risks. Since computation time is expensive, performance is a critical factor for machine learning. However, prevailing security measures proposed in the past years come with a significant performance overhead. We investigate the current state of protected distributed machine learning systems, focusing on deep convolutional neural networks. The most common and best-performing mixed MPC approaches are based on homomorphic encryption, secret sharing, and garbled circuits. They commonly suffer from communication overheads that grow linearly in the depth of the neural network. We present Dash, a fast and distributed private machine learning inference scheme. Dash is based purely on arithmetic garbled circuits. It requires only a single communication round per inference step, regardless of the depth of the neural network, and a very small constant communication volume. Dash thus significantly reduces performance requirements and scales better than previous approaches. In addition, we introduce the concept of LabelTensors. This allows us to efficiently use GPUs while using garbled circuits, which further reduces the runtime. Dash offers security against a malicious attacker and is up to 140 times faster than previous arithmetic garbling schemes.

FortuneTeller: Predicting Microarchitectural Attacks via Unsupervised Deep Learning

Jul 08, 2019

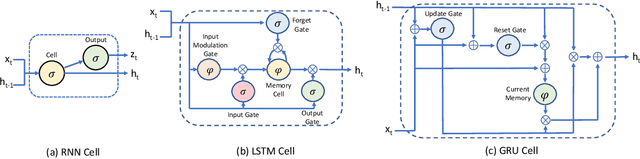

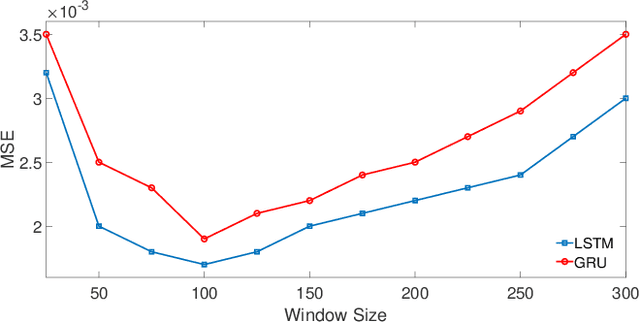

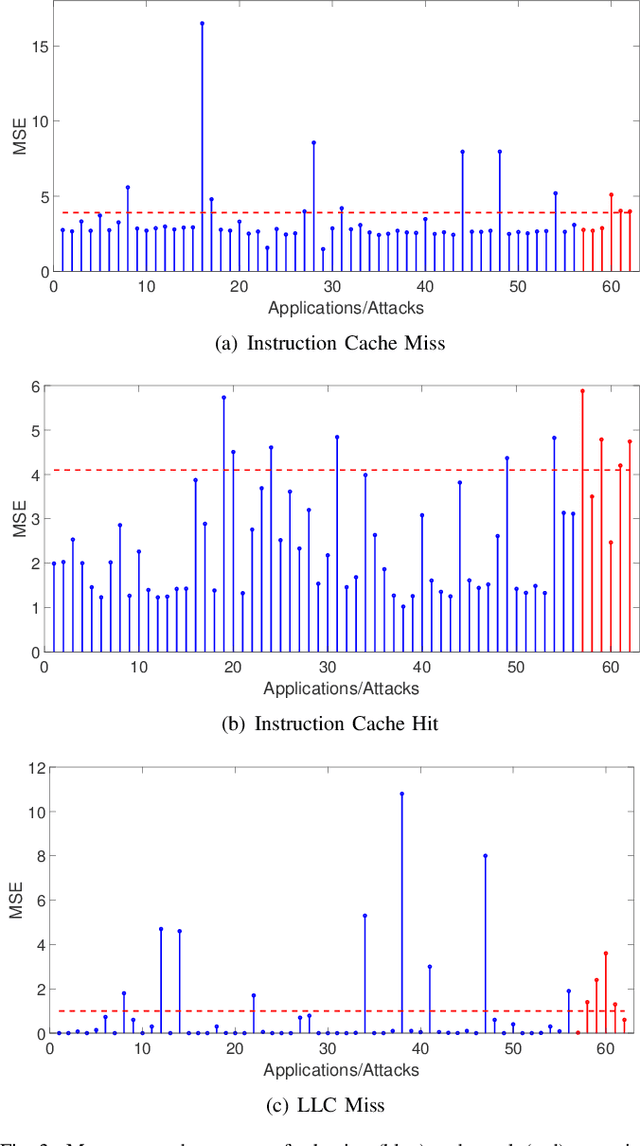

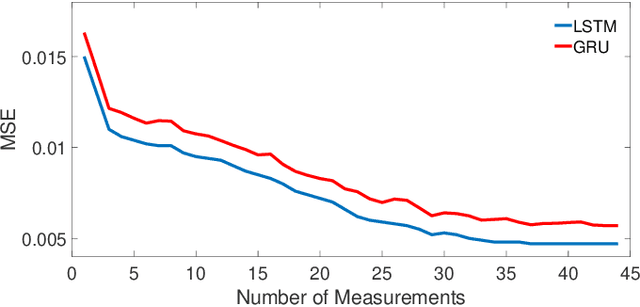

Abstract:The growing security threat of microarchitectural attacks underlines the importance of robust security sensors and detection mechanisms at the hardware level. While there are studies on runtime detection of cache attacks, a generic model to consider the broad range of existing and future attacks is missing. Unfortunately, previous approaches only consider either a single attack variant, e.g. Prime+Probe, or specific victim applications such as cryptographic implementations. Furthermore, the state-of-the-art anomaly detection methods are based on coarse-grained statistical models, which fail to detect anomalies in large-scale real-world systems. Thanks to the memory capability of advanced Recurrent Neural Network (RNN) algorithms, both short- and long-term dependencies can be learned more accurately. Therefore, we propose FortuneTeller, which for the first time leverages the superiority of RNNs to learn complex execution patterns and detects unseen microarchitectural attacks in real-world systems. FortuneTeller models benign workload patterns from a microarchitectural standpoint in an unsupervised fashion and then predicts how upcoming benign executions are supposed to behave. Potential attacks and malicious behaviors are detected automatically when there is a discrepancy between the predicted execution pattern and the runtime observation. We implement FortuneTeller based on the available hardware performance counters on Intel processors and train it with 10 million samples obtained from benign applications. For the first time, the latest attacks such as Meltdown, Spectre, Rowhammer and Zombieload are detected with one trained model and without observing these attacks during the training. We show that FortuneTeller achieves an F-score of 0.9970.

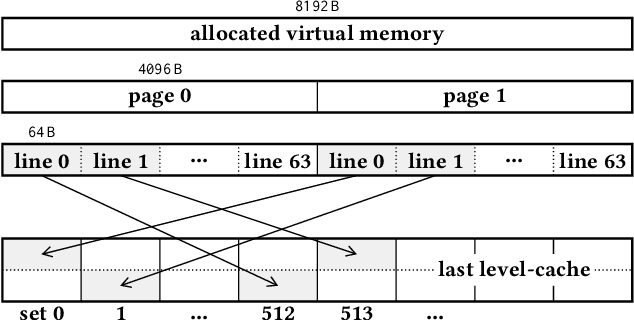

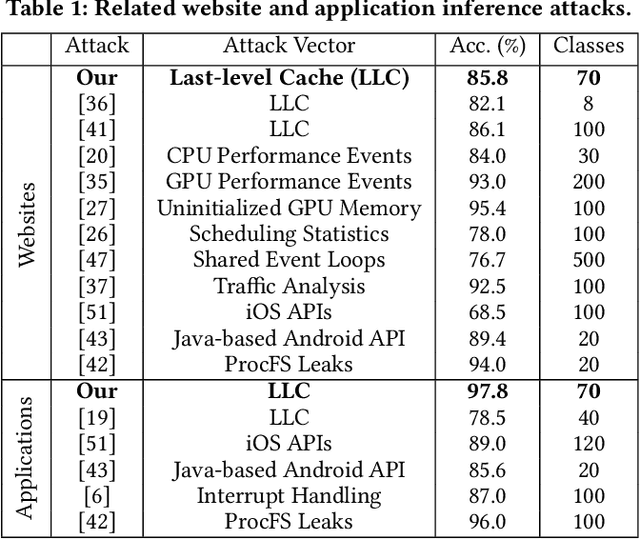

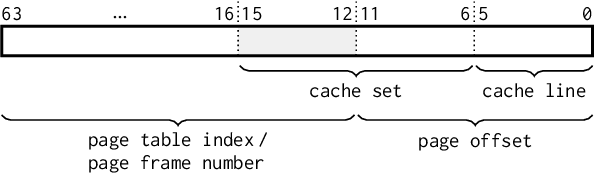

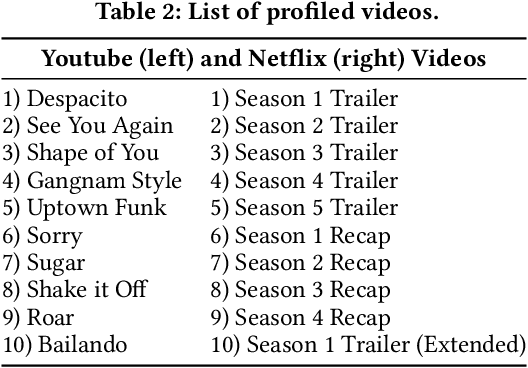

Undermining User Privacy on Mobile Devices Using AI

Nov 27, 2018

Abstract:Over the past years, literature has shown that attacks exploiting the microarchitecture of modern processors pose a serious threat to the privacy of mobile phone users. This is because applications leave distinct footprints in the processor, which can be used by malware to infer user activities. In this work, we show that these inference attacks are considerably more practical when combined with advanced AI techniques. In particular, we focus on profiling the activity in the last-level cache (LLC) of ARM processors. We employ a simple Prime+Probe based monitoring technique to obtain cache traces, which we classify with Deep Learning methods including Convolutional Neural Networks. We demonstrate our approach on an off-the-shelf Android phone by launching a successful attack from an unprivileged, zero-permission app in well under a minute. The app thereby detects running applications with an accuracy of 98% and reveals opened websites and streaming videos by monitoring the LLC for at most 6 seconds. This is possible because Deep Learning compensates for measurement disturbances stemming from the inherently noisy LLC monitoring and unfavorable cache characteristics such as random line replacement policies. In summary, our results show that thanks to advanced AI techniques, inference attacks are becoming alarmingly easy to implement and execute in practice. This once more calls for countermeasures that confine microarchitectural leakage and protect mobile phone applications, especially those valuing the privacy of their users.
