Thomas Ahle

Extended Mind Transformers

Jun 04, 2024

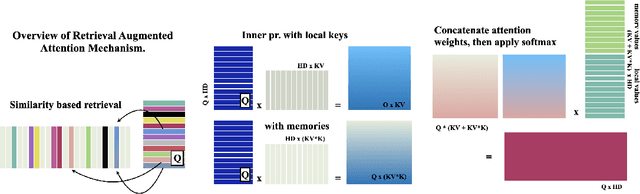

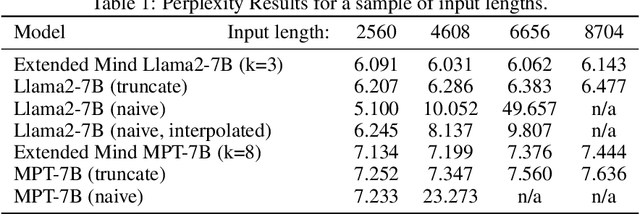

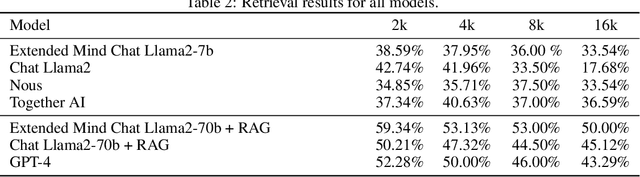

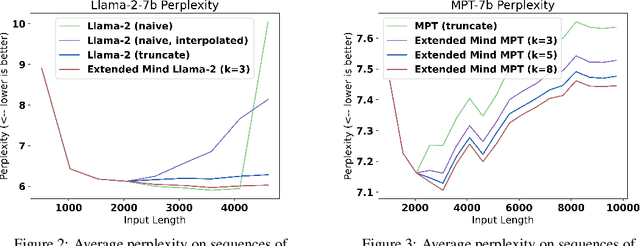

Abstract:Pre-trained language models demonstrate general intelligence and common sense, but long inputs quickly become a bottleneck for memorizing information at inference time. We resurface a simple method, Memorizing Transformers (Wu et al., 2022), that gives the model access to a bank of pre-computed memories. We show that it is possible to fix many of the shortcomings of the original method, such as the need for fine-tuning, by critically assessing how positional encodings should be updated for the keys and values retrieved. This intuitive method uses the model's own key/query system to select and attend to the most relevant memories at each generation step, rather than using external embeddings. We demonstrate the importance of external information being retrieved in a majority of decoder layers, contrary to previous work. We open source a new counterfactual long-range retrieval benchmark, and show that Extended Mind Transformers outperform today's state of the art by 6% on average.

Thermodynamic Computing System for AI Applications

Dec 08, 2023

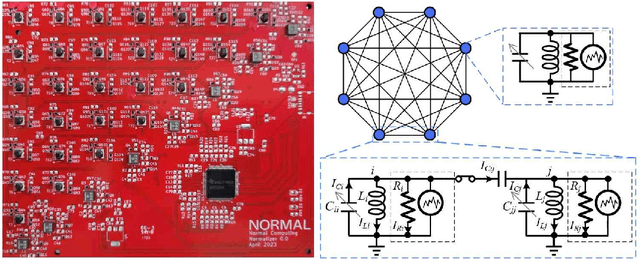

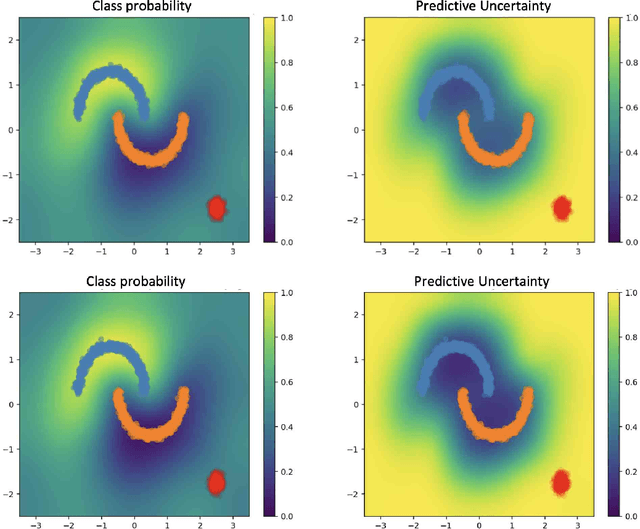

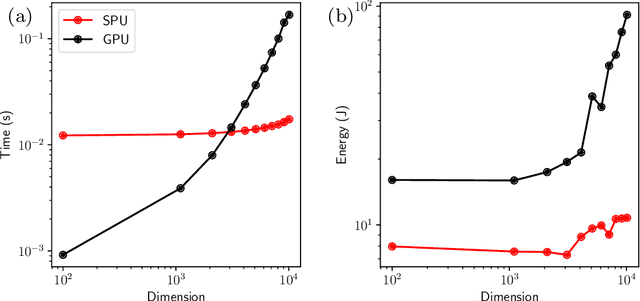

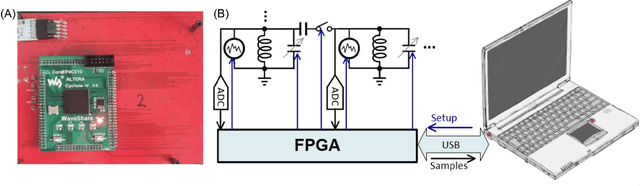

Abstract:Recent breakthroughs in artificial intelligence (AI) algorithms have highlighted the need for novel computing hardware in order to truly unlock the potential for AI. Physics-based hardware, such as thermodynamic computing, has the potential to provide a fast, low-power means to accelerate AI primitives, especially generative AI and probabilistic AI. In this work, we present the first continuous-variable thermodynamic computer, which we call the stochastic processing unit (SPU). Our SPU is composed of RLC circuits, as unit cells, on a printed circuit board, with 8 unit cells that are all-to-all coupled via switched capacitances. It can be used for either sampling or linear algebra primitives, and we demonstrate Gaussian sampling and matrix inversion on our hardware. The latter represents the first thermodynamic linear algebra experiment. We also illustrate the applicability of the SPU to uncertainty quantification for neural network classification. We envision that this hardware, when scaled up in size, will have significant impact on accelerating various probabilistic AI applications.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge