Thiago Vallin Spina

FOMTrace: Interactive Video Segmentation By Image Graphs and Fuzzy Object Models

Jun 10, 2016

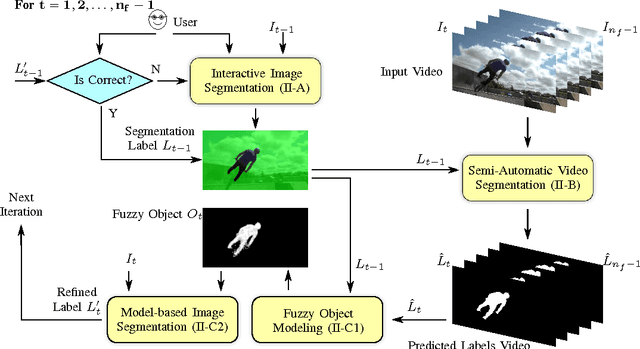

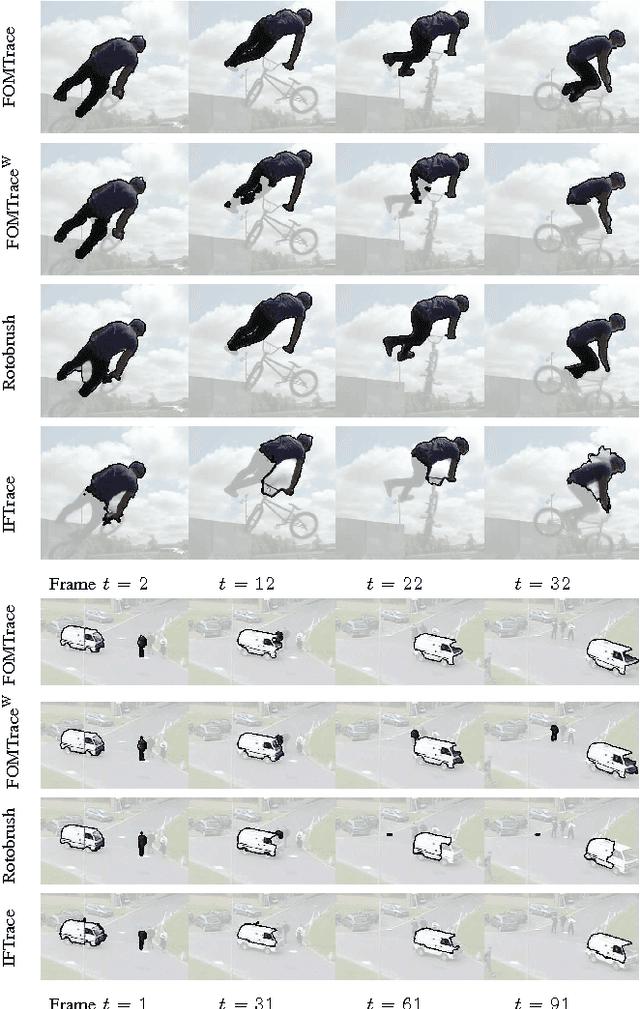

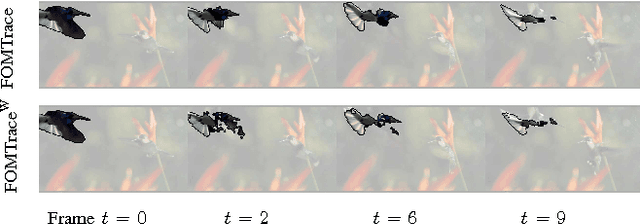

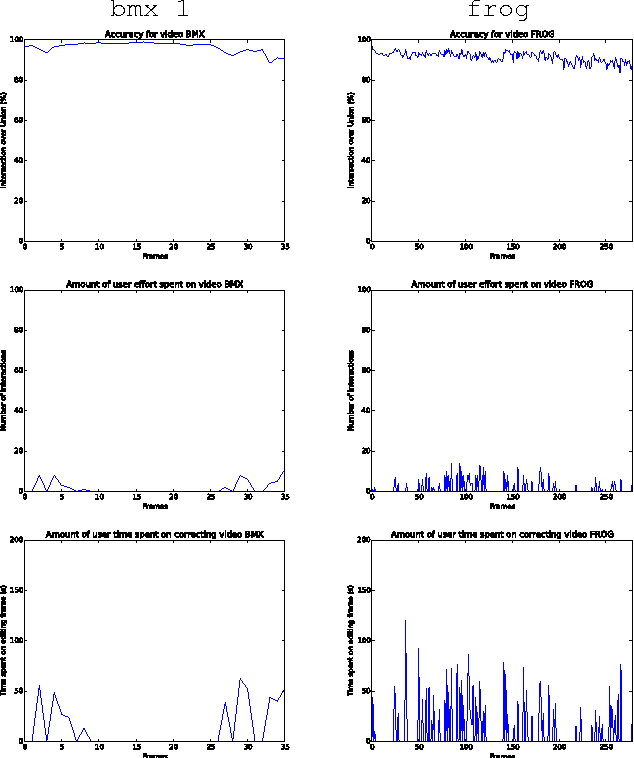

Abstract: Common users have changed from mere consumers to active producers of multimedia data content. Video editing plays an important role in this scenario, calling for simple segmentation tools that can handle fast-moving and deformable video objects with possible occlusions and color similarities with the background, among other challenges. We present an interactive video segmentation method, named FOMTrace, which addresses the problem in an effective and efficient way. From a user-provided object mask in a first frame, the method performs semi-automatic video segmentation on a spatiotemporal superpixel-graph, and then estimates a Fuzzy Object Model (FOM), which refines segmentation of the second frame by constraining delineation on a pixel-graph within a region where the object's boundary is expected to be. The user can correct/accept the refined object mask in the second frame, which is then similarly used to improve the spatiotemporal video segmentation of the remaining frames. Both steps are repeated alternately, within interactive response times, until the segmentation refinement of the final frame is accepted by the user. Extensive experiments demonstrate FOMTrace's ability to trace objects in comparison with state-of-the-art approaches for interactive video segmentation and for supervised and unsupervised object tracking.

Computer vision tools for the non-invasive assessment of autism-related behavioral markers

Nov 08, 2012

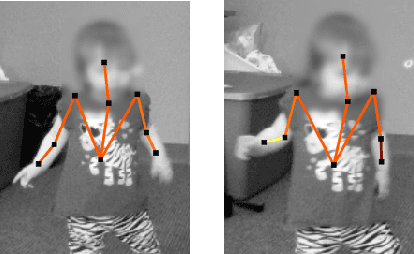

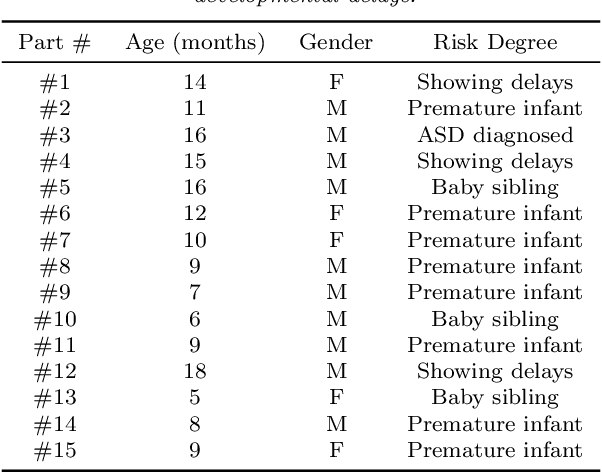

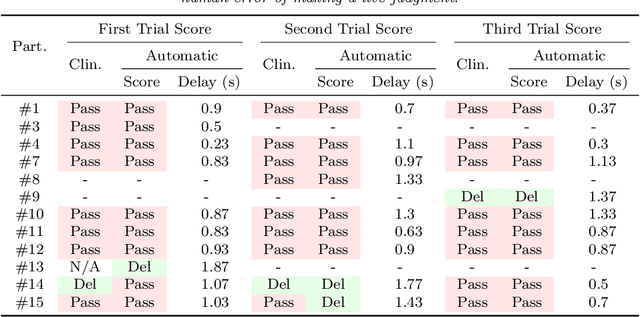

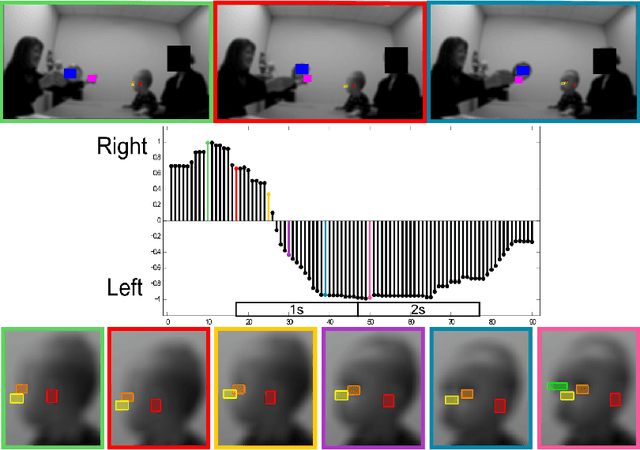

Abstract: The early detection of developmental disorders is key to child outcome, allowing interventions to be initiated that promote development and improve prognosis. Research on autism spectrum disorder (ASD) suggests behavioral markers can be observed late in the first year of life. Many of these studies involved extensive frame-by-frame video observation and analysis of a child's natural behavior. Although non-intrusive, these methods are extremely time-intensive and require a high level of observer training; thus, they are impractical for clinical and large population research purposes. Diagnostic measures for ASD are available for infants but are only accurate when used by specialists experienced in early diagnosis. This work is a first milestone in a long-term multidisciplinary project that aims at helping clinicians and general practitioners accomplish this early detection/measurement task automatically. We focus on providing computer vision tools to measure and identify ASD behavioral markers based on components of the Autism Observation Scale for Infants (AOSI). In particular, we develop algorithms to measure three critical AOSI activities that assess visual attention. We augment these AOSI activities with an additional test that analyzes asymmetrical patterns in unsupported gait. The first set of algorithms involves assessing head motion by tracking facial features, while the gait analysis relies on joint foreground segmentation and 2D body pose estimation in video. We show results that provide insightful knowledge to augment the clinician's behavioral observations obtained from real in-clinic assessments.
