Thanh Ngo

An Analysis of State-of-the-art Activation Functions For Supervised Deep Neural Network

Apr 05, 2021

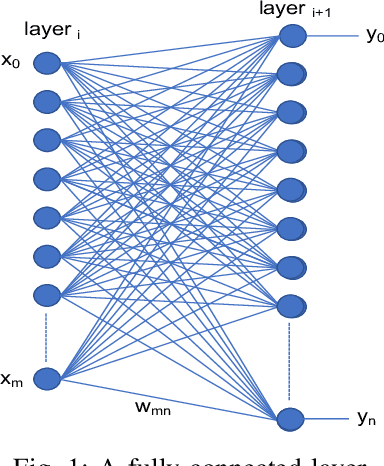

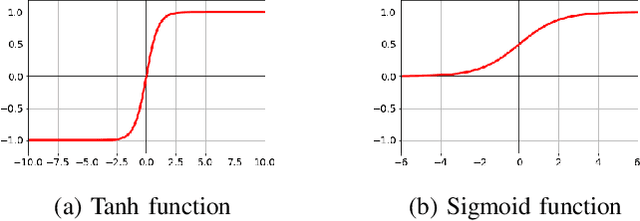

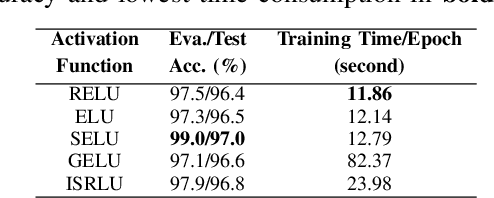

Abstract: This paper analyzes state-of-the-art activation functions for supervised classification with deep neural networks. The activation functions considered are the Rectified Linear Unit (ReLU), Exponential Linear Unit (ELU), Scaled Exponential Linear Unit (SELU), Gaussian Error Linear Unit (GELU), and Inverse Square Root Linear Unit (ISRLU). To evaluate them, experiments are conducted on two deep learning architectures that integrate these activation functions. The first model, based on a Multilayer Perceptron (MLP), is evaluated on the MNIST dataset to compare the activation functions. The second model, a VGGish-like architecture, is applied to Acoustic Scene Classification (ASC) Task 1A of the DCASE 2018 challenge, to assess whether these activation functions work well across different datasets and different network architectures.
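For readers who want the five functions side by side, the following is a minimal sketch of their commonly published scalar definitions. The abstract does not state which hyperparameters the paper uses, so the default `alpha` values and the SELU constants below are assumptions taken from the standard formulations, and the function names are illustrative only.

```python
import math

# SELU constants from the standard formulation (assumed, not stated in the abstract).
SELU_ALPHA = 1.6732632423543772
SELU_SCALE = 1.0507009873554805


def relu(x: float) -> float:
    """ReLU: pass positive inputs through, clamp negatives to zero."""
    return max(0.0, x)


def elu(x: float, alpha: float = 1.0) -> float:
    """ELU: identity for x > 0, smooth exponential saturation toward -alpha otherwise."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def selu(x: float) -> float:
    """SELU: ELU with fixed alpha, multiplied by a fixed scale factor."""
    return SELU_SCALE * elu(x, SELU_ALPHA)


def gelu(x: float) -> float:
    """GELU (exact form): x times the standard normal CDF evaluated at x."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def isrlu(x: float, alpha: float = 1.0) -> float:
    """ISRLU: identity for x >= 0, x / sqrt(1 + alpha * x^2) otherwise."""
    return x if x >= 0 else x / math.sqrt(1.0 + alpha * x * x)


if __name__ == "__main__":
    for name, fn in [("relu", relu), ("elu", elu), ("selu", selu),
                     ("gelu", gelu), ("isrlu", isrlu)]:
        print(name, [round(fn(v), 4) for v in (-2.0, -0.5, 0.0, 0.5, 2.0)])
```

All five agree with the identity (up to SELU's scale) for large positive inputs; they differ mainly in how they treat negative inputs, which is the region the comparison in the paper would be sensitive to.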

Binomial Tails for Community Analysis

Dec 17, 2020

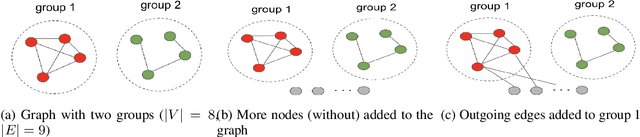

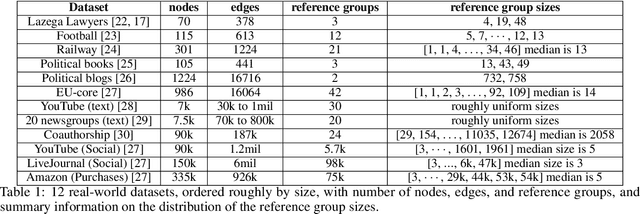

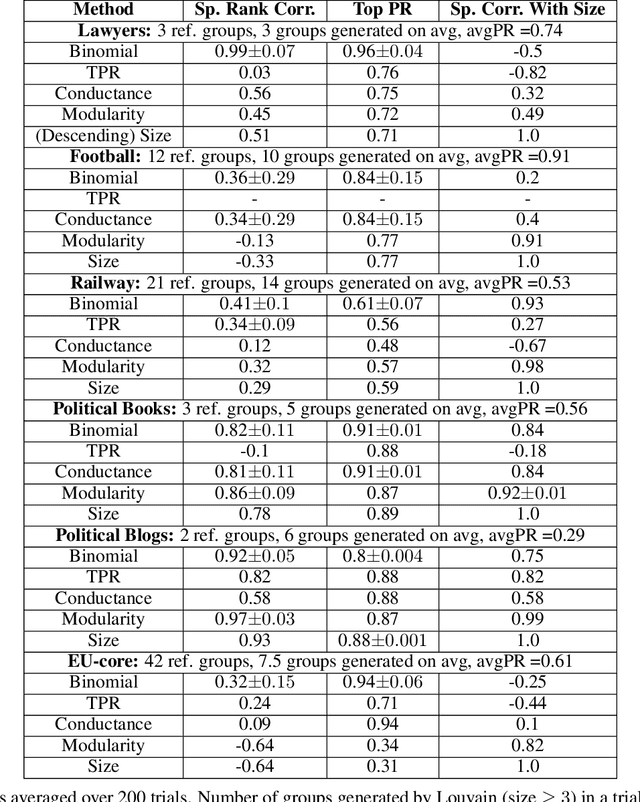

Abstract: An important task of community discovery in networks is assessing the significance of the results and robustly ranking the generated candidate groups. Often in practice, numerous candidate communities are discovered, and focusing the analyst's time on the most salient and promising findings is crucial. We develop simple, efficient group scoring functions derived from tail probabilities using binomial models. Experiments on synthetic and numerous real-world data provide evidence that binomial scoring leads to a more robust ranking than other inexpensive scoring functions, such as conductance. Furthermore, we obtain confidence values ($p$-values) that can be used for filtering and labeling the discovered groups. Our analyses shed light on various properties of the approach. The binomial tail is simple and versatile, and we describe two other applications for community analysis: degree of community membership (which in turn yields group-scoring functions), and the discovery of significant edges in the community-induced graph.
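To make the idea of a binomial tail score concrete, here is one possible sketch. The abstract does not specify the null model, so the setup below is an assumption: each of the possible node pairs inside a group is treated as an independent trial that yields an edge with probability equal to the overall graph density, and the score is the negative log of the upper-tail probability of seeing at least the observed number of internal edges. The names `binomial_upper_tail` and `group_score` are hypothetical and not taken from the paper.

```python
from math import comb, log


def binomial_upper_tail(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p), computed by direct summation."""
    return sum(comb(n, i) * p**i * (1.0 - p) ** (n - i) for i in range(k, n + 1))


def group_score(internal_edges: int, group_size: int, graph_density: float) -> float:
    """Score a candidate group by how surprising its internal edge count is.

    Assumed null model (not from the paper): every pair of nodes in the group
    is linked independently with probability `graph_density`.
    A higher score means a smaller tail probability (p-value).
    """
    possible_pairs = group_size * (group_size - 1) // 2
    tail = binomial_upper_tail(internal_edges, possible_pairs, graph_density)
    return -log(tail) if tail > 0.0 else float("inf")


if __name__ == "__main__":
    # Two hypothetical 5-node groups in a graph with density 0.1.
    print("dense group :", group_score(9, 5, 0.1))
    print("sparse group:", group_score(2, 5, 0.1))
```

Direct summation is adequate for small groups; for large groups a log-space or library implementation of the binomial survival function would be the more numerically stable choice.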
