Teng Gao

CycleGAN with Dual Adversarial Loss for Bone-Conducted Speech Enhancement

Nov 02, 2021

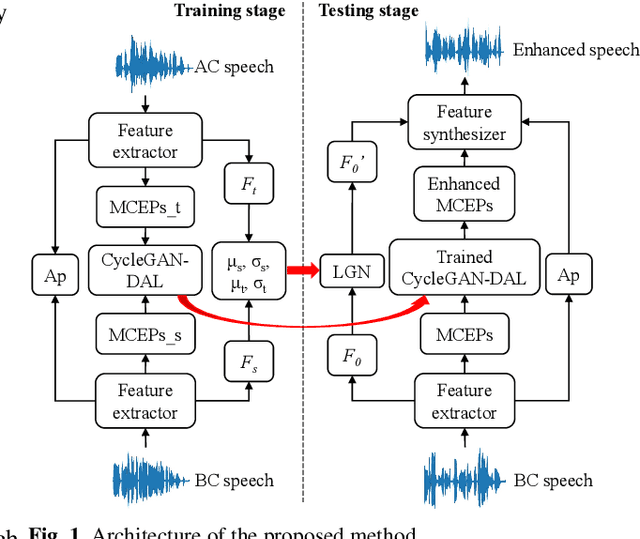

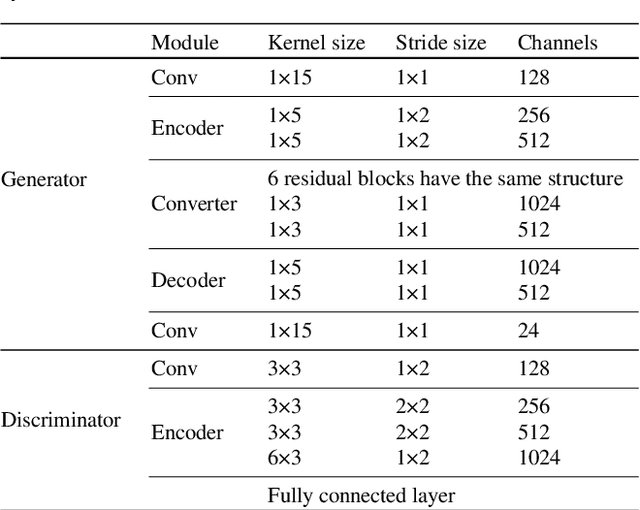

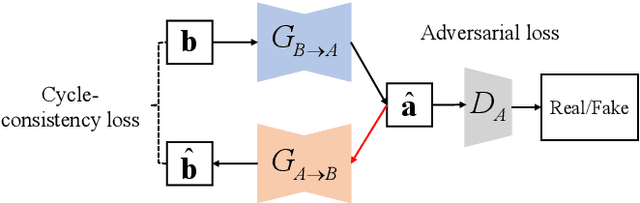

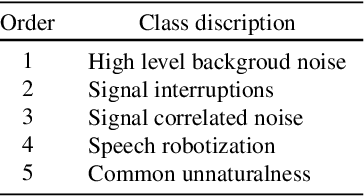

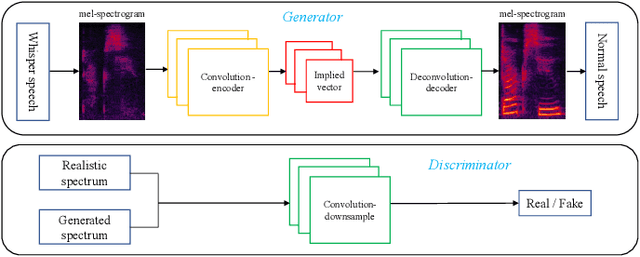

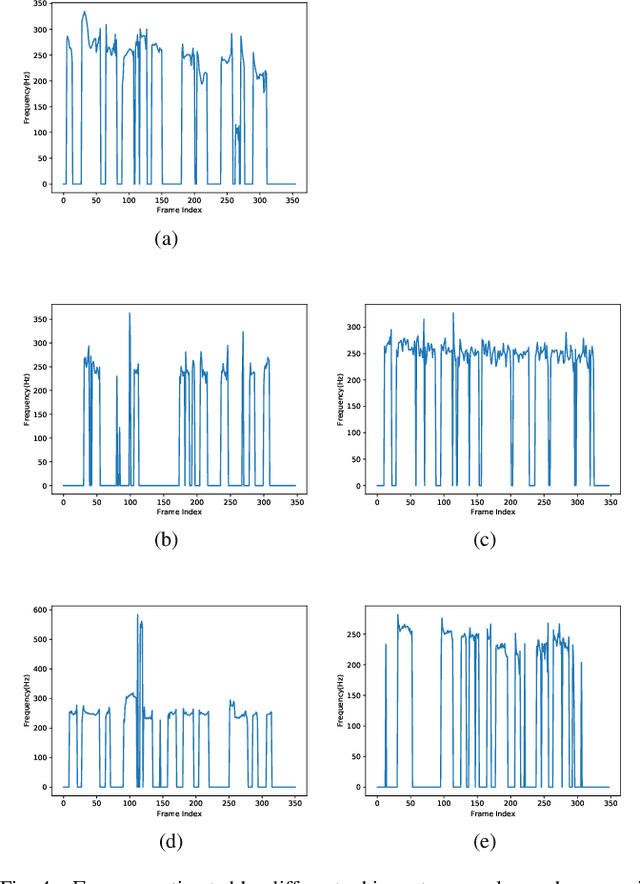

Abstract: Compared with air-conducted speech, bone-conducted speech has the unique advantage of shielding background noise. Enhancement of bone-conducted speech helps to improve its quality and intelligibility. In this paper, a novel CycleGAN with dual adversarial loss (CycleGAN-DAL) is proposed for bone-conducted speech enhancement. The proposed method uses an adversarial loss and a cycle-consistent loss simultaneously to learn the forward and cyclic mappings, with the adversarial loss replaced by a classification adversarial loss and a defect adversarial loss to consolidate the forward mapping. Compared with conventional baseline methods, it can learn the feature mapping between bone-conducted speech and target speech without additional air-conducted speech assistance. Moreover, the proposed method avoids the over-smoothing problem that commonly occurs in conventional statistical-based models. Experimental results show that the proposed method outperforms baseline methods such as CycleGAN, GMM, and BLSTM.

Keywords: Bone-conducted speech enhancement, dual adversarial loss, Parallel CycleGAN, high-frequency speech reconstruction
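The pairing of an adversarial term with a cycle-consistent term described in this abstract can be illustrated with a minimal sketch. It assumes the standard CycleGAN formulation (an L1 cycle loss and a least-squares adversarial loss) with toy scalar-gain mappings standing in for the generators. The function names, the weight `lambda_cyc`, and the toy mappings are hypothetical and not taken from the paper, and the classification and defect adversarial losses of CycleGAN-DAL are not reproduced here.

```python
# Toy sketch of a CycleGAN-style generator objective (not the paper's CycleGAN-DAL).
# All names, mappings, and values below are illustrative assumptions.

def l1(a, b):
    """Mean absolute error between two equal-length feature vectors."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def cycle_loss(x, y, G, F):
    """Cycle-consistency: F(G(x)) should recover x and G(F(y)) should recover y."""
    return l1(F(G(x)), x) + l1(G(F(y)), y)

def lsgan_generator_loss(d_scores):
    """Least-squares adversarial loss for the generator: push D(G(x)) toward 1."""
    return sum((s - 1.0) ** 2 for s in d_scores) / len(d_scores)

def generator_objective(x, y, G, F, D_y, lambda_cyc=10.0):
    """Adversarial term on the forward mapping plus a weighted cycle term."""
    adv = lsgan_generator_loss([D_y(G(x))])
    return adv + lambda_cyc * cycle_loss(x, y, G, F)

# Hypothetical stand-ins: G doubles each feature, F halves it,
# and the discriminator D_y always answers 0.5 (undecided).
G = lambda v: [2.0 * t for t in v]
F = lambda v: [0.5 * t for t in v]
D_y = lambda v: 0.5

bone = [0.1, 0.2, 0.3]     # toy "bone-conducted" features
target = [0.2, 0.4, 0.6]   # toy "target speech" features

cyc = cycle_loss(bone, target, G, F)
total = generator_objective(bone, target, G, F, D_y)
```

Because the toy mappings are exact inverses of each other, the cycle term vanishes and only the adversarial term contributes to `total`. In a real system both generators and the discriminator would be neural networks trained jointly.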

Attention-Guided Generative Adversarial Network for Whisper to Normal Speech Conversion

Nov 02, 2021

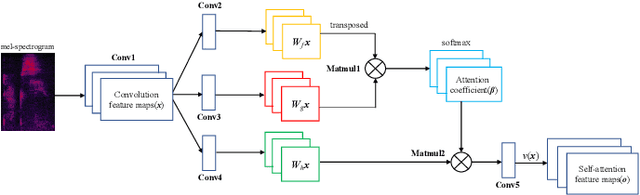

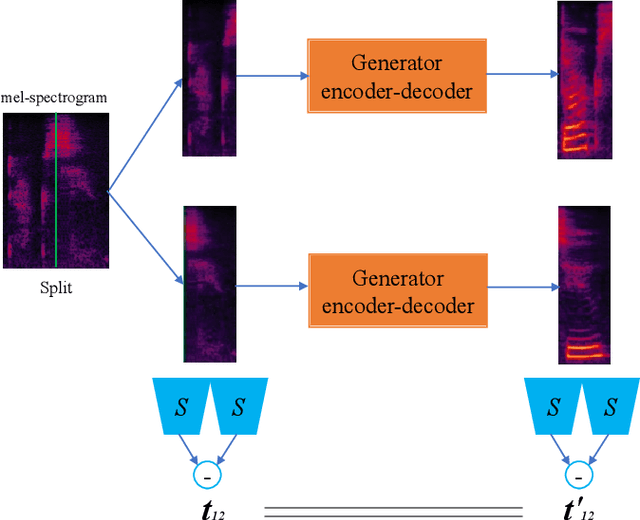

Abstract: Whispered speech is a special way of pronunciation that does not use vocal cord vibration. Whispered speech does not contain a fundamental frequency, and its energy is about 20 dB lower than that of normal speech. Converting whispered speech into normal speech can improve speech quality and intelligibility. In this paper, a novel attention-guided generative adversarial network model incorporating an autoencoder, a Siamese neural network, and an identity mapping loss function for whisper to normal speech conversion (AGAN-W2SC) is proposed. The proposed method avoids the challenge of estimating the fundamental frequency of the normal voiced speech converted from whispered speech. Specifically, the proposed model is more amenable to practical applications because it does not need to align speech features for training. Experimental results demonstrate that the proposed AGAN-W2SC can obtain improved speech quality and intelligibility compared with dynamic-time-warping-based methods.
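To put the "about 20 dB lower" figure in concrete terms, the short sketch below converts a decibel gap into linear ratios. It assumes the figure refers to signal energy (so the 10·log10 convention applies to energy and the 20·log10 convention to amplitude). The helper names and the sample values are hypothetical and are not data from the paper.

```python
import math

def db_to_power_ratio(db):
    """Linear energy (power) ratio corresponding to a level difference in dB."""
    return 10.0 ** (db / 10.0)

def db_to_amplitude_ratio(db):
    """Linear amplitude ratio corresponding to a level difference in dB."""
    return 10.0 ** (db / 20.0)

def energy_db(samples):
    """Energy of a sample sequence expressed in dB (relative to unit energy)."""
    energy = sum(s * s for s in samples)
    return 10.0 * math.log10(energy)

# A 20 dB drop in energy: 1/100 of the energy, 1/10 of the amplitude.
power_ratio = db_to_power_ratio(-20.0)
amp_ratio = db_to_amplitude_ratio(-20.0)

# Made-up samples: scaling amplitude by amp_ratio lowers energy by 20 dB.
normal = [0.5, -0.4, 0.3, -0.2]
whisper = [amp_ratio * s for s in normal]
gap = energy_db(whisper) - energy_db(normal)
```

Under this assumption, whispered speech carries roughly one hundredth of the energy of normal speech, which corresponds to about one tenth of the amplitude.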
