Tena Dubček

Binary classification of spoken words with passive elastic metastructures

Nov 14, 2021

Abstract: Many electronic devices spend most of their time waiting for a wake-up event: pacemakers waiting for an anomalous heartbeat, security systems on alert to detect an intruder, smartphones listening for the user to say a wake-up phrase. These devices continuously convert physical signals into electrical currents that are then analyzed on a digital computer -- leading to power consumption even when no event is taking place. Solving this problem requires the ability to passively distinguish relevant from irrelevant events (e.g. tell a wake-up phrase from a regular conversation). Here, we experimentally demonstrate an elastic metastructure, consisting of a network of coupled silicon resonators, that passively discriminates between pairs of spoken words -- solving the wake-up problem for scenarios where only two classes of events are possible. This passive speech recognition is demonstrated on a dataset from speakers with significant gender and accent diversity. The geometry of the metastructure is determined during the design process, in which the network of resonators ('mechanical neurones') learns to selectively respond to spoken words. Training is facilitated by a machine learning model that reduces the number of computationally expensive three-dimensional elastic wave simulations. By embedding event detection in the structural dynamics, mechanical neural networks thus enable novel classes of always-on smart devices with no standby power consumption.

Tunable Efficient Unitary Neural Networks (EUNN) and their application to RNNs

Apr 03, 2017

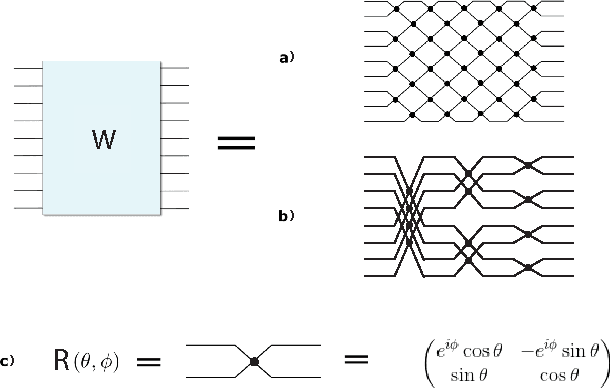

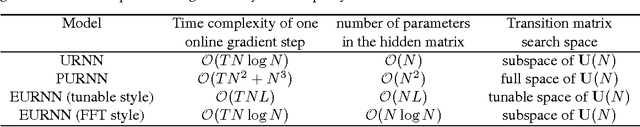

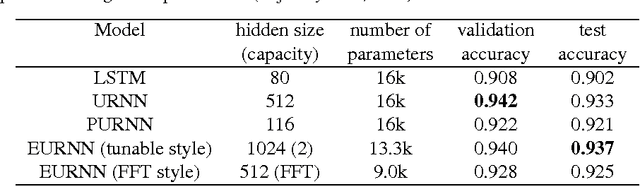

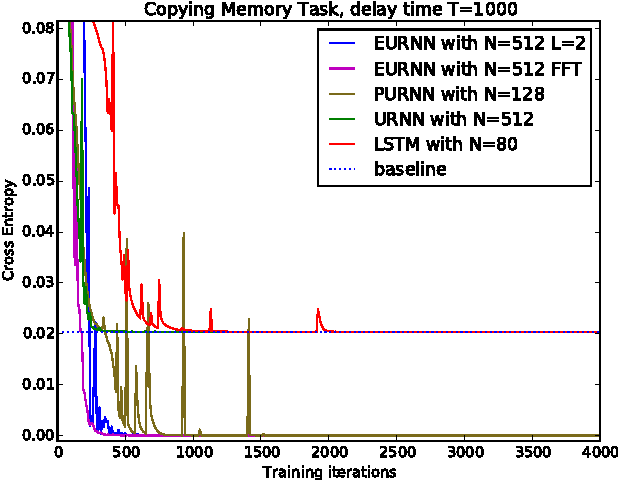

Abstract: Using unitary (instead of general) matrices in artificial neural networks (ANNs) is a promising way to solve the gradient explosion/vanishing problem, as well as to enable ANNs to learn long-term correlations in the data. This approach appears particularly promising for Recurrent Neural Networks (RNNs). In this work, we present a new architecture for implementing an Efficient Unitary Neural Network (EUNN); its main advantages can be summarized as follows. Firstly, the representation capacity of the unitary space in an EUNN is fully tunable, ranging from a subspace of SU(N) to the entire unitary space. Secondly, the computational complexity for training an EUNN is merely $\mathcal{O}(1)$ per parameter. Finally, we test the performance of EUNNs on the standard copying task, the pixel-permuted MNIST digit recognition benchmark as well as the Speech Prediction Test (TIMIT). We find that our architecture significantly outperforms both other state-of-the-art unitary RNNs and the LSTM architecture, in terms of the final performance and/or the wall-clock training speed. EUNNs are thus promising alternatives to RNNs and LSTMs for a wide variety of applications.
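The norm-preservation property behind the gradient explosion/vanishing claim can be illustrated with a small sketch. This is not the EUNN architecture from the paper; it is a toy example under stated assumptions: a 2x2 rotation matrix stands in for a unitary recurrent weight, and scaled copies of it (factors 0.9 and 1.1, chosen here purely for illustration) stand in for general, non-unitary weights. The function names are hypothetical.

```python
import math

def rotation(theta, scale=1.0):
    # A 2x2 rotation is orthogonal (the real case of unitary) when scale == 1.
    # scale != 1 gives a non-unitary matrix whose singular values all equal `scale`.
    c, s = math.cos(theta), math.sin(theta)
    return [[scale * c, -scale * s], [scale * s, scale * c]]

def apply(w, h):
    # Matrix-vector product for a 2x2 matrix and a length-2 vector.
    return [w[0][0] * h[0] + w[0][1] * h[1],
            w[1][0] * h[0] + w[1][1] * h[1]]

def norm_after_steps(w, h0, steps):
    # Repeatedly apply the same weight matrix, as a linear recurrence would,
    # and return the Euclidean norm of the final state.
    h = list(h0)
    for _ in range(steps):
        h = apply(w, h)
    return math.hypot(h[0], h[1])

h0 = [0.6, 0.8]   # unit-norm starting state
steps = 200       # assumed sequence length, for illustration only

unitary_norm = norm_after_steps(rotation(0.3), h0, steps)      # stays near 1
shrunk_norm = norm_after_steps(rotation(0.3, 0.9), h0, steps)  # decays toward 0
grown_norm = norm_after_steps(rotation(0.3, 1.1), h0, steps)   # grows very large

print(unitary_norm, shrunk_norm, grown_norm)
```

The same argument applies to back-propagated gradients in a linear recurrence, since they are multiplied by the transpose of the weight at every step; nonlinearities in a real RNN complicate the picture, so this should be read only as intuition for why unitary weights are attractive.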