Tassadit Bouadi

LACODAM, UR1

Generating robust counterfactual explanations

Apr 24, 2023

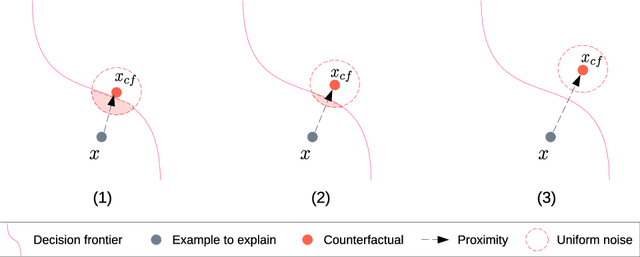

Abstract: Counterfactual explanations have become a mainstay of the XAI field. This particularly intuitive statement allows the user to understand what small but necessary changes would have to be made to a given situation in order to change a model prediction. The quality of a counterfactual depends on several criteria: realism, actionability, validity, robustness, etc. In this paper, we are interested in the notion of robustness of a counterfactual. More precisely, we focus on robustness to counterfactual input changes. This form of robustness is particularly challenging as it involves a trade-off between the robustness of the counterfactual and its proximity to the example to explain. We propose a new framework, CROCO, that generates robust counterfactuals while effectively managing this trade-off, and guarantees the user a minimal level of robustness. An empirical evaluation on tabular datasets confirms the relevance and effectiveness of our approach.
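To make the trade-off described in this abstract concrete, the following is a minimal sketch, not the CROCO method itself. It assumes a toy linear classifier and Gaussian noise on the counterfactual's inputs, and estimates by Monte Carlo sampling how often a perturbed counterfactual keeps the target class. The names `predict` and `estimated_robustness`, the weights, and the noise scale `sigma` are all hypothetical choices made for illustration.

```python
import numpy as np

def predict(x, w, b):
    """Toy linear classifier (an assumption): class 1 when w.x + b > 0, else 0."""
    return (x @ w + b > 0).astype(int)

def estimated_robustness(x_cf, w, b, target=1, sigma=0.1, n_samples=10_000, seed=0):
    """Fraction of Gaussian-perturbed copies of x_cf still predicted as `target`."""
    rng = np.random.default_rng(seed)
    noise = rng.normal(0.0, sigma, size=(n_samples, x_cf.shape[0]))
    return float(np.mean(predict(x_cf + noise, w, b) == target))

# Hypothetical model and instance to explain (predicted as class 0).
w = np.array([1.0, 1.0])
b = -1.0
x = np.array([0.2, 0.2])

# Two candidate counterfactuals: one just across the boundary, one further away.
cf_close = np.array([0.52, 0.52])
cf_far = np.array([0.8, 0.8])

for name, cf in [("close", cf_close), ("far", cf_far)]:
    distance = np.linalg.norm(cf - x)
    robustness = estimated_robustness(cf, w, b)
    print(f"{name}: distance={distance:.3f}, estimated robustness={robustness:.3f}")
```

In this toy setting, the counterfactual closer to the original instance is less robust to input noise than the one further from the decision boundary, which is the tension between proximity and robustness that the abstract refers to.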

VCNet: A self-explaining model for realistic counterfactual generation

Dec 21, 2022

Abstract: Counterfactual explanation is a common class of methods to make local explanations of machine learning decisions. For a given instance, these methods aim to find the smallest modification of feature values that changes the predicted decision made by a machine learning model. One of the challenges of counterfactual explanation is the efficient generation of realistic counterfactuals. To address this challenge, we propose VCNet (Variational Counter Net), a model architecture that combines a predictor and a counterfactual generator that are jointly trained, for regression or classification tasks. VCNet is able both to generate predictions and to generate counterfactual explanations without having to solve another minimisation problem. Our contribution is the generation of counterfactuals that are close to the distribution of the predicted class. This is done by learning a variational autoencoder conditioned on the output of the predictor in a joint-training fashion. We present an empirical evaluation on tabular datasets and across several interpretability metrics. The results are competitive with the state-of-the-art method.

Preference fusion and Condorcet's Paradox under uncertainty

Aug 09, 2017

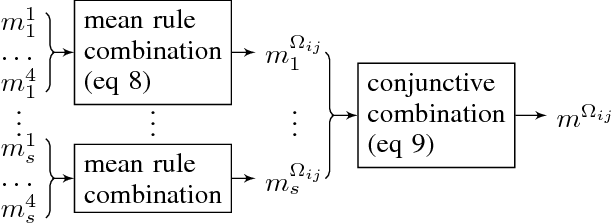

Abstract: Facing an unknown situation, a person may not be able to firmly elicit his/her preferences over different alternatives, so he/she tends to express uncertain preferences. Given a community of different persons expressing their preferences over certain alternatives under uncertainty, to get a collective representative opinion of the whole community, a preference fusion process is required. The aim of this work is to propose a preference fusion method that copes with uncertainty and escapes the Condorcet paradox. To model preferences under uncertainty, we propose to develop a model of preferences based on belief function theory that accurately describes and captures the uncertainty associated with individual or collective preferences. This work improves and extends the contribution presented in a previous work. The benefits of our contribution are twofold. On the one hand, we propose a qualitative and expressive preference modeling strategy based on belief function theory which scales better with the number of sources. On the other hand, we propose an incremental distance-based algorithm (using the Jousselme distance) for the construction of the collective preference order to avoid the Condorcet paradox.
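For readers unfamiliar with the Condorcet paradox mentioned in this abstract, the sketch below shows the classical, certainty-only version of it: three voters with strict rankings whose pairwise majority votes form a cycle, so no alternative beats all the others. It does not implement the belief-function model or the distance-based algorithm of the paper; the function names `pairwise_majority` and `condorcet_winner` and the example profile are hypothetical and chosen for illustration.

```python
from itertools import combinations

def pairwise_majority(rankings):
    """Return the set of (winner, loser) pairs decided by a strict majority."""
    alternatives = rankings[0]
    wins = set()
    for a, b in combinations(alternatives, 2):
        # A voter prefers a to b when a appears earlier in that voter's ranking.
        a_votes = sum(r.index(a) < r.index(b) for r in rankings)
        b_votes = len(rankings) - a_votes
        if a_votes > b_votes:
            wins.add((a, b))
        elif b_votes > a_votes:
            wins.add((b, a))
    return wins

def condorcet_winner(rankings):
    """Return the alternative that beats every other one pairwise, or None."""
    wins = pairwise_majority(rankings)
    alternatives = rankings[0]
    for a in alternatives:
        if all((a, b) in wins for b in alternatives if b != a):
            return a
    return None

# Classic cyclic profile: each ranking lists alternatives from most to least preferred.
profile = [
    ["A", "B", "C"],
    ["B", "C", "A"],
    ["C", "A", "B"],
]

print(sorted(pairwise_majority(profile)))
print(condorcet_winner(profile))
```

Here A beats B, B beats C, and C beats A, each by two votes to one, so the majority relation is cyclic and no collective order can be read off directly. This is the situation a fusion method has to resolve.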
