Tanveer Khan

To Vaccinate or not to Vaccinate? Analyzing $\mathbb{X}$ Power over the Pandemic

Mar 04, 2025

Abstract:The COVID-19 pandemic has profoundly affected the normal course of life -- from lock-downs and virtual meetings to the unprecedentedly swift creation of vaccines. To halt the COVID-19 pandemic, the world has started preparing for the global vaccine roll-out. In an effort to navigate the immense volume of information about COVID-19, the public has turned to social networks. Among them, $\mathbb{X}$ (formerly Twitter) has played a key role in distributing related information. Most people are not trained to interpret medical research and remain skeptical about the efficacy of new vaccines. Measuring their reactions and perceptions is gaining significance in the fight against COVID-19. To assess the public perception regarding the COVID-19 vaccine, our work applies a sentiment analysis approach, using natural language processing of $\mathbb{X}$ data. We show how to use textual analytics and textual data visualization to discover early insights (for example, by analyzing the most frequently used keywords and hashtags). Furthermore, we look at how people's sentiments vary across the countries. Our results indicate that although the overall reaction to the vaccine is positive, there are also negative sentiments associated with the tweets, especially when examined at the country level. Additionally, from the extracted tweets, we manually labeled 100 tweets as positive and 100 tweets as negative and trained various One-Class Classifiers (OCCs). The experimental results indicate that the S-SVDD classifiers outperform other OCCs.
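The keyword and hashtag frequency analysis mentioned in the abstract can be illustrated with a minimal sketch. The sample tweets and the `top_hashtags` helper below are hypothetical and invented for illustration; they are not the authors' data or pipeline.

```python
import re
from collections import Counter

# Hypothetical sample tweets, invented for illustration only.
tweets = [
    "Got my first dose today! #CovidVaccine #grateful",
    "Still unsure about side effects... #covidvaccine",
    "Vaccines save lives. #CovidVaccine #science",
]


def top_hashtags(texts, n=3):
    """Count hashtags case-insensitively and return the n most common."""
    counts = Counter(
        tag.lower()
        for text in texts
        for tag in re.findall(r"#\w+", text)
    )
    return counts.most_common(n)


print(top_hashtags(tweets, n=1))  # [('#covidvaccine', 3)]
```

Lower-casing before counting merges variants such as `#CovidVaccine` and `#covidvaccine`, which would otherwise split the count of a single topic.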

Make Split, not Hijack: Preventing Feature-Space Hijacking Attacks in Split Learning

Apr 14, 2024

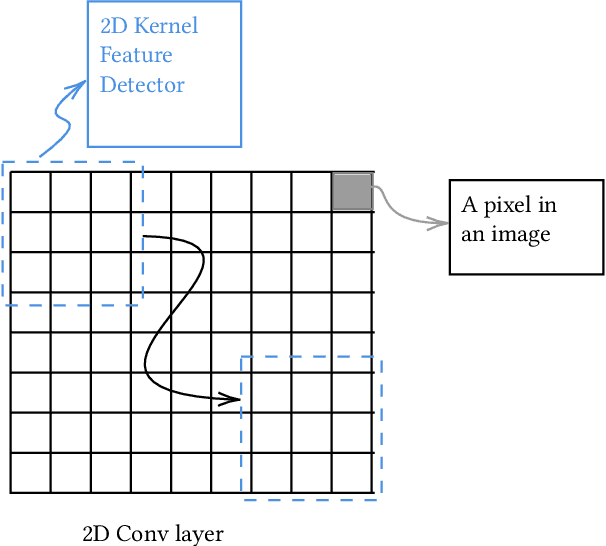

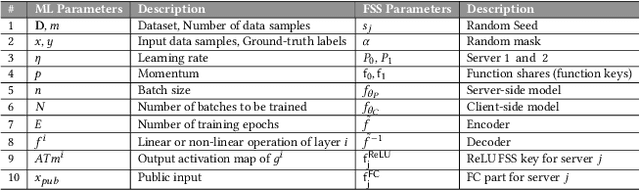

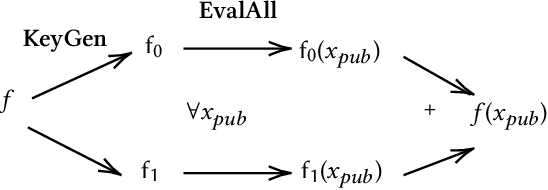

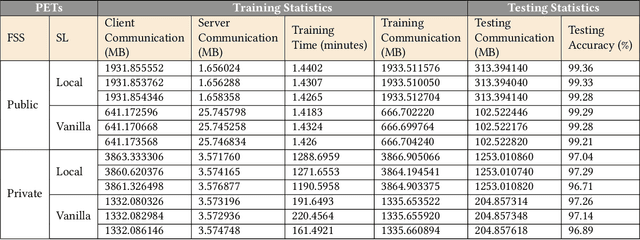

Abstract:The popularity of Machine Learning (ML) makes the privacy of sensitive data more imperative than ever. Collaborative learning techniques like Split Learning (SL) aim to protect client data while enhancing ML processes. Though promising, SL has been proved to be vulnerable to a plethora of attacks, thus raising concerns about its effectiveness on data privacy. In this work, we introduce a hybrid approach combining SL and Function Secret Sharing (FSS) to ensure client data privacy. The client adds a random mask to the activation map before sending it to the servers. The servers cannot access the original function but instead work with shares generated using FSS. Consequently, during both forward and backward propagation, the servers cannot reconstruct the client's raw data from the activation map. Furthermore, through visual invertibility, we demonstrate that the server is incapable of reconstructing the raw image data from the activation map when using FSS. It enhances privacy by reducing privacy leakage compared to other SL-based approaches where the server can access client input information. Our approach also ensures security against feature space hijacking attack, protecting sensitive information from potential manipulation. Our protocols yield promising results, reducing communication overhead by over 2x and training time by over 7x compared to the same model with FSS, without any SL. Also, we show that our approach achieves >96% accuracy and remains equivalent to the plaintext models.
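The masking step described in the abstract can be sketched as follows. This is only an illustration of additive masking over an integer ring: the ring size `2**32`, the fixed-point encoded values, and the `mask`/`unmask` helpers are assumptions, and the FSS key generation and evaluation that the servers would perform are not shown.

```python
import secrets

MODULUS = 2 ** 32  # assumed ring size; the abstract does not specify one


def mask(activations, modulus=MODULUS):
    """Client side: add a fresh uniform random mask to every value."""
    masks = [secrets.randbelow(modulus) for _ in activations]
    masked = [(a + r) % modulus for a, r in zip(activations, masks)]
    return masked, masks


def unmask(masked, masks, modulus=MODULUS):
    """Remove the masks again; only a party that knows them can do this."""
    return [(m - r) % modulus for m, r in zip(masked, masks)]


# Hypothetical fixed-point encoded activation values.
activation_map = [17, 4096, 250_000]
masked, masks = mask(activation_map)

# The masked values round-trip only with knowledge of the masks.
assert unmask(masked, masks) == activation_map
```

Because each mask is drawn uniformly from the ring, a masked value on its own is uniformly distributed and carries no information about the underlying activation.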

Wildest Dreams: Reproducible Research in Privacy-preserving Neural Network Training

Mar 06, 2024

Abstract:Machine Learning (ML) addresses a multitude of complex issues in multiple disciplines, including social sciences, finance, and medical research. ML models require substantial computing power and are only as powerful as the data utilized. Due to the high computational cost of ML methods, data scientists frequently use Machine Learning-as-a-Service (MLaaS) to outsource computation to external servers. However, when working with private information, like financial data or health records, outsourcing the computation might result in privacy issues. Recent advances in Privacy-Preserving Techniques (PPTs) have enabled ML training and inference over protected data through the use of Privacy-Preserving Machine Learning (PPML). However, these techniques are still at a preliminary stage and their application in real-world situations is demanding. In order to comprehend the discrepancy between theoretical research suggestions and actual applications, this work examines the past and present of PPML, focusing on Homomorphic Encryption (HE) and Secure Multi-party Computation (SMPC) applied to ML. This work primarily focuses on the ML model's training phase, where maintaining user data privacy is of utmost importance. We provide a solid theoretical background that eases the understanding of current approaches and their limitations. In addition, we present a Systematization of Knowledge (SoK) of the most recent PPML frameworks for model training and provide a comprehensive comparison in terms of their unique properties and performance on standard benchmarks. Also, we reproduce the results for some of the papers and examine to what extent existing works in the field provide support for open science. We believe our work serves as a valuable contribution by raising awareness about the current gap between theoretical advancements and real-world applications in PPML, specifically regarding open-source availability, reproducibility, and usability.

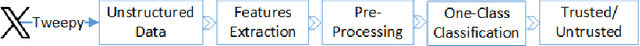

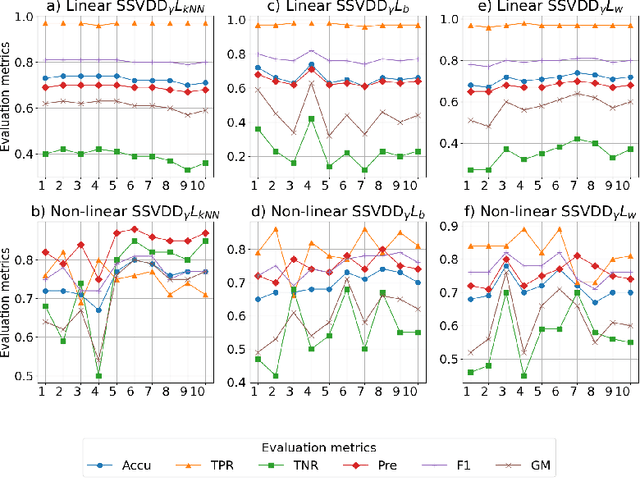

Trustworthiness of $\mathbb{X}$ Users: A One-Class Classification Approach

Feb 03, 2024

Abstract:$\mathbb{X}$ (formerly Twitter) is a prominent online social media platform that plays an important role in sharing information, making the content generated on this platform a valuable source of information. Ensuring trust on $\mathbb{X}$ is essential to determine user credibility and prevent issues across various domains. While assigning credibility to $\mathbb{X}$ users and classifying them as trusted or untrusted is commonly carried out using traditional machine learning models, there is limited exploration of the use of One-Class Classification (OCC) models for this purpose. In this study, we use various OCC models for $\mathbb{X}$ user classification. Additionally, we propose using a subspace-learning-based approach that simultaneously optimizes both the subspace and data description for OCC. We also introduce a novel regularization term for Subspace Support Vector Data Description (SSVDD), expressing data concentration in a lower-dimensional subspace that captures diverse graph structures. Experimental results show superior performance of the introduced regularization term for SSVDD compared to baseline models and state-of-the-art techniques for $\mathbb{X}$ user classification.

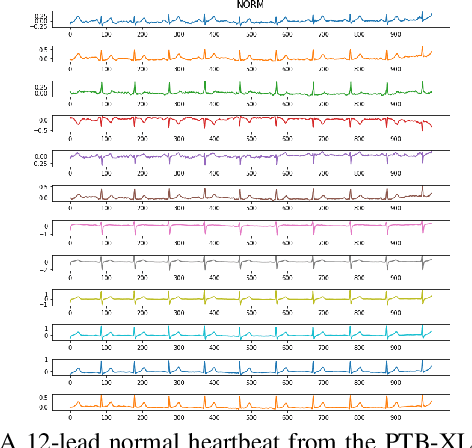

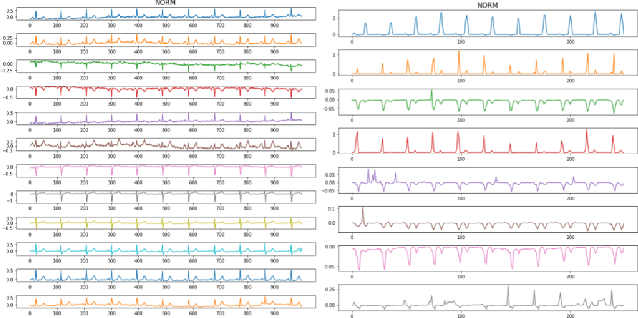

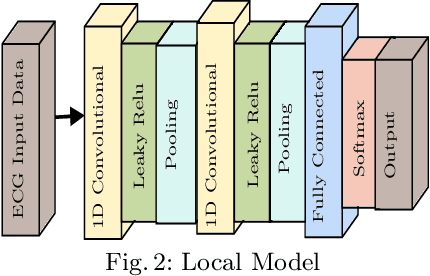

GuardML: Efficient Privacy-Preserving Machine Learning Services Through Hybrid Homomorphic Encryption

Jan 26, 2024

Abstract:Machine Learning (ML) has emerged as one of data science's most transformative and influential domains. However, the widespread adoption of ML introduces privacy-related concerns owing to the increasing number of malicious attacks targeting ML models. To address these concerns, Privacy-Preserving Machine Learning (PPML) methods have been introduced to safeguard the privacy and security of ML models. One such approach is the use of Homomorphic Encryption (HE). However, the significant drawbacks and inefficiencies of traditional HE render it impractical for highly scalable scenarios. Fortunately, a modern cryptographic scheme, Hybrid Homomorphic Encryption (HHE), has recently emerged, combining the strengths of symmetric cryptography and HE to surmount these challenges. Our work seeks to introduce HHE to ML by designing a PPML scheme tailored for end devices. We leverage HHE as the fundamental building block to enable secure learning of classification outcomes over encrypted data, all while preserving the privacy of the input data and ML model. We demonstrate the real-world applicability of our construction by developing and evaluating an HHE-based PPML application for classifying heart disease based on sensitive ECG data. Notably, our evaluations revealed a slight reduction in accuracy compared to inference on plaintext data. Additionally, both the analyst and end devices experience minimal communication and computation costs, underscoring the practical viability of our approach. The successful integration of HHE into PPML provides a glimpse into a more secure and privacy-conscious future for machine learning on relatively constrained end devices.

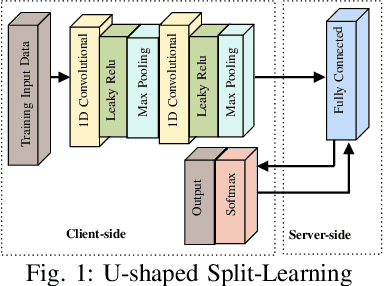

Love or Hate? Share or Split? Privacy-Preserving Training Using Split Learning and Homomorphic Encryption

Sep 19, 2023

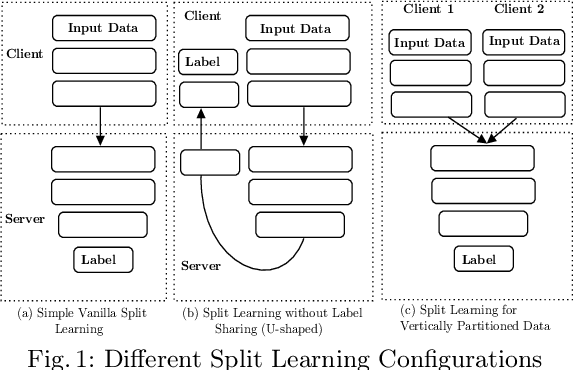

Abstract:Split learning (SL) is a new collaborative learning technique that allows participants, e.g. a client and a server, to train machine learning models without the client sharing raw data. In this setting, the client initially applies its part of the machine learning model on the raw data to generate activation maps and then sends them to the server to continue the training process. Previous works in the field demonstrated that reconstructing activation maps could result in privacy leakage of client data. In addition to that, existing mitigation techniques that overcome the privacy leakage of SL prove to be significantly worse in terms of accuracy. In this paper, we improve upon previous works by constructing a protocol based on U-shaped SL that can operate on homomorphically encrypted data. More precisely, in our approach, the client applies homomorphic encryption on the activation maps before sending them to the server, thus protecting user privacy. This is an important improvement that reduces privacy leakage in comparison to other SL-based works. Finally, our results show that, with the optimum set of parameters, training with HE data in the U-shaped SL setting only reduces accuracy by 2.65% compared to training on plaintext. In addition, raw training data privacy is preserved.
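The U-shaped split described above can be sketched as a plaintext forward pass. The layer functions, weights, and input values below are hypothetical toy choices; in the protocol the abstract describes, the client would homomorphically encrypt the activation map before sending it and the server would compute on ciphertexts, which this sketch does not show.

```python
def client_front(x, weights):
    """Client-held first layer: elementwise scaling followed by ReLU."""
    return [max(0.0, xi * wi) for xi, wi in zip(x, weights)]


def server_middle(activation, weights):
    """Server-held layer: one linear unit (a dot product)."""
    return sum(a * wi for a, wi in zip(activation, weights))


def client_back(z, label):
    """Client-held output stage: squared error against the private label."""
    return (z - label) ** 2


raw_input = [1.0, -2.0, 3.0]  # never leaves the client
activation = client_front(raw_input, [0.5, 0.5, 0.5])  # sent to the server
z = server_middle(activation, [1.0, 1.0, 2.0])  # returned to the client
loss = client_back(z, label=3.0)  # label also stays on the client

print(activation, z, loss)  # [0.5, 0.0, 1.5] 3.5 0.25
```

In the U-shaped arrangement the server sees neither the raw input nor the label; it only receives the intermediate activation, which is why protecting that activation is the focus of the protocol.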

Split Without a Leak: Reducing Privacy Leakage in Split Learning

Aug 30, 2023

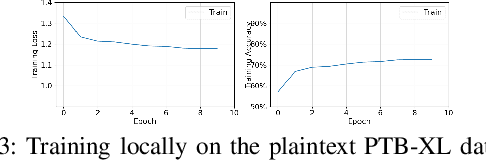

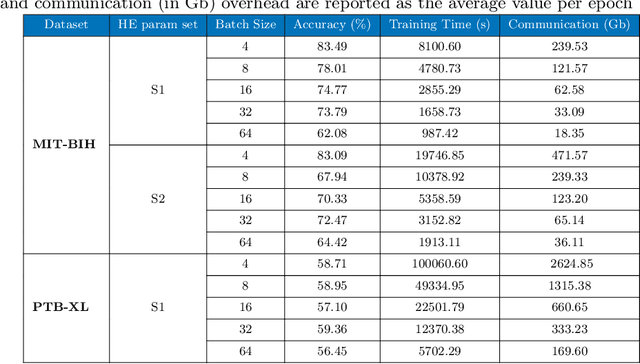

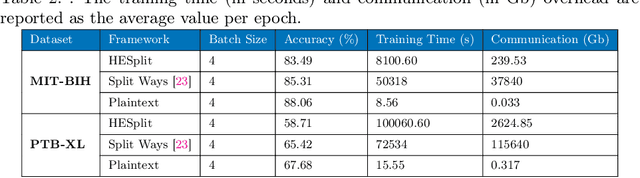

Abstract:The popularity of Deep Learning (DL) makes the privacy of sensitive data more imperative than ever. As a result, various privacy-preserving techniques have been implemented to preserve user data privacy in DL. Among various privacy-preserving techniques, collaborative learning techniques, such as Split Learning (SL) have been utilized to accelerate the learning and prediction process. Initially, SL was considered a promising approach to data privacy. However, subsequent research has demonstrated that SL is susceptible to many types of attacks and, therefore, it cannot serve as a privacy-preserving technique. Meanwhile, countermeasures using a combination of SL and encryption have also been introduced to achieve privacy-preserving deep learning. In this work, we propose a hybrid approach using SL and Homomorphic Encryption (HE). The idea behind it is that the client encrypts the activation map (the output of the split layer between the client and the server) before sending it to the server. Hence, during both forward and backward propagation, the server cannot reconstruct the client's input data from the intermediate activation map. This improvement is important as it reduces privacy leakage compared to other SL-based works, where the server can gain valuable information about the client's input. In addition, on the MIT-BIH dataset, our proposed hybrid approach using SL and HE yields faster training time (about 6 times) and significantly reduced communication overhead (almost 160 times) compared to other HE-based approaches, thereby offering improved privacy protection for sensitive data in DL.

Split Ways: Privacy-Preserving Training of Encrypted Data Using Split Learning

Jan 20, 2023

Abstract:Split Learning (SL) is a new collaborative learning technique that allows participants, e.g. a client and a server, to train machine learning models without the client sharing raw data. In this setting, the client initially applies its part of the machine learning model on the raw data to generate activation maps and then sends them to the server to continue the training process. Previous works in the field demonstrated that reconstructing activation maps could result in privacy leakage of client data. In addition to that, existing mitigation techniques that overcome the privacy leakage of SL prove to be significantly worse in terms of accuracy. In this paper, we improve upon previous works by constructing a protocol based on U-shaped SL that can operate on homomorphically encrypted data. More precisely, in our approach, the client applies Homomorphic Encryption (HE) on the activation maps before sending them to the server, thus protecting user privacy. This is an important improvement that reduces privacy leakage in comparison to other SL-based works. Finally, our results show that, with the optimum set of parameters, training with HE data in the U-shaped SL setting only reduces accuracy by 2.65% compared to training on plaintext. In addition, raw training data privacy is preserved.

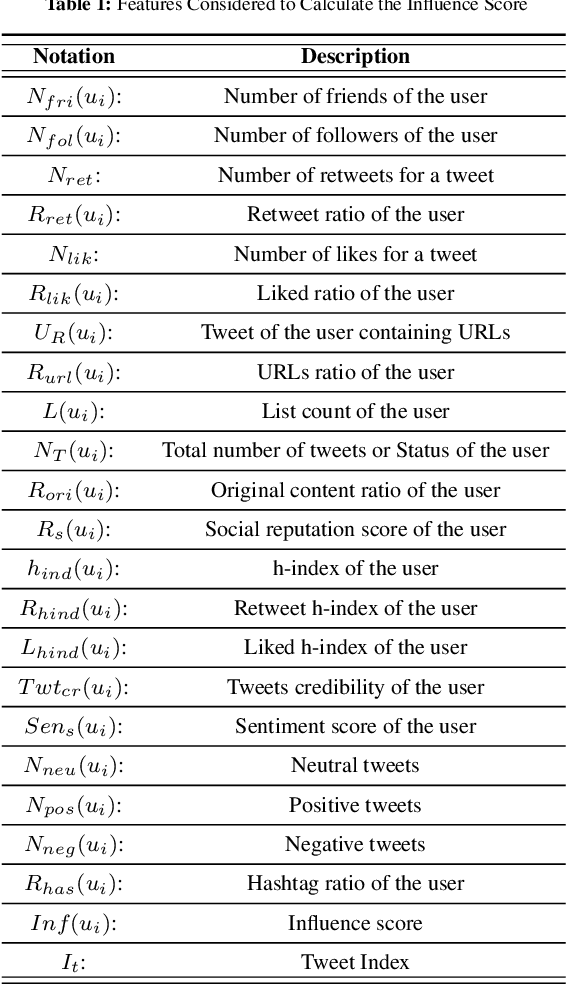

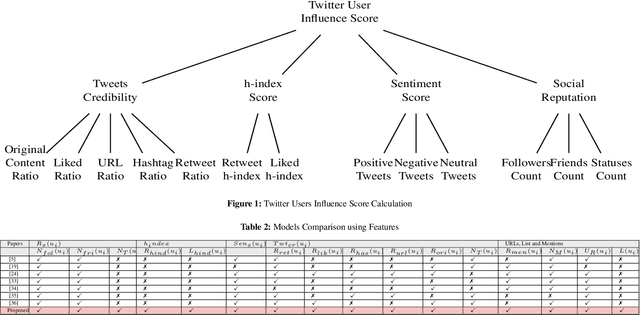

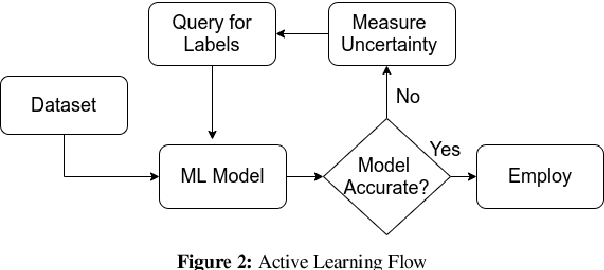

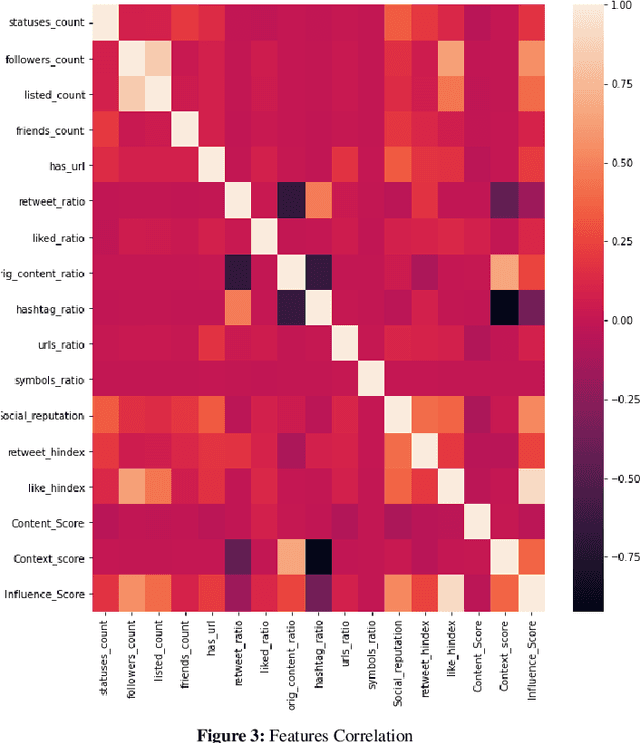

Trust and Believe -- Should We? Evaluating the Trustworthiness of Twitter Users

Oct 27, 2022

Abstract:Social networking and micro-blogging services, such as Twitter, play an important role in sharing digital information. Despite the popularity and usefulness of social media, they are regularly abused by corrupt users. One of these nefarious activities is so-called fake news -- a "virus" that has been spreading rapidly thanks to the hospitable environment provided by social media platforms. The extensive spread of fake news is now becoming a major problem with far-reaching negative repercussions on both individuals and society. Hence, the identification of fake news on social media is a problem of utmost importance that has attracted the interest not only of the research community but most of the big players on both sides - such as Facebook, on the industry side, and political parties on the societal one. In this work, we create a model through which we hope to be able to offer a solution that will instill trust in social network communities. Our model analyses the behaviour of 50,000 politicians on Twitter and assigns an influence score for each evaluated user based on several collected and analysed features and attributes. Next, we classify political Twitter users as either trustworthy or untrustworthy using random forest and support vector machine classifiers. An active learning model has been used to classify any unlabeled ambiguous records from our dataset. Finally, to measure the performance of the proposed model, we used accuracy as the main evaluation metric.

Seeing and Believing: Evaluating the Trustworthiness of Twitter Users

Jul 22, 2021

Abstract:Social networking and micro-blogging services, such as Twitter, play an important role in sharing digital information. Despite the popularity and usefulness of social media, there have been many instances where corrupt users found ways to abuse it, for instance by raising or lowering a user's credibility. As a result, while social media facilitates an unprecedented ease of access to information, it also introduces a new challenge - that of ascertaining the credibility of shared information. Currently, there is no automated way of determining which news or users are credible and which are not. Hence, establishing a system that can measure a social media user's credibility has become an issue of great importance. Assigning a credibility score to a user has piqued the interest of not only the research community but also most of the big players on both sides - such as Facebook, on the side of industry, and political parties on the societal one. In this work, we created a model which, we hope, will ultimately facilitate and support the increase of trust in the social network communities. Our model collected data and analysed the behaviour of 50,000 politicians on Twitter. An influence score, based on several chosen features, was assigned to each evaluated user. Further, we classified the political Twitter users as either trusted or untrusted using random forest, multilayer perceptron, and support vector machine classifiers. An active learning model was used to classify any unlabelled ambiguous records from our dataset. Finally, to measure the performance of the proposed model, we used precision, recall, F1 score, and accuracy as the main evaluation metrics.
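The four evaluation metrics named in the abstract follow directly from the confusion-matrix counts. The sketch below uses the standard definitions; the label strings and the example predictions are hypothetical and are not results from the paper.

```python
def classification_metrics(y_true, y_pred, positive="trusted"):
    """Compute precision, recall, F1 and accuracy for a binary labelling."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)

    # Guard against empty denominators (e.g. no positive predictions).
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    return {"precision": precision, "recall": recall,
            "f1": f1, "accuracy": accuracy}


# Hypothetical labels, invented for illustration only.
y_true = ["trusted", "trusted", "trusted",
          "untrusted", "untrusted", "untrusted"]
y_pred = ["trusted", "trusted", "untrusted",
          "trusted", "untrusted", "untrusted"]

metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

In this toy example there are 2 true positives, 1 false positive, 1 false negative, and 2 true negatives, so precision, recall, F1, and accuracy all equal 2/3.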
