Tanjeem Azwad Zaman

Connecting the Dots: Leveraging Spatio-Temporal Graph Neural Networks for Accurate Bangla Sign Language Recognition

Jan 22, 2024

Abstract: Recent advances in Deep Learning and Computer Vision have been successfully leveraged to serve marginalized communities in various contexts. One such area is Sign Language - a primary means of communication for the deaf community. However, so far, the bulk of research efforts and investments have gone into American Sign Language, and research activity into low-resource sign languages - especially Bangla Sign Language - has lagged significantly. In this research paper, we present a new word-level Bangla Sign Language dataset - BdSL40 - consisting of 611 videos over 40 words, along with two different approaches: one with a 3D Convolutional Neural Network model and another with a novel Graph Neural Network approach for the classification of the BdSL40 dataset. This is the first study on word-level BdSL recognition, and the dataset was transcribed from Indian Sign Language (ISL) using the Bangla Sign Language Dictionary (1997). The proposed GNN model achieved an F1 score of 89%. The study highlights the significant lexical and semantic similarity between BdSL, West Bengal Sign Language, and ISL, and the lack of word-level datasets for BdSL in the literature. We release the dataset and source code to stimulate further research.

Ophthalmic Biomarker Detection Using Ensembled Vision Transformers -- Winning Solution to IEEE SPS VIP Cup 2023

Oct 21, 2023

Abstract: This report outlines our approach in the IEEE SPS VIP Cup 2023: Ophthalmic Biomarker Detection competition. Our primary objective in this competition was to identify biomarkers from Optical Coherence Tomography (OCT) images obtained from a diverse range of patients. Using robust augmentations and 5-fold cross-validation, we trained two vision transformer-based models, MaxViT and EVA-02, and ensembled them at inference time. We find MaxViT's use of convolution layers followed by strided attention to be better suited for the detection of local features, while EVA-02's use of a normal attention mechanism and knowledge distillation is better for detecting global features. Ours was the best-performing solution in the competition, achieving a patient-wise F1 score of 0.814 in the first phase and 0.8527 in the second and final phase of VIP Cup 2023, scoring 3.8% higher than the next-best solution.
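As a rough illustration of ensembling two classifiers at inference time, the sketch below averages per-label probabilities from several models and thresholds the result. The abstract does not state how the MaxViT and EVA-02 outputs were combined, so the plain averaging, the 0.5 threshold, and the names `ensemble_probabilities` and `predict_labels` are all assumptions made for this example.

```python
def ensemble_probabilities(model_probs):
    """Average per-label probabilities across models.

    model_probs: list with one entry per model; each entry is a list of
    samples, and each sample is a list of per-label probabilities.
    Plain (unweighted) averaging is an assumption, not the authors' method.
    """
    if not model_probs:
        raise ValueError("need at least one model's predictions")
    n_models = len(model_probs)
    n_samples = len(model_probs[0])
    averaged = []
    for s in range(n_samples):
        n_labels = len(model_probs[0][s])
        averaged.append([
            sum(model_probs[m][s][k] for m in range(n_models)) / n_models
            for k in range(n_labels)
        ])
    return averaged


def predict_labels(probs, threshold=0.5):
    """Turn averaged probabilities into 0/1 biomarker predictions.

    The 0.5 threshold is a hypothetical default for illustration.
    """
    return [[1 if p >= threshold else 0 for p in sample] for sample in probs]


# Hypothetical outputs from two models for two images and two biomarkers.
probs_a = [[0.9, 0.2], [0.4, 0.6]]
probs_b = [[0.7, 0.4], [0.2, 0.8]]
averaged = ensemble_probabilities([probs_a, probs_b])
labels = predict_labels(averaged)
```

Averaging lets a model that is confident about a label pull the combined score across the threshold even when the other model is unsure, which is one common motivation for ensembling models with complementary strengths.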

Applying wav2vec2 for Speech Recognition on Bengali Common Voices Dataset

Sep 11, 2022

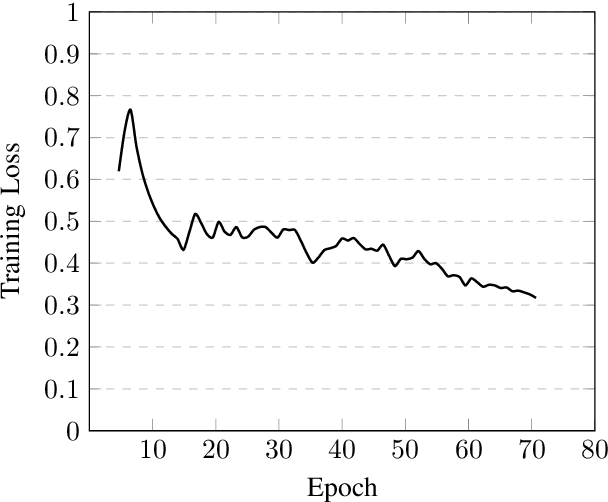

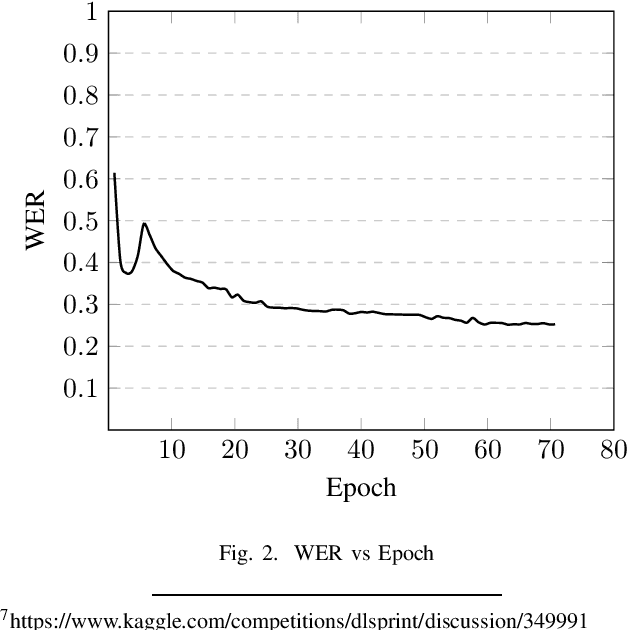

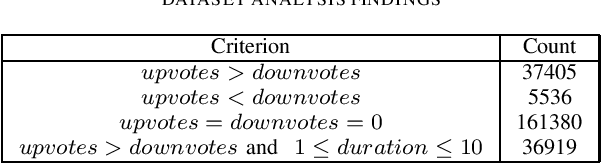

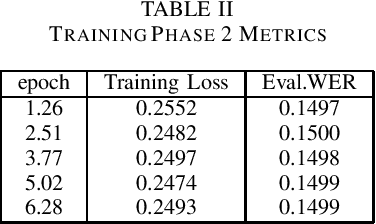

Abstract: Speech is inherently continuous: discrete words, phonemes and other units are not clearly segmented, and so speech recognition has been an active research problem for decades. In this work we fine-tuned wav2vec 2.0 to recognize and transcribe Bengali speech, training it on the Bengali Common Voice Speech Dataset. After training for 71 epochs on a training set of 36,919 mp3 files, we achieved a training loss of 0.3172 and a WER of 0.2524 on a validation set of size 7,747. Using a 5-gram language model, the Levenshtein Distance was 2.6446 on a test set of size 7,747. The training set and validation set were then combined, shuffled and split in an 85-15 ratio. Training for 7 more epochs on this combined dataset yielded an improved Levenshtein Distance of 2.60753 on the test set. Our model was the best-performing one, achieving a Levenshtein Distance of 6.234 on a hidden dataset, which was 1.1049 units lower than other competing submissions.
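For readers unfamiliar with the two metrics quoted above, the following is a minimal sketch of how Levenshtein distance and word error rate (WER) are commonly computed. The abstract does not say whether the reported Levenshtein Distance is character-level, word-level, or averaged per utterance, so this is a generic illustration rather than the authors' evaluation script; the function names are chosen for this example.

```python
def levenshtein(a, b):
    """Minimum number of insertions, deletions and substitutions
    needed to turn sequence a into sequence b."""
    prev = list(range(len(b) + 1))  # distances from the empty prefix of a
    for i, x in enumerate(a, start=1):
        curr = [i]  # deleting the first i items of a to reach empty b
        for j, y in enumerate(b, start=1):
            cost = 0 if x == y else 1
            curr.append(min(
                prev[j] + 1,         # delete x
                curr[j - 1] + 1,     # insert y
                prev[j - 1] + cost,  # substitute x with y (or match)
            ))
        prev = curr
    return prev[-1]


def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref_words = reference.split()
    hyp_words = hypothesis.split()
    if not ref_words:
        raise ValueError("reference transcript must not be empty")
    return levenshtein(ref_words, hyp_words) / len(ref_words)


char_distance = levenshtein("kitten", "sitting")
wer = word_error_rate("a b c d", "a x c")
```

Here `levenshtein` works on any sequence, so passing strings gives a character-level distance while passing word lists gives the word-level distance that WER is built on.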
