Tamas J. Madarasz

Long-tail Recognition via Compositional Knowledge Transfer

Dec 13, 2021

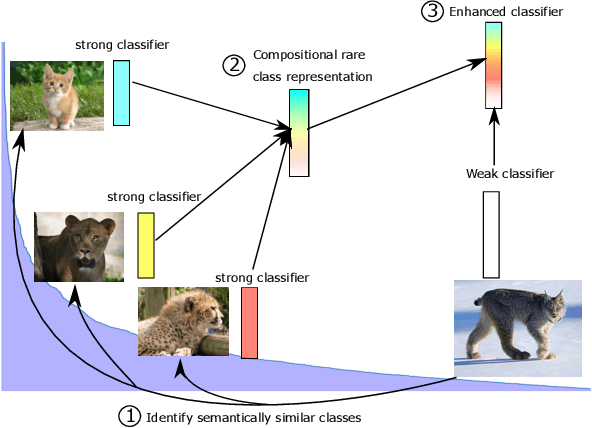

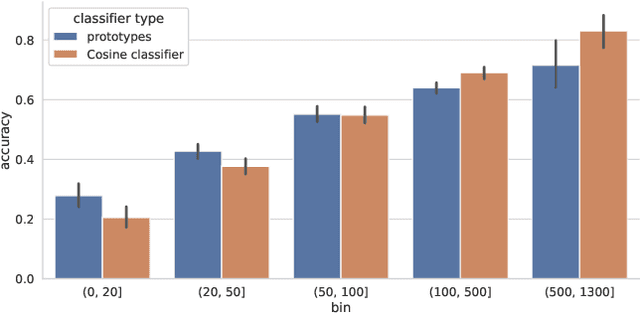

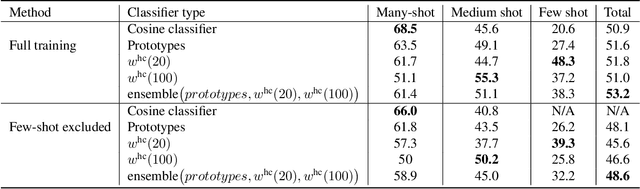

Abstract: In this work, we introduce a novel strategy for long-tail recognition that addresses the tail classes' few-shot problem via training-free knowledge transfer. Our objective is to transfer knowledge acquired from information-rich common classes to semantically similar, yet data-hungry, rare classes in order to obtain stronger tail-class representations. We leverage the fact that class prototypes and learned cosine classifiers provide two different, complementary representations of class cluster centres in feature space, and use an attention mechanism to select and recompose learned classifier features from common classes to obtain higher-quality rare-class representations. Our knowledge transfer process is training-free, which reduces the risk of overfitting and allows classifiers to be extended continually to new classes. Experiments show that our approach can achieve significant performance boosts on rare classes while maintaining robust common-class performance, outperforming directly comparable state-of-the-art models.
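The recomposition idea in the abstract can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function name `recompose_tail_classifiers`, the use of prototype-to-prototype cosine similarity as attention scores, the softmax `temperature`, and the blending coefficient `mix` are all assumptions made here for illustration. The sketch only shows the general pattern of attending from a rare-class prototype over common-class prototypes and using the resulting weights to combine common-class cosine classifiers, with no gradient step involved.

```python
import numpy as np


def l2_normalize(x, axis=-1, eps=1e-12):
    """Scale vectors to unit length, as a cosine classifier would."""
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)


def recompose_tail_classifiers(head_protos, head_weights,
                               tail_protos, tail_weights,
                               temperature=0.1, mix=0.5):
    """Hypothetical training-free transfer from common (head) to rare (tail) classes.

    head_protos, head_weights: (H, D) prototypes and classifier weights of common classes.
    tail_protos, tail_weights: (T, D) prototypes and classifier weights of rare classes.
    temperature, mix: illustrative hyperparameters, not taken from the paper.
    """
    # Attention scores: cosine similarity of each tail prototype to each head prototype.
    sim = l2_normalize(tail_protos) @ l2_normalize(head_protos).T  # (T, H)

    # Numerically stable softmax over the head classes.
    logits = sim / temperature
    logits = logits - logits.max(axis=1, keepdims=True)
    attn = np.exp(logits)
    attn = attn / attn.sum(axis=1, keepdims=True)

    # Recompose: attention-weighted sum of normalized head classifier weights.
    transferred = attn @ l2_normalize(head_weights)  # (T, D)

    # Blend with the original tail classifier and renormalize.
    blended = mix * l2_normalize(tail_weights) + (1.0 - mix) * transferred
    return l2_normalize(blended), attn
```

Because nothing is optimized, adding a new rare class in this sketch only requires its prototype and an initial weight vector, which is the property the abstract describes as training-free and extensible.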

Inferred successor maps for better transfer learning

Jul 02, 2019

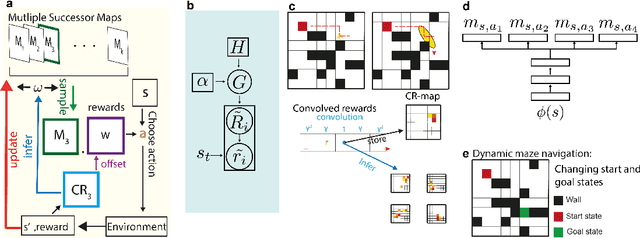

Abstract: Humans and animals show remarkable flexibility in adjusting their behaviour when their goals, or the rewards in the environment, change. While such flexibility is a hallmark of intelligent behaviour, these multi-task scenarios remain an important challenge for machine learning algorithms and neurobiological models alike. Factored representations can enable flexible behaviour by abstracting away general aspects of a task from those prone to change, while nonparametric methods provide a principled way of using similarity to past experiences to guide current behaviour. Here we combine the successor representation (SR), which factors the value of actions into expected outcomes and corresponding rewards, with evaluating task similarity through nonparametric inference and clustering the space of rewards. The proposed algorithm improves SR's transfer capabilities by inverting a generative model over tasks, while also explaining important neurobiological signatures of place cell representation in the hippocampus. It dynamically samples from a flexible number of distinct SR maps while accumulating evidence about the current reward context, and outperforms competing algorithms in settings with both known and unsignalled reward changes. It reproduces the "flickering" behaviour of hippocampal maps seen when rodents navigate to changing reward locations, and gives a quantitative account of trajectory-dependent hippocampal representations (so-called splitter cells) and their dynamics. We thus provide a novel algorithmic approach for multi-task learning, as well as a common normative framework that links together these different characteristics of the brain's spatial representation.
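The factorization that the successor representation relies on can be shown in a few lines. The sketch below covers only the standard tabular SR under a fixed policy, where the SR matrix is M = (I - γP)^(-1) and state values are V = M r. It does not implement the paper's nonparametric inference or clustering over reward contexts. The six-state ring environment, the helper names, and the discount factor are assumptions chosen for illustration.

```python
import numpy as np


def ring_random_walk(n):
    """Transition matrix of an unbiased random walk on a ring of n states (toy example)."""
    P = np.zeros((n, n))
    for s in range(n):
        P[s, (s - 1) % n] = 0.5
        P[s, (s + 1) % n] = 0.5
    return P


def successor_representation(P, gamma):
    """Closed-form tabular SR: expected discounted future state occupancies."""
    n = P.shape[0]
    return np.linalg.inv(np.eye(n) - gamma * P)


gamma = 0.9
P = ring_random_walk(6)
M = successor_representation(P, gamma)

# Two reward contexts that differ only in where the reward is located.
r_a = np.zeros(6)
r_a[0] = 1.0
r_b = np.zeros(6)
r_b[3] = 1.0

# The same SR map is reused; only the reward vector changes.
v_a = M @ r_a
v_b = M @ r_b
```

When the reward moves, the values are recomputed with a single matrix-vector product instead of relearning from scratch, which is the transfer property the abstract builds on.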
